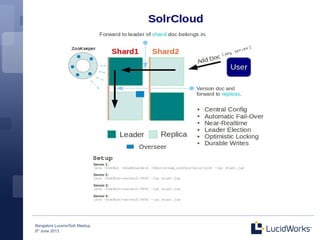

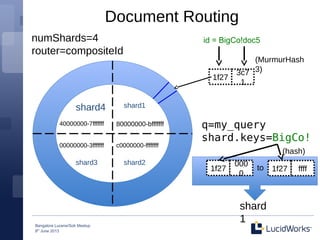

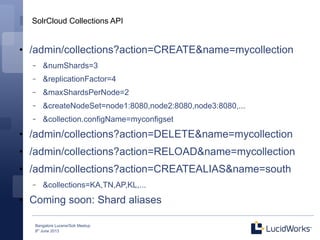

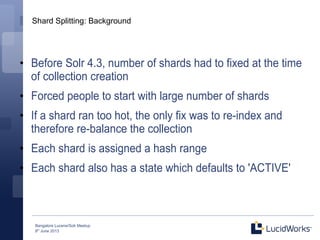

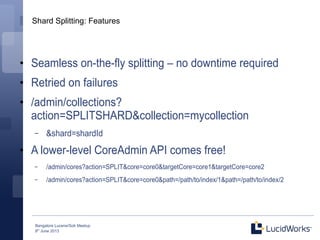

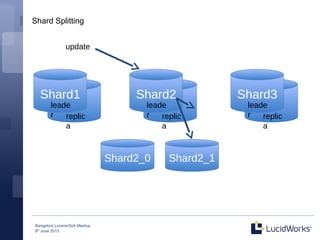

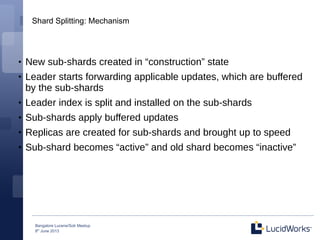

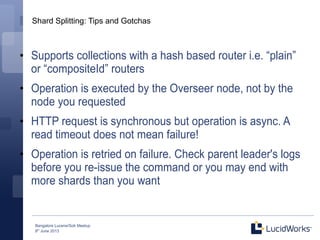

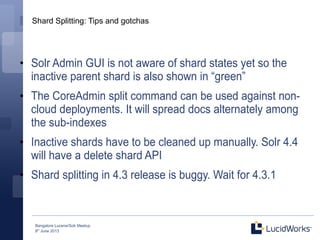

This document summarizes a presentation on SolrCloud shard splitting. It introduces the presenter and his background with Apache Lucene and Solr, then covers an overview of SolrCloud, how documents are routed to shards, the SolrCloud Collections API, and the shard-splitting functionality new in Solr 4.3, which allows collections to be resharded dynamically without downtime. It also details the shard-splitting mechanism and offers tips for using the new functionality.
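As a rough illustration of how a shard split is requested through the Collections API, the sketch below builds a `SPLITSHARD` request URL. The helper name `split_shard_url`, the host and port, and the collection and shard names are assumptions chosen for the example and are not taken from the slides. The code only constructs the URL and does not contact a Solr server.

```python
from urllib.parse import urlencode


def split_shard_url(base_url: str, collection: str, shard: str) -> str:
    """Build a Collections API URL asking Solr to split one shard.

    base_url is the Solr root, e.g. "http://localhost:8983/solr"
    (an assumed local default, not a value from the presentation).
    """
    # SPLITSHARD takes the collection name and the ID of the shard to split.
    params = urlencode(
        {"action": "SPLITSHARD", "collection": collection, "shard": shard}
    )
    return f"{base_url.rstrip('/')}/admin/collections?{params}"


if __name__ == "__main__":
    # Hypothetical collection and shard names, for illustration only.
    print(split_shard_url("http://localhost:8983/solr", "collection1", "shard1"))
```

Sending a GET request to the resulting URL against a running SolrCloud cluster would start the split. The parameters beyond `action`, `collection`, and `shard` vary by Solr version, so they are left out here.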