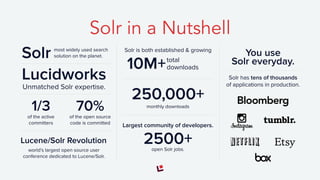

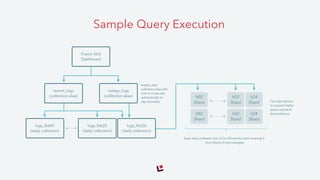

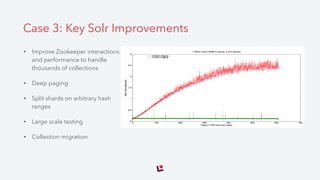

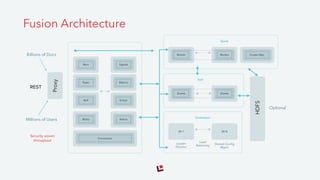

The document discusses Solr and its capabilities for large-scale search. It gives examples of Solr being used for compliance monitoring, web analytics, and search over consumer data and content. It also outlines key Solr features, such as indexing in HDFS and the available deployment options, along with upcoming improvements in security, performance, and integration with Apache Spark. Lucidworks provides commercial support for Solr and has experience implementing large-scale Solr deployments.