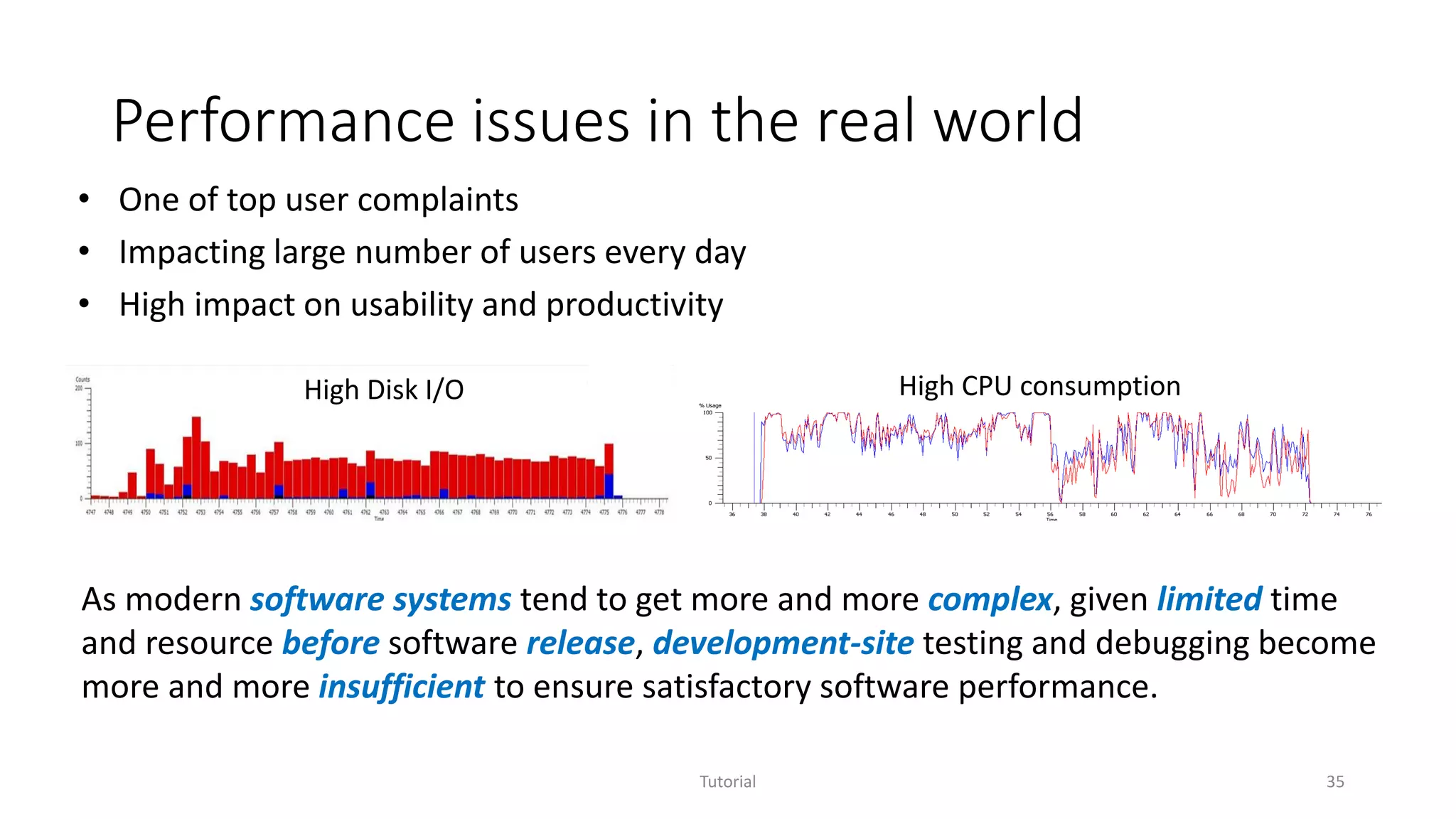

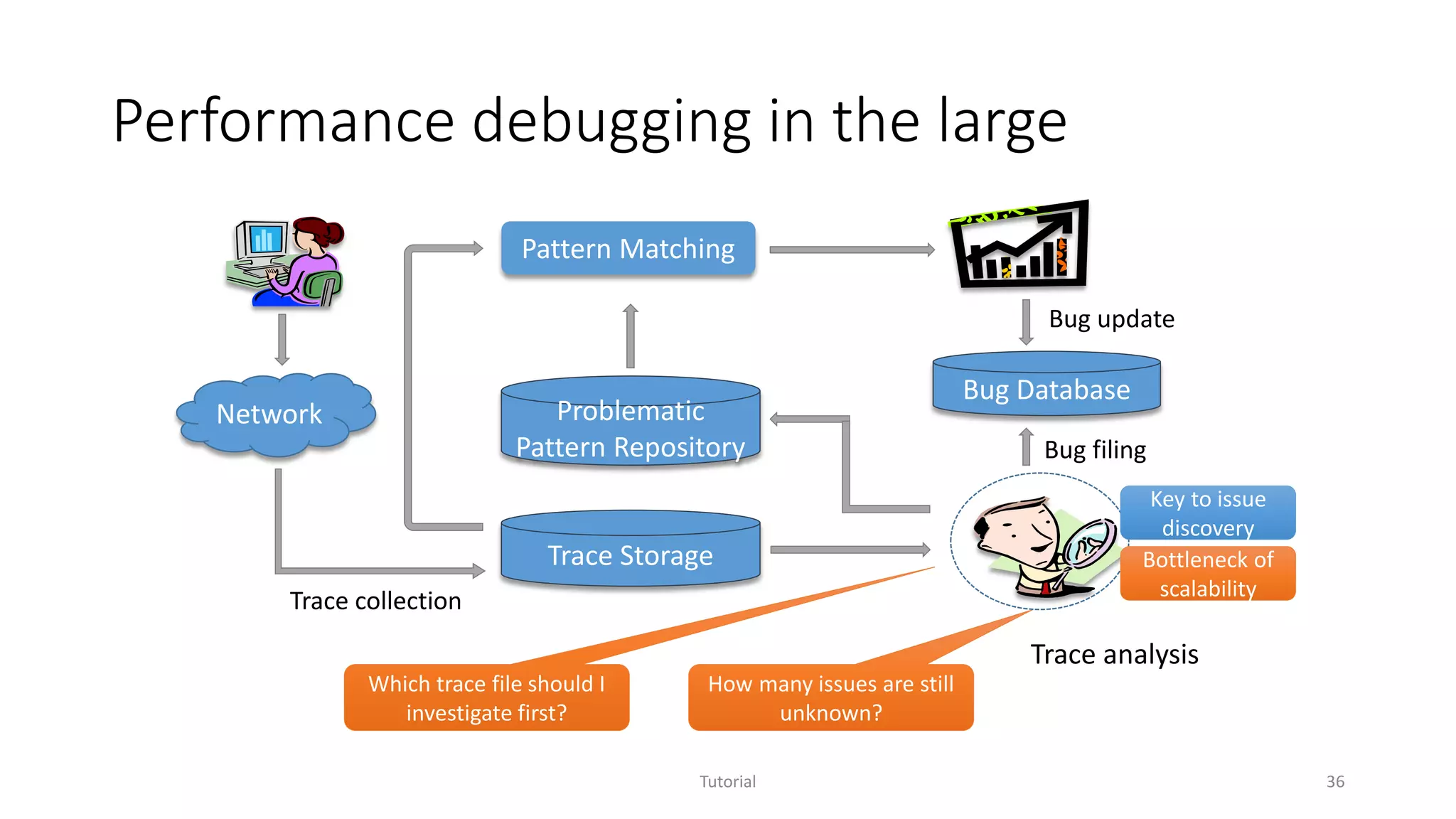

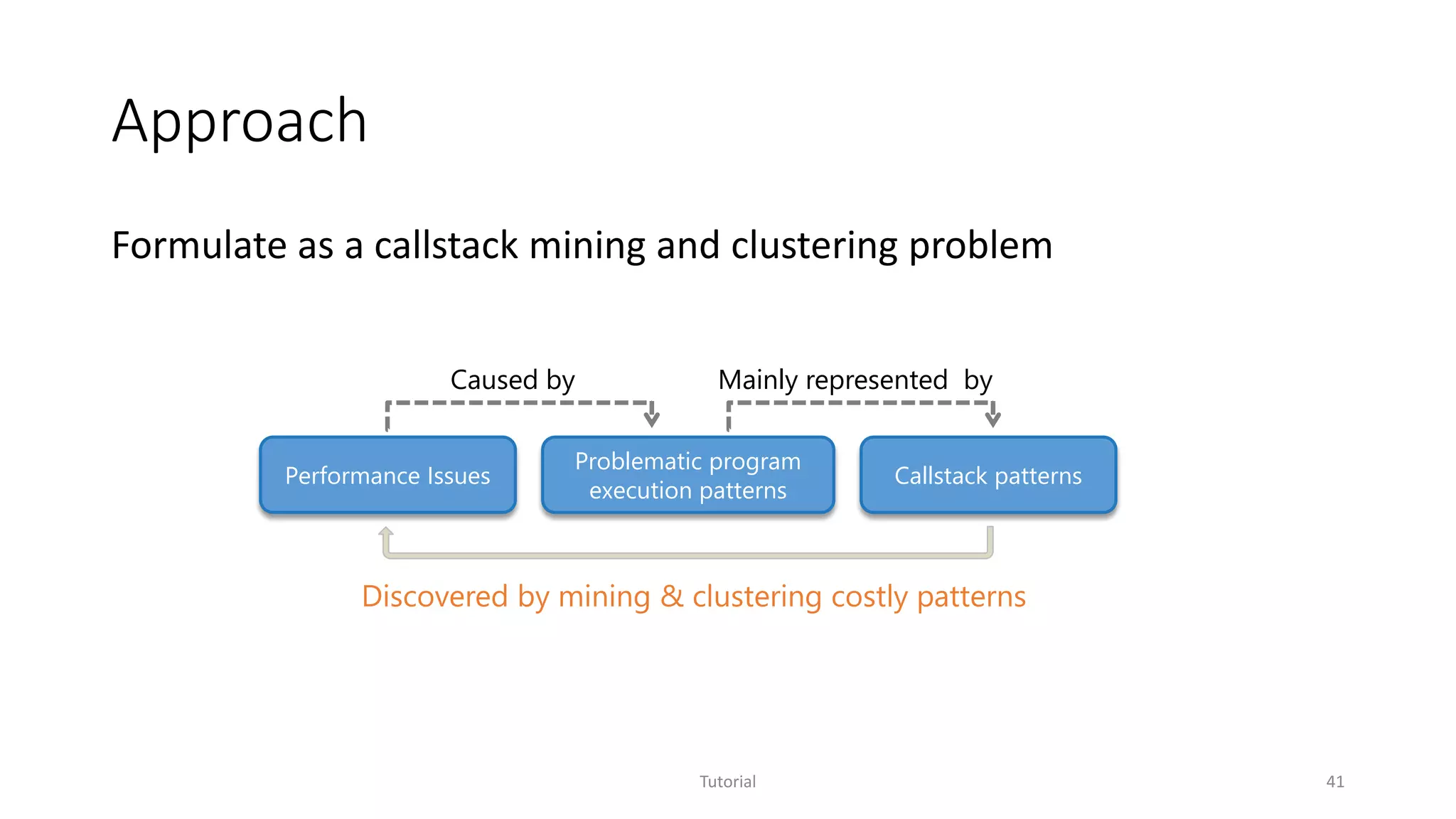

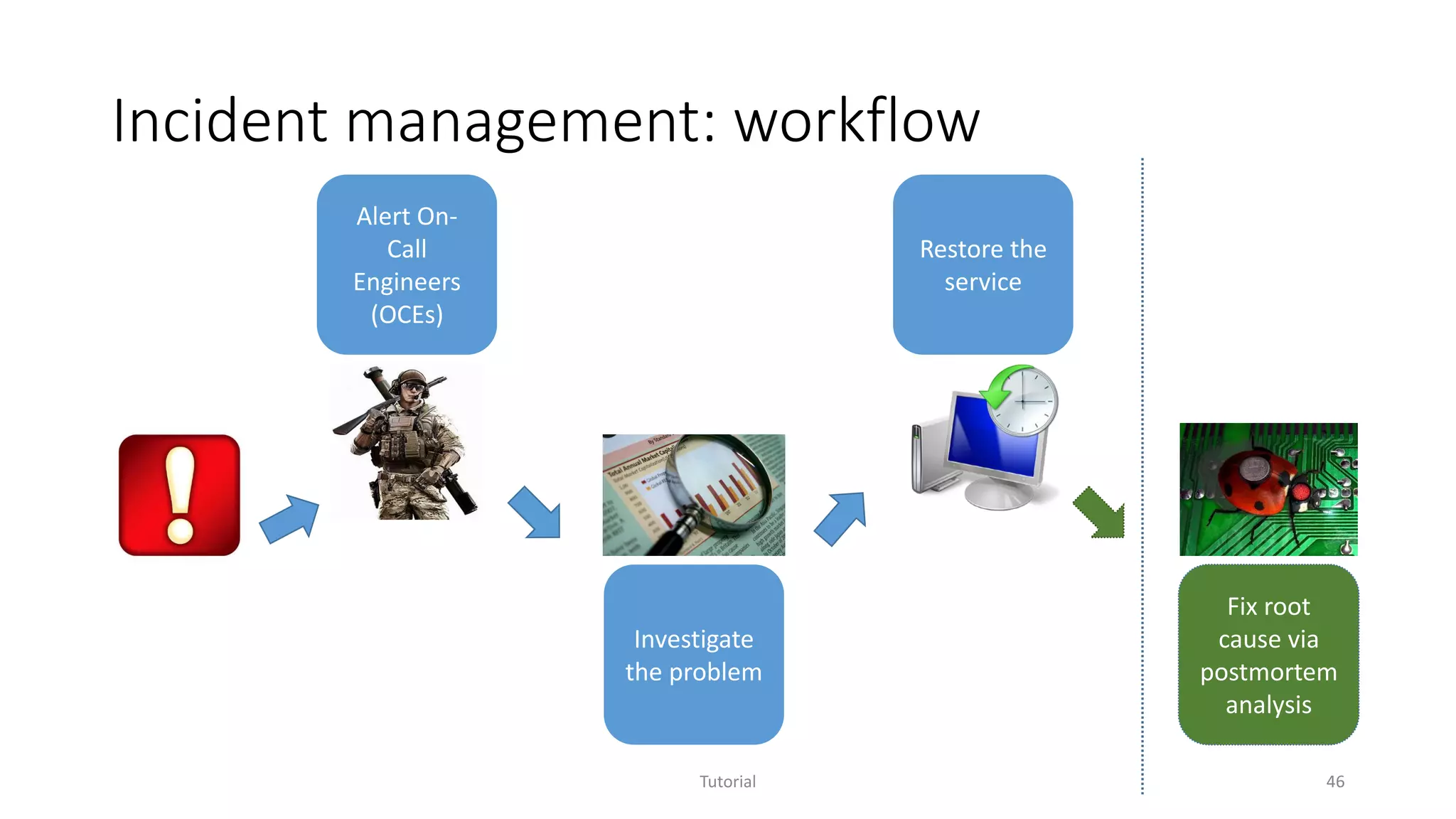

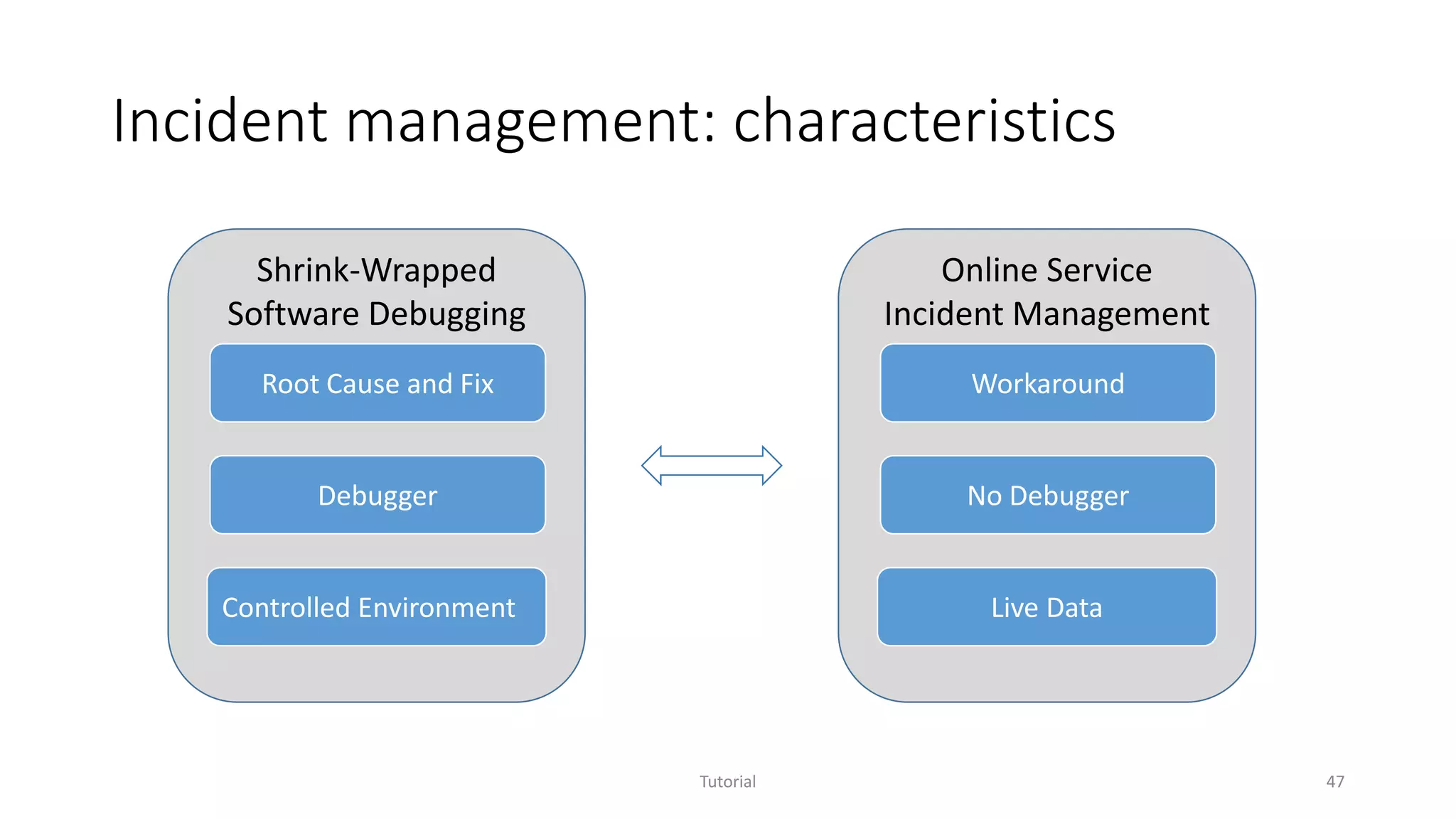

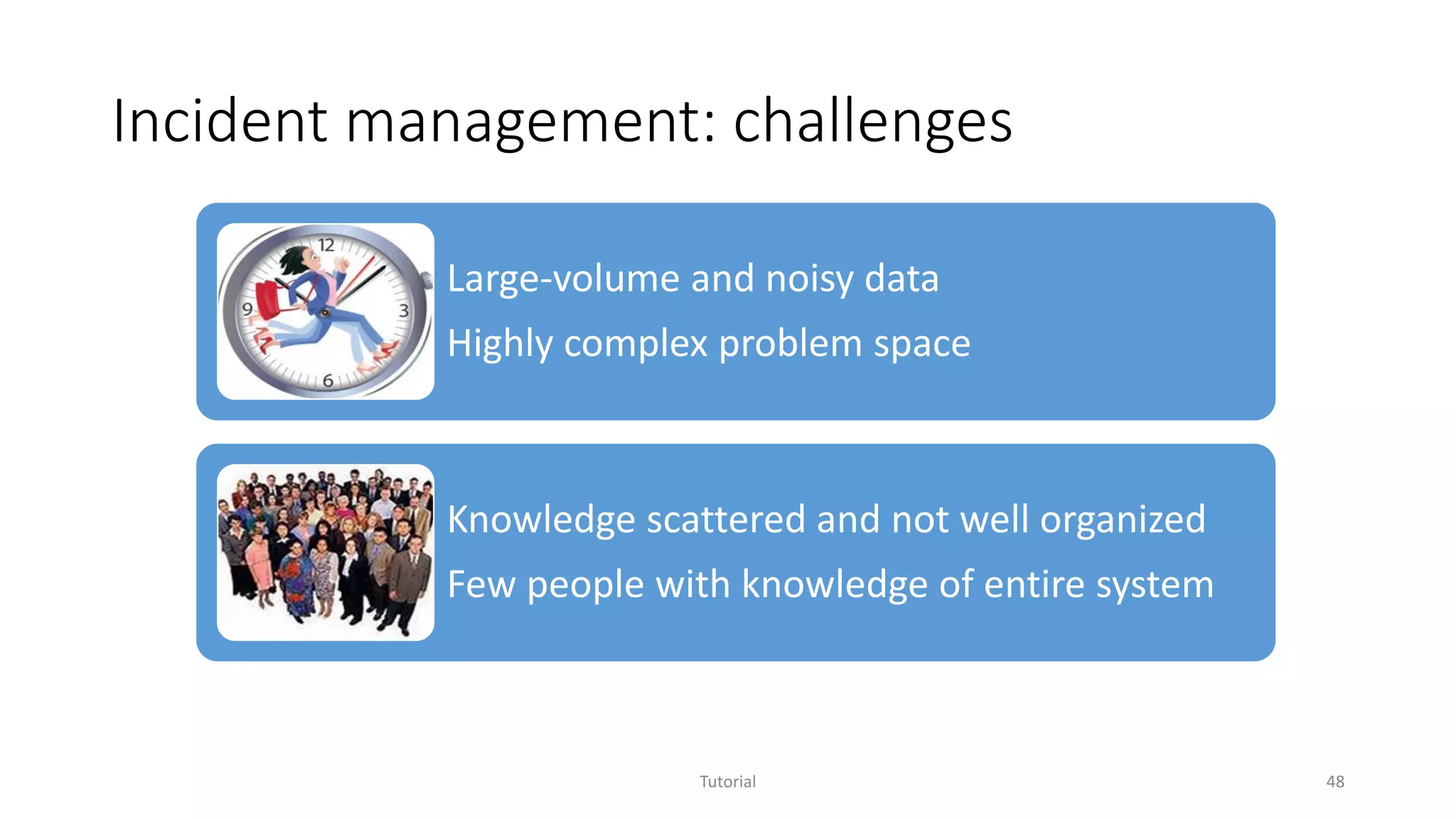

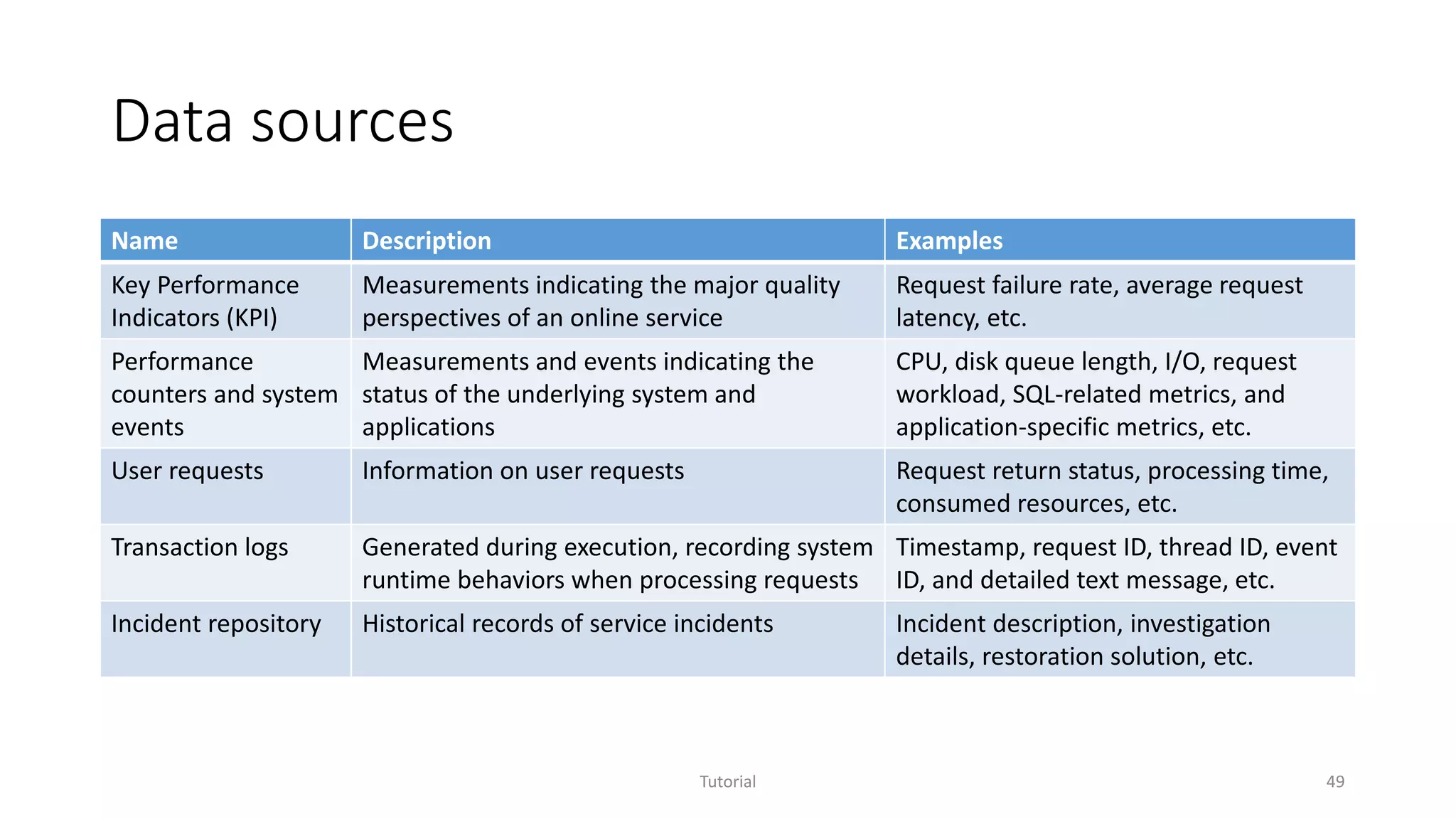

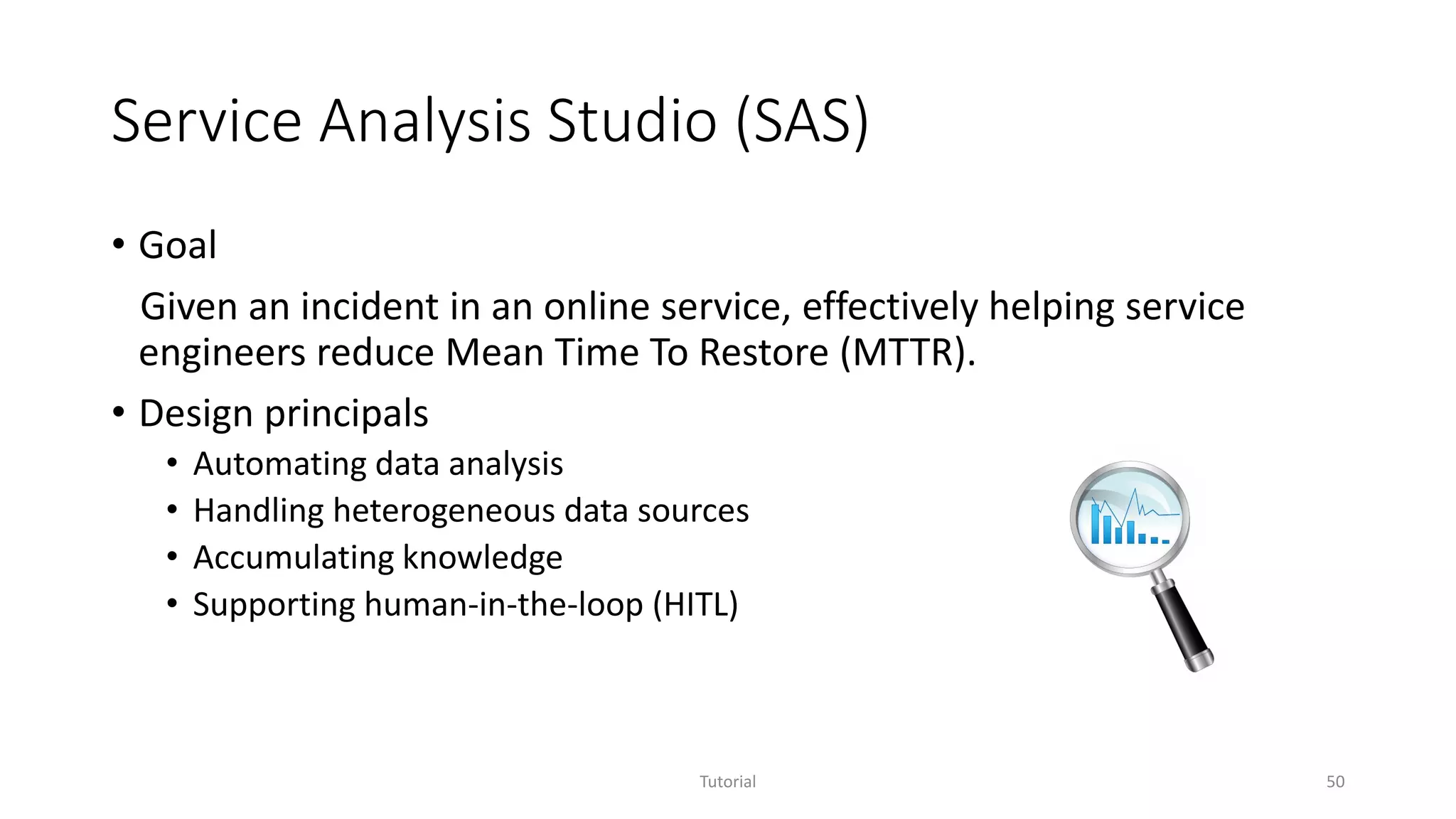

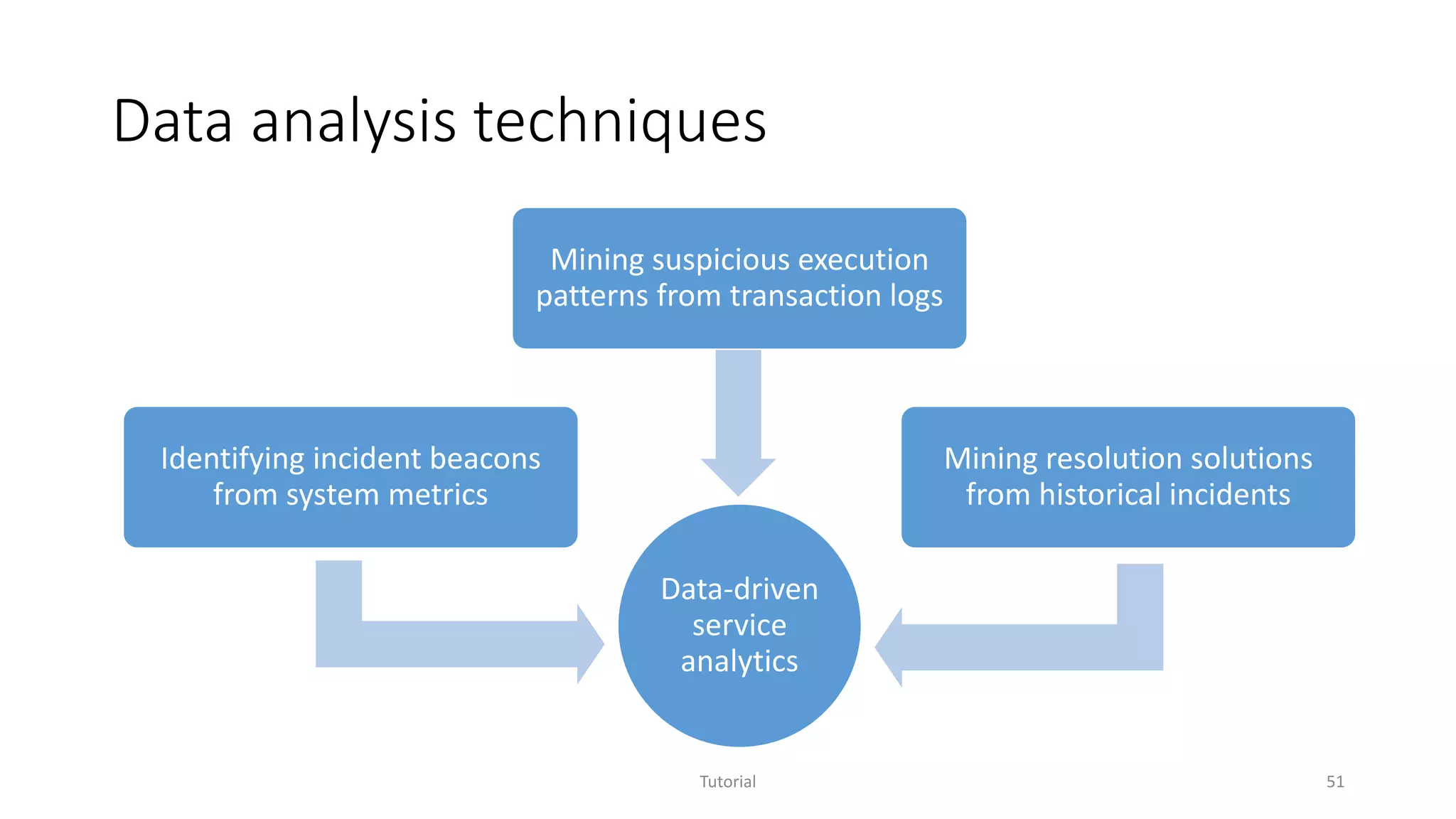

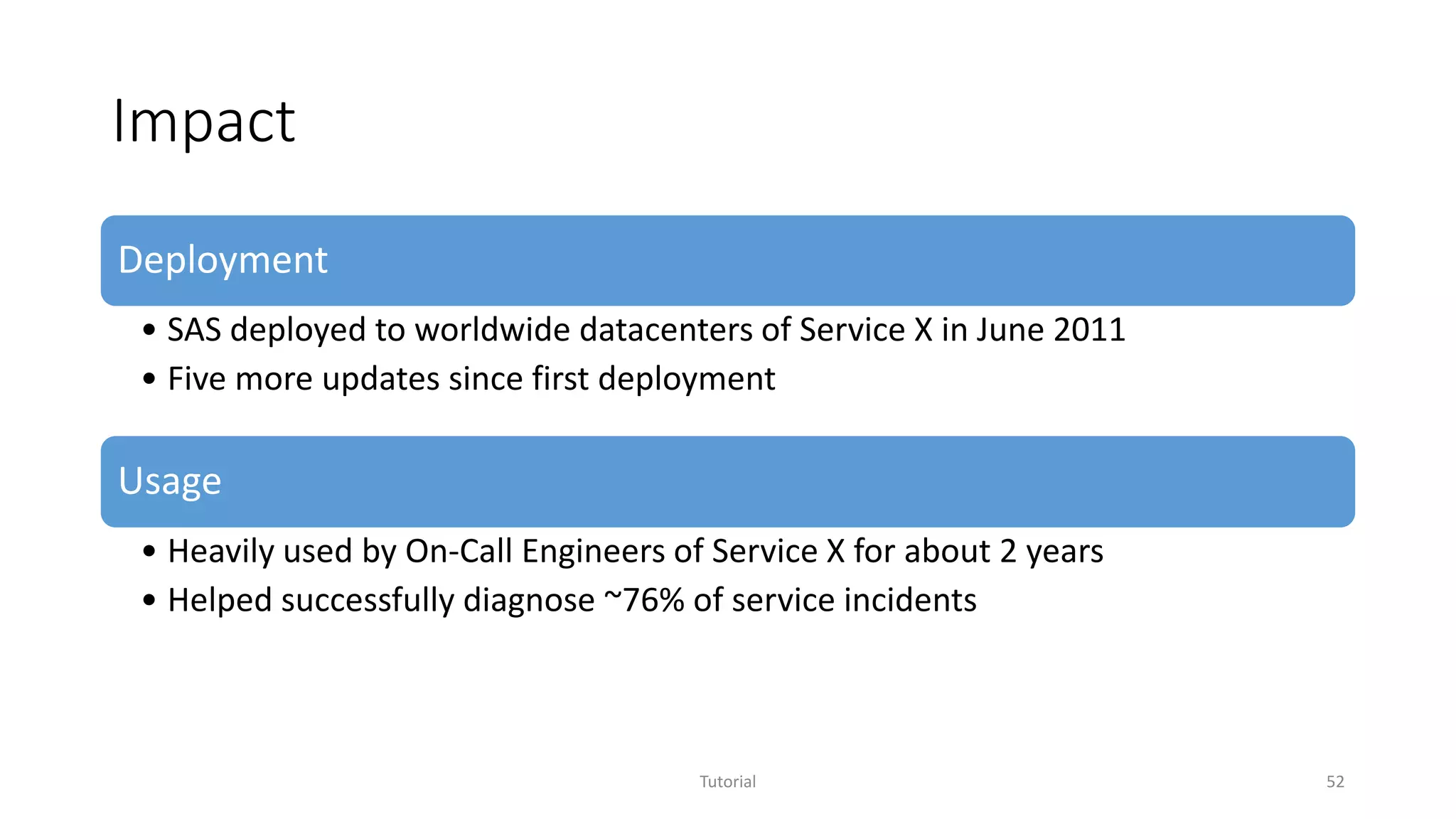

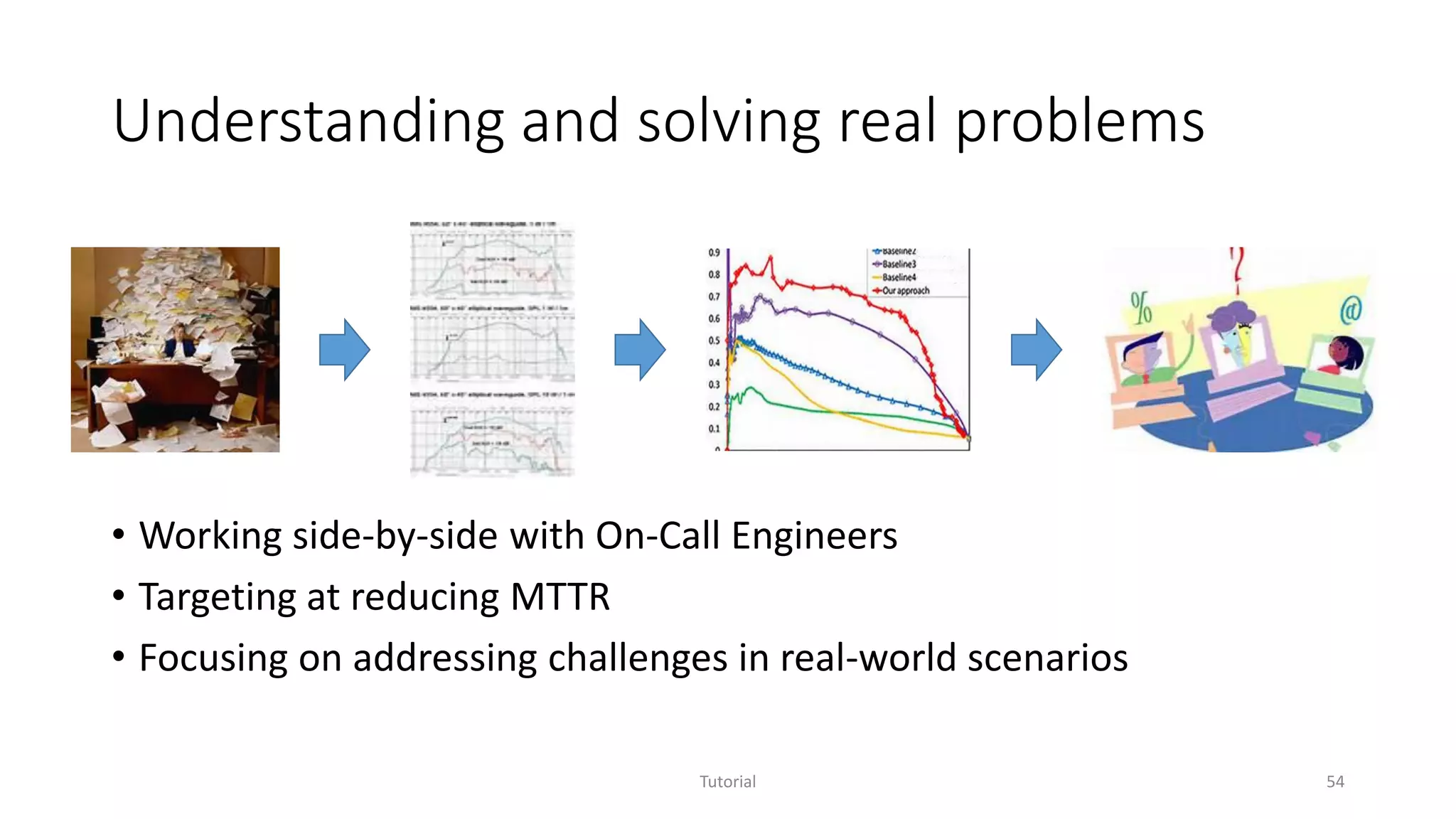

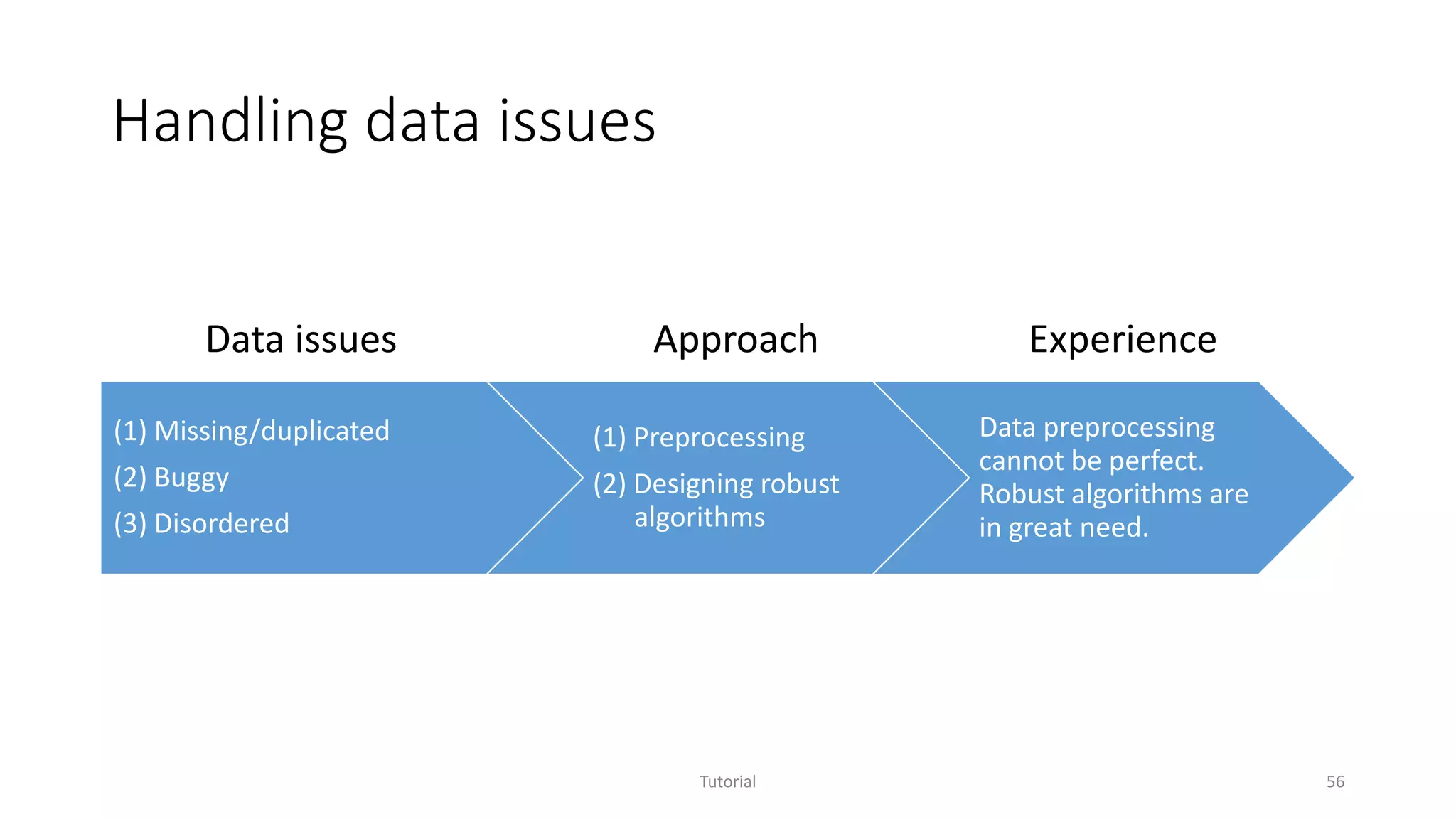

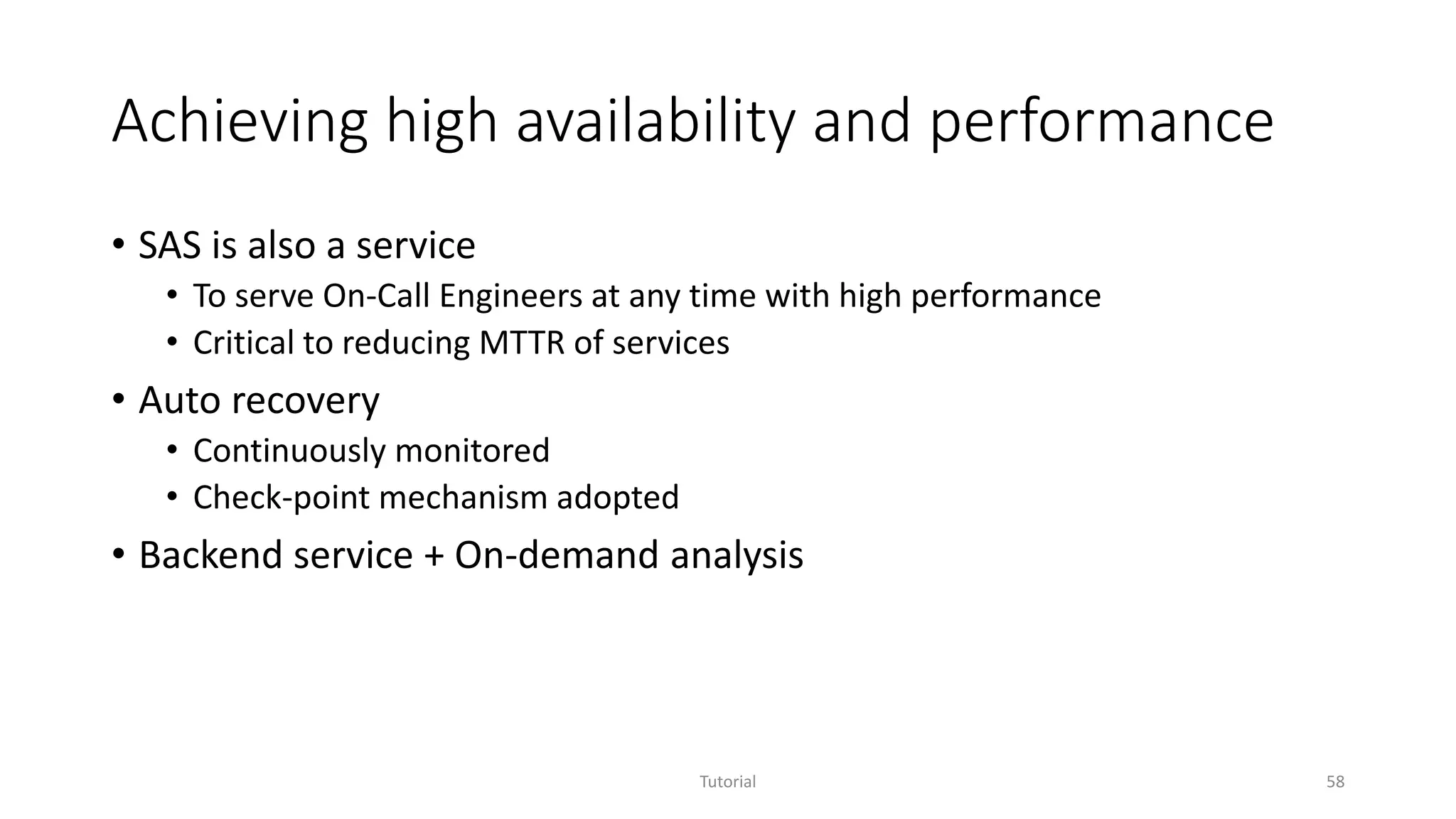

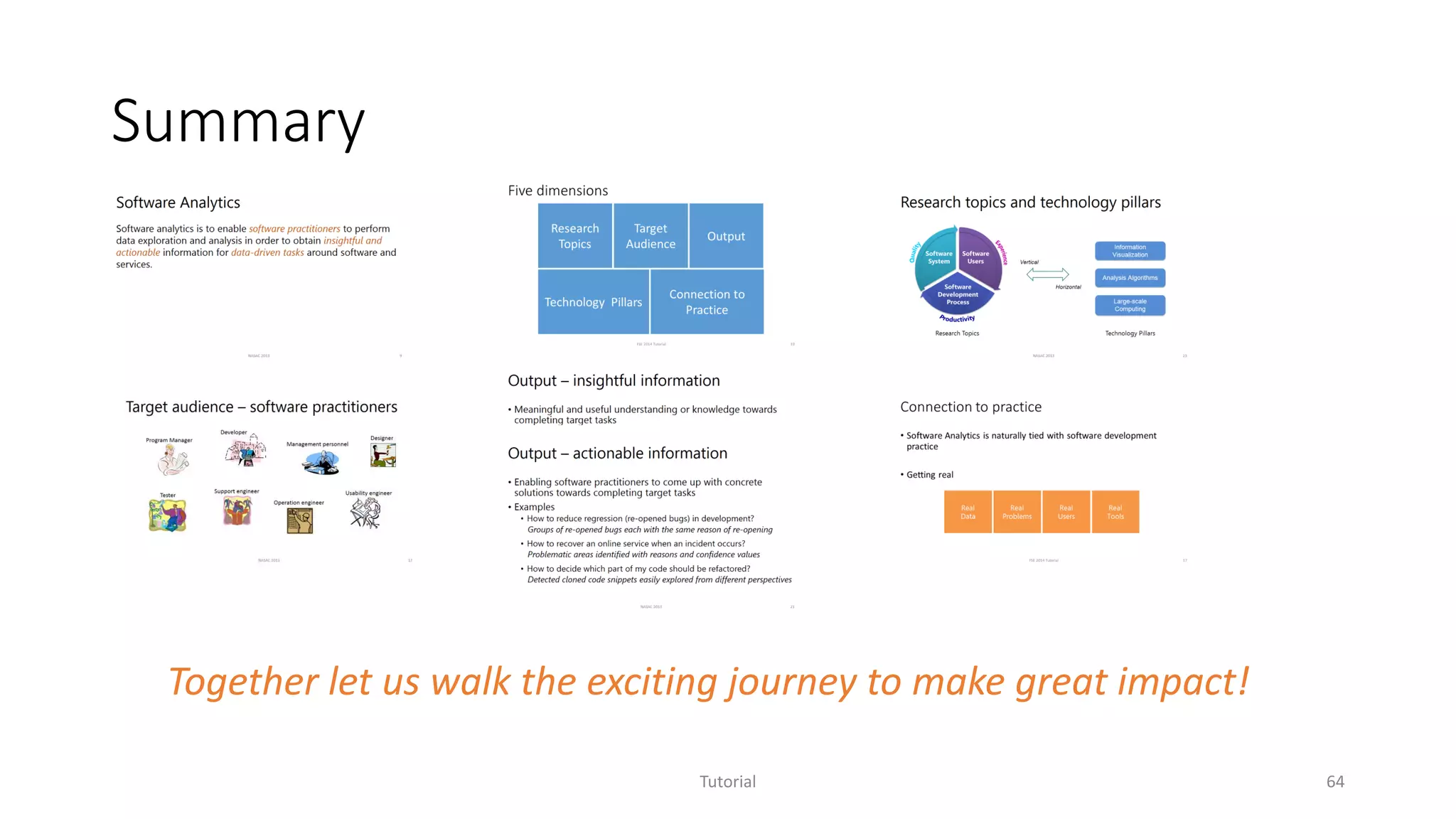

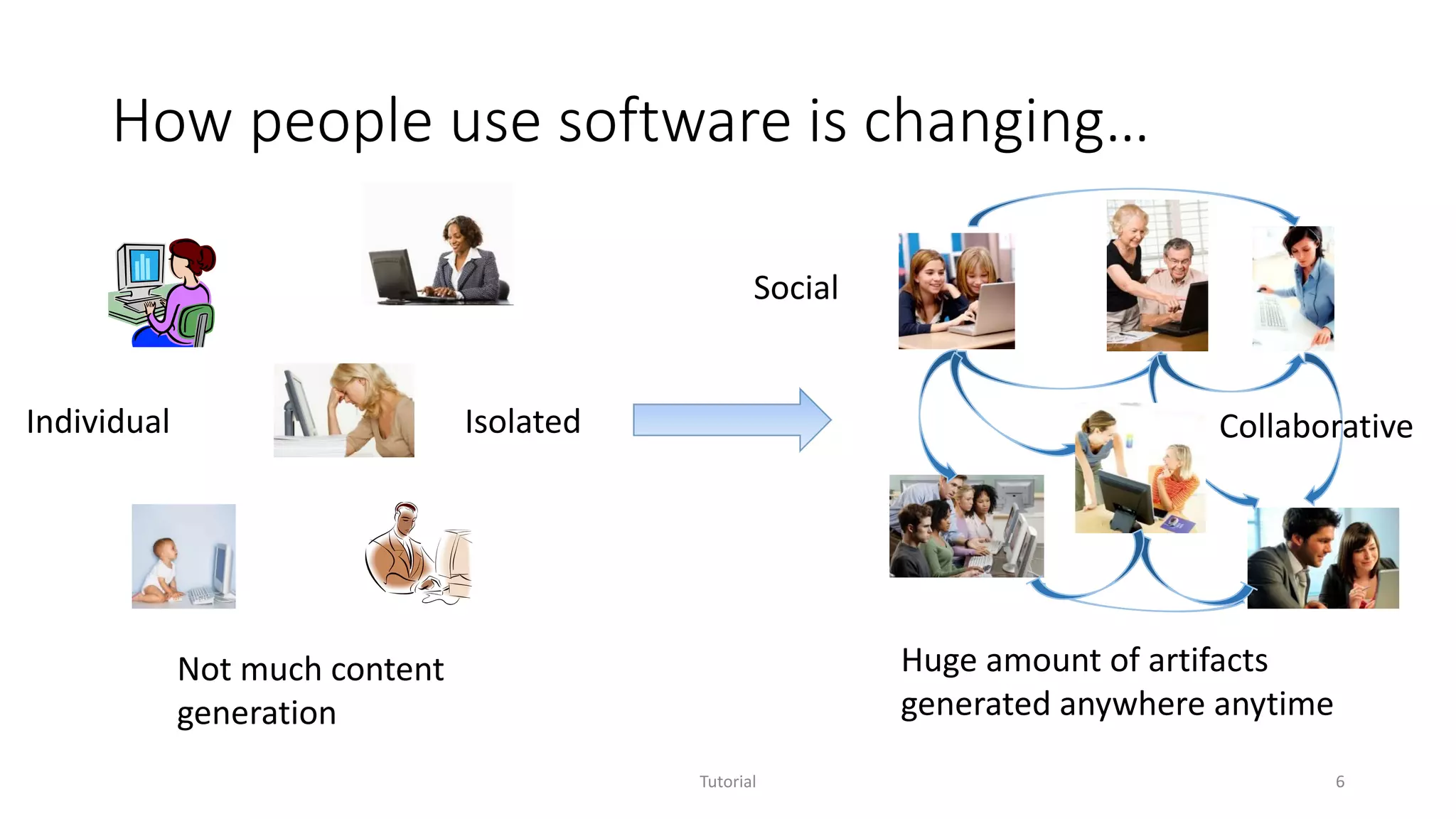

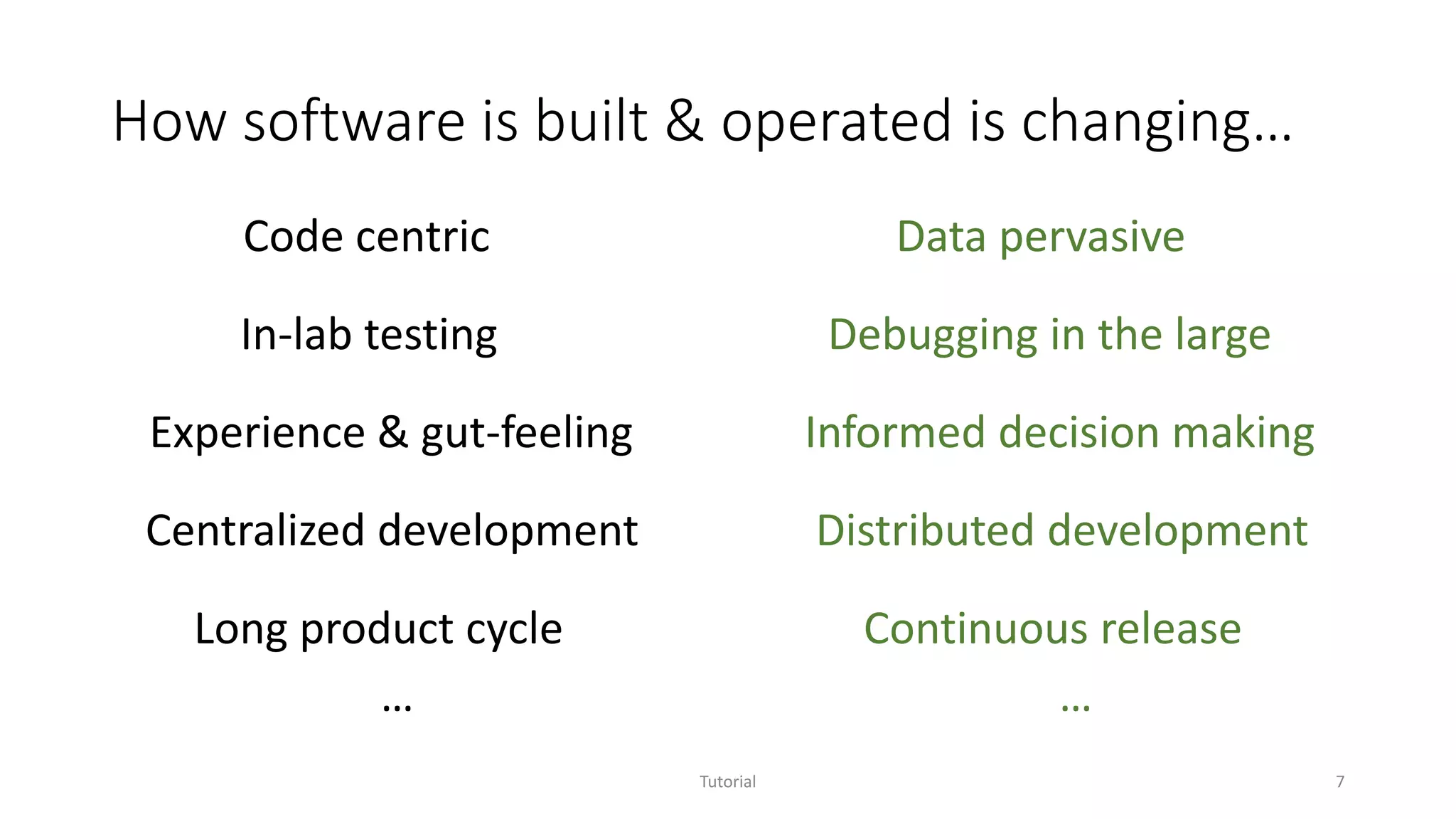

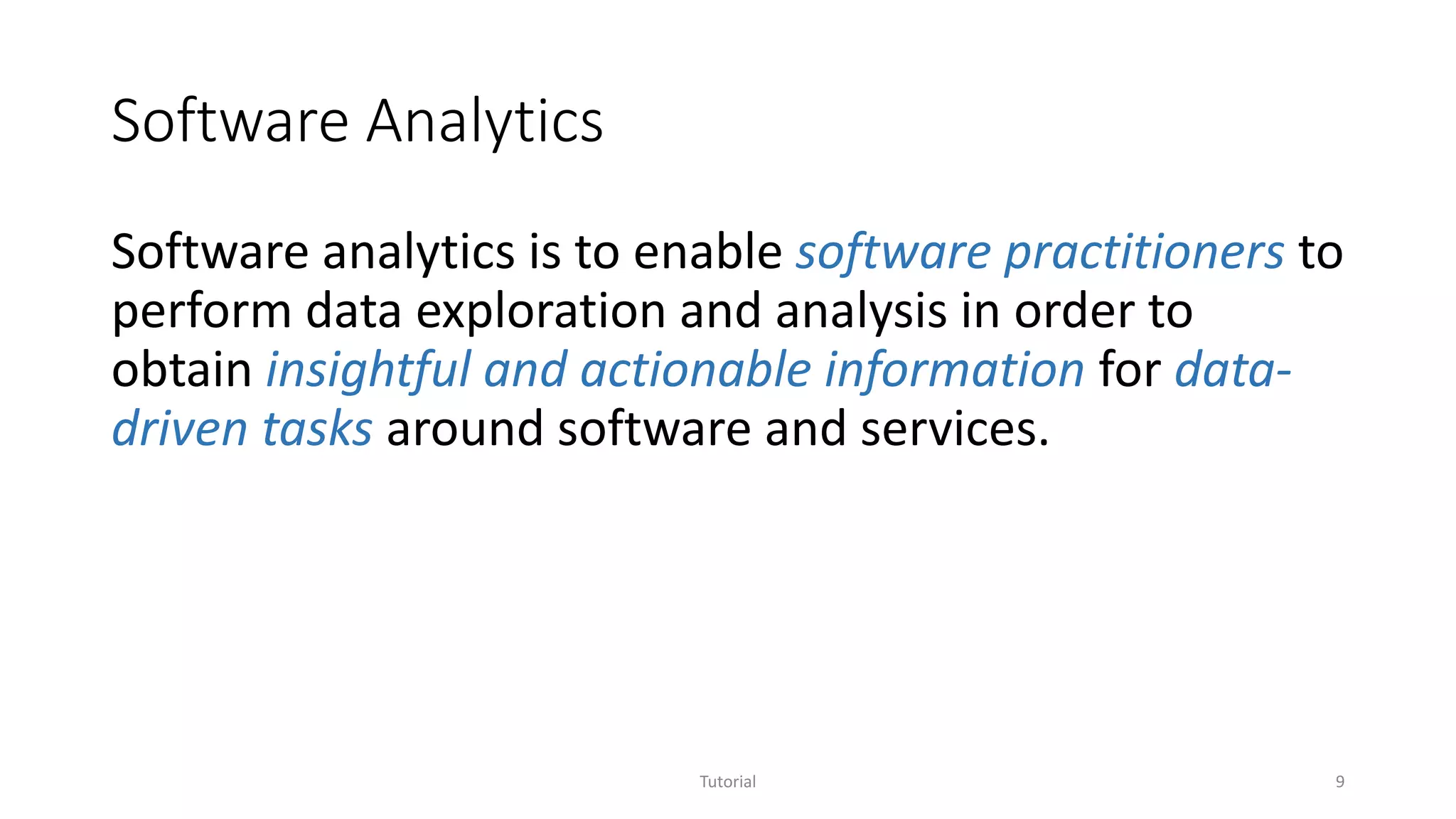

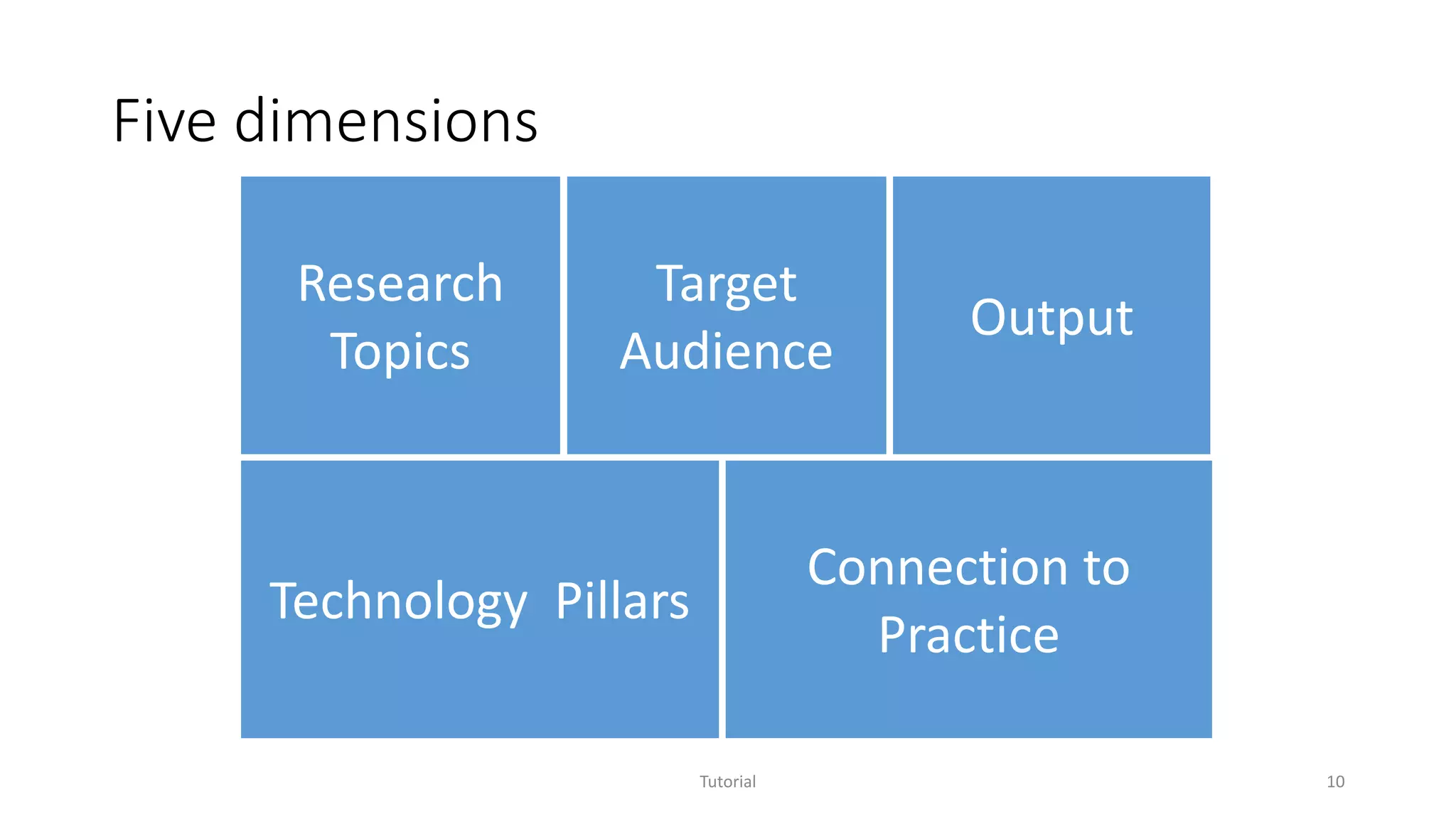

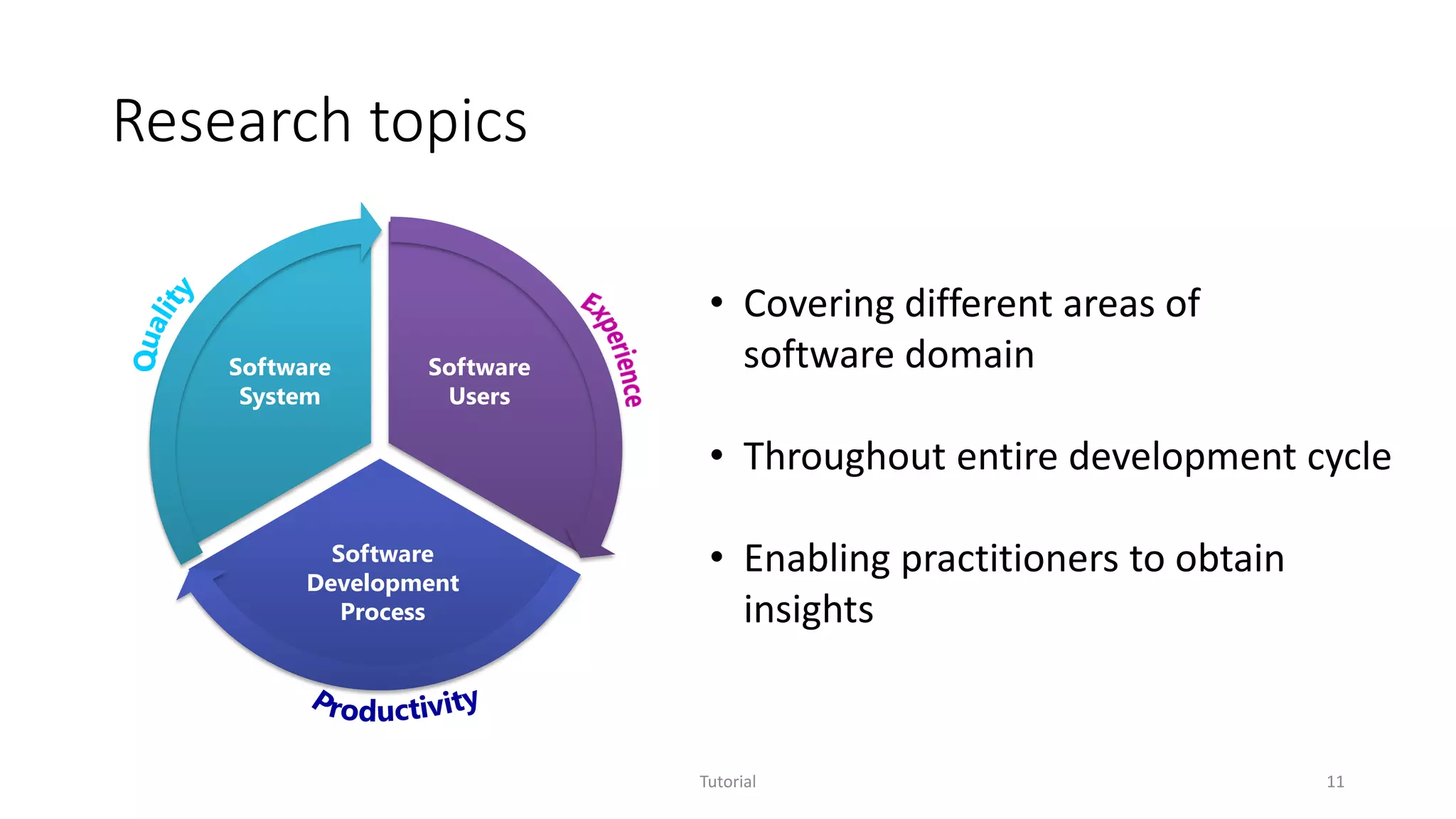

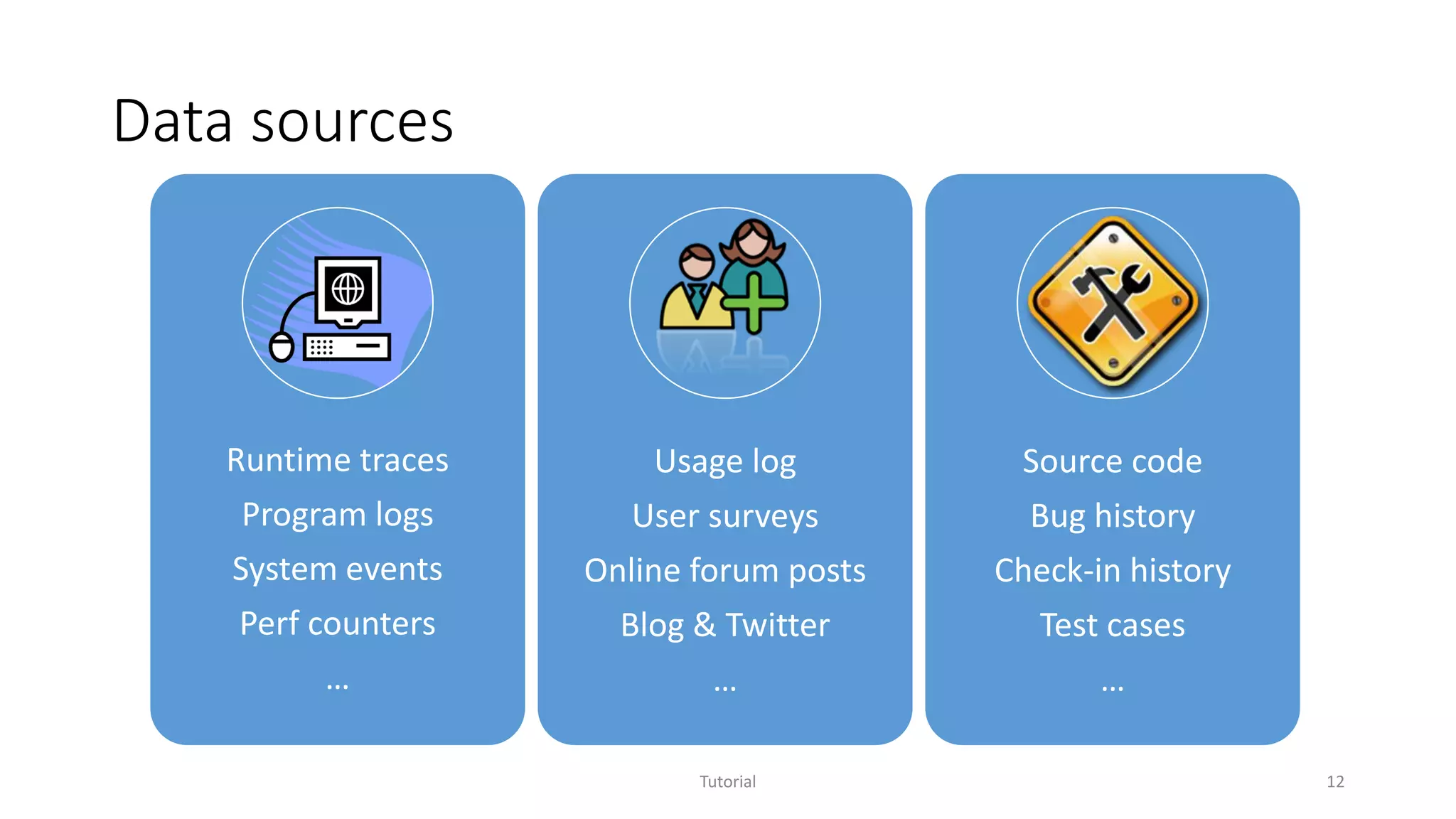

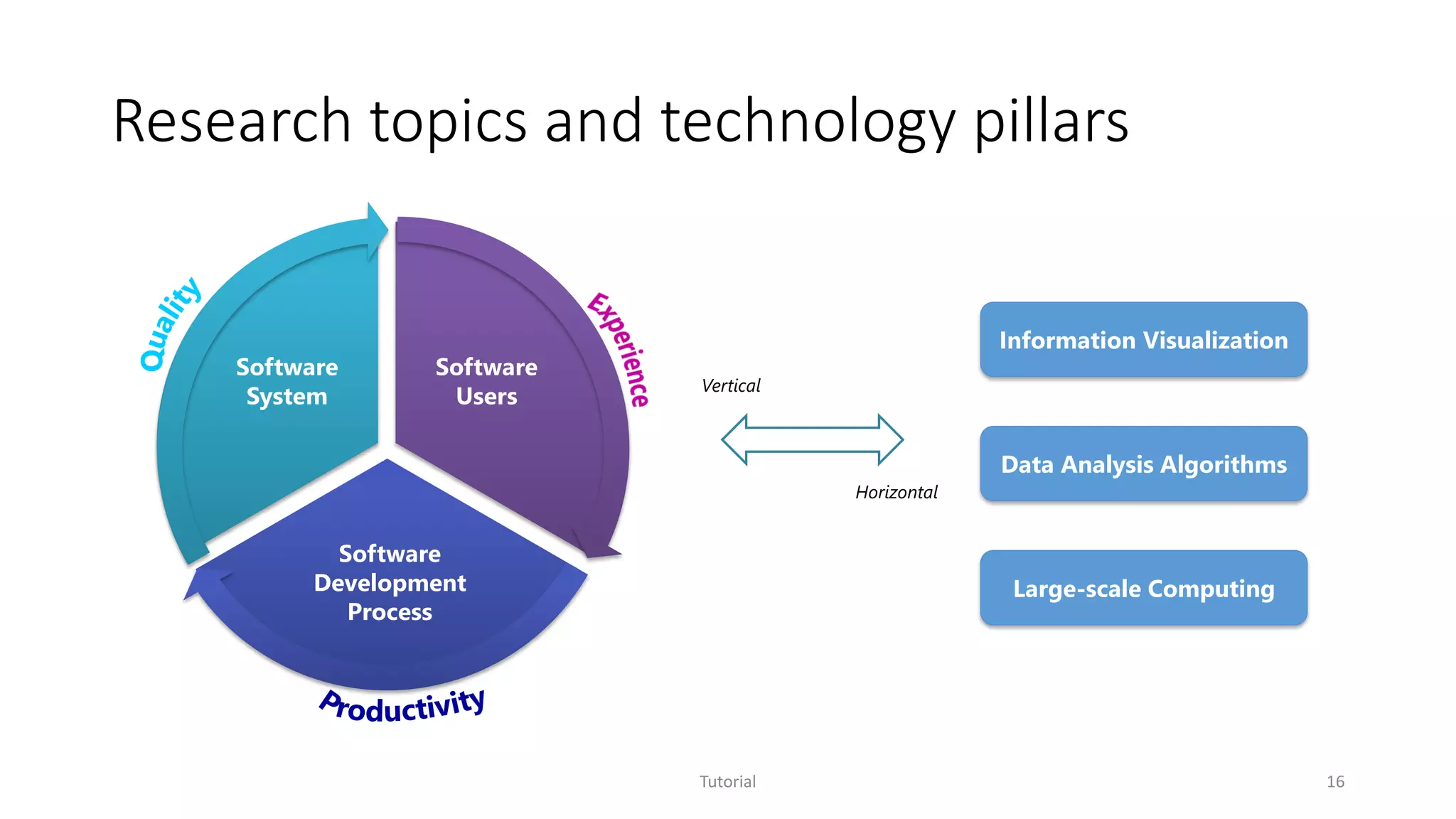

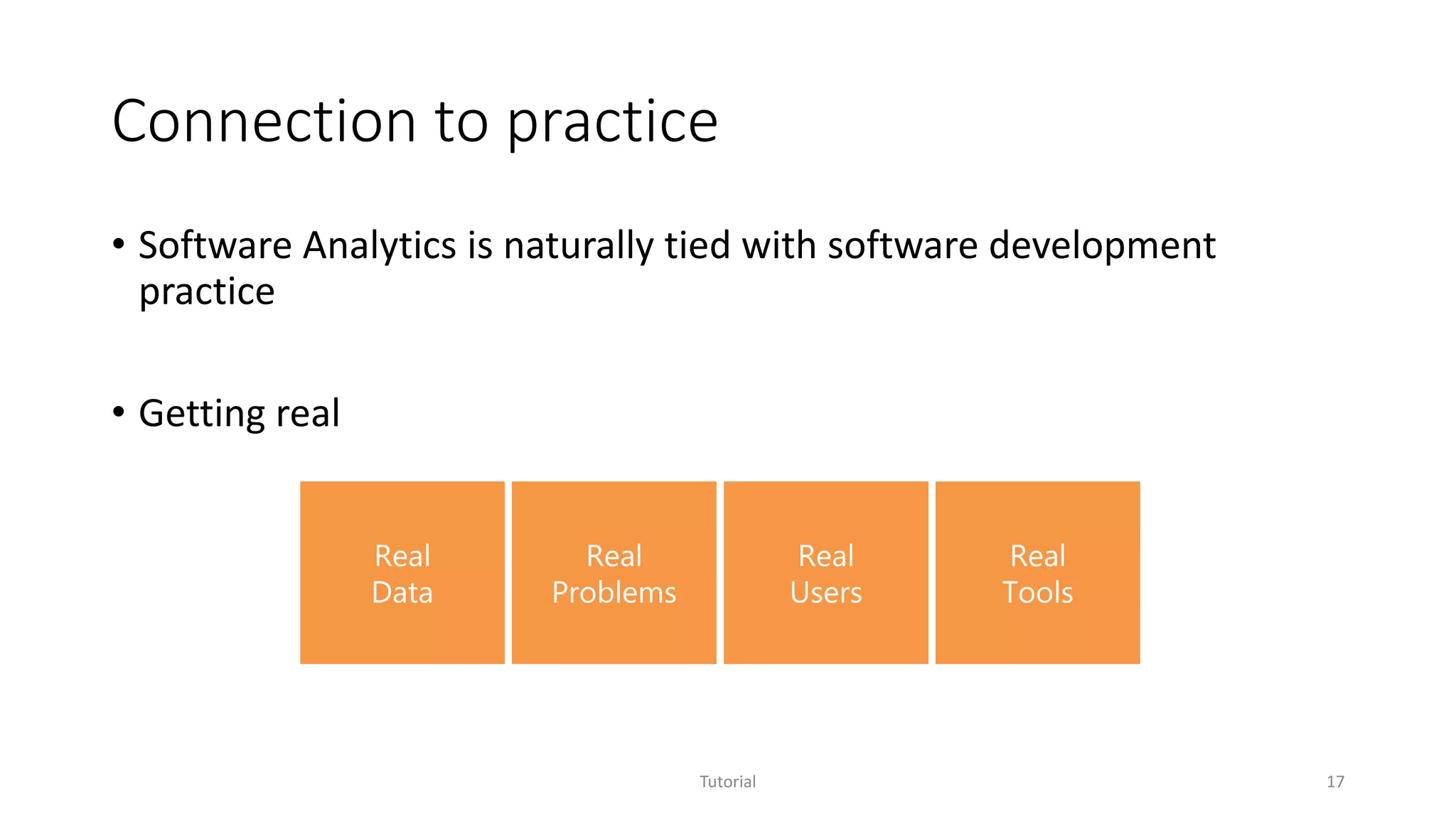

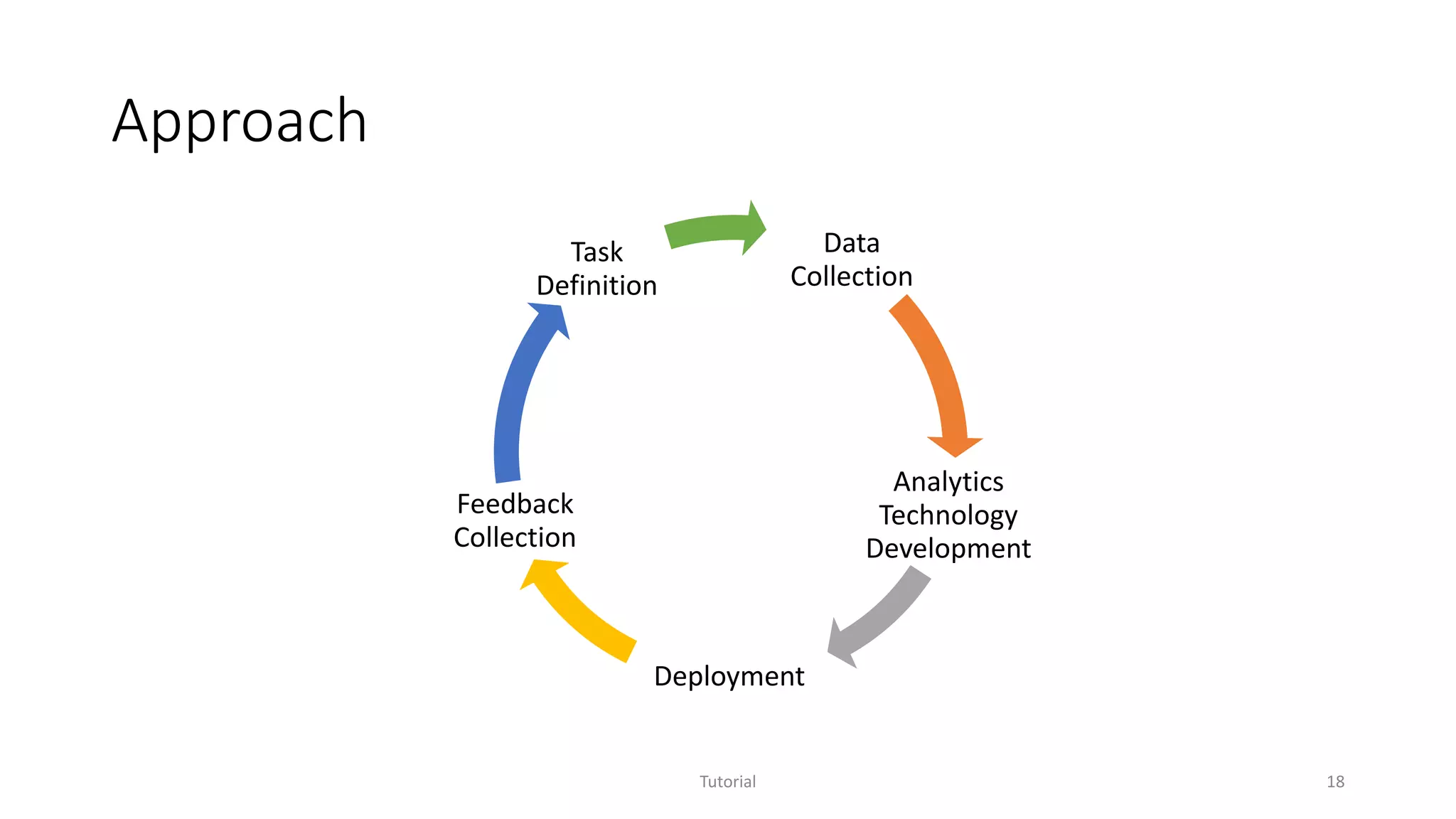

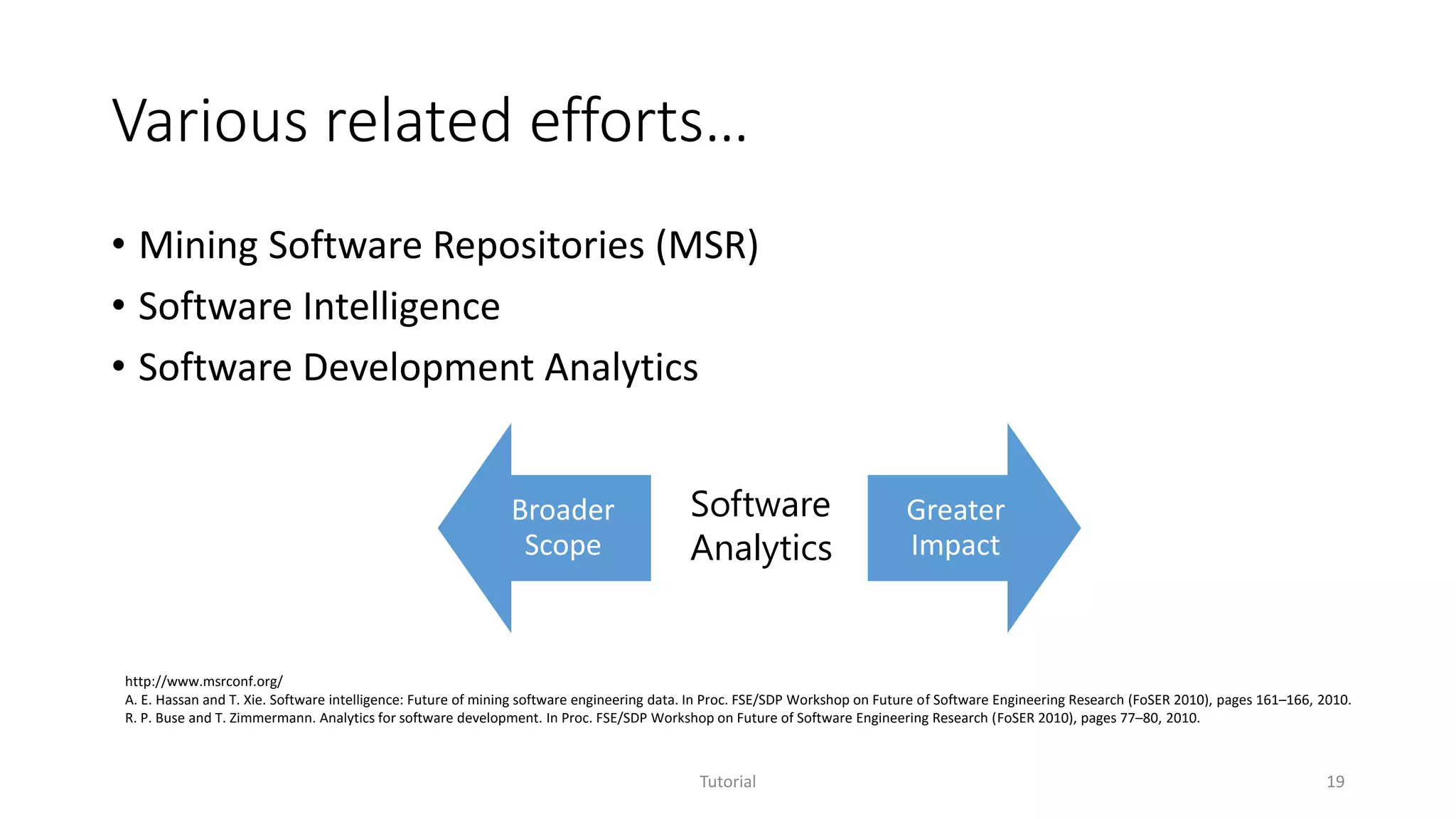

The document discusses software analytics, whose purpose is to help practitioners explore and analyze data to obtain actionable insights for software development and services. It traces the evolution of software analytics and presents its key projects, focusing on performance debugging and incident management for online services. It also stresses the importance of engaging real users and of building analytics that address practical challenges in software development.

![XIAO: Code clone analysis

• Motivation

• Copy-and-paste is a common developer behavior

• A real tool widely adopted internally and externally

• XIAO enables code clone analysis in the following way

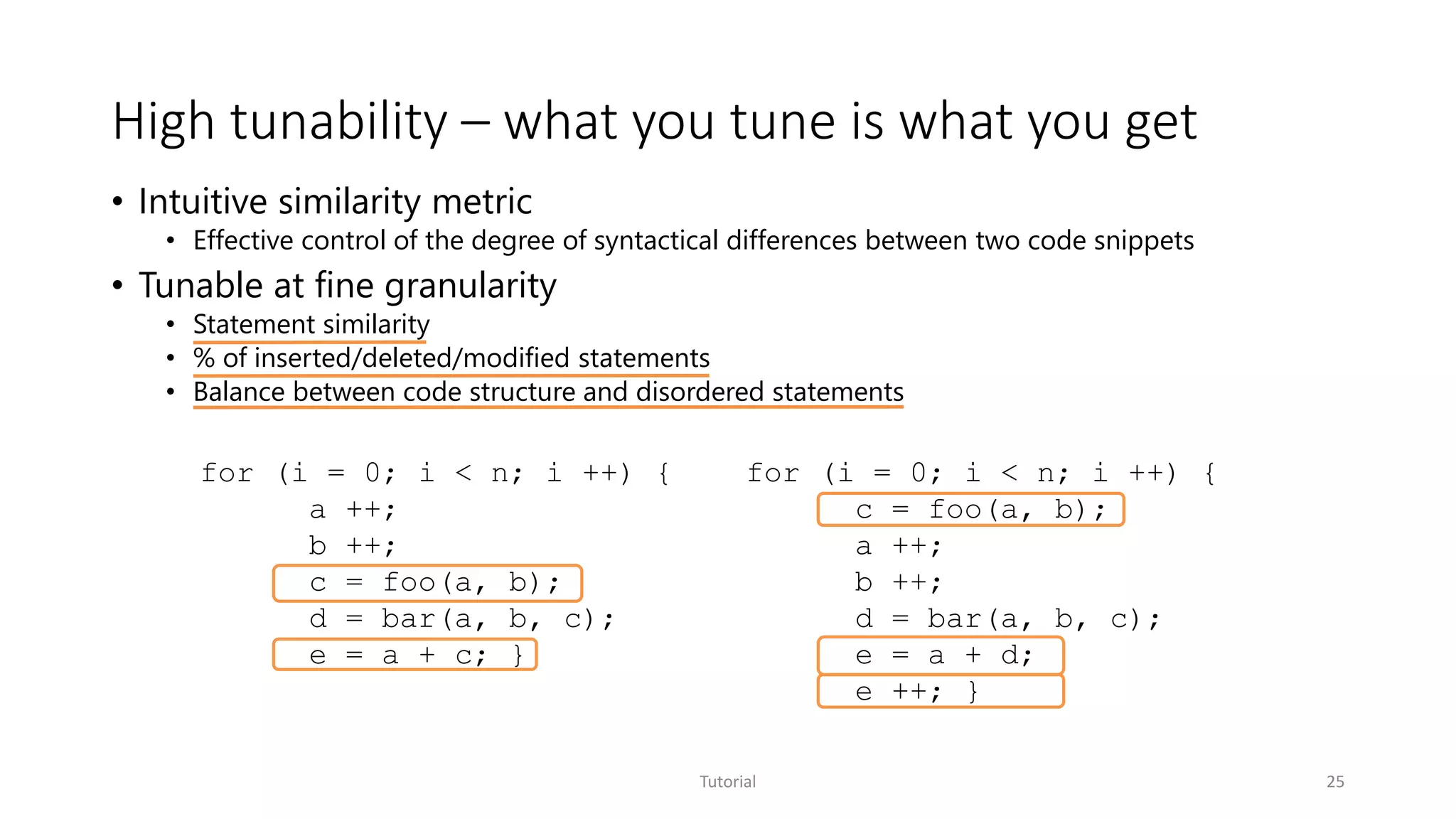

• High tunability

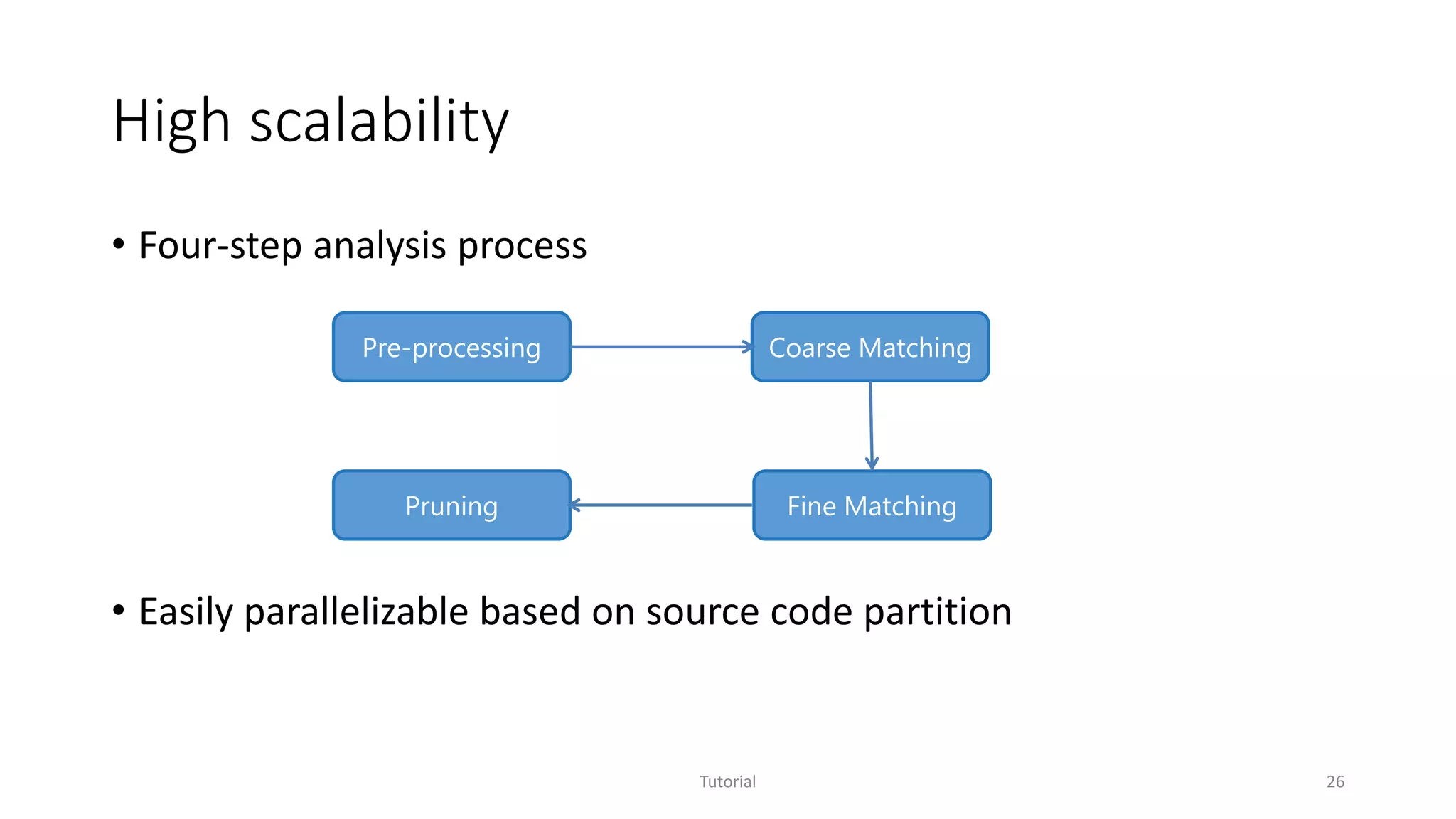

• High scalability

• High compatibility

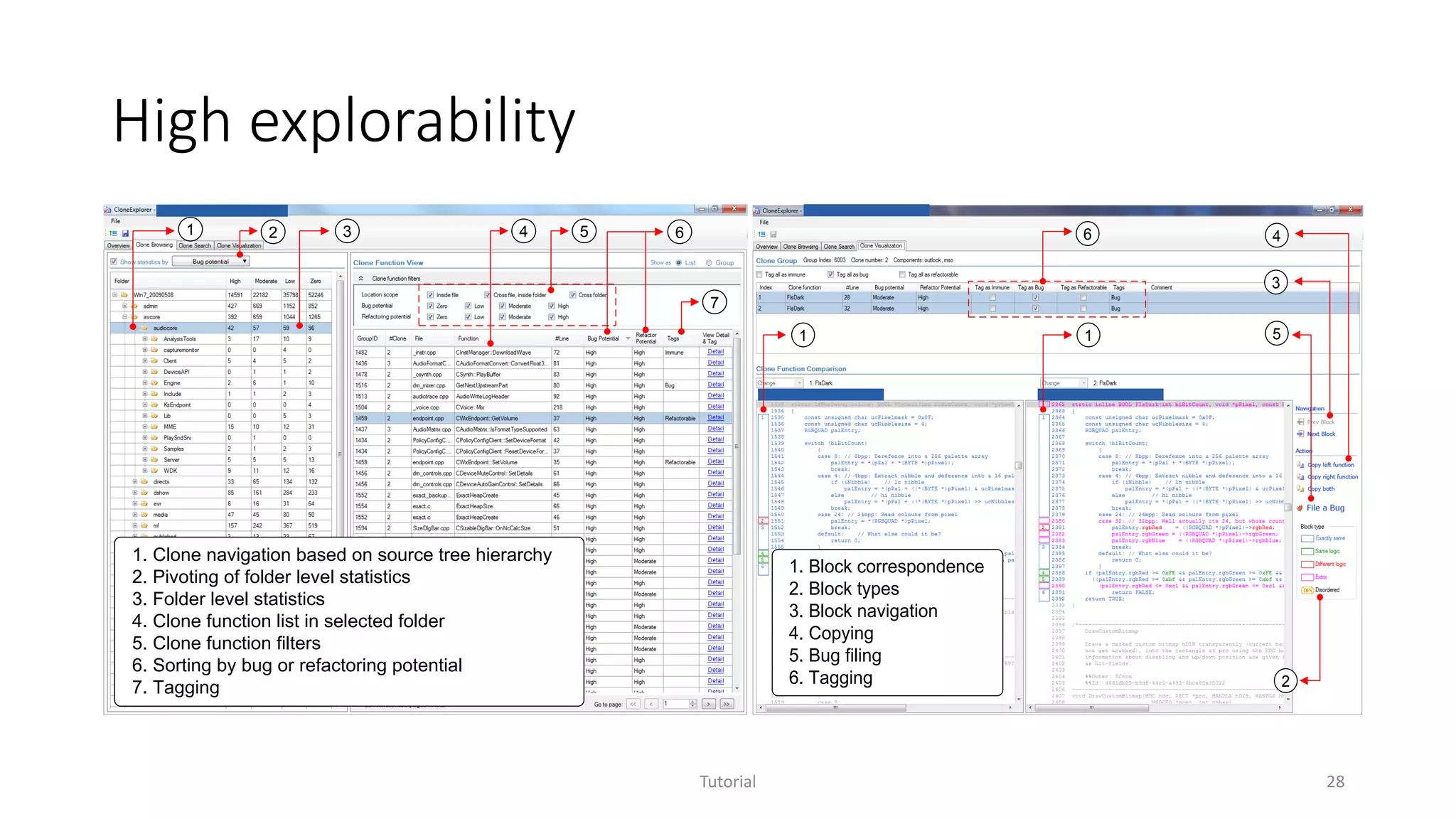

• High explorability

Tutorial 24

[IWSC’11 Dang et.al.]](https://image.slidesharecdn.com/oopsla2014tutorial-softwareanalytics-151029043059-lva1-app6892/75/Software-Analytics-Achievements-and-Challenges-24-2048.jpg)