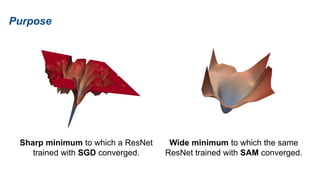

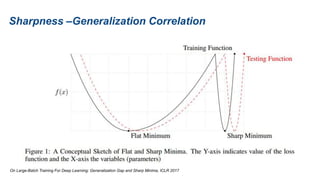

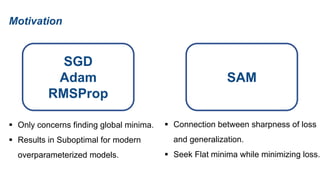

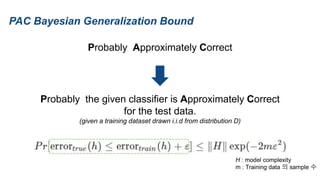

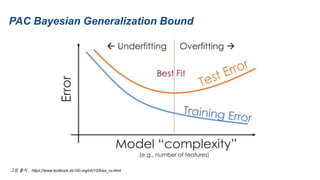

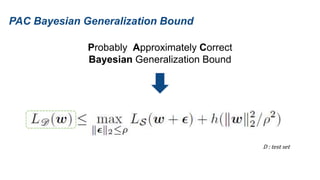

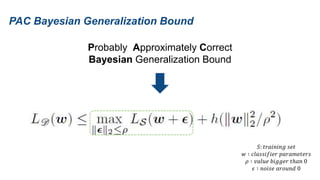

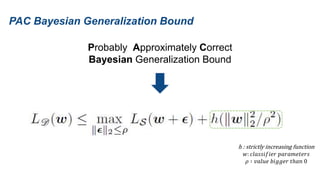

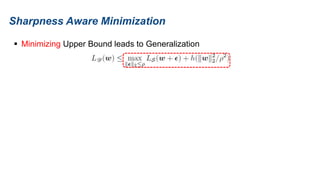

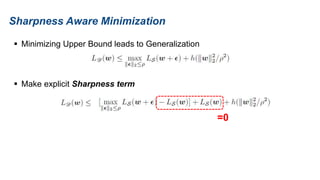

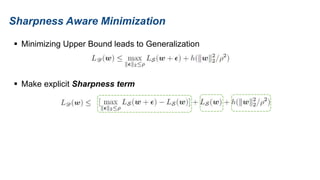

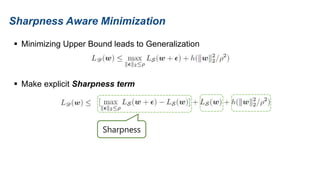

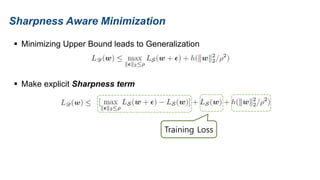

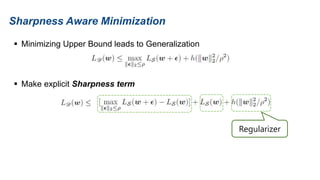

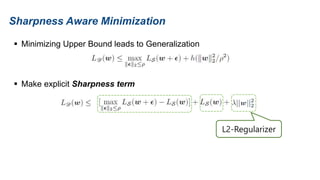

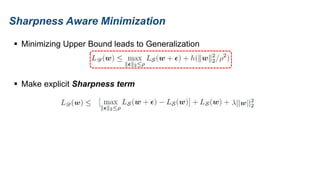

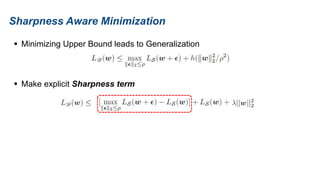

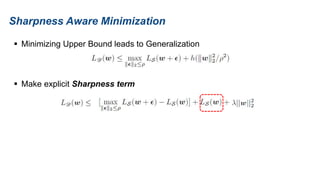

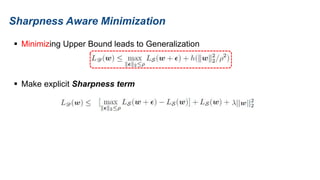

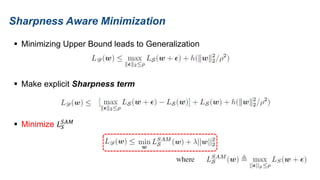

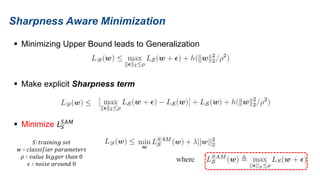

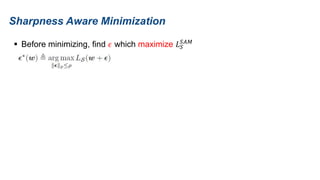

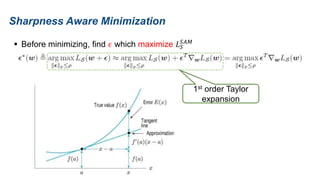

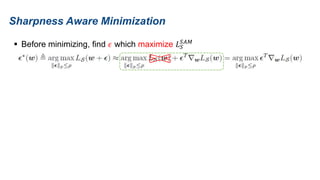

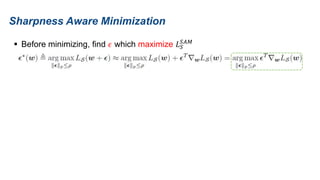

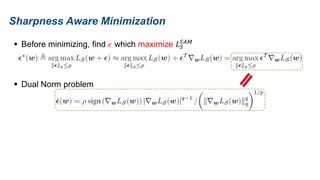

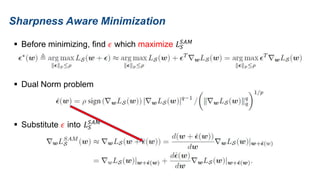

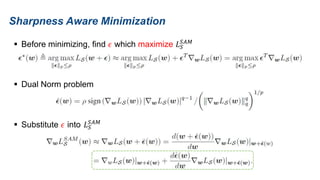

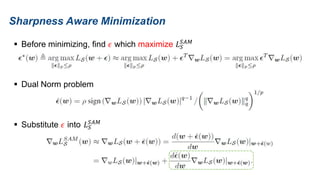

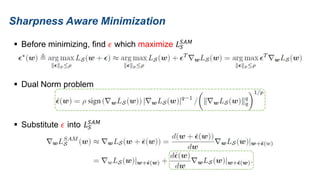

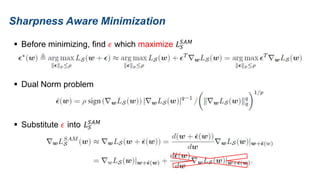

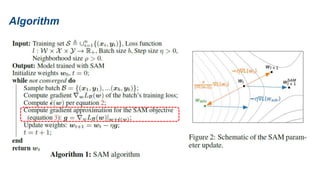

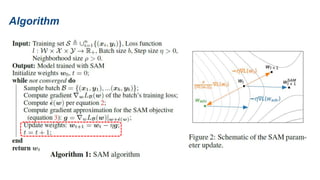

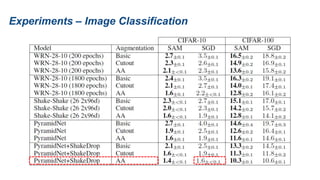

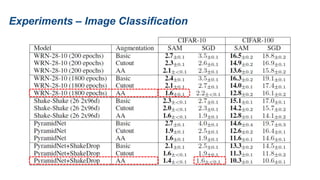

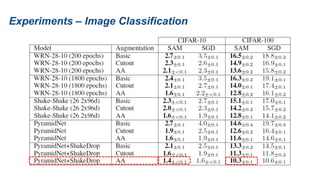

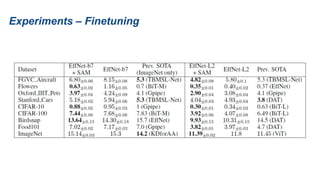

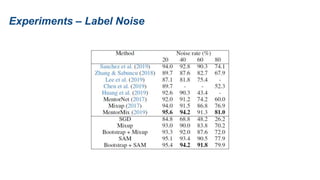

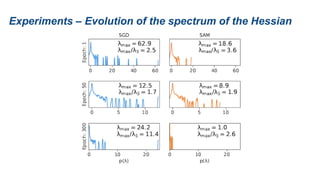

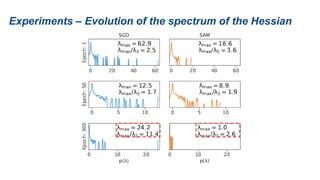

The document presents research on sharpness-aware minimization (SAM), a procedure for improving generalization in deep learning that is used together with standard gradient-based optimizers such as SGD. It discusses the connection between the sharpness of the loss landscape and generalization. It then explains how SAM seeks parameters that lie in neighborhoods of uniformly low loss (flatter minima) rather than parameters that merely have a low loss value. The approach minimizes a sharpness-based upper bound on the generalization error: the worst-case training loss within a small neighborhood of the current parameters, which equals the training loss plus a sharpness term. Its effectiveness is evaluated empirically on tasks including image classification and training under label noise.
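The sharpness-aware update described above can be sketched concretely. Assuming an L2 neighborhood of radius $\rho$, the inner problem $\max_{\|\epsilon\|_2 \le \rho} L(w + \epsilon)$ is approximated to first order by $\hat\epsilon = \rho\, \nabla L(w) / \|\nabla L(w)\|_2$, and the parameters are then updated from $w$ using the gradient evaluated at $w + \hat\epsilon$. The code below is a minimal pure-Python illustration of that two-step update under these assumptions, not the authors' implementation. The toy quadratic loss and the names `CURVATURE`, `loss`, `grad`, `sam_perturbation`, and `sam_step` are hypothetical, and any weight-decay term is omitted.

```python
import math
from typing import Callable, List

Vector = List[float]
GradFn = Callable[[Vector], Vector]


def l2_norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


def sam_perturbation(g: Vector, rho: float) -> Vector:
    """First-order worst-case perturbation inside an L2 ball of radius rho.

    Assumes p = 2, where the approximation reduces to eps_hat = rho * g / ||g||_2.
    """
    norm = l2_norm(g)
    if norm == 0.0:
        # Stationary point: no ascent direction, so do not perturb.
        return [0.0] * len(g)
    return [rho * x / norm for x in g]


def sam_step(w: Vector, grad_fn: GradFn, lr: float, rho: float) -> Vector:
    """One SAM update (hypothetical helper): perturb, re-evaluate, descend from w."""
    # 1) Gradient at the current parameters gives the ascent direction.
    eps = sam_perturbation(grad_fn(w), rho)
    # 2) Gradient at the perturbed point w + eps_hat.
    g_sam = grad_fn([wi + ei for wi, ei in zip(w, eps)])
    # 3) Plain gradient-descent step from the ORIGINAL w using that gradient.
    return [wi - lr * gi for wi, gi in zip(w, g_sam)]


# Hypothetical toy problem: anisotropic quadratic L(w) = 0.5 * sum(a_i * w_i^2).
CURVATURE = [1.0, 10.0]


def loss(w: Vector) -> float:
    return 0.5 * sum(a * x * x for a, x in zip(CURVATURE, w))


def grad(w: Vector) -> Vector:
    return [a * x for a, x in zip(CURVATURE, w)]


if __name__ == "__main__":
    w = [1.0, 1.0]
    print(f"initial loss: {loss(w):.4f}")
    for _ in range(200):
        w = sam_step(w, grad, lr=0.05, rho=0.05)
    print(f"loss after 200 SAM steps: {loss(w):.6f}")
```

In this configuration the iterates do not converge exactly to the minimum. Because $\hat\epsilon$ keeps length $\rho$ even as the gradient shrinks, the high-curvature coordinate settles into a small oscillation around zero. Practical implementations typically hand the gradient from step 2 to a base optimizer such as SGD, at the cost of two gradient evaluations per update.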