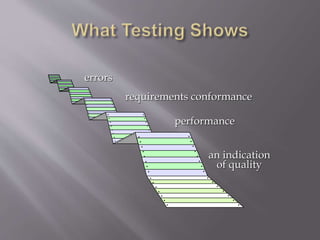

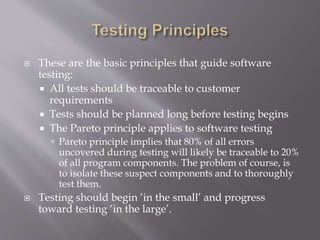

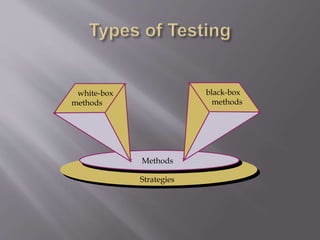

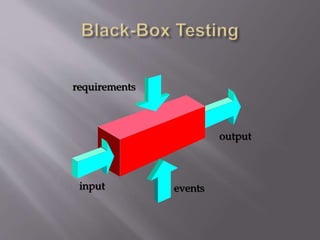

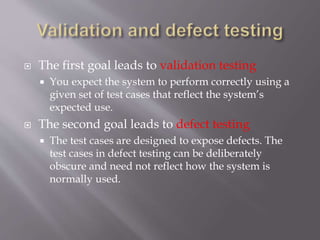

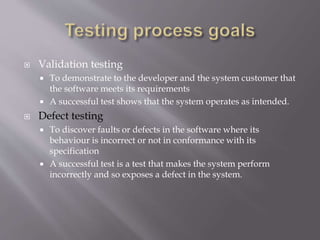

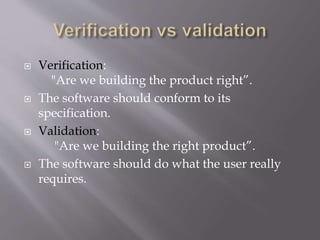

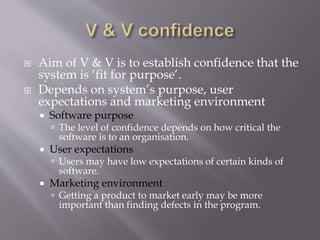

Testing is the process of executing a program with the intent of finding errors before it is delivered. The main types are unit, integration, system, and validation testing, which together help verify that requirements are met and expose defects. The goal is to establish confidence that the system is fit for purpose and meets user expectations. How much confidence is needed depends on how critical the software is and on the marketing environment.
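To make the difference between the first two levels concrete, here is a minimal sketch using Python's standard `unittest` module. The functions `apply_discount` and `order_total` are hypothetical examples invented for illustration and do not come from the slides. The unit tests exercise one function in isolation, while the integration test checks that two functions work correctly together.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical unit under test: reduce price by the given percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def order_total(prices, percent):
    """Hypothetical composite: sum the item prices, then apply the discount."""
    return apply_discount(sum(prices), percent)


class UnitTests(unittest.TestCase):
    """Unit level: one function, checked in isolation."""

    def test_discount_is_applied(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


class IntegrationTests(unittest.TestCase):
    """Integration level: the summing and discount steps working together."""

    def test_order_total(self):
        self.assertEqual(order_total([10.0, 20.0, 30.0], 50), 30.0)


if __name__ == "__main__":
    unittest.main()
```

System and validation testing would sit above these levels, exercising the complete application against its requirements and user expectations rather than individual functions.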