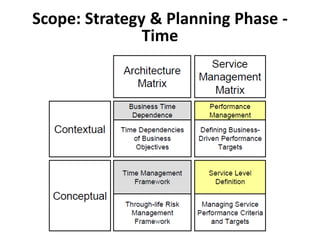

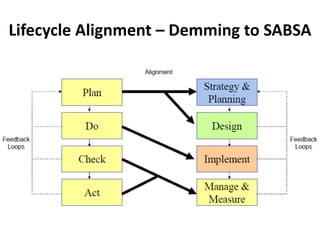

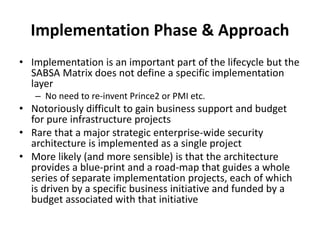

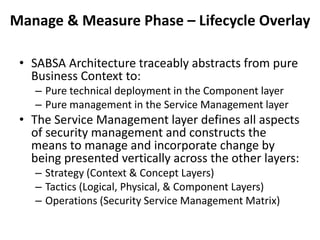

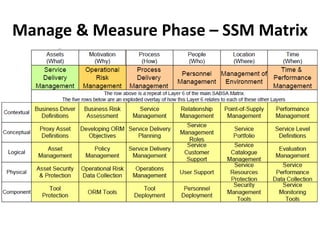

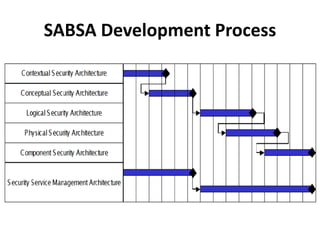

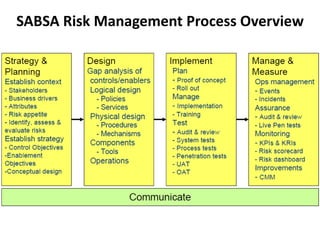

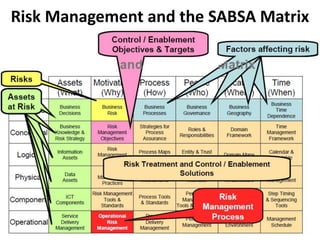

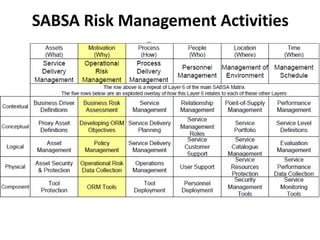

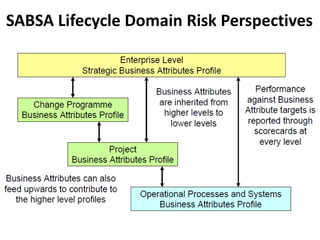

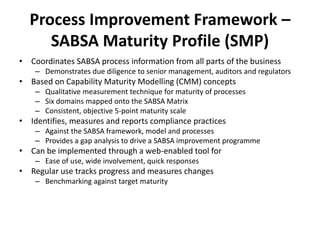

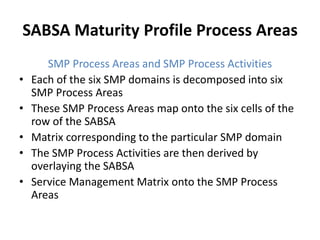

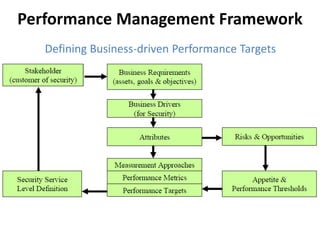

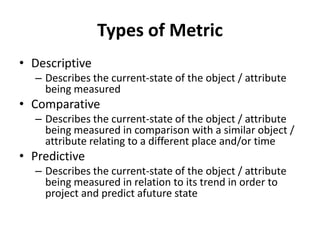

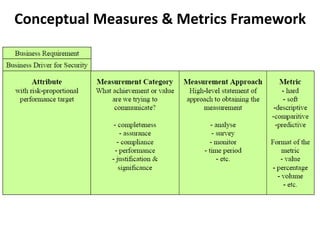

The document discusses implementation approaches for SABSA security architectures. It notes that SABSA does not define a specific implementation layer. Instead, implementation is more likely to proceed as a series of separate projects, each guided by the architecture and funded by business initiatives. The Service Management layer of SABSA defines how change is managed and incorporated across the other layers through strategy, tactics, and operations. The document also discusses performance management concepts used to define business-driven targets.