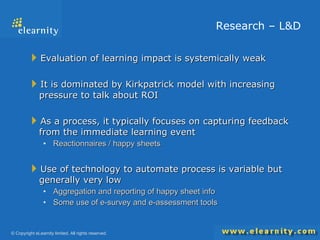

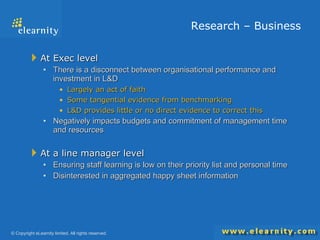

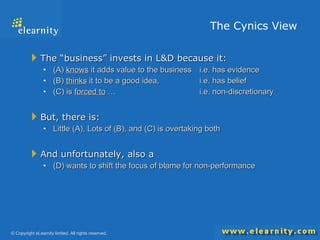

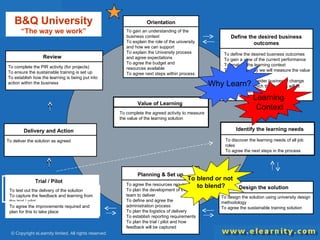

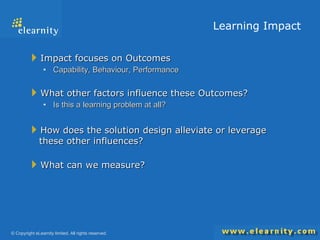

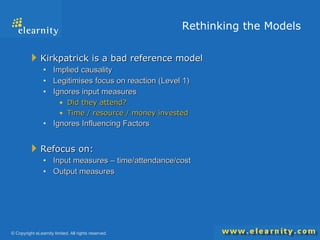

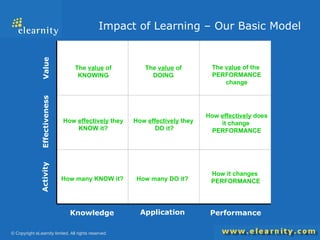

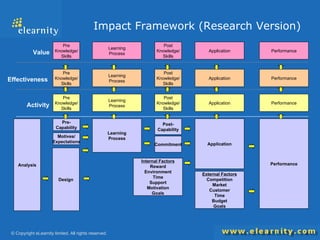

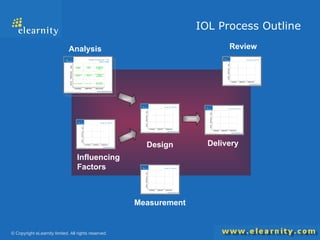

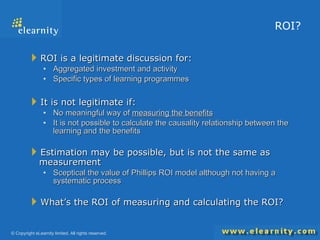

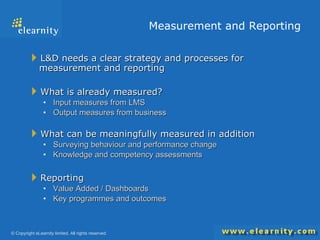

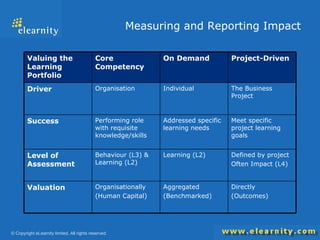

The document critiques current approaches to evaluation in learning and development (L&D). It highlights the dominance of the Kirkpatrick model and the weak link between L&D investment and organizational performance. It argues for refocusing on measurable business outcomes and on effective analysis of learning impact, and suggests that ROI can be a viable discussion point under certain conditions. It also stresses the need for a comprehensive measurement strategy and acknowledges how difficult it is to produce evidence of the benefits of L&D initiatives.

![Rethinking evaluation: its value and limits in learning. David Wilson, [email_address]. Europe’s leading Corporate Learning Analysts](https://image.slidesharecdn.com/rethinkingevaluation-091207053649-phpapp01/75/Rethinking-Evaluation-1-2048.jpg)

![Questions? [email_address]. Research Knowledge Base: http://research.elearnity.com](https://image.slidesharecdn.com/rethinkingevaluation-091207053649-phpapp01/85/Rethinking-Evaluation-20-320.jpg)