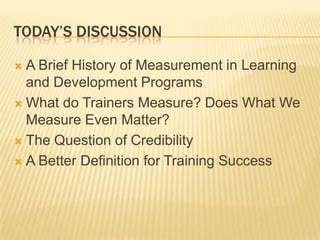

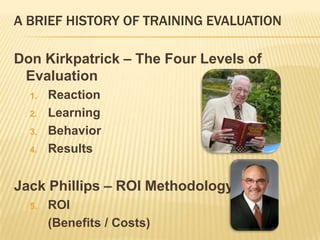

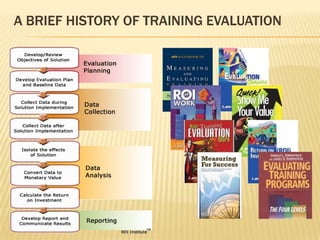

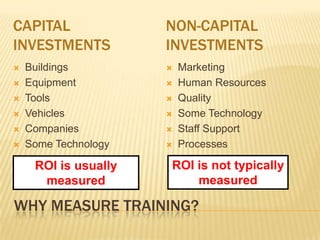

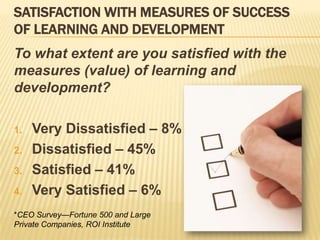

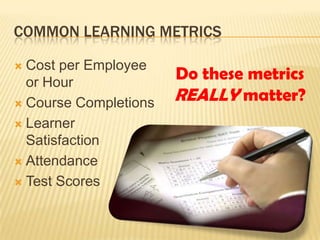

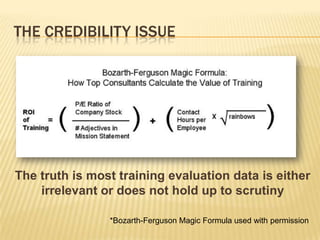

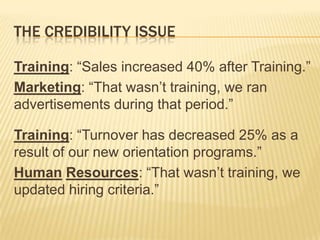

The document discusses how to evaluate the success of training programs. It argues that traditional metrics such as satisfaction scores and attendance are often irrelevant and lack credibility. Instead, success should be defined by stakeholders and measured by meaningful performance outcomes such as improved quality, reduced costs, or increased sales. The document recommends a structured approach called the Success Case Method, which identifies high- and low-impact cases to document how training helped improve work performance. The key message is that evaluation should focus on how training affects performance after the program, not just on reactions to the training or knowledge gained during it.