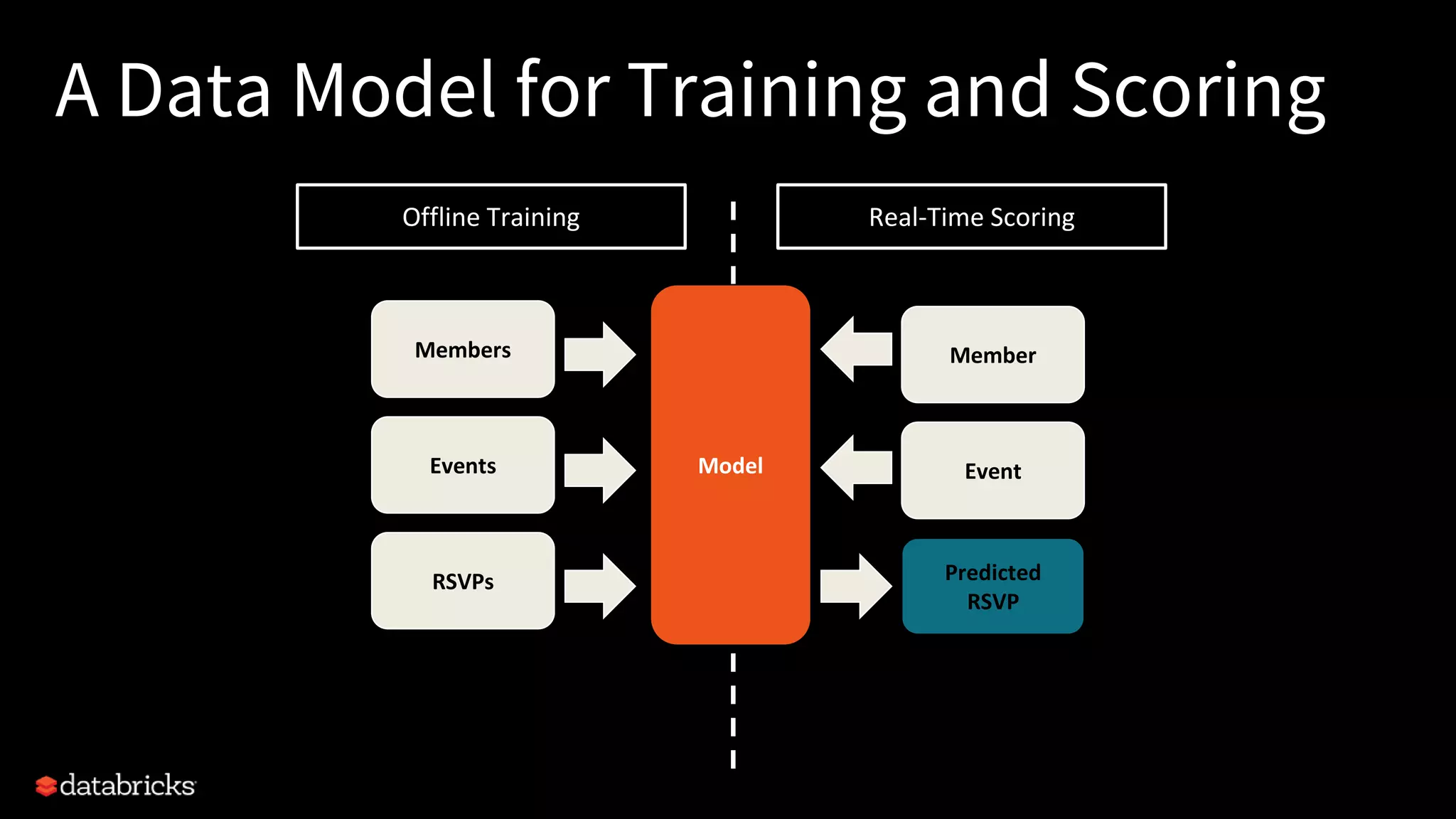

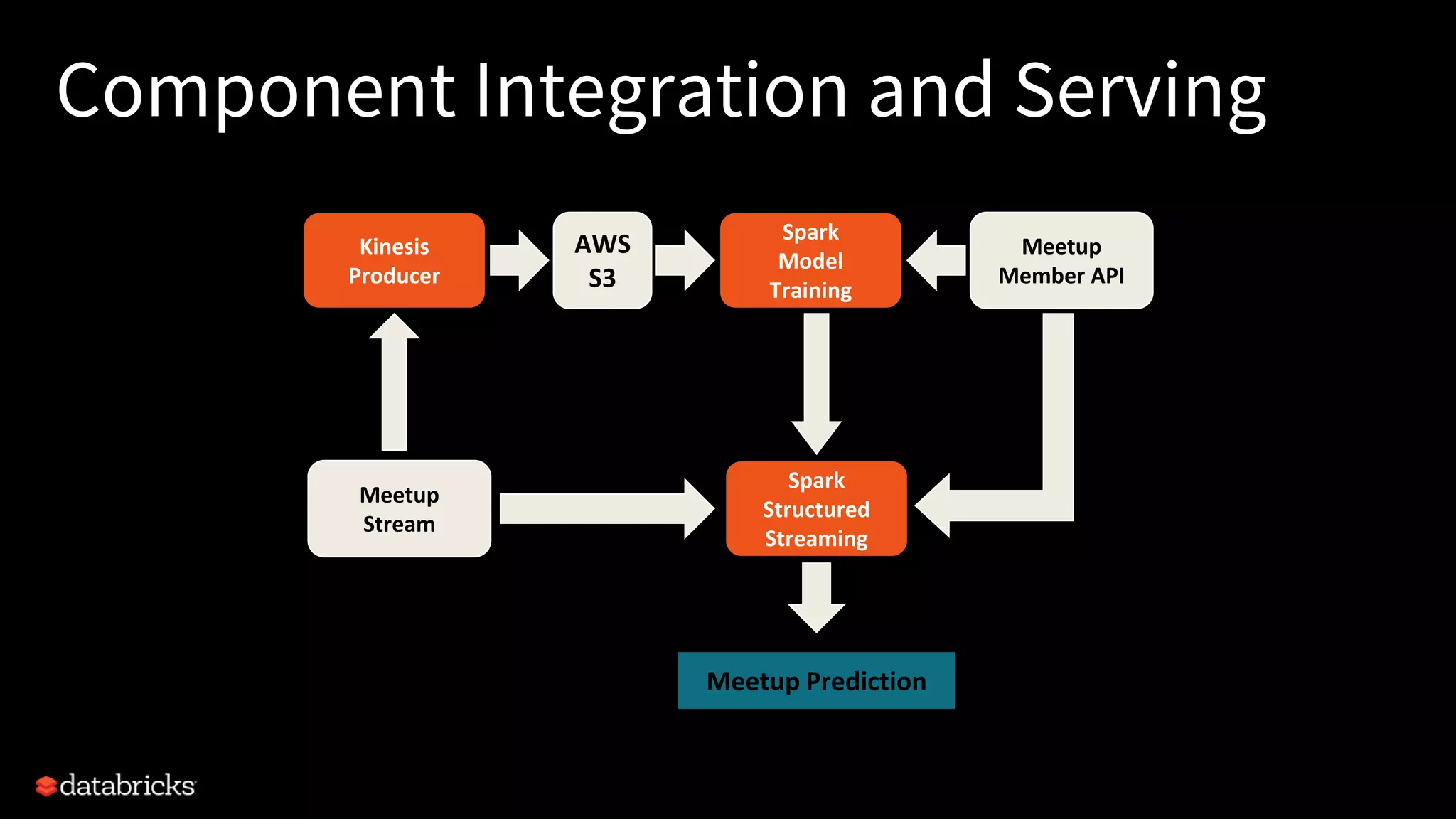

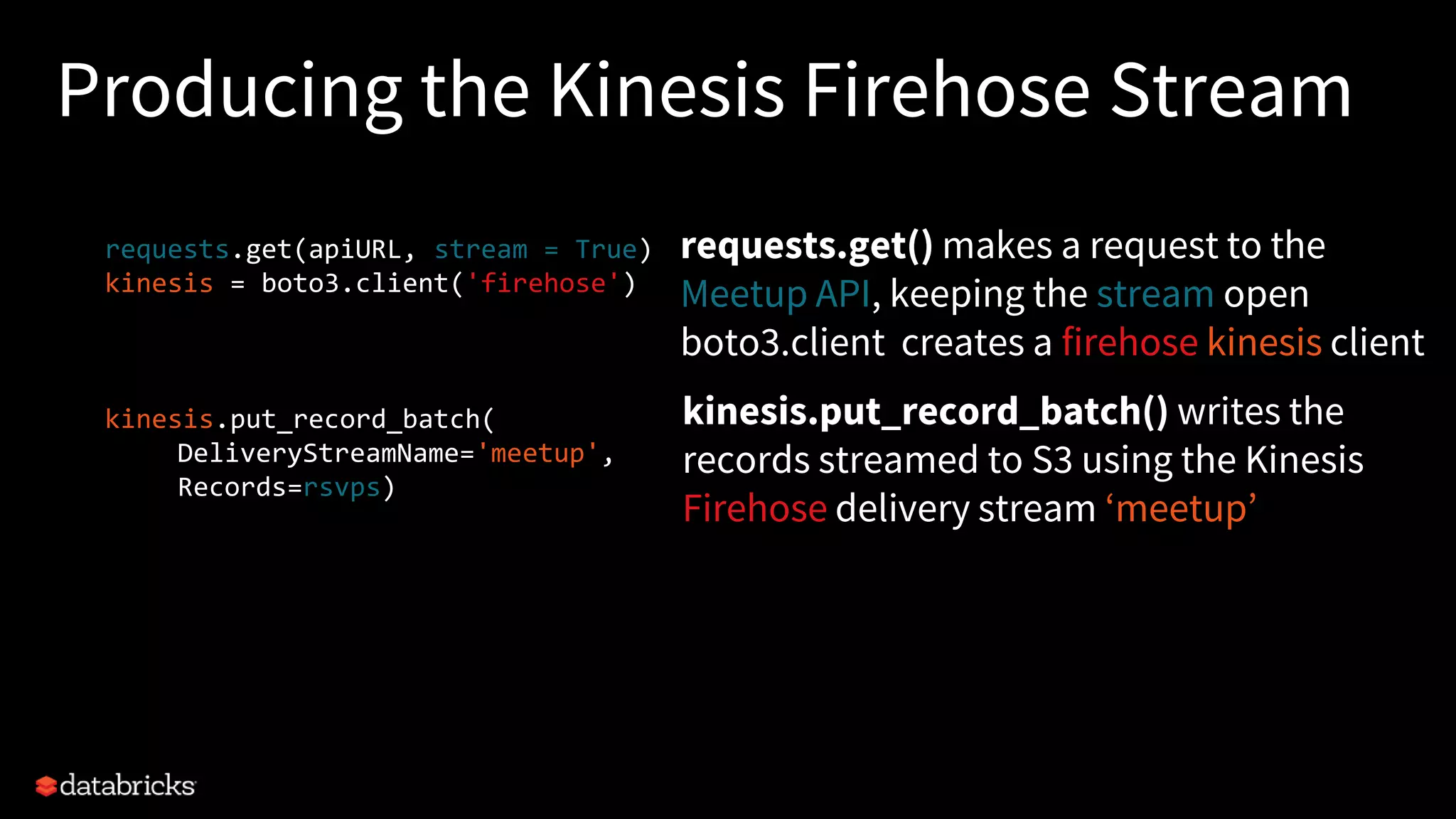

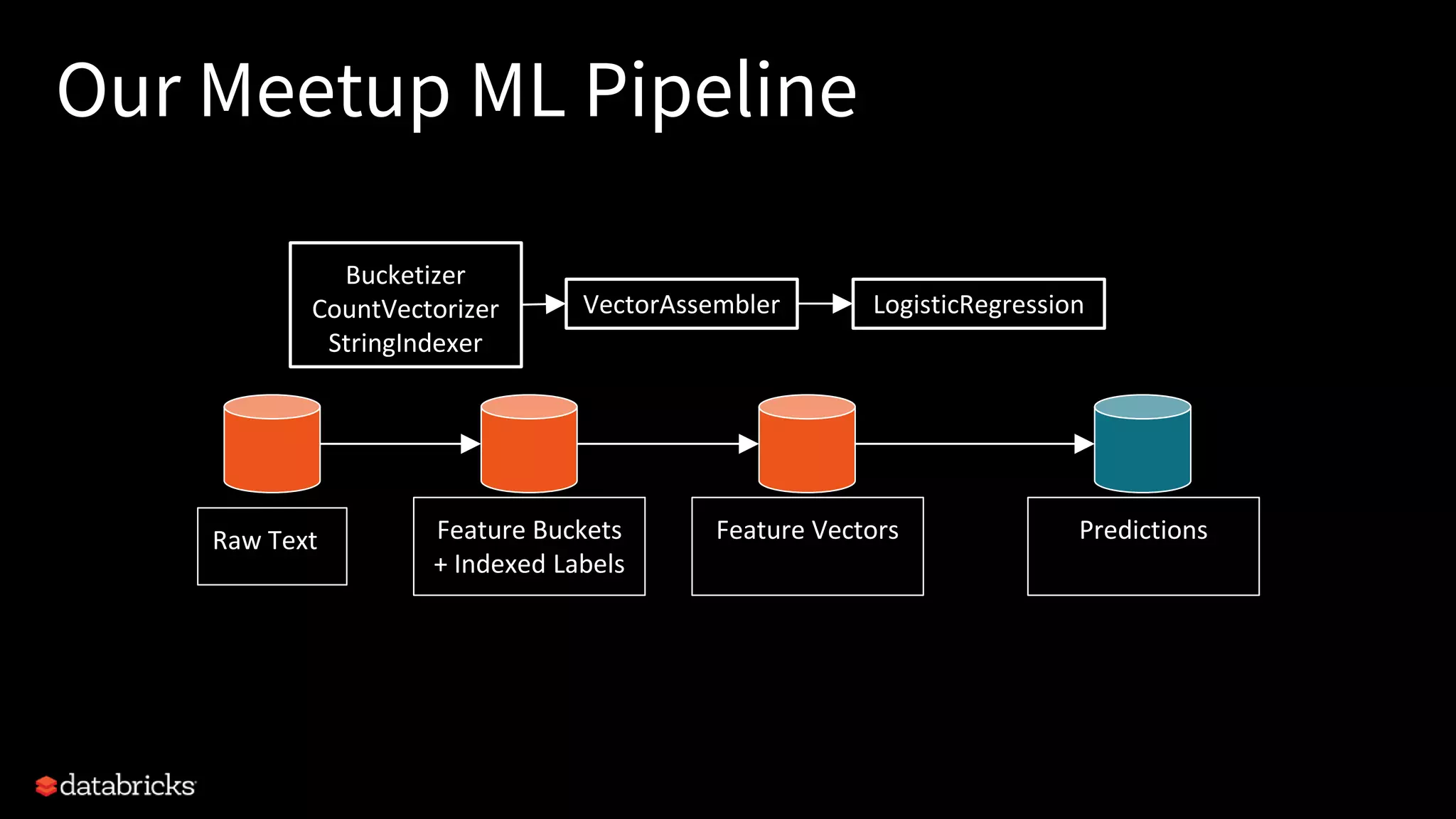

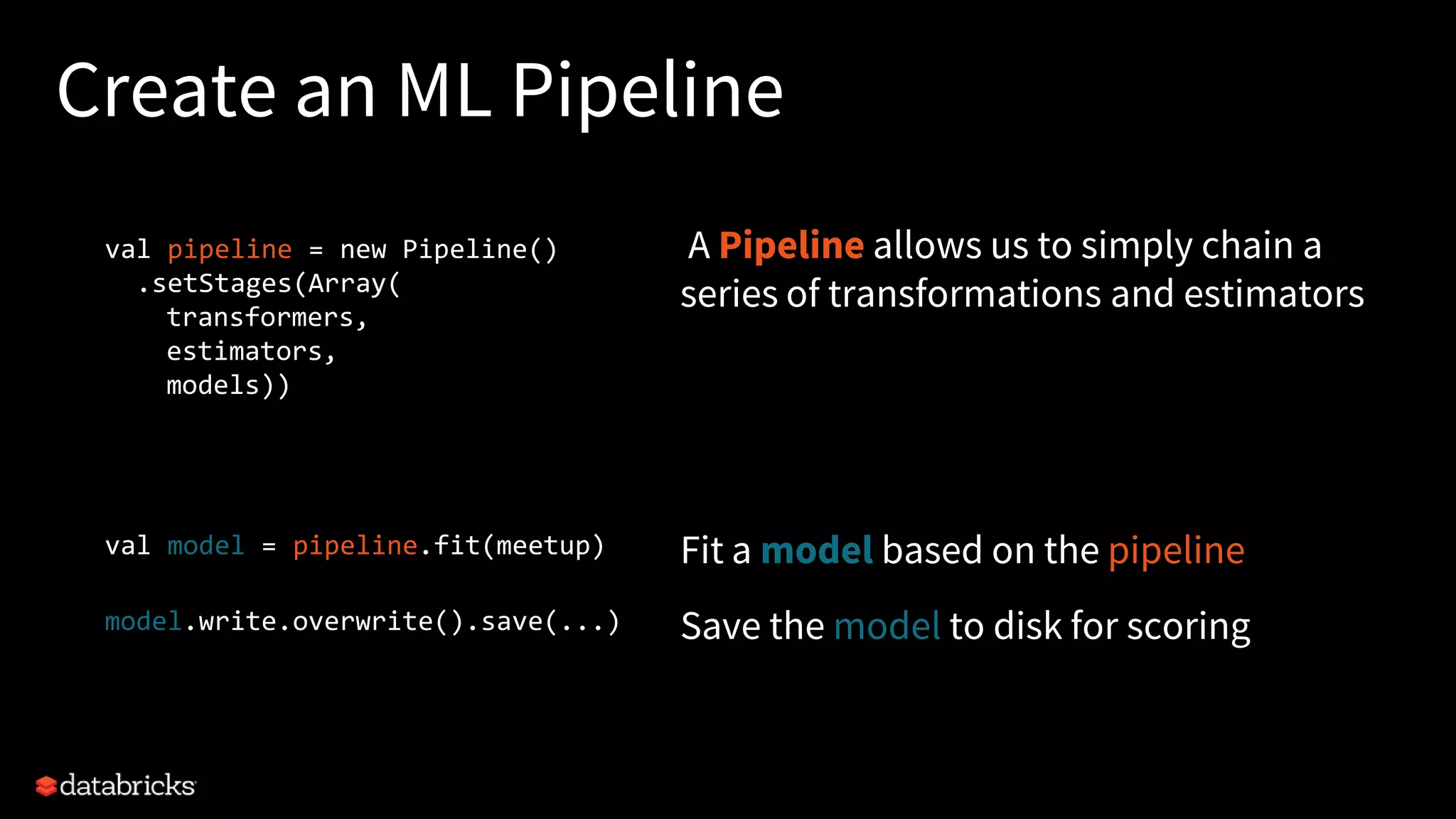

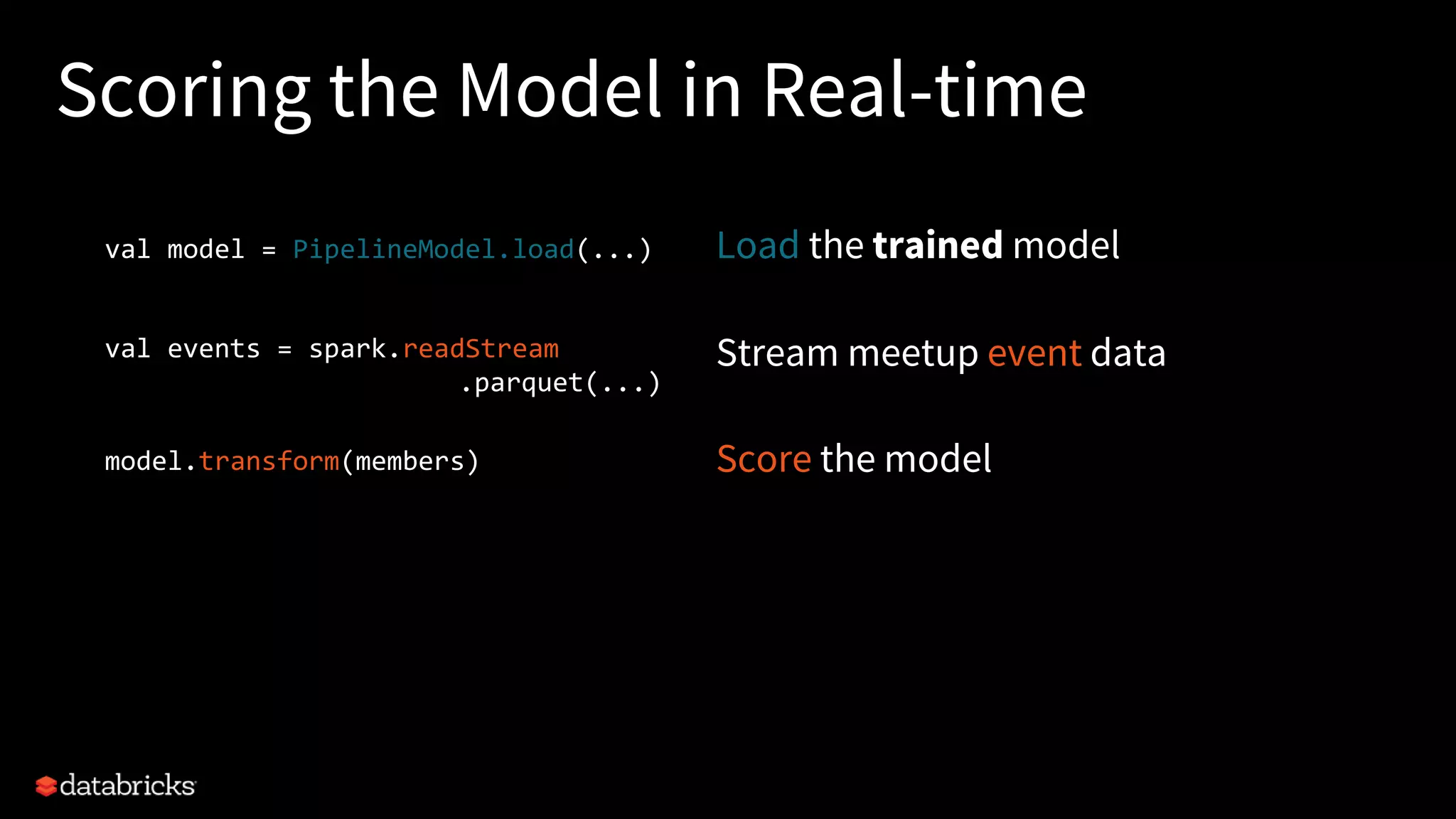

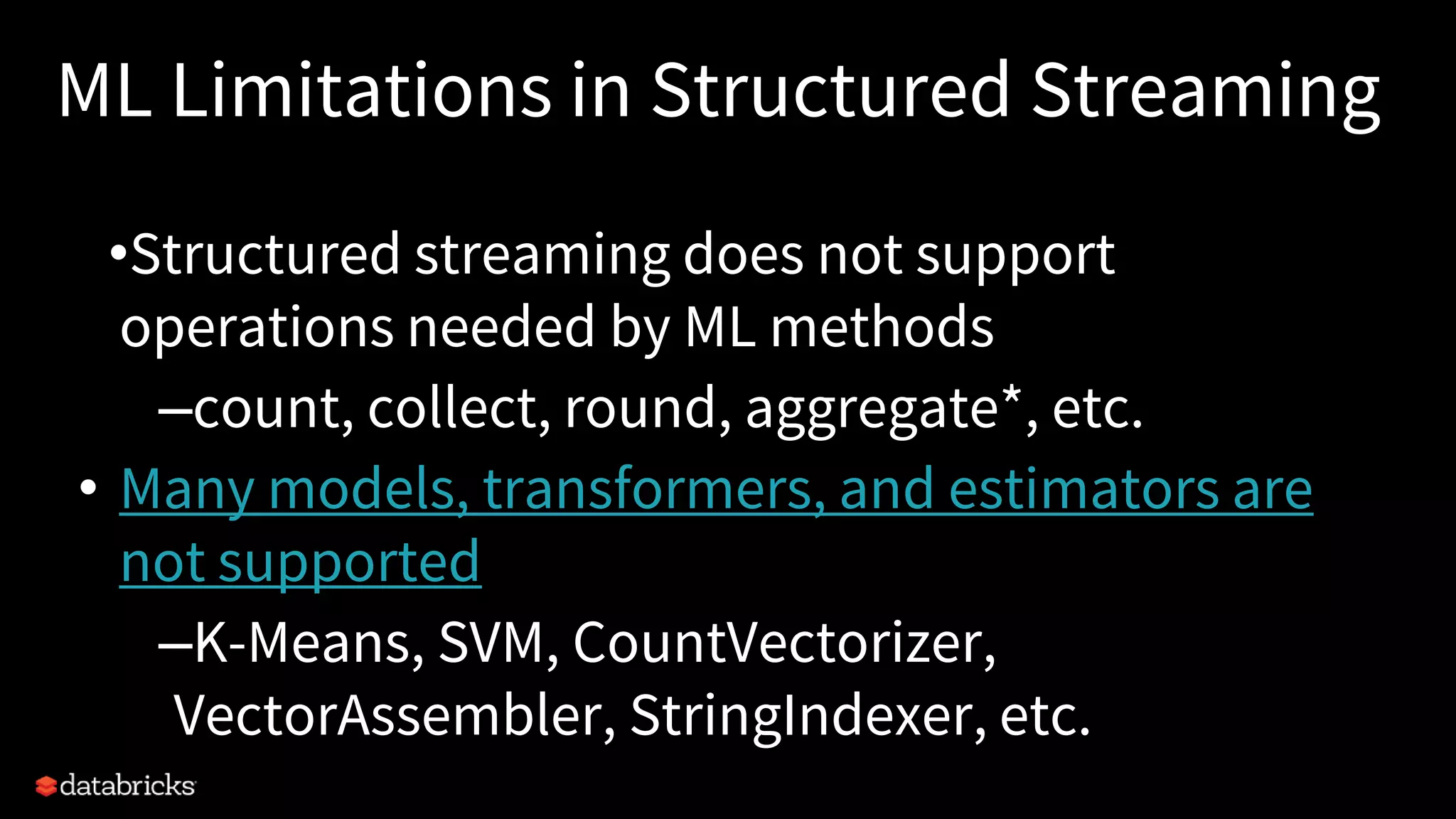

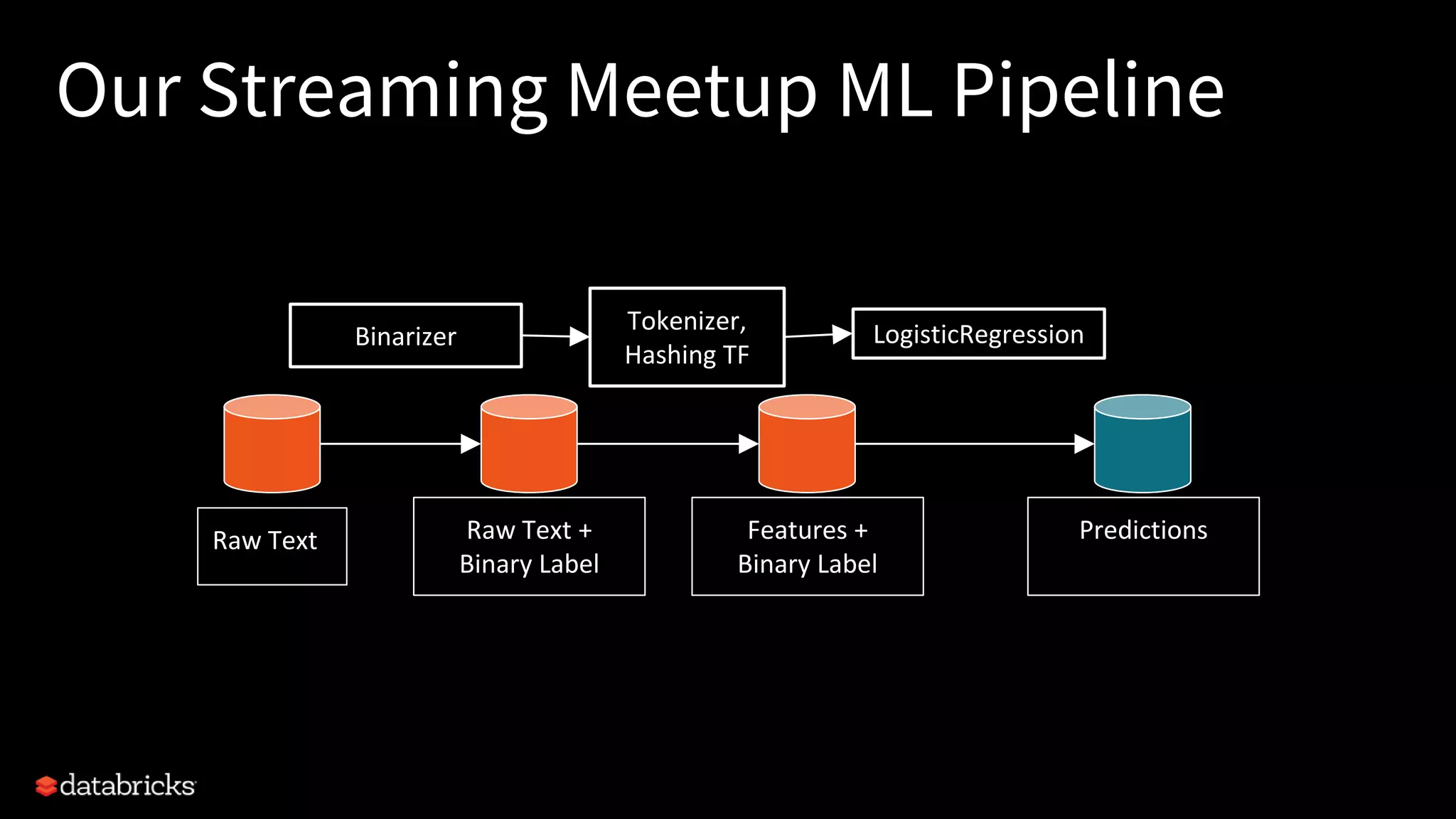

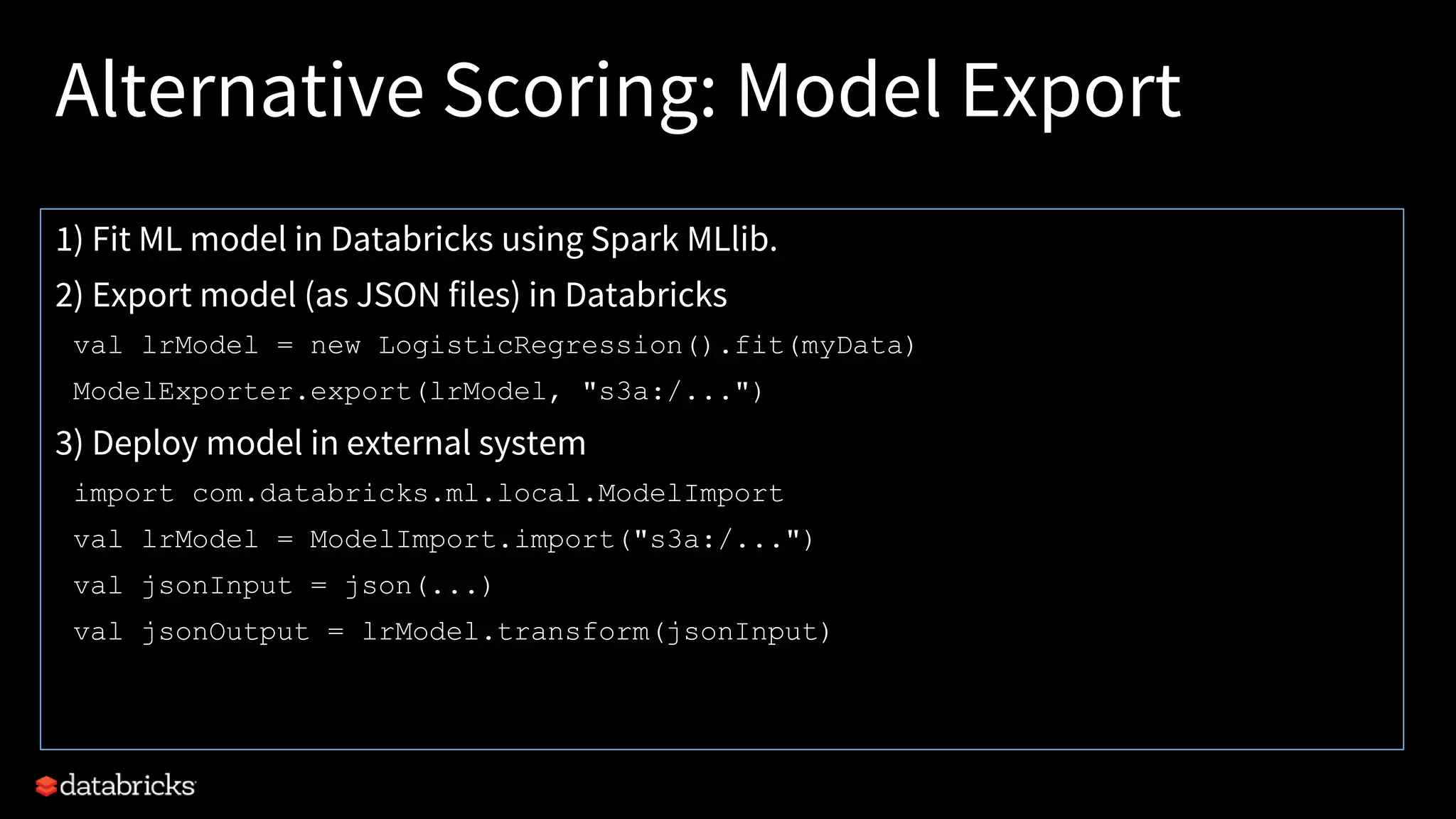

This document describes how to implement real-time machine learning analytics with Structured Streaming and Kinesis Firehose, using Meetup data to predict RSVPs. It outlines the challenges of building scalable analytics solutions, introduces the components of the Meetup streaming API, and walks through the ML pipeline that trains models and scores them against real-time data. It also covers the limits of Structured Streaming's support for certain ML operations and the process for exporting a model and integrating it.
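
The train, export, and score flow summarized above can be illustrated with a minimal, library-free sketch. It is not the pipeline from the slides: the record fields (`rsvp_id`, `guests`, `is_weekend`), the toy training rows, and the JSON export format are all assumptions made for illustration, and a hand-written logistic regression stands in for whatever model the original pipeline uses.

```python
import json
import math


def train_logistic(rows, labels, epochs=200, lr=0.5):
    """Fit a tiny logistic regression with batch gradient descent."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            z = bias + sum(w * xi for w, xi in zip(weights, x))
            err = 1.0 / (1.0 + math.exp(-z)) - y
            for i, xi in enumerate(x):
                grad_w[i] += err * xi
            grad_b += err
        weights = [w - lr * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / len(rows)
    return {"weights": weights, "bias": bias}


def export_model(model):
    """Serialize the trained parameters (hypothetical JSON export format)."""
    return json.dumps(model)


def load_model(payload):
    """Rebuild the model inside the scoring process."""
    return json.loads(payload)


def score(model, features):
    """Return the predicted probability of a 'yes' RSVP."""
    z = model["bias"] + sum(w * x for w, x in zip(model["weights"], features))
    return 1.0 / (1.0 + math.exp(-z))


def score_stream(model, records):
    """Score records one at a time, as they would arrive from a stream."""
    for record in records:
        features = [record["guests"], record["is_weekend"]]  # assumed fields
        yield record["rsvp_id"], score(model, features)


# Offline step: train on toy historical rows of [guests, is_weekend].
history = [[0, 0], [1, 0], [0, 1], [2, 1], [3, 1], [0, 0]]
responded_yes = [0, 0, 1, 1, 1, 0]
payload = export_model(train_logistic(history, responded_yes))

# Online step: load the exported model and score incoming records.
incoming = [
    {"rsvp_id": 101, "guests": 0, "is_weekend": 1},
    {"rsvp_id": 102, "guests": 0, "is_weekend": 0},
]
results = list(score_stream(load_model(payload), incoming))
```

Separating training from scoring this way means the streaming side only needs the exported parameters and a pure scoring function, which is one common way to work around ML operations that a streaming engine does not support directly.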