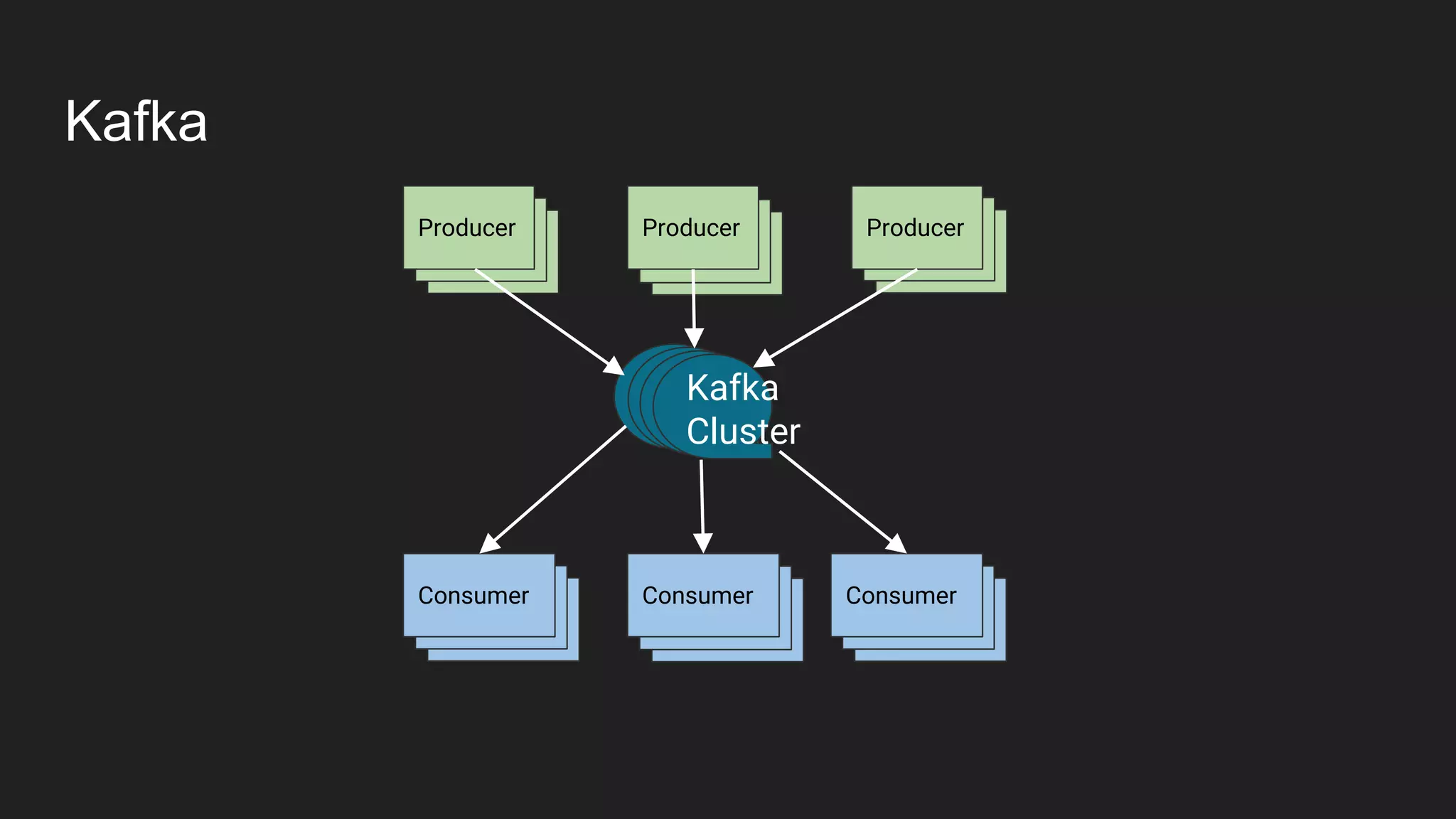

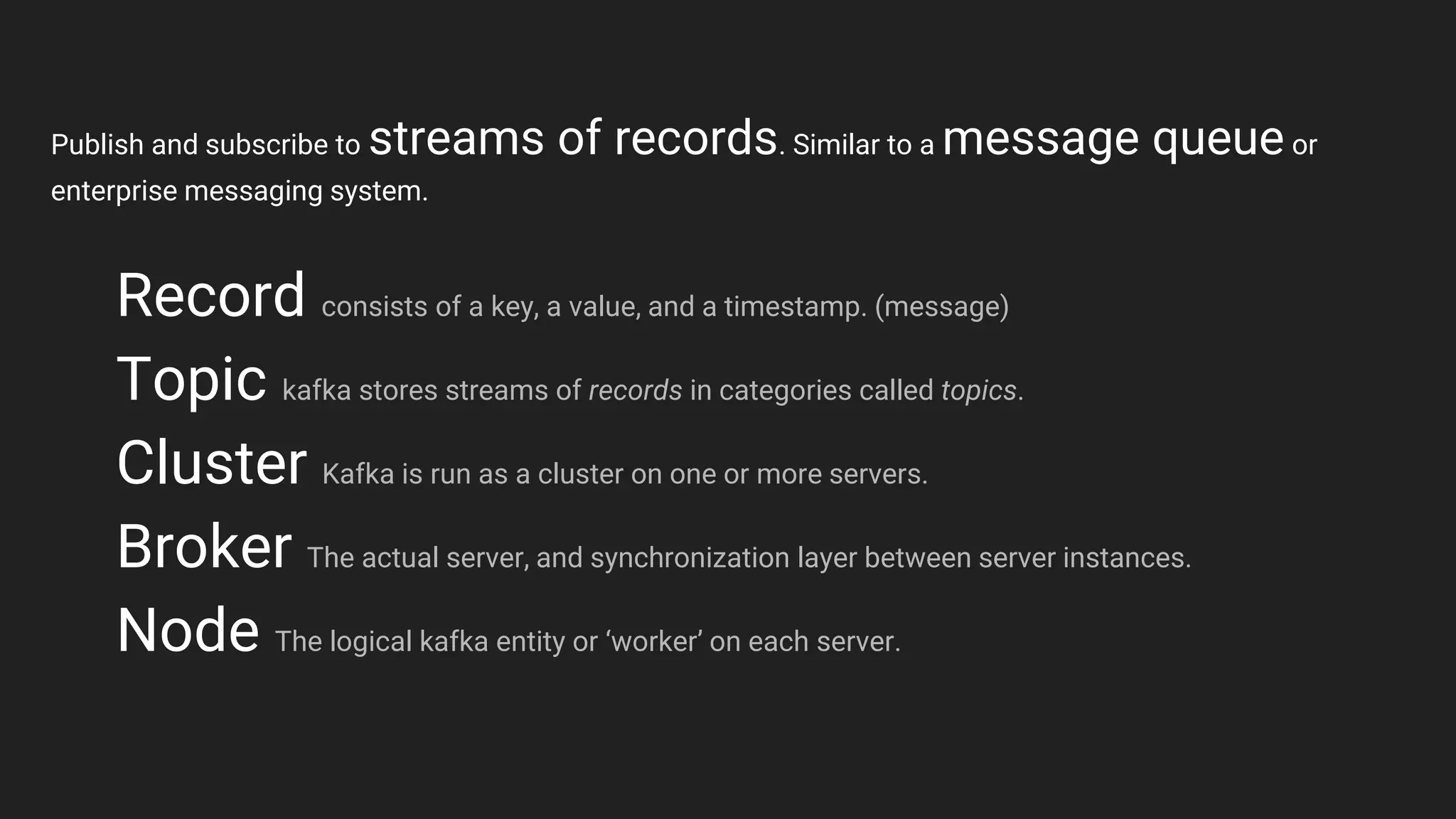

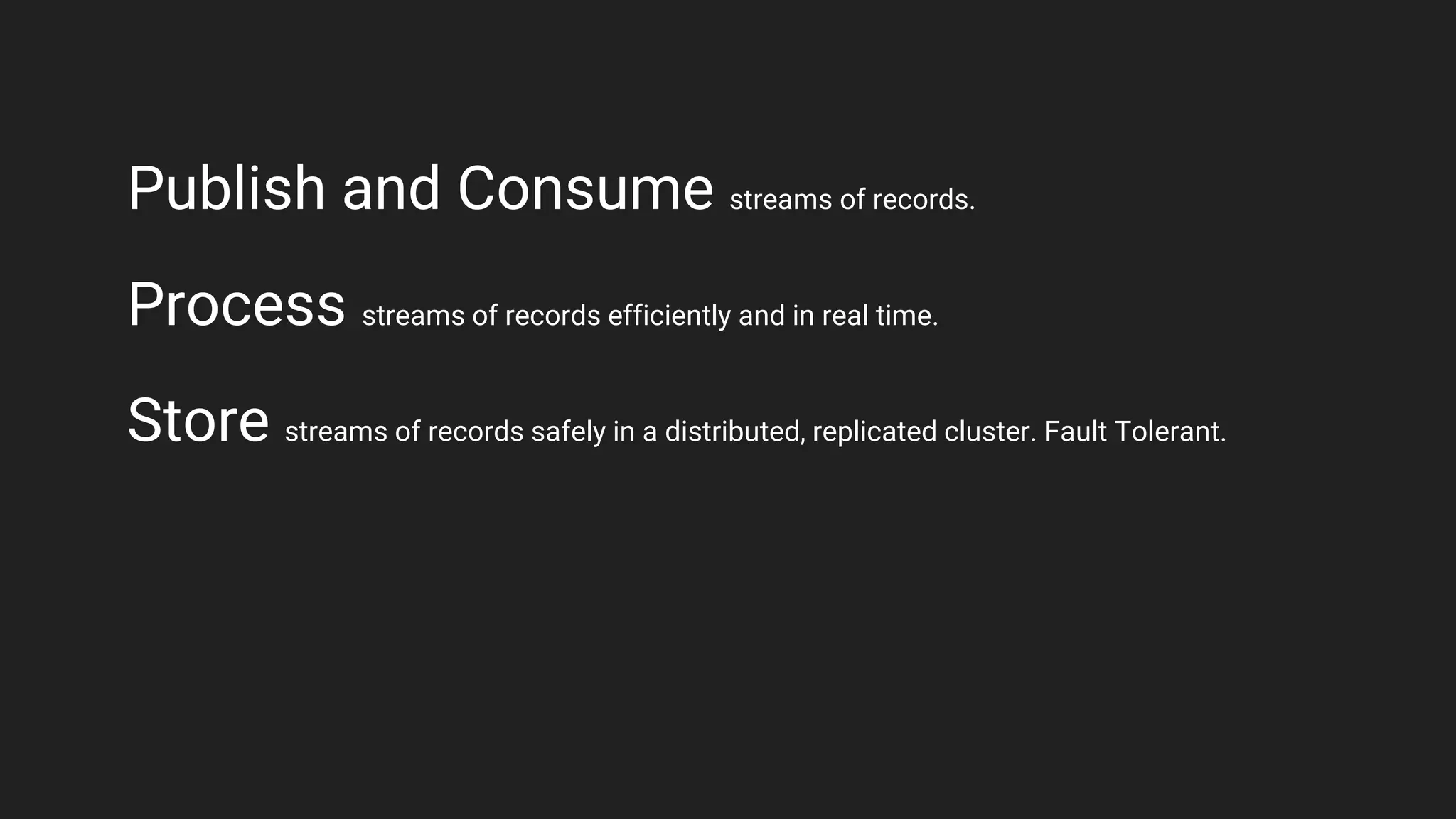

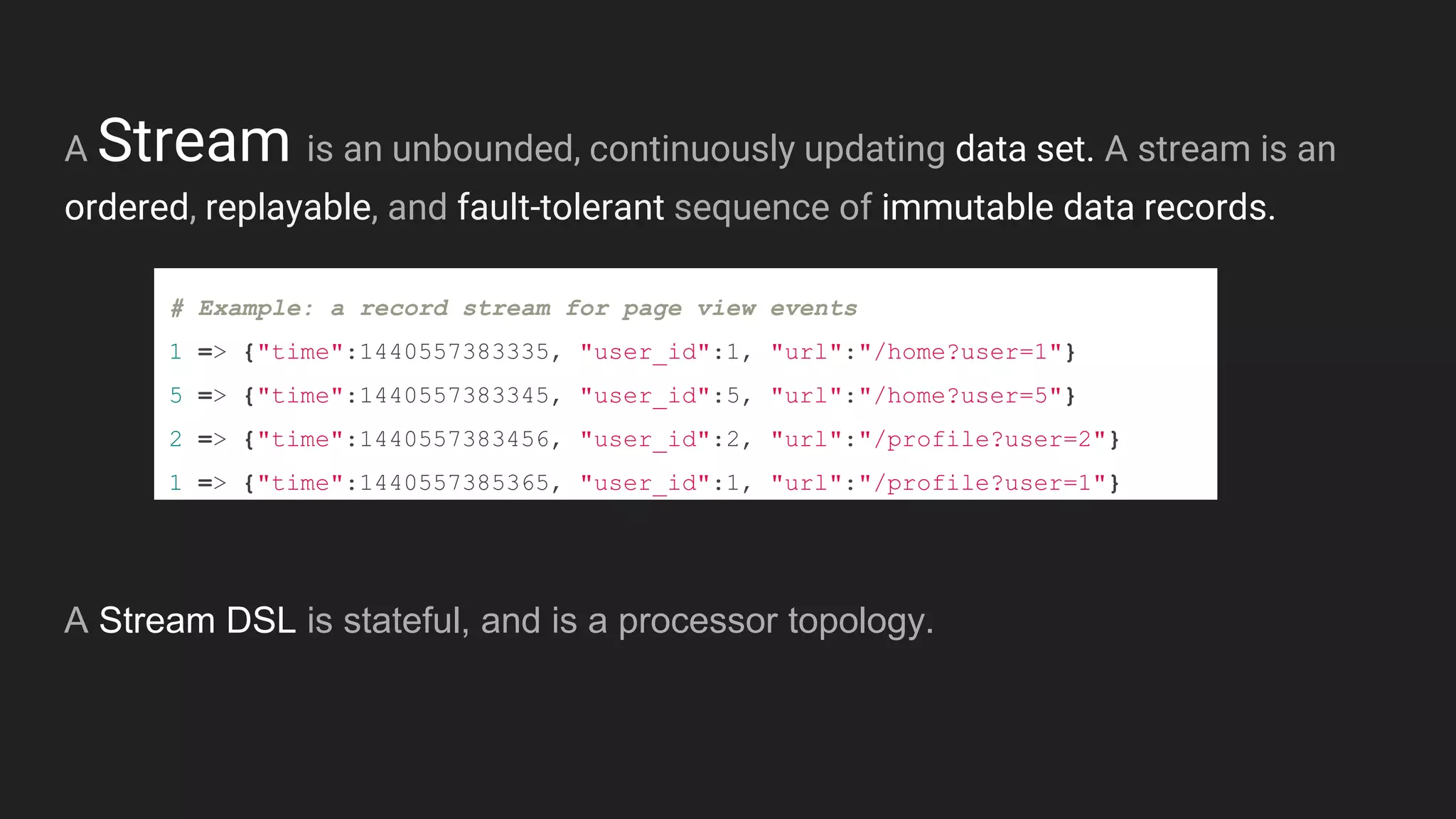

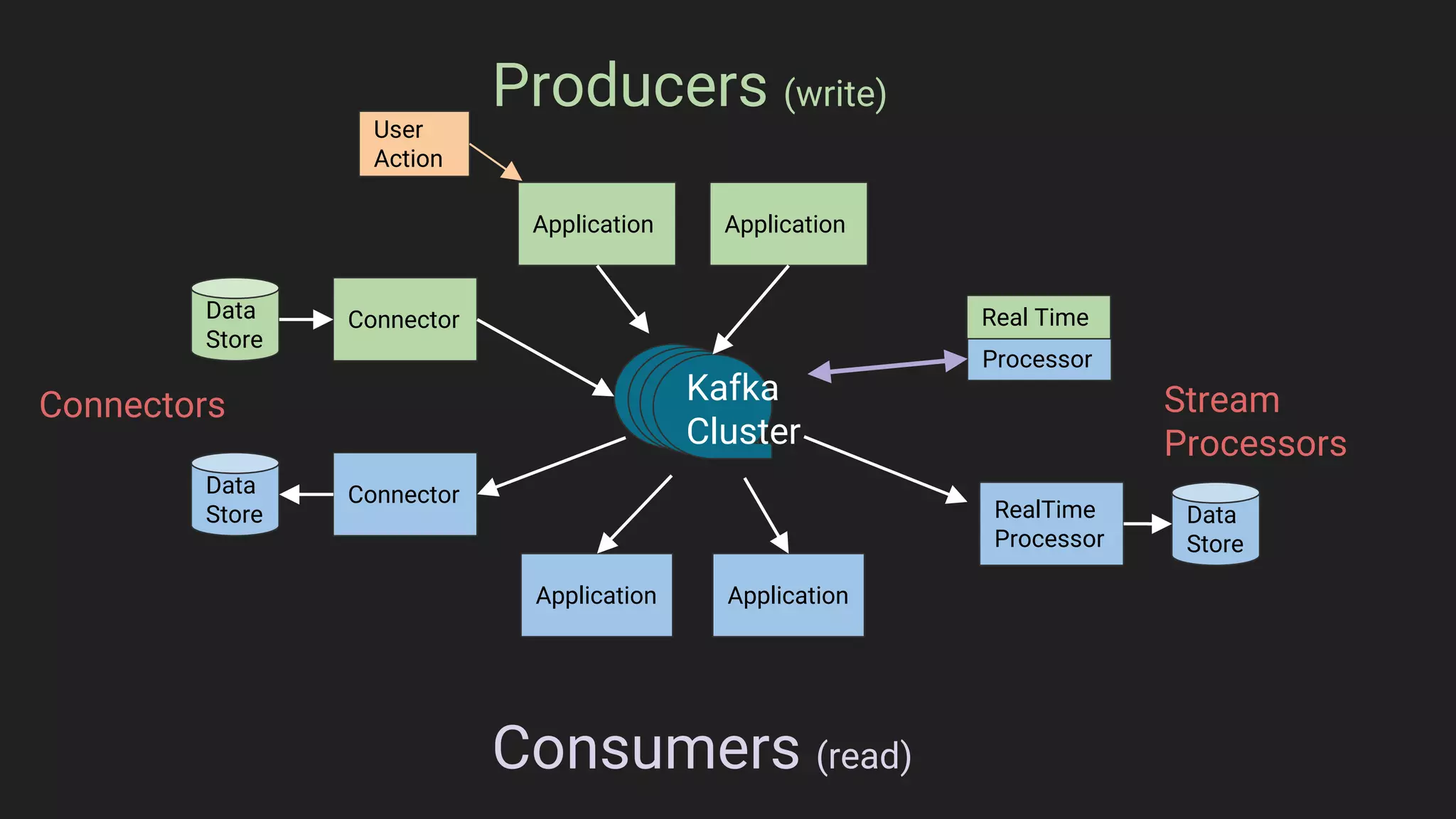

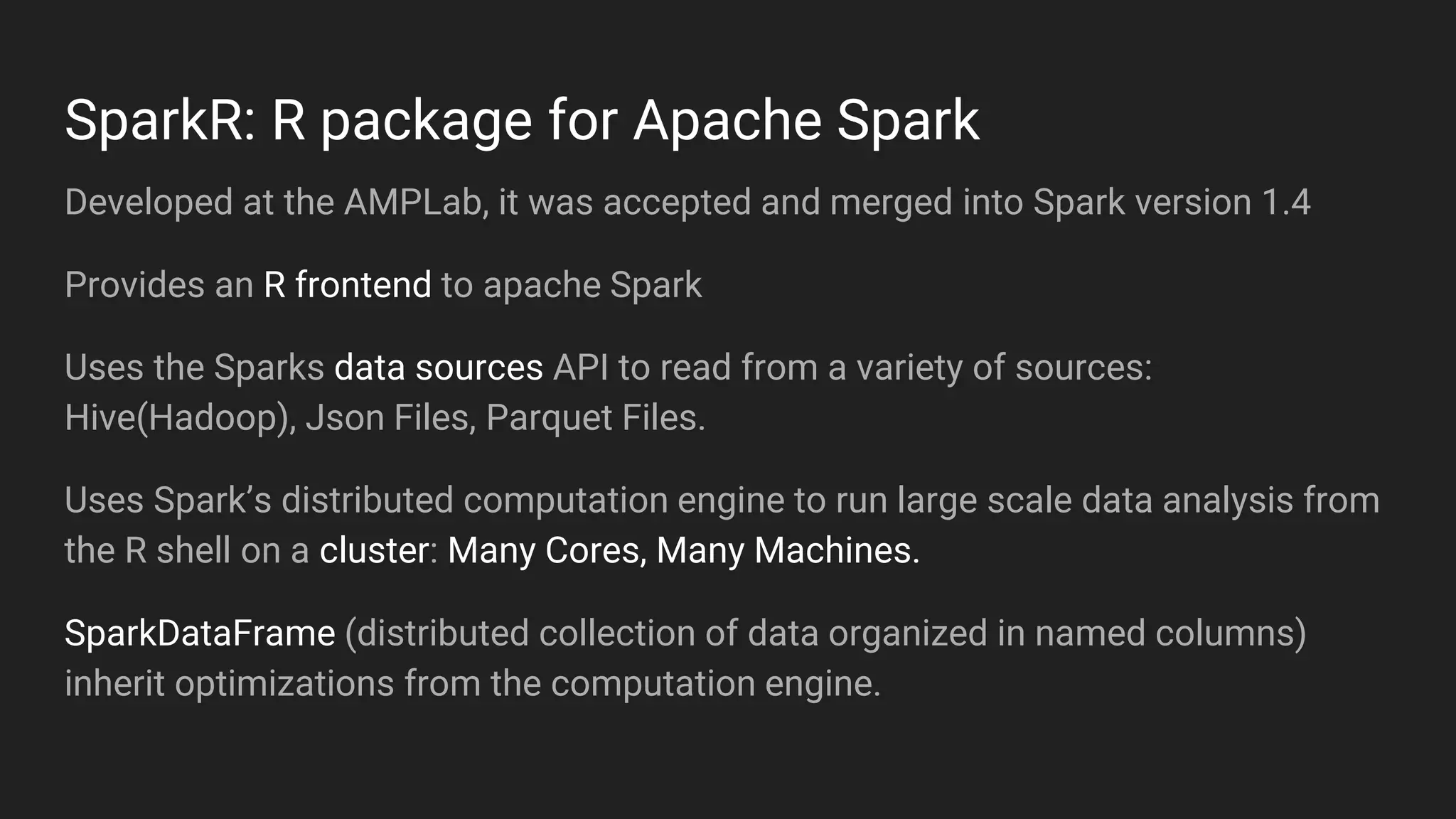

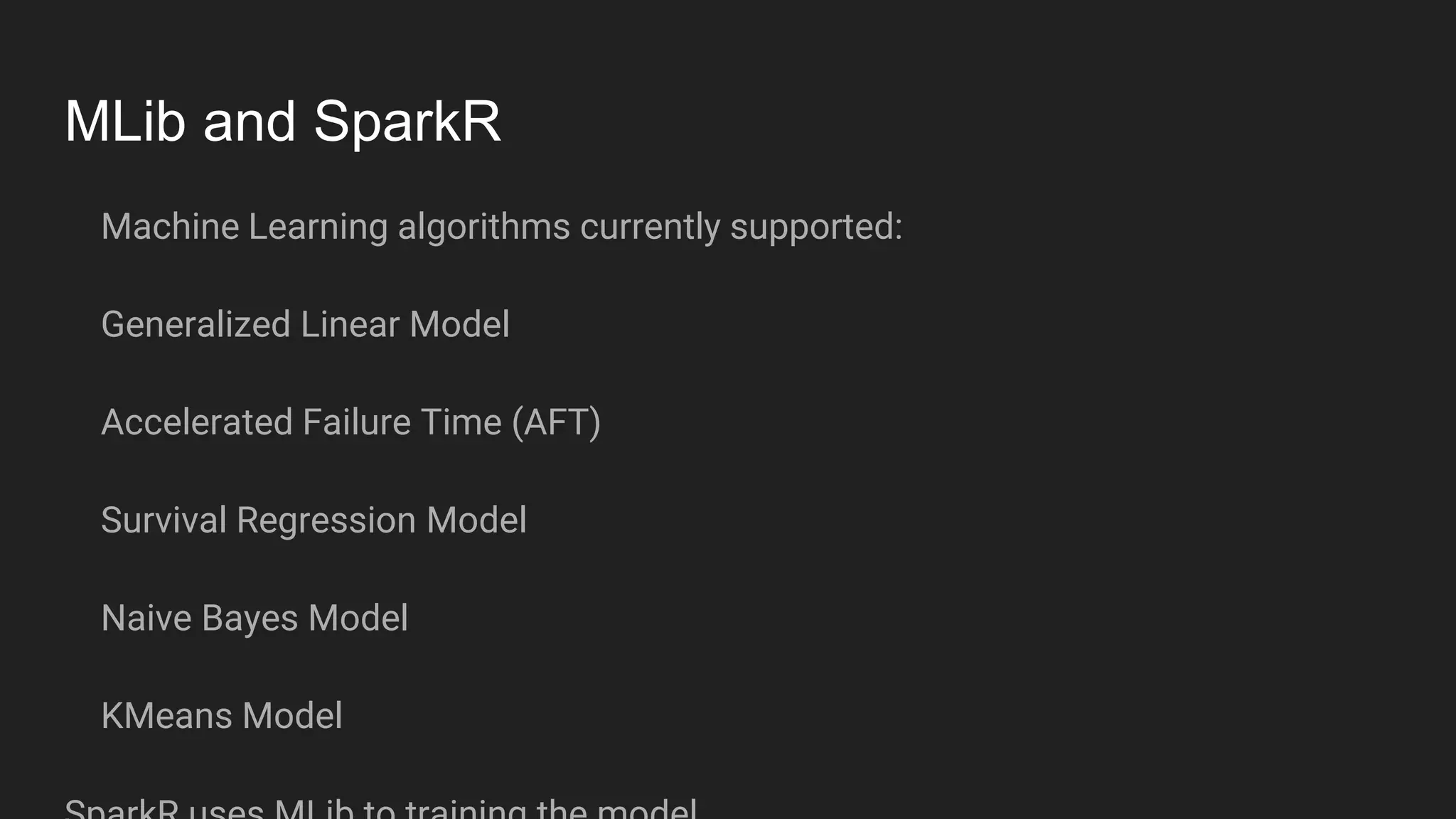

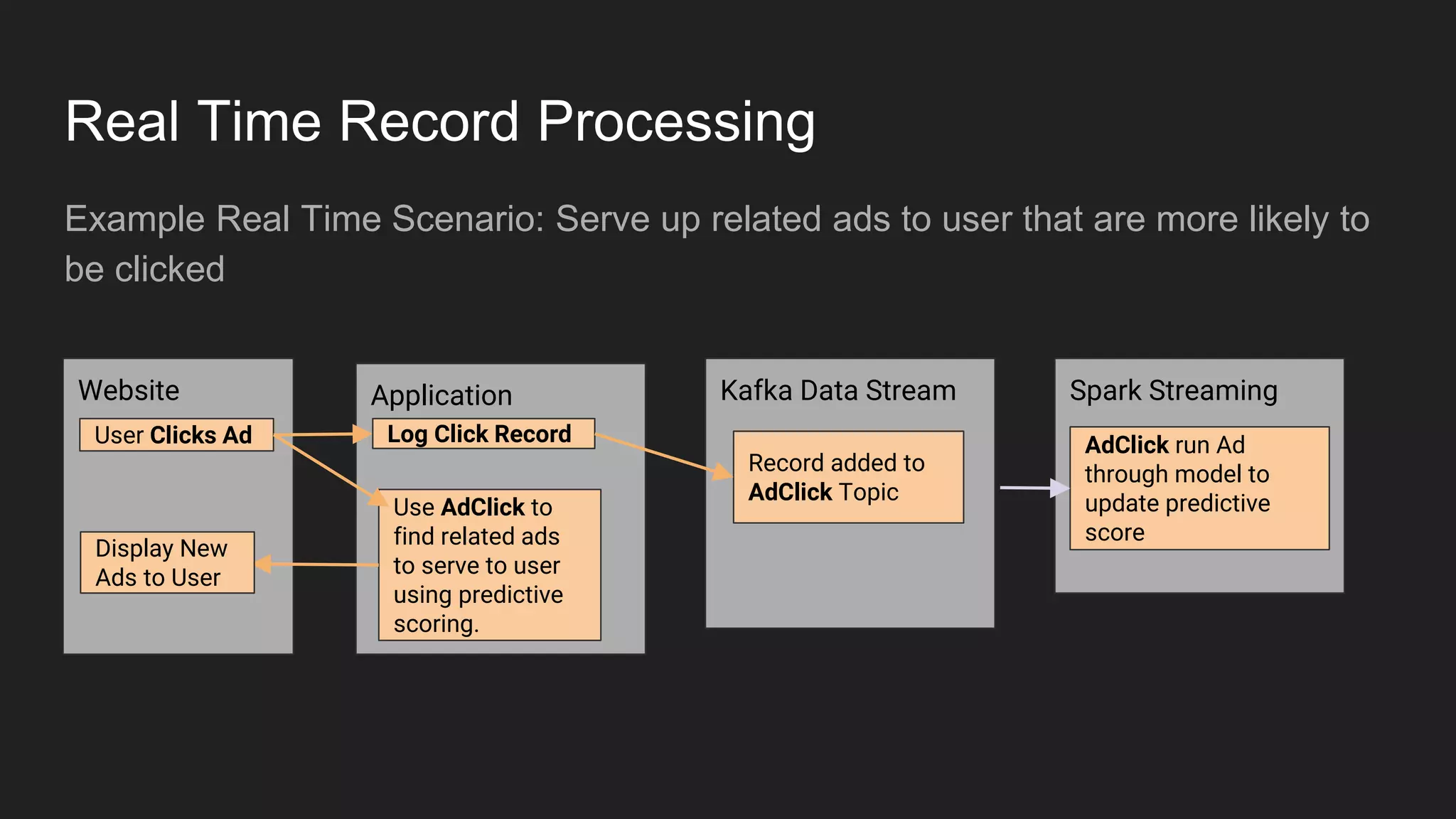

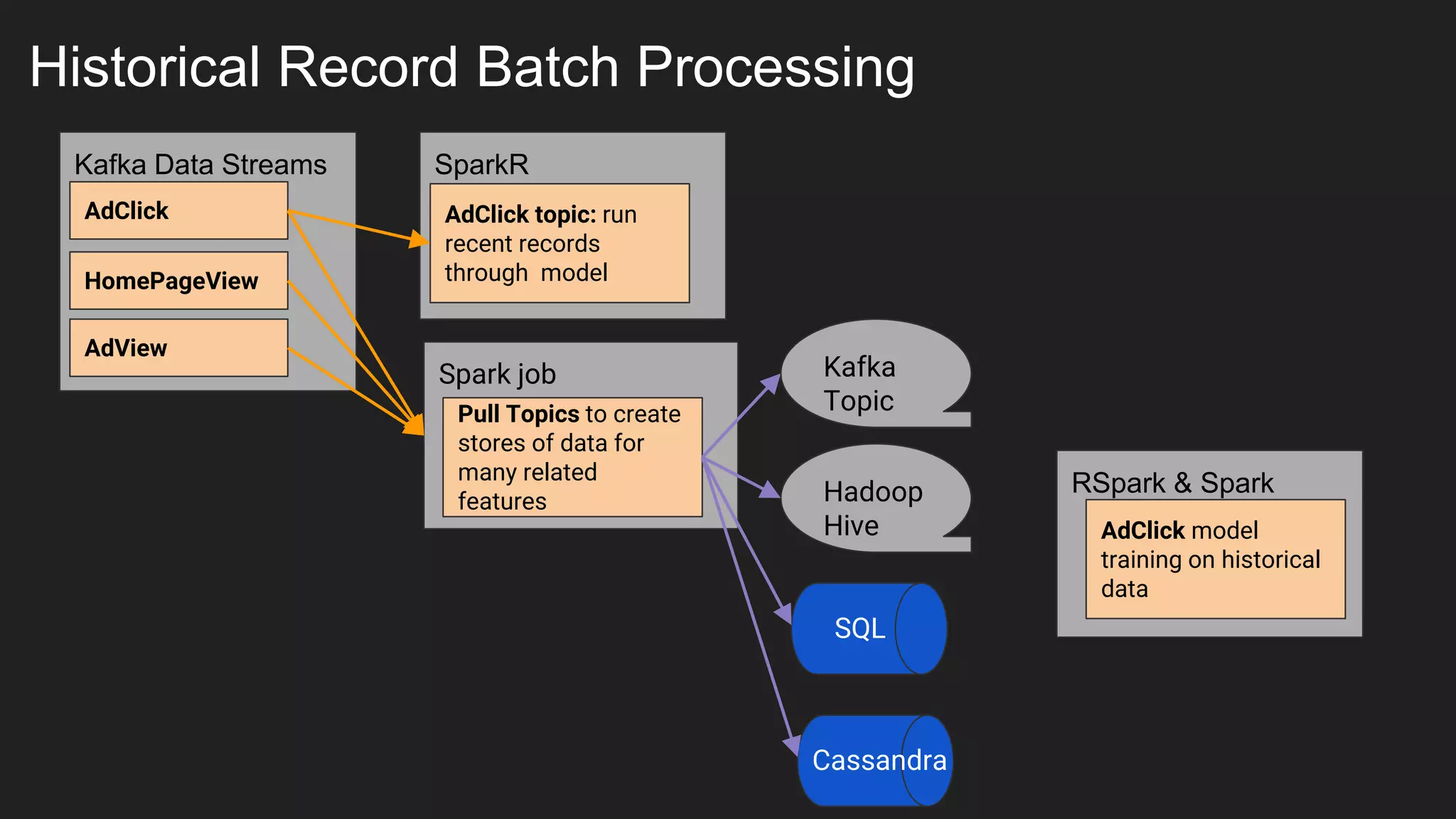

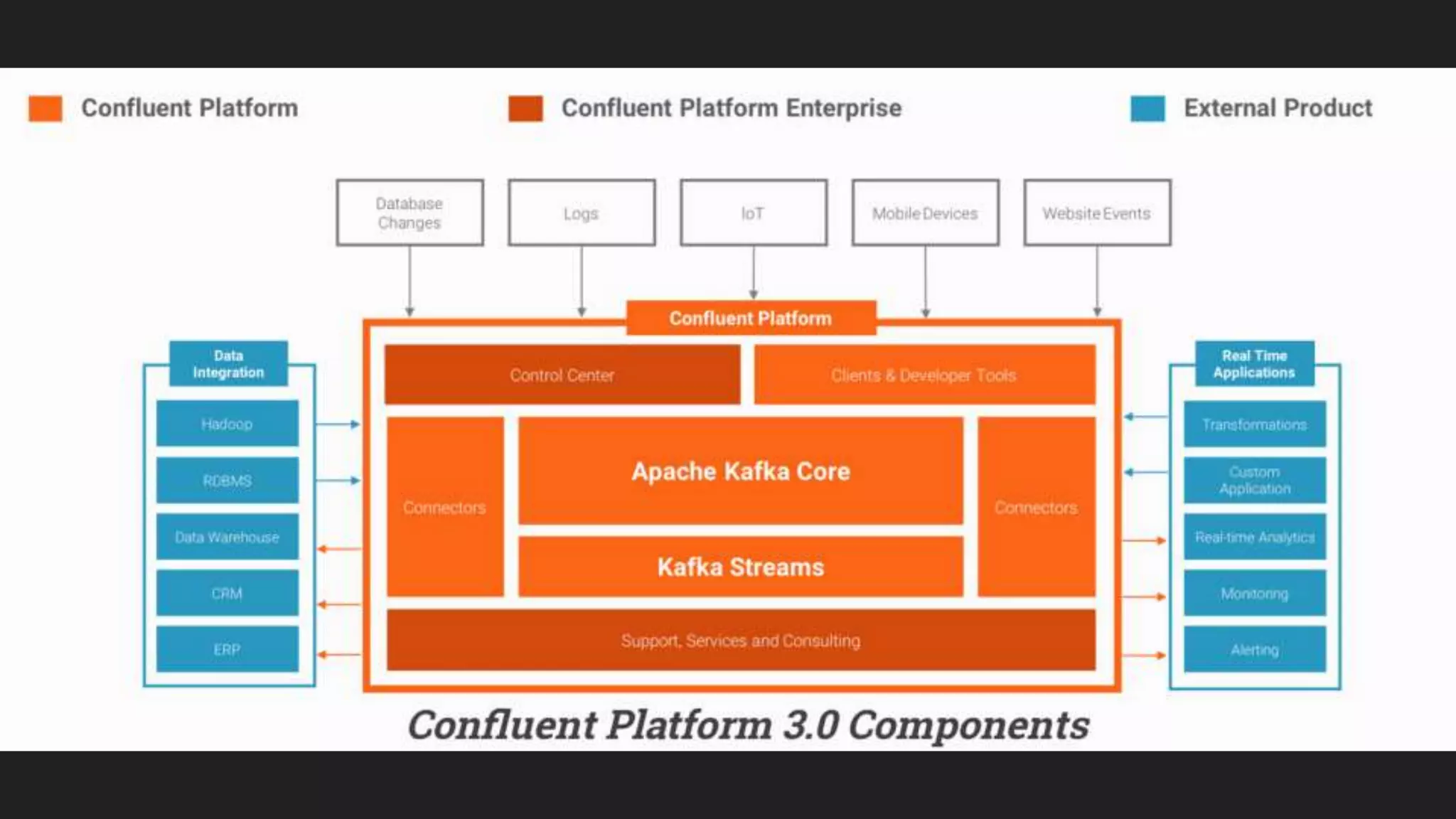

Jennifer Rawlins, an experienced software engineer, presents real-time streaming with Kafka. She outlines what messaging systems do and focuses on Kafka's role in building data pipelines, supporting real-time processing, and scaling. The presentation also covers using Spark and R to analyze, and apply machine learning to, data streamed through Kafka.