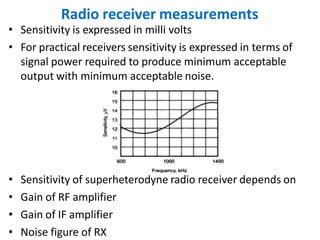

1. The important characteristics of a superheterodyne radio receiver are sensitivity, selectivity, and fidelity. Sensitivity is the ability to amplify weak signals; it is usually specified as the minimum input signal voltage, in microvolts or millivolts, needed to produce a standard output. Selectivity is the ability to reject unwanted signals and depends on the receiving frequency and the response of the IF section. Fidelity is the ability to reproduce all modulating frequencies equally.

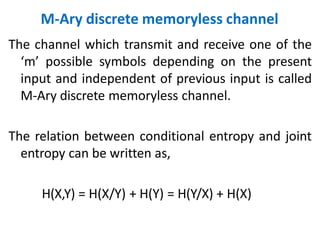

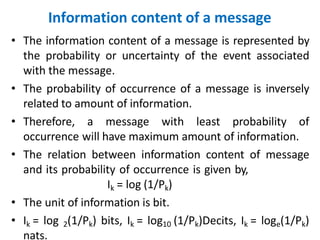

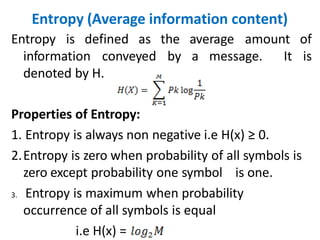

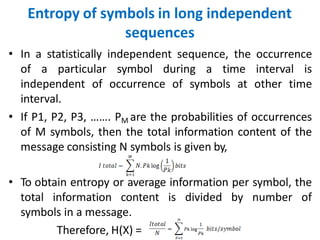

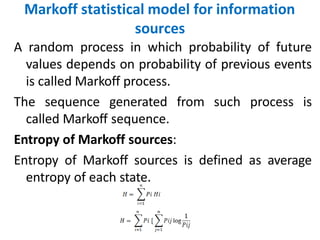

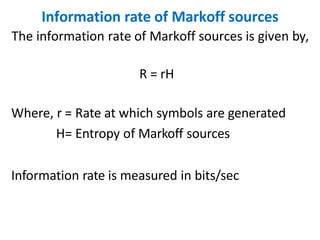

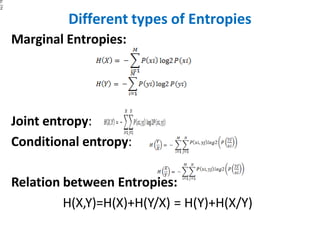

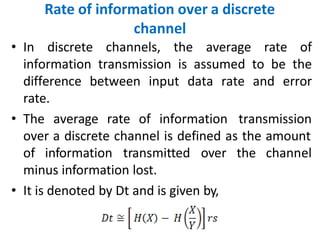

2. Information is defined as a sequence of symbols carrying meaning. The information content of a message depends on its probability: the less probable a message, the more information it conveys. Entropy is the average information conveyed per message and is highest when all symbol probabilities are equal. The mutual information between the input and output of a channel is the reduction in uncertainty about the input that results from observing the output.
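The relation between probability, information content, and entropy can be sketched numerically. The snippet below is only an illustration: it assumes information is measured in bits (base-2 logarithm), and the function names `self_information` and `entropy` are chosen here for the example, not taken from the slides.

```python
import math

def self_information(p):
    """Information content, in bits, of a message that occurs with probability p."""
    return -math.log2(p)

def entropy(probs):
    """Average information per symbol, in bits.

    Symbols with zero probability are skipped because they contribute nothing.
    """
    return sum(p * self_information(p) for p in probs if p > 0)

# A less probable message carries more information.
print(self_information(0.5))    # 1.0 bit
print(self_information(0.125))  # 3.0 bits

# Entropy is largest when all symbols are equally likely.
uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.5, 0.25, 0.125, 0.125]
print(entropy(uniform))  # 2.0 bits per symbol
print(entropy(skewed))   # 1.75 bits per symbol
```

With four symbols, the equal-probability source reaches the maximum of 2 bits per symbol, while the skewed source averages less.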

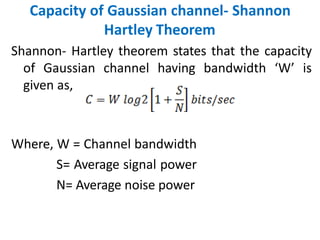

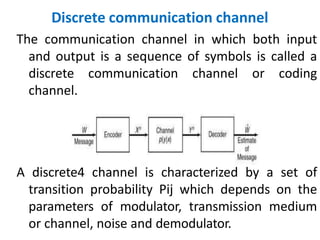

3. The capacity of

![Capacity of discrete memoryless channel](https://image.slidesharecdn.com/ppt-7-230829145617-26e3a9c4/85/Radio-receiver-and-information-coding-pptx-18-320.jpg)

The maximum allowable rate of information that can be transmitted over a discrete channel is called the capacity of the memoryless channel. When the channel matches the source, the maximum rate of transmission takes place. Therefore, the channel capacity is

$$C = \max\, I(X;Y) = \max\, \left[ H(X) - H(X \mid Y) \right]$$
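As a rough illustration of the capacity definition, the sketch below searches over input distributions of a binary-input channel and keeps the one that maximizes the mutual information. Several things here are assumptions made for the example rather than content from the slides: the binary symmetric channel with crossover probability 0.1, the simple grid search, and the function names. The code computes I(X;Y) as H(Y) - H(Y|X), which is equal to H(X) - H(X|Y).

```python
import math

def h(probs):
    """Entropy, in bits, of a probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(px, channel):
    """I(X;Y) in bits, where channel[i][j] = P(Y = j | X = i)."""
    n_out = len(channel[0])
    # Output distribution P(Y = j) = sum_i P(X = i) * P(Y = j | X = i)
    py = [sum(px[i] * channel[i][j] for i in range(len(px))) for j in range(n_out)]
    # Conditional entropy H(Y|X) = sum_i P(X = i) * H(row i of the channel)
    h_y_given_x = sum(px[i] * h(channel[i]) for i in range(len(px)))
    return h(py) - h_y_given_x

def capacity_binary_input(channel, steps=1000):
    """Grid search over P(X = 0); returns (capacity estimate, best P(X = 0))."""
    candidates = (k / steps for k in range(steps + 1))
    return max((mutual_information([p, 1 - p], channel), p) for p in candidates)

# Assumed example: binary symmetric channel that flips a bit with probability 0.1.
bsc = [[0.9, 0.1],
       [0.1, 0.9]]
capacity, best_p = capacity_binary_input(bsc)
print(round(capacity, 3), best_p)  # about 0.531 bits per channel use, at P(X = 0) = 0.5
```

For this symmetric channel the maximum occurs at equal input probabilities, and the result agrees with the closed form 1 - H(0.1). A grid search is used only for clarity; it does not scale to channels with many input symbols.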