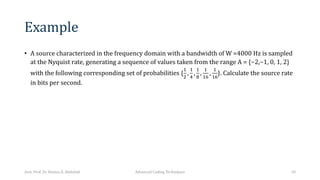

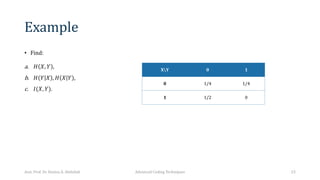

This document introduces coding theory and information theory. It identifies source coding and channel coding as the two main fields of coding theory: source coding aims to represent source symbols efficiently, while channel coding adds error detection and correction capability for transmission. It then covers the information measures entropy, joint entropy, conditional entropy, and mutual information, with worked examples of entropy calculations for different probability distributions. Shannon's definition of information, the chain rule, and mutual information are also summarized.

![Entropy

• The entropy of a discrete random variable, X, is defined by:

$$H(X) = -E[\log_2 p(X)] = -\sum_{x \in X} p(x) \log_2 p(x)$$

$$H(X) = \sum_{x \in X} p(x) \log_2 \frac{1}{p(x)}$$

• For discrete random variables $H(X) \geq 0$

• The entropy is the average information of the random variable X:

$$H(X) = E[I(X)]$$

• When base 2 is used, the entropy is measured in bits per symbol.

Asst. Prof. Dr. Hamsa A. Abdullah Advanced Coding Techniques 6](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-6-320.jpg)
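The entropy definition above can be sketched in a few lines of Python. This is a minimal illustration rather than anything from the lecture: the function name `entropy` and the three example distributions are assumptions chosen to show the uniform, skewed, and deterministic cases.

```python
import math

def entropy(probs, base=2.0):
    """Shannon entropy of a discrete distribution (bits when base is 2)."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Terms with p = 0 contribute nothing, by the convention 0 * log 0 = 0.
    return sum(p * math.log(1.0 / p, base) for p in probs if p > 0)

# Hypothetical example distributions.
uniform4 = entropy([0.25, 0.25, 0.25, 0.25])   # four equally likely symbols
skewed = entropy([0.5, 0.25, 0.125, 0.125])    # a less uniform source
certain = entropy([1.0, 0.0])                  # a deterministic source

print(uniform4, skewed, certain)
```

The uniform source gives the largest value for four symbols, and the deterministic source gives zero, in line with $H(X) \geq 0$.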

![Chain rule

• We know that $p(x, y) = p(x)\,p(y|x)$. Taking logarithms and then expectations of both sides gives:

$$E[\log p(x, y)] = E[\log p(x)] + E[\log p(y|x)]$$

• Multiplying by $-1$ gives the chain rule:

$$H(X, Y) = H(X) + H(Y|X)$$

• Similarly:

$$H(X, Y) = H(Y) + H(X|Y)$$

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-20-320.jpg)
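A quick numerical check of the chain rule is sketched below. It computes $H(Y|X)$ directly from its definition (the average of the row entropies) rather than by subtraction, so the comparison is not circular. The joint distribution and the helper names `H` and `conditional_entropy` are hypothetical, chosen only for illustration.

```python
import math

def H(probs):
    """Entropy in bits of an iterable of probabilities (zeros are skipped)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def conditional_entropy(joint):
    """H(Y|X) from the definition: sum over x of p(x) * H(Y | X = x).

    joint[i][j] holds p(x_i, y_j).
    """
    total = 0.0
    for row in joint:
        px = sum(row)
        if px > 0:
            total += px * H(p / px for p in row)
    return total

# Hypothetical joint distribution, chosen only for illustration.
joint = [[0.1, 0.3],
         [0.4, 0.2]]

h_xy = H(p for row in joint for p in row)          # joint entropy H(X,Y)
h_x = H(sum(row) for row in joint)                 # marginal entropy H(X)
h_y_given_x = conditional_entropy(joint)           # H(Y|X) from the definition
chain_rule_gap = abs(h_xy - (h_x + h_y_given_x))   # should be about 0

print(h_xy, h_x + h_y_given_x, chain_rule_gap)
```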

![Solution

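b. Since $H(X, Y) = H(X) + H(Y|X)$:

$$P(Y) = \left[\tfrac{3}{4}, \tfrac{1}{4}\right], \quad P(X) = \left[\tfrac{1}{2}, \tfrac{1}{2}\right]$$

$$H(Y) = \sum_y P(y) \log_2 \frac{1}{P(y)} = \frac{3}{4} \log_2 \frac{4}{3} + \frac{1}{4} \log_2 4 = 0.81$$

$$H(X) = \sum_x P(x) \log_2 \frac{1}{P(x)} = \frac{2}{2} \log_2 2 = 1$$

$$H(Y|X) = H(X, Y) - H(X) = 1.5 - 1 = 0.5$$

$$H(X|Y) = H(X, Y) - H(Y) = 1.5 - 0.81 = 0.69$$

Note that: $H(X|Y) \neq H(Y|X)$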

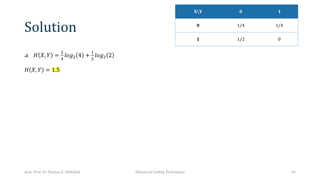

| X \ Y | 0 | 1 | P(X) |
|---|---|---|---|
| 0 | 1/4 | 1/4 | 1/2 |
| 1 | 1/2 | 0 | 1/2 |
| P(Y) | 3/4 | 1/4 | |

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-25-320.jpg)
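The numbers in this solution can be reproduced from the joint probability table alone. The sketch below follows the same route as the slide (marginals first, then conditional entropies by subtraction); the variable names are assumptions for illustration.

```python
import math

def H(probs):
    """Entropy in bits of an iterable of probabilities (zeros are skipped)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Joint probabilities from the table: rows are X = 0, 1; columns are Y = 0, 1.
joint = [[0.25, 0.25],
         [0.50, 0.00]]

p_x = [sum(row) for row in joint]           # row sums give P(X)
p_y = [sum(col) for col in zip(*joint)]     # column sums give P(Y)

h_x = H(p_x)
h_y = H(p_y)
h_xy = H(p for row in joint for p in row)
h_y_given_x = h_xy - h_x                    # chain rule, solved for H(Y|X)
h_x_given_y = h_xy - h_y                    # chain rule, solved for H(X|Y)

print(round(h_x, 2), round(h_y, 2), round(h_xy, 2),
      round(h_y_given_x, 2), round(h_x_given_y, 2))
```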

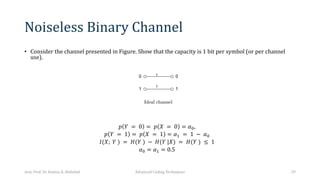

![Channel Capacity

• The channel capacity of a discrete memoryless channel is defined as:

$$C = \max_{p(x)} I(X; Y) = \max_{p(x)} [H(Y) - H(Y|X)]$$

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-28-320.jpg)

![Binary Symmetric Channel(BSC)

• The probability matrix for the BSC is equal to:

$$P(Y|X) = \begin{bmatrix} 1 - p & p \\ p & 1 - p \end{bmatrix}$$

• Channel Capacity

$$C_{BSC} = 1 - H(p, 1 - p)$$

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-31-320.jpg)
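The BSC capacity formula is easy to evaluate directly. In this sketch the function names `binary_entropy` and `bsc_capacity` and the sample crossover probabilities are assumptions for illustration.

```python
import math

def binary_entropy(p):
    """H(p, 1 - p) in bits, with H = 0 at p = 0 or p = 1."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(p):
    """Capacity of a BSC with crossover probability p, in bits per channel use."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return 1.0 - binary_entropy(p)

# Hypothetical crossover probabilities.
for p in (0.0, 0.1, 0.5):
    print(f"p = {p}: C = {bsc_capacity(p):.4f} bits per channel use")
```

A noiseless channel (p = 0) carries one bit per use, while p = 0.5 makes the output independent of the input and the capacity drops to zero.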

![Binary Erasure Channel (BEC)

• For this channel, $0 \leq p \leq 1/2$, where $p$ is the erasure probability, and the channel model has two inputs and three outputs.

• When the received values are unreliable, or if blocks are detected to contain errors, then erasures are declared, indicated by the symbol ‘?’. The probability matrix of the BEC is the following:

$$P(Y|X) = \begin{bmatrix} 1 - p & p & 0 \\ 0 & p & 1 - p \end{bmatrix}$$

• Channel Capacity

$$C_{BEC} = 1 - p \ \text{bits per channel use}$$

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-33-320.jpg)
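The BEC result can be checked against the definition of capacity as the maximum of the mutual information over the input distribution. The sketch below uses a coarse grid search as a stand-in for the maximisation; the erasure probability, the grid resolution, and the helper names are all assumptions for illustration, and the output order 0, '?', 1 is taken from the matrix above.

```python
import math

def mutual_information(p_x, channel):
    """I(X;Y) in bits for input distribution p_x and rows channel[i][j] = P(y_j | x_i)."""
    n_out = len(channel[0])
    p_y = [sum(p_x[i] * channel[i][j] for i in range(len(p_x))) for j in range(n_out)]
    info = 0.0
    for i, px in enumerate(p_x):
        for j in range(n_out):
            joint = px * channel[i][j]
            if joint > 0:   # skip zero terms (0 * log 0 = 0)
                info += joint * math.log2(channel[i][j] / p_y[j])
    return info

def bec_matrix(p):
    """BEC transition matrix; output order is 0, '?', 1."""
    return [[1 - p, p, 0.0],
            [0.0, p, 1 - p]]

p = 0.2  # hypothetical erasure probability
# Grid search over a = P(X = 0), approximating the maximisation over p(x).
grid = [k / 1000 for k in range(1001)]
best_a = max(grid, key=lambda a: mutual_information([a, 1 - a], bec_matrix(p)))
capacity = mutual_information([best_a, 1 - best_a], bec_matrix(p))

print(best_a, capacity, 1 - p)
```

For this channel the search settles on equally likely inputs, and the resulting mutual information agrees with $1 - p$.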

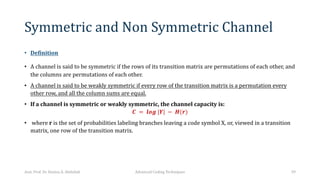

![Non Symmetric Channel

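$$P(Y|X) = \begin{bmatrix} P_{11} & P_{12} \\ P_{21} & P_{22} \end{bmatrix}$$

$$[P][Q] = -[H]$$

$$\begin{bmatrix} P_{11} & P_{12} \\ P_{21} & P_{22} \end{bmatrix} \begin{bmatrix} Q_1 \\ Q_2 \end{bmatrix} = \begin{bmatrix} P_{11} \log P_{11} + P_{12} \log P_{12} \\ P_{21} \log P_{21} + P_{22} \log P_{22} \end{bmatrix}$$

• where $Q_1$ and $Q_2$ are auxiliary variables. Then:

$$C = \log(2^{Q_1} + 2^{Q_2})$$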

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-44-320.jpg)

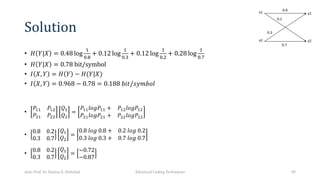

![Solution

• The transition Matrix:

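$$P(Y|X) = \begin{bmatrix} 0.8 & 0.2 \\ 0.3 & 0.7 \end{bmatrix}$$

$$P(X, Y) = P(Y|X) \cdot P(X)$$

$$P(X, Y) = \begin{bmatrix} 0.8 \times 0.6 & 0.2 \times 0.6 \\ 0.3 \times 0.4 & 0.7 \times 0.4 \end{bmatrix} = \begin{bmatrix} 0.48 & 0.12 \\ 0.12 & 0.28 \end{bmatrix}$$

$$P(Y = y) = \sum_x P(X, Y)$$

$$P(Y) = [0.6 \quad 0.4]$$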

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-46-320.jpg)
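The joint and output distributions in this solution follow mechanically from the transition matrix and the source probabilities. A minimal sketch, assuming $P(X) = [0.6, 0.4]$ as implied by the row multipliers on the slide (variable names are illustrative):

```python
# Transition matrix P(Y|X): row i is the output distribution given input x_i.
p_y_given_x = [[0.8, 0.2],
               [0.3, 0.7]]
p_x = [0.6, 0.4]

# Joint distribution: scale each row of P(Y|X) by the matching input probability.
joint = [[p_x[i] * p for p in row] for i, row in enumerate(p_y_given_x)]

# Output marginal: sum the joint distribution over x (column sums).
p_y = [sum(col) for col in zip(*joint)]

print([[round(v, 2) for v in row] for row in joint])
print([round(v, 2) for v in p_y])
```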

![Solution

$$C = \log(2^{Q_1} + 2^{Q_2})$$

$$C = \log(2^{-0.656} + 2^{-0.976})$$

$$C = \log(0.635 + 0.508)$$

$$C = \log 1.14 = 0.189$$

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-52-320.jpg)
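The auxiliary-variable calculation for a non-symmetric binary channel can be sketched as follows, using the transition matrix with rows (0.8, 0.2) and (0.3, 0.7) from this example. The function name `capacity_2x2` is hypothetical, base-2 logarithms are assumed throughout, and the sketch does not check that the implied optimal input probabilities are valid. Because it works at full precision, its result differs slightly from the rounded figures on the slide.

```python
import math

def capacity_2x2(P):
    """Capacity in bits of a binary-input, binary-output channel.

    Solves [P][Q] = -[H] for the auxiliary variables Q1, Q2 by Cramer's rule,
    then returns C = log2(2**Q1 + 2**Q2) together with (Q1, Q2).
    Assumes P is invertible.
    """
    (p11, p12), (p21, p22) = P
    # Right-hand side: sum_j P_ij * log2(P_ij) for each row (0 log 0 taken as 0).
    rhs = [sum(p * math.log2(p) for p in row if p > 0) for row in P]
    det = p11 * p22 - p12 * p21
    if abs(det) < 1e-12:
        raise ValueError("transition matrix is singular")
    q1 = (rhs[0] * p22 - p12 * rhs[1]) / det
    q2 = (p11 * rhs[1] - p21 * rhs[0]) / det
    return math.log2(2 ** q1 + 2 ** q2), (q1, q2)

C, (q1, q2) = capacity_2x2([[0.8, 0.2], [0.3, 0.7]])
print(f"Q1 = {q1:.3f}, Q2 = {q2:.3f}, C = {C:.3f} bits per channel use")
```

As a sanity check, applying the same function to a symmetric matrix with crossover probability p should reproduce $1 - H(p, 1 - p)$.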

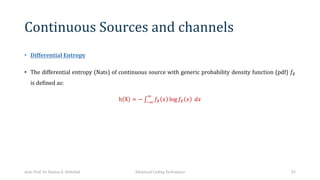

![Example

• The differential entropy is simply:

$$h(X) = E[-\log f(X)] = \log(b - a)$$

• Notice that the differential entropy can be negative or positive depending on whether $b - a$ is less than or greater than 1. In practice, because of this property, differential entropy is usually used as a means to determine mutual information and does not have much operational significance by itself.

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-56-320.jpg)
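The sign behaviour of $\log(b - a)$ is easy to see numerically. A minimal sketch, assuming X is uniform on [a, b] and using base-2 logarithms; the function name and the three intervals are hypothetical.

```python
import math

def uniform_differential_entropy(a, b, base=2.0):
    """h(X) = log(b - a) for X uniform on [a, b]; bits when base is 2."""
    if b <= a:
        raise ValueError("need b > a")
    return math.log(b - a, base)

narrow = uniform_differential_entropy(0.0, 0.5)   # width < 1: negative
unit = uniform_differential_entropy(0.0, 1.0)     # width = 1: zero
wide = uniform_differential_entropy(0.0, 4.0)     # width > 1: positive

print(narrow, unit, wide)
```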

![Example

• The differential entropy is simply:

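$$h(X) = E[-\log f(X)]$$

$$h(X) = \int f(x) \left[ \frac{1}{2} \log(2\pi\sigma^2) + \frac{(x - \mu)^2}{2\sigma^2} \right] dx$$

$$= \frac{1}{2} \log(2\pi\sigma^2) + \frac{1}{2\sigma^2} E[(x - \mu)^2]$$

$$= \frac{1}{2} \log(2\pi\sigma^2) + \frac{1}{2}$$

$$= \frac{1}{2} \log(2\pi\sigma^2 e) \ \text{nats}$$

• A continuous source X with a Gaussian distribution of mean $\mu$ and variance $\sigma^2$ therefore has differential entropy $\frac{1}{2} \log(2\pi e \sigma^2)$ nats.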

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-58-320.jpg)
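The closed-form Gaussian result can be checked against a direct numerical evaluation of $-\int f(x) \ln f(x)\,dx$. The sketch below uses a plain midpoint rule; the parameter values, integration range, and step count are assumptions chosen for illustration, and natural logarithms are used so the result is in nats.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density of a Gaussian with mean mu and standard deviation sigma."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def gaussian_entropy_closed_form(sigma):
    """0.5 * ln(2 * pi * e * sigma^2), in nats."""
    return 0.5 * math.log(2 * math.pi * math.e * sigma ** 2)

def gaussian_entropy_numeric(mu, sigma, half_width=10.0, steps=20000):
    """Midpoint-rule estimate of -integral f ln f over mu +/- half_width * sigma."""
    lo = mu - half_width * sigma
    dx = 2 * half_width * sigma / steps
    total = 0.0
    for k in range(steps):
        x = lo + (k + 0.5) * dx
        f = gaussian_pdf(x, mu, sigma)
        if f > 0:
            total -= f * math.log(f) * dx
    return total

mu, sigma = 1.0, 2.0   # hypothetical parameters
closed = gaussian_entropy_closed_form(sigma)
numeric = gaussian_entropy_numeric(mu, sigma)
print(closed, numeric)
```

The two values should agree to several decimal places, and neither depends on the mean, only on the variance.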

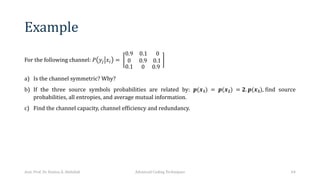

![Solution

a) The channel is symmetric (a ternary symmetric channel, TSC), because every row of $P(y_j|x_i)$ contains the same components.

b) To find the source probabilities, note that there are 3 unknowns, so 3 equations are needed. These are given:

P(x1) = P(x2) ... (1)

P(x2) = 2 P(x3) ... (2)

P(x1) + P(x2) + P(x3) = 1 ... (3)

From (1), P(x2) = P(x1); from (2), P(x3) = P(x2)/2 = P(x1)/2.

Substituting these relations into (3) gives:

P(x1) + P(x1) + P(x1)/2 = 1 → 5 P(x1)/2 = 1, or P(x1) = 2/5 = 0.4

• Now, using (1) and (2) → P(x1) = 0.4, P(x2) = 0.4, and P(x3) = 0.2

• Now we have P(xi) = [0.4 0.4 0.2]

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-65-320.jpg)
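The three equations for the source probabilities can be solved exactly with rational arithmetic. This sketch expresses each probability as a multiple of P(x1) and then applies the normalisation condition; the variable names are illustrative.

```python
from fractions import Fraction

# Express every probability as a multiple of P(x1), using relations (1) and (2):
# P(x2) = P(x1) and P(x3) = P(x2) / 2 = P(x1) / 2.
ratios = [Fraction(1), Fraction(1), Fraction(1, 2)]

# Relation (3): the probabilities sum to 1, which fixes P(x1).
p_x1 = 1 / sum(ratios)
p_x = [r * p_x1 for r in ratios]

print([str(p) for p in p_x], [float(p) for p in p_x])
```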

![Solution

• From the relation $P(x_i, y_j) = P(x_i)\,P(y_j|x_i)$ and the given matrix of $P(y_j|x_i)$:

$$P(x_i, y_j) = \begin{bmatrix} 0.36 & 0.04 & 0 \\ 0 & 0.36 & 0.04 \\ 0.02 & 0 & 0.18 \end{bmatrix}$$

• Summing the column components gives $P(y_j) = [0.38 \quad 0.4 \quad 0.22]$

• Other probabilities are unnecessary in this example. Now calculate:

$$H(x) = -\sum_i P(x_i) \log_2 P(x_i) = 1.5219 \ \text{bits/symbol}$$

$$H(y) = -\sum_j P(y_j) \log_2 P(y_j) = 1.5398 \ \text{bits/symbol}$$

• Since the channel is symmetric, $H(y|x) = -\sum_j P(y_j|x_i) \log_2 P(y_j|x_i)$ for any row $i$.

• $H(y|x) = -[0.9 \log_2 0.9 + 0.1 \log_2 0.1 + 0 \log_2 0] = 0.469$ bits/symbol

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-66-320.jpg)
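The entropies in this solution can be recomputed in a few lines. The transition matrix is not reproduced in the text, so the one used here is an assumption: it is recovered by dividing each row of the joint matrix by the corresponding source probability. Helper and variable names are illustrative.

```python
import math

def H(probs):
    """Entropy in bits of an iterable of probabilities (zeros are skipped)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

p_x = [0.4, 0.4, 0.2]
# Assumed P(y_j | x_i): each row of the joint matrix divided by P(x_i).
p_y_given_x = [[0.9, 0.1, 0.0],
               [0.0, 0.9, 0.1],
               [0.1, 0.0, 0.9]]

joint = [[p_x[i] * p for p in row] for i, row in enumerate(p_y_given_x)]
p_y = [sum(col) for col in zip(*joint)]     # column sums give P(y_j)

h_x = H(p_x)
h_y = H(p_y)
# Average of the row entropies; for a symmetric channel every row gives the same value.
h_y_given_x = sum(p_x[i] * H(row) for i, row in enumerate(p_y_given_x))
mutual_info = h_y - h_y_given_x             # I(X;Y) = H(Y) - H(Y|X)

print(round(h_x, 4), round(h_y, 4), round(h_y_given_x, 3), round(mutual_info, 3))
```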

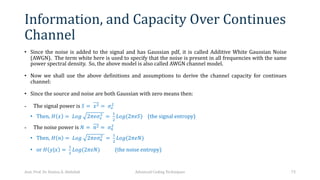

![Entropy, Information, and Capacity Rates

• A rate is a physical quantity per unit time. For the entropy, the average mutual information, and the capacity, the rate is measured in bits per second (bits/sec), or more generally bps. In practice this unit is more important than bits/symbol.

• In terms of units:

$$\frac{\text{bits}}{\text{symbol}} \times \frac{\text{symbols}}{\text{second}} = \frac{\text{bits}}{\text{second}} \ \text{or bps}$$

• Let $R_x$ be the source symbol rate; then the symbol duration $T_x$ is given by:

$$T_x = \frac{1}{R_x} \ \text{seconds/symbol}$$

• Each of H, I, or C can now be converted from bits/symbol into a rate in bps by multiplying it by $R_x$, as follows:

$$H_r(x) = R_x \cdot H(x)$$

$$I_r = R_x \cdot I$$

$$C_r = R_x \cdot C$$

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-68-320.jpg)
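The conversion from bits/symbol to bits/second is a single multiplication, sketched below. The symbol rate and entropy value are hypothetical, and `to_rate` is an illustrative helper name.

```python
def to_rate(bits_per_symbol, symbol_rate):
    """Convert a per-symbol quantity (H, I or C) into bits per second."""
    if symbol_rate <= 0:
        raise ValueError("symbol rate must be positive")
    return symbol_rate * bits_per_symbol

R_x = 2000.0          # hypothetical source symbol rate, symbols/second
H_x = 1.5219          # hypothetical entropy, bits/symbol
T_x = 1.0 / R_x       # symbol duration, seconds/symbol
H_r = to_rate(H_x, R_x)

print(f"T_x = {T_x} s/symbol, H_r = {H_r:.1f} bps")
```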

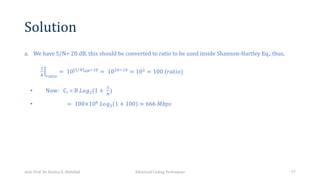

![Solution

b. Using 500 MHz bandwidth:

• Now: $C_r = B \log_2\left(1 + \frac{S}{N}\right) = 500 \times 10^6 \log_2(1 + 100) \approx 3330$ Mbps (or 3.3 Gbps)

• % increase in rate $= \frac{\text{new rate} - \text{old rate}}{\text{old rate}} \times 100\% = \frac{3330 - 666}{666} \times 100\% = 400\%$

• This means the rate (bps) in 5G is five times that of 4G, that is, 400% higher.

](https://image.slidesharecdn.com/lectureno1-240328212225-f7f337a9/85/advance-coding-techniques-probability-78-320.jpg)
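The capacity and percentage-increase arithmetic can be reproduced with the Shannon formula. In this sketch the 500 MHz bandwidth and S/N = 100 come from the solution, while the 100 MHz baseline bandwidth is an assumption, chosen because it is consistent with the 400% result; the function names are illustrative.

```python
import math

def shannon_capacity(bandwidth_hz, snr_linear):
    """C_r = B * log2(1 + S/N) in bits per second; S/N is a linear ratio, not dB."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)

def percent_increase(new, old):
    """Relative increase of new over old, in percent."""
    return (new - old) / old * 100.0

new_rate = shannon_capacity(500e6, 100)   # 500 MHz, S/N = 100 (from the solution)
old_rate = shannon_capacity(100e6, 100)   # 100 MHz baseline: an assumption
increase = percent_increase(new_rate, old_rate)

print(f"{new_rate / 1e6:.0f} Mbps vs {old_rate / 1e6:.0f} Mbps: +{increase:.0f}%")
```

With the signal-to-noise ratio held fixed, capacity scales linearly with bandwidth, so a fivefold bandwidth gives a fivefold rate.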