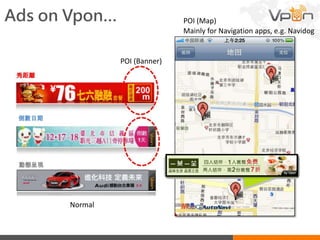

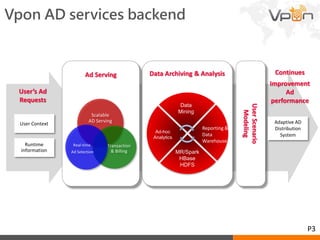

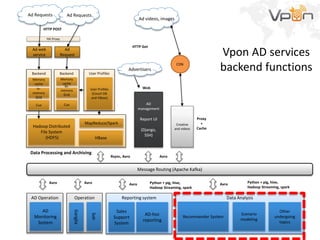

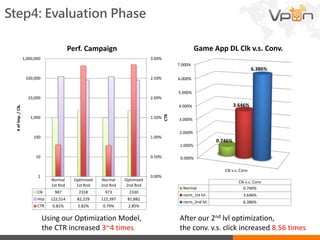

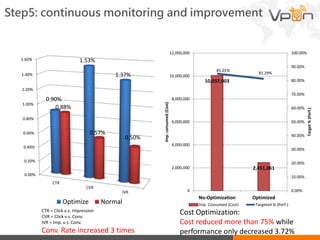

This document discusses Vpon's mobile advertising system and its recommender model. It first describes the basic concept, challenges, and infrastructure of Vpon's ad-serving platform, then focuses on the recommender system, outlining its design, implementation, and evaluation. Key steps include calculating ad and user similarities, predicting user preferences, optimizing ad delivery, and continuously improving based on results. The recommender significantly increased click-through and conversion rates while reducing costs.
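The similarity and preference-prediction steps can be illustrated with a minimal sketch. The summary does not say which similarity measure or prediction rule Vpon uses, so everything below is an assumption made for illustration: cosine similarity over a binary click matrix, a similarity-weighted average as the predicted preference, and the hypothetical names `cosine`, `predict_scores`, `recommend`, and the toy `clicks` data.

```python
from math import sqrt


def cosine(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def predict_scores(clicks, user):
    """Score each ad for `user` as a similarity-weighted average of other users' clicks."""
    target = clicks[user]
    # Similarity of the target user to every other user (assumed measure: cosine).
    sims = {other: cosine(target, row)
            for other, row in clicks.items() if other != user}
    total = sum(sims.values())
    if total == 0:
        # No similar users: no evidence for any ad.
        return [0.0] * len(target)
    return [sum(sims[o] * clicks[o][j] for o in sims) / total
            for j in range(len(target))]


def recommend(clicks, user, k=1):
    """Return the indices of the top-k ads the user has not clicked yet."""
    scores = predict_scores(clicks, user)
    unseen = [j for j, clicked in enumerate(clicks[user]) if clicked == 0]
    return sorted(unseen, key=lambda j: scores[j], reverse=True)[:k]


# Hypothetical toy data: one row per user, one column per ad, 1 = clicked.
clicks = {
    "u1": [1, 1, 0, 0],
    "u2": [1, 1, 1, 0],
    "u3": [0, 0, 0, 1],
}

print(recommend(clicks, "u1"))  # → [2]
```

In this toy data, `u1` shares two clicks with `u2` and none with `u3`, so the ad that only `u2` clicked ranks first. A production system would also need the delivery-optimization and feedback steps mentioned above, which this sketch leaves out.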