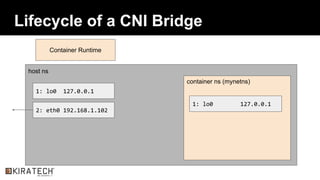

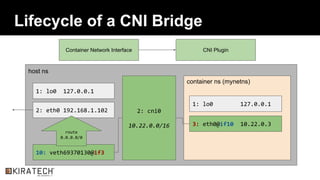

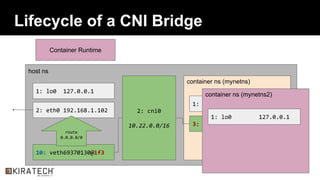

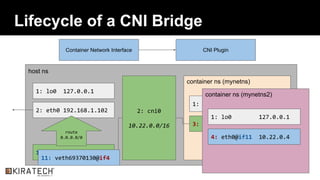

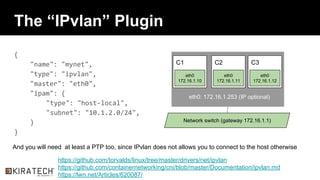

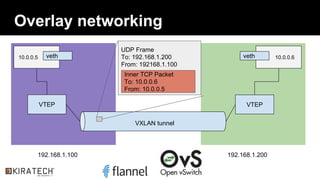

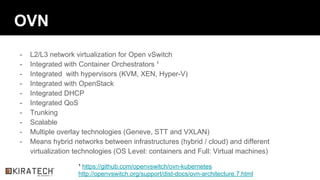

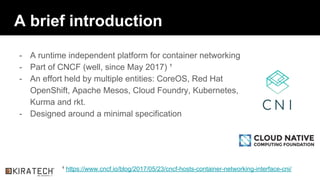

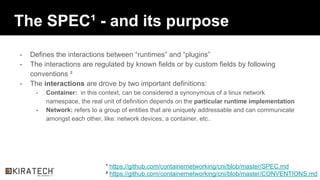

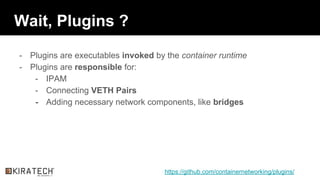

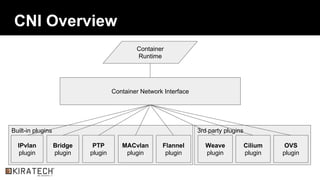

This document gives an overview of the Container Network Interface (CNI), a standard for configuring network interfaces in Linux containers. It explains how CNI delegates container network setup to plugins, with `ptp`, `bridge`, and `ipvlan` as examples. It also covers VXLAN and OVN, overlay networking techniques that connect containers running on different hosts.
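As a rough illustration of how a container runtime hands work to a CNI plugin, the sketch below assembles the three things the CNI specification says a plugin receives: an executable chosen by the configuration's `type` field, a set of `CNI_*` environment variables, and the network configuration as JSON on stdin. The function name `build_cni_invocation`, the container ID, the namespace path, and the `/opt/cni/bin` plugin directory are assumptions made for this example, not values from the slides. Nothing is executed here; the function only prepares the call.

```python
import json
import posixpath


def build_cni_invocation(net_conf, command, container_id, netns_path, ifname, plugin_dir):
    """Prepare (but do not run) a CNI plugin call.

    Hypothetical helper for illustration: returns the plugin path, the
    environment variables, and the bytes to write to the plugin's stdin.
    """
    # The plugin executable is named after the "type" field of the config.
    plugin_binary = posixpath.join(plugin_dir, net_conf["type"])

    # Parameters are passed through CNI_* environment variables.
    env = {
        "CNI_COMMAND": command,          # e.g. "ADD" or "DEL"
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns_path,         # path to the container's network namespace
        "CNI_IFNAME": ifname,            # interface name to create inside the container
        "CNI_PATH": plugin_dir,          # where to search for plugin executables
    }

    # The network configuration itself is streamed to the plugin on stdin.
    stdin_payload = json.dumps(net_conf).encode("utf-8")
    return plugin_binary, env, stdin_payload


# Assumed example values; a real runtime supplies its own.
binary, env, payload = build_cni_invocation(
    net_conf={"name": "mynet", "type": "bridge"},
    command="ADD",
    container_id="example-container",
    netns_path="/var/run/netns/example",
    ifname="eth0",
    plugin_dir="/opt/cni/bin",
)
```

On a host where the plugin is actually installed, the returned values could be passed to something like `subprocess.run([binary], input=payload, env=env)`; that step usually needs root privileges and an existing network namespace, so it is left out of the sketch.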

![The “ptp” Plugin (diagram labels: ns0, s1, tap0, tap1)](https://image.slidesharecdn.com/practical-cni-fontana-170620152933/85/Practical-CNI-6-320.jpg)

The “ptp” Plugin

    {
      "name": "mynet",
      "type": "ptp",
      "ipam": {
        "type": "host-local",
        "subnet": "10.1.1.0/24"
      },
      "dns": {
        "nameservers": [ "10.1.1.1", "8.8.8.8" ]
      }
    }
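To make the fields of the `ptp` configuration on this slide concrete, here is a small sketch that parses the same JSON and reports which plugin and IPAM type it selects and what address range the subnet covers. The helper name `summarize` is an assumption for this example. It uses only Python's standard `json` and `ipaddress` modules and does not reproduce how the `host-local` plugin actually allocates addresses.

```python
import ipaddress
import json

# The configuration from the slide, unchanged.
PTP_CONF = """
{
  "name": "mynet",
  "type": "ptp",
  "ipam": {
    "type": "host-local",
    "subnet": "10.1.1.0/24"
  },
  "dns": {
    "nameservers": [ "10.1.1.1", "8.8.8.8" ]
  }
}
"""


def summarize(conf_text):
    """Hypothetical helper: describe a CNI network configuration."""
    conf = json.loads(conf_text)
    subnet = ipaddress.ip_network(conf["ipam"]["subnet"])
    hosts = list(subnet.hosts())  # usable addresses, excluding network and broadcast
    return {
        "name": conf["name"],
        "plugin": conf["type"],       # executable the runtime will invoke
        "ipam": conf["ipam"]["type"],  # plugin delegated to for address management
        "first_host": str(hosts[0]),
        "last_host": str(hosts[-1]),
        "usable_hosts": len(hosts),
        # Nameservers that fall inside the container subnet itself.
        "nameservers_in_subnet": [
            ns for ns in conf["dns"]["nameservers"]
            if ipaddress.ip_address(ns) in subnet
        ],
    }


summary = summarize(PTP_CONF)
for key, value in summary.items():
    print(f"{key}: {value}")
```

The summary shows that one of the two configured nameservers (`10.1.1.1`) lies inside the container subnet, while `8.8.8.8` is external.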