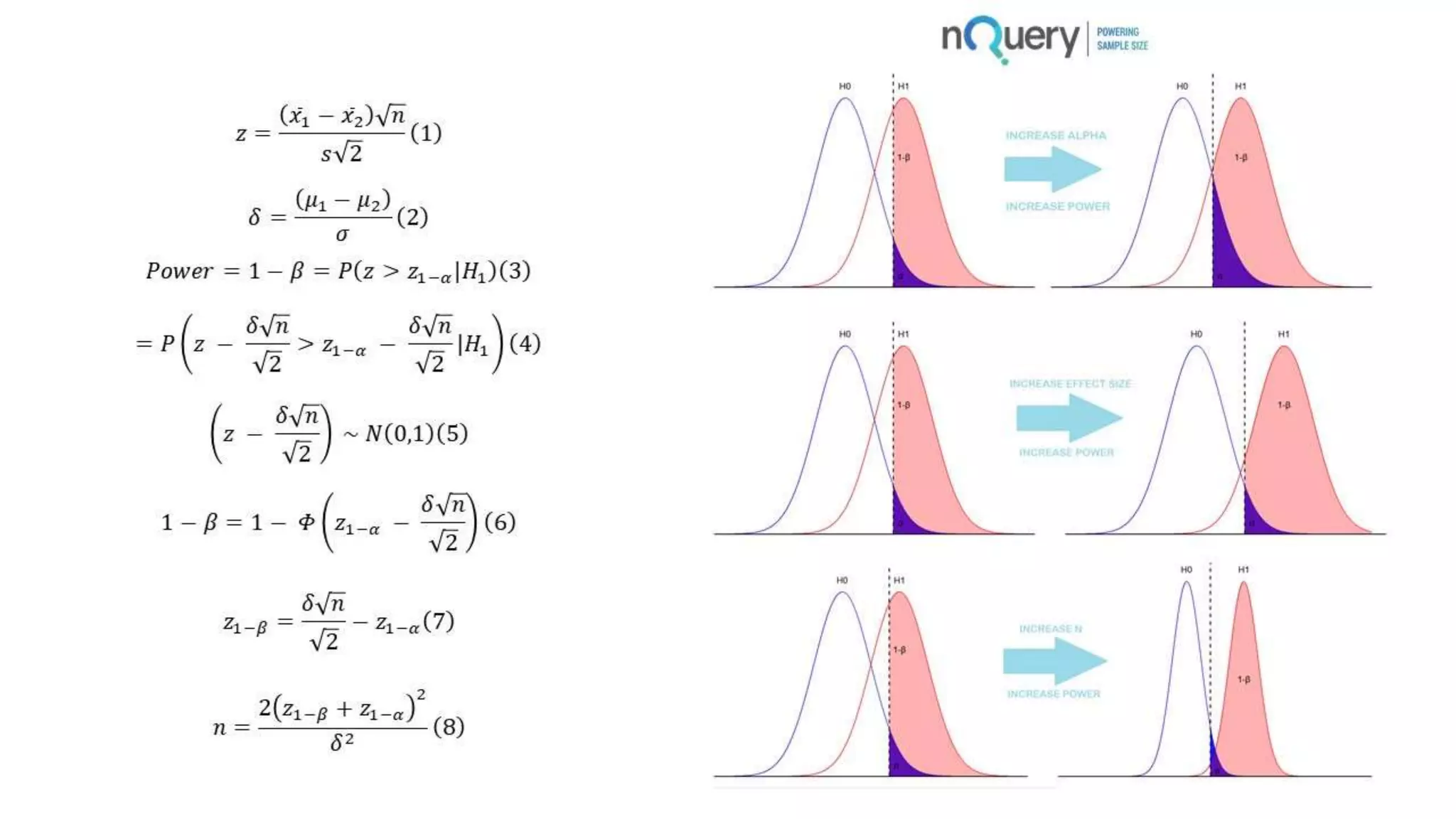

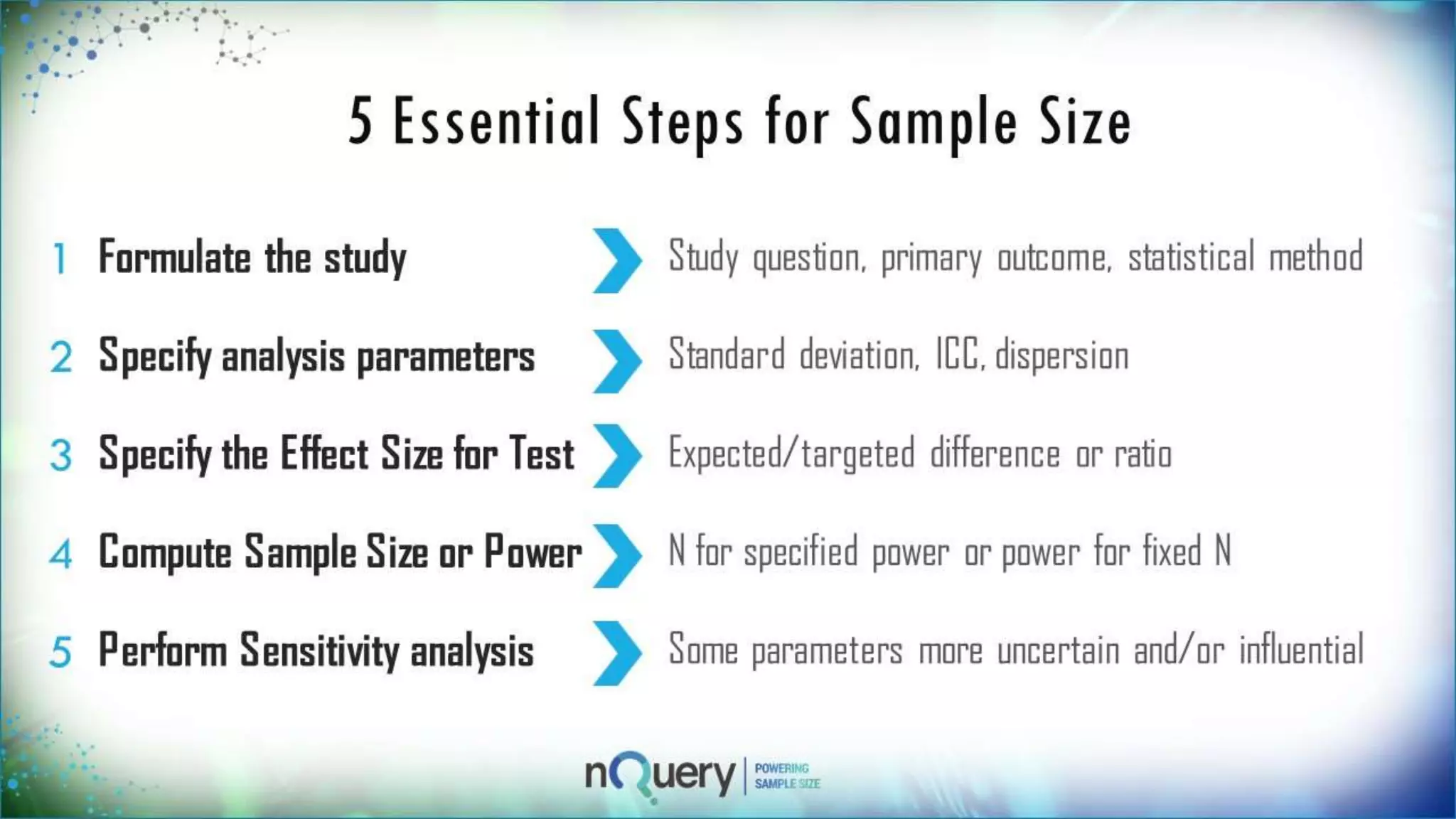

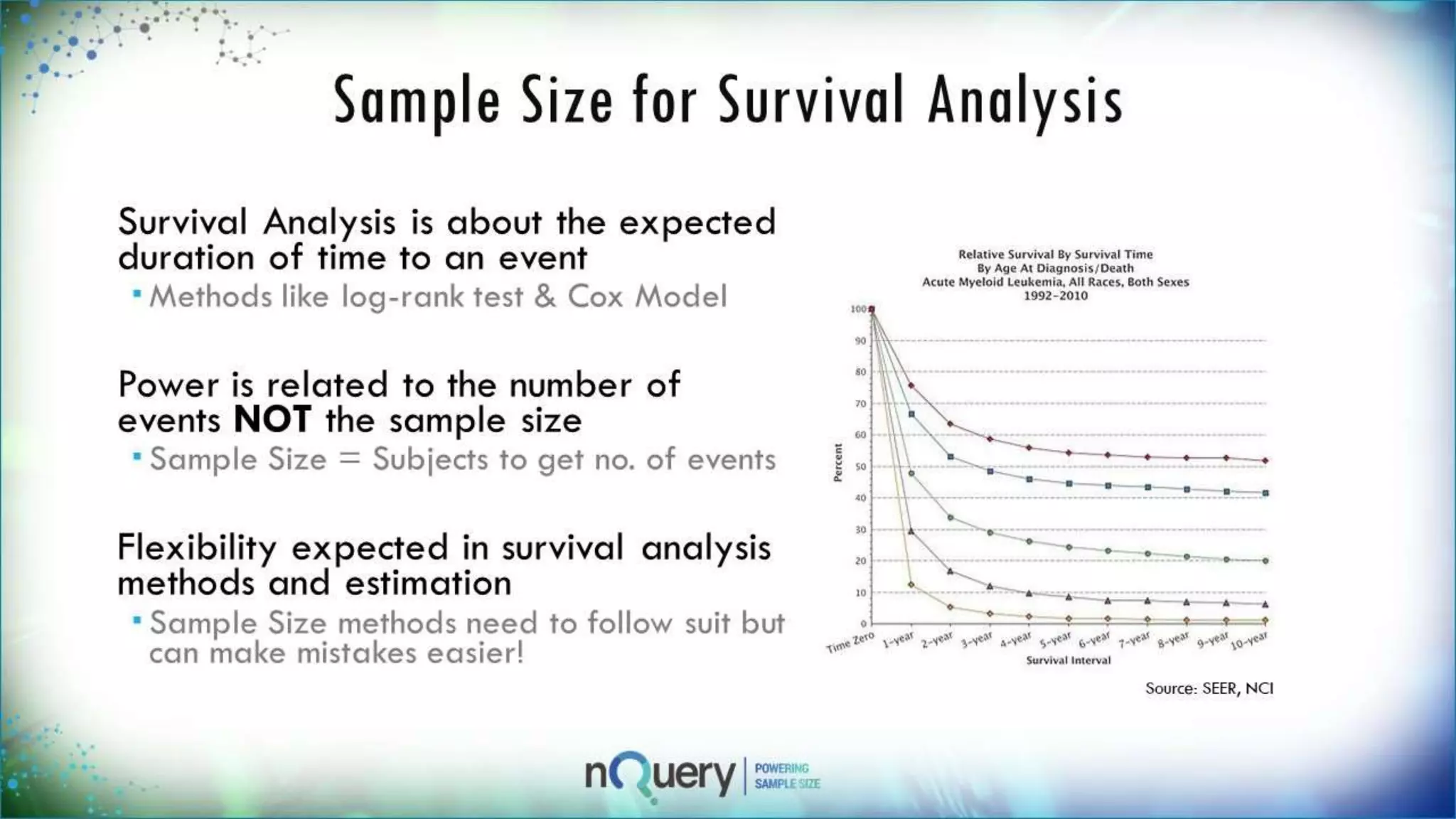

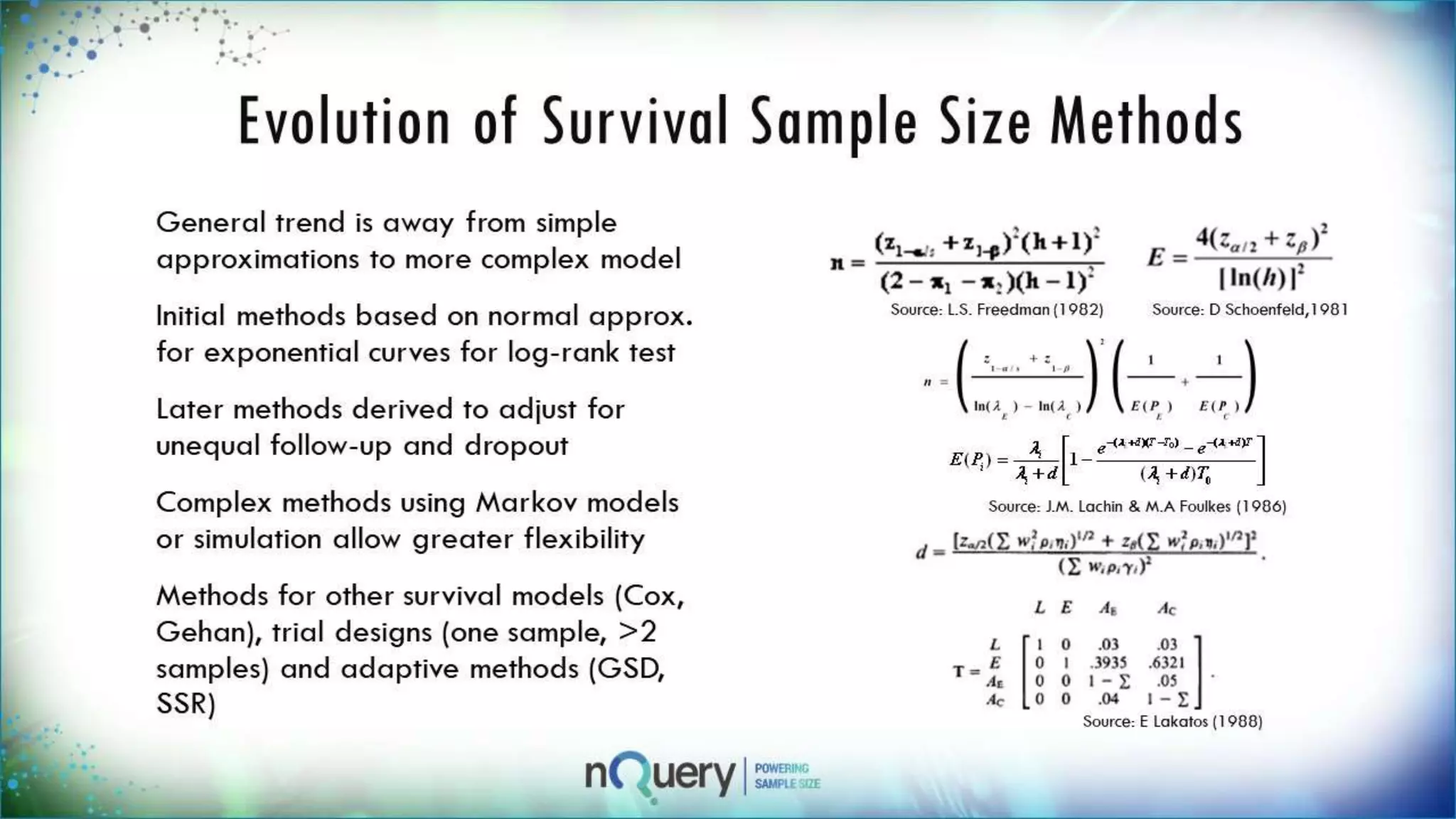

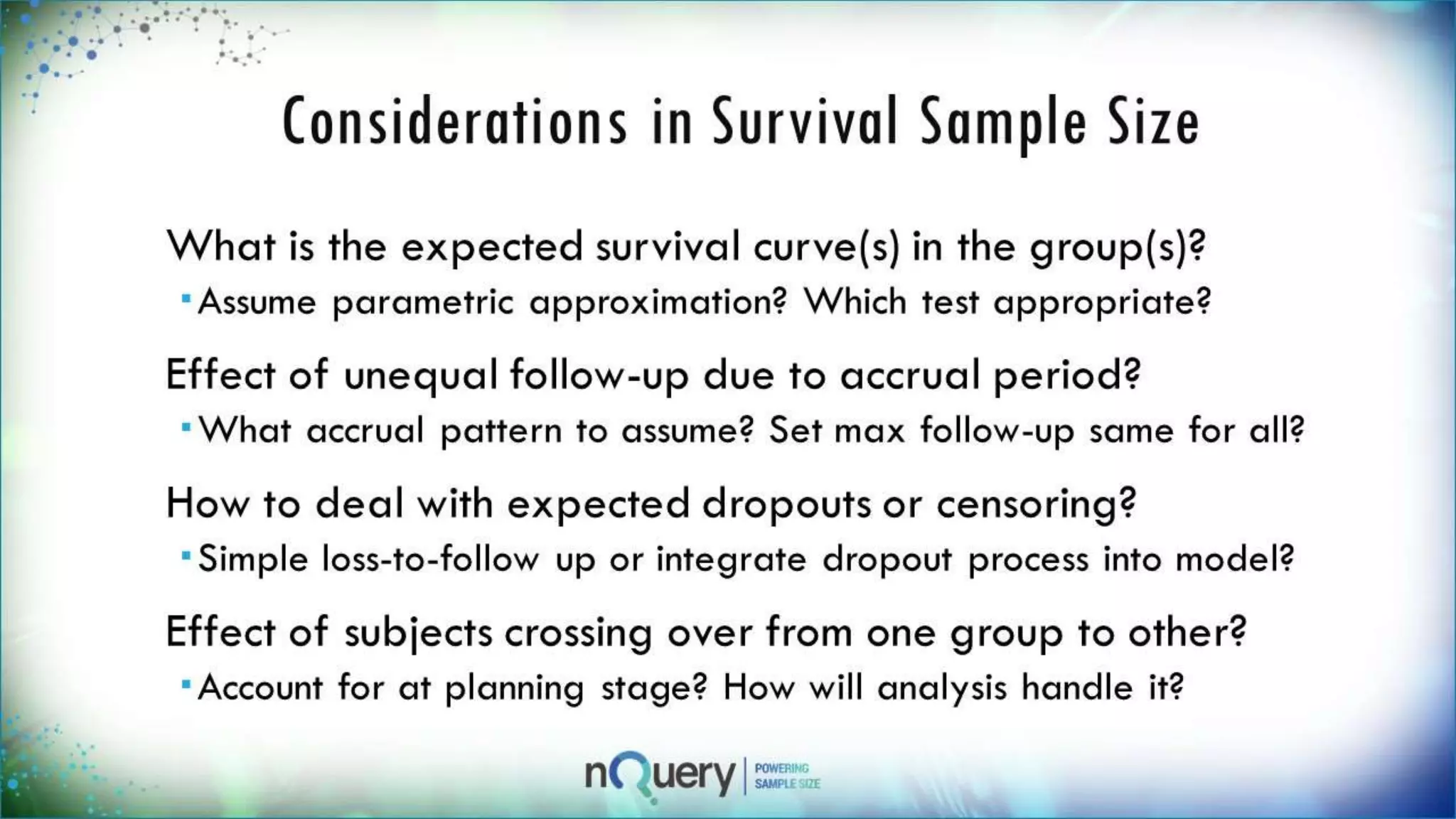

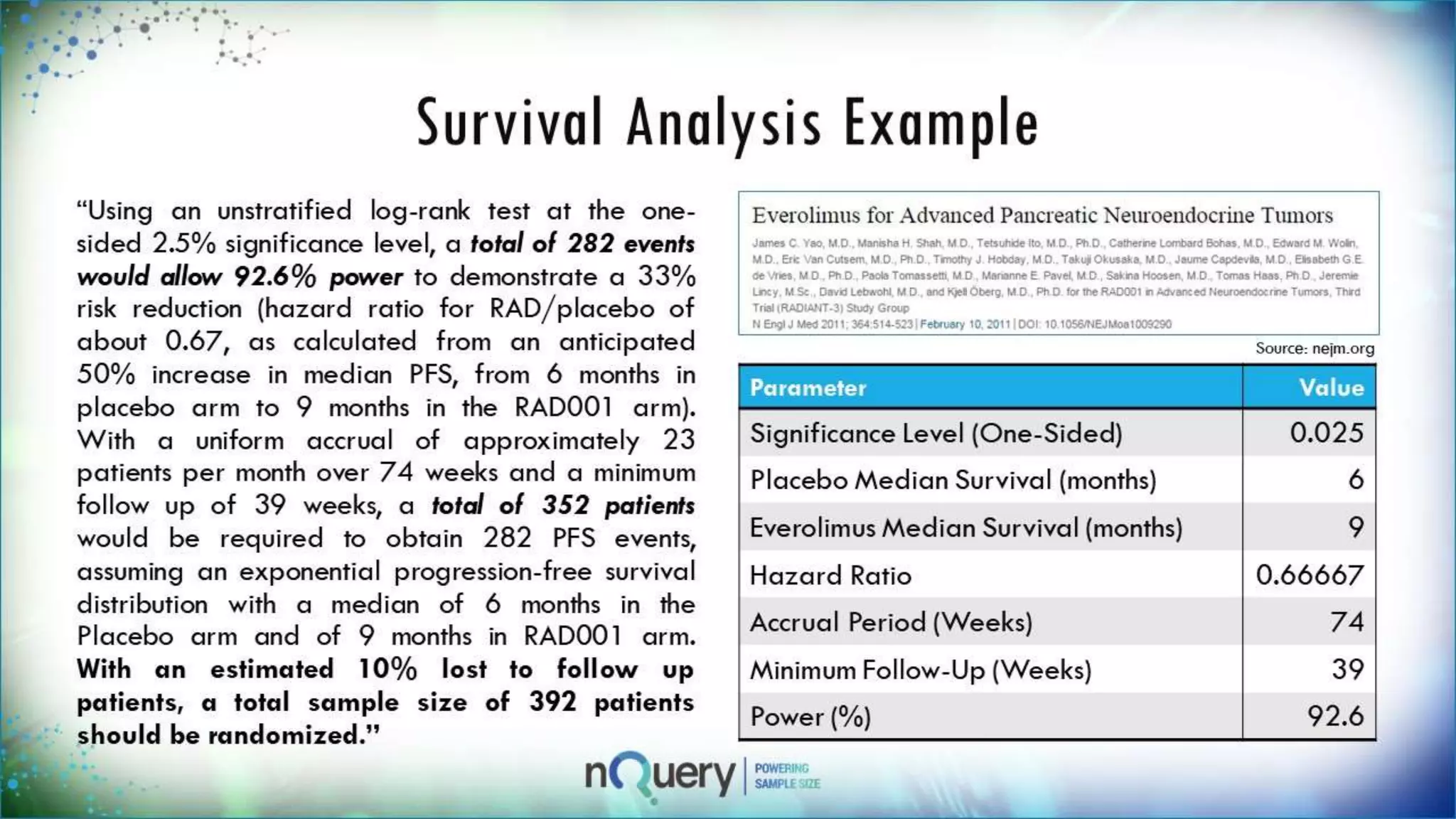

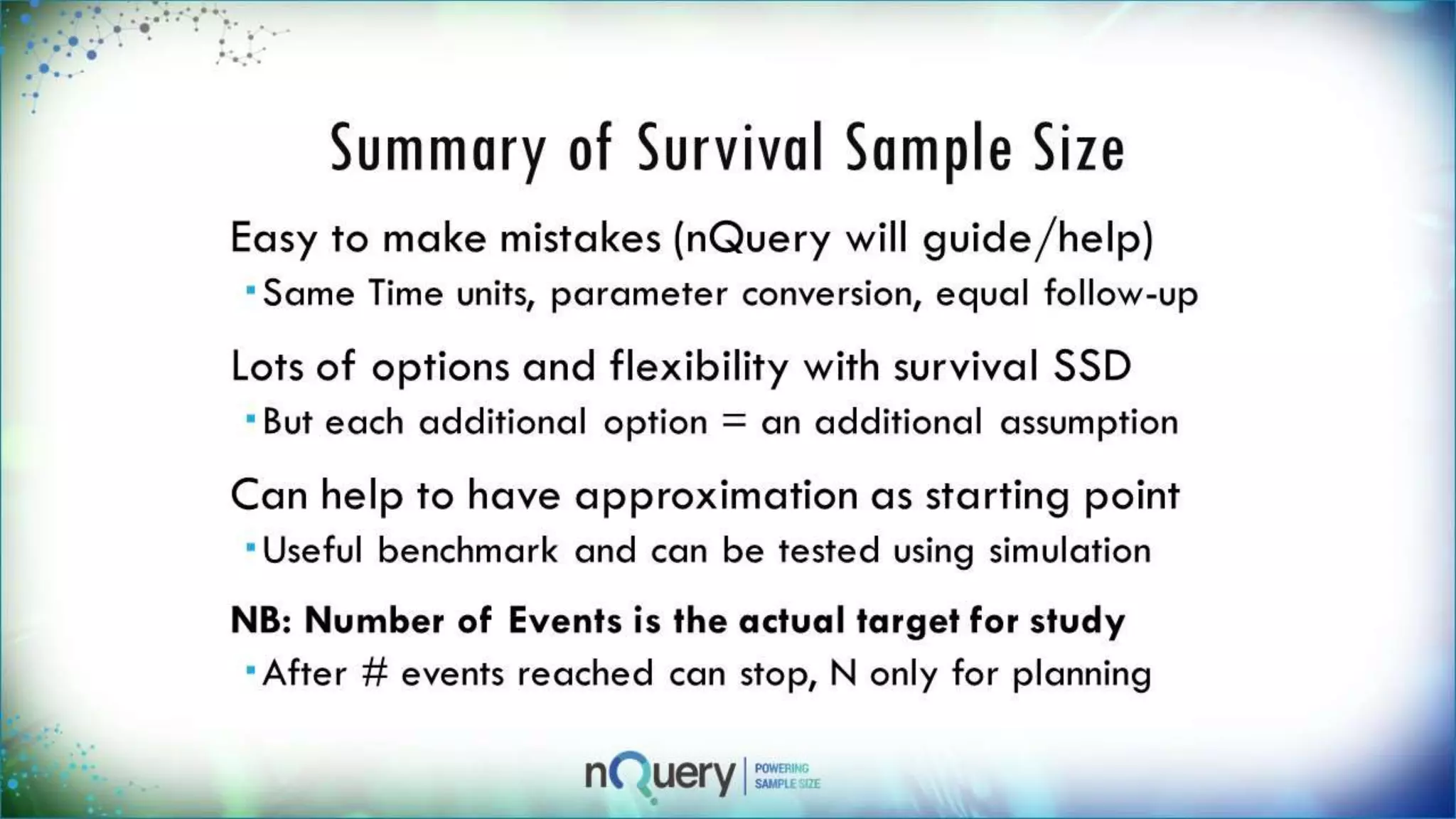

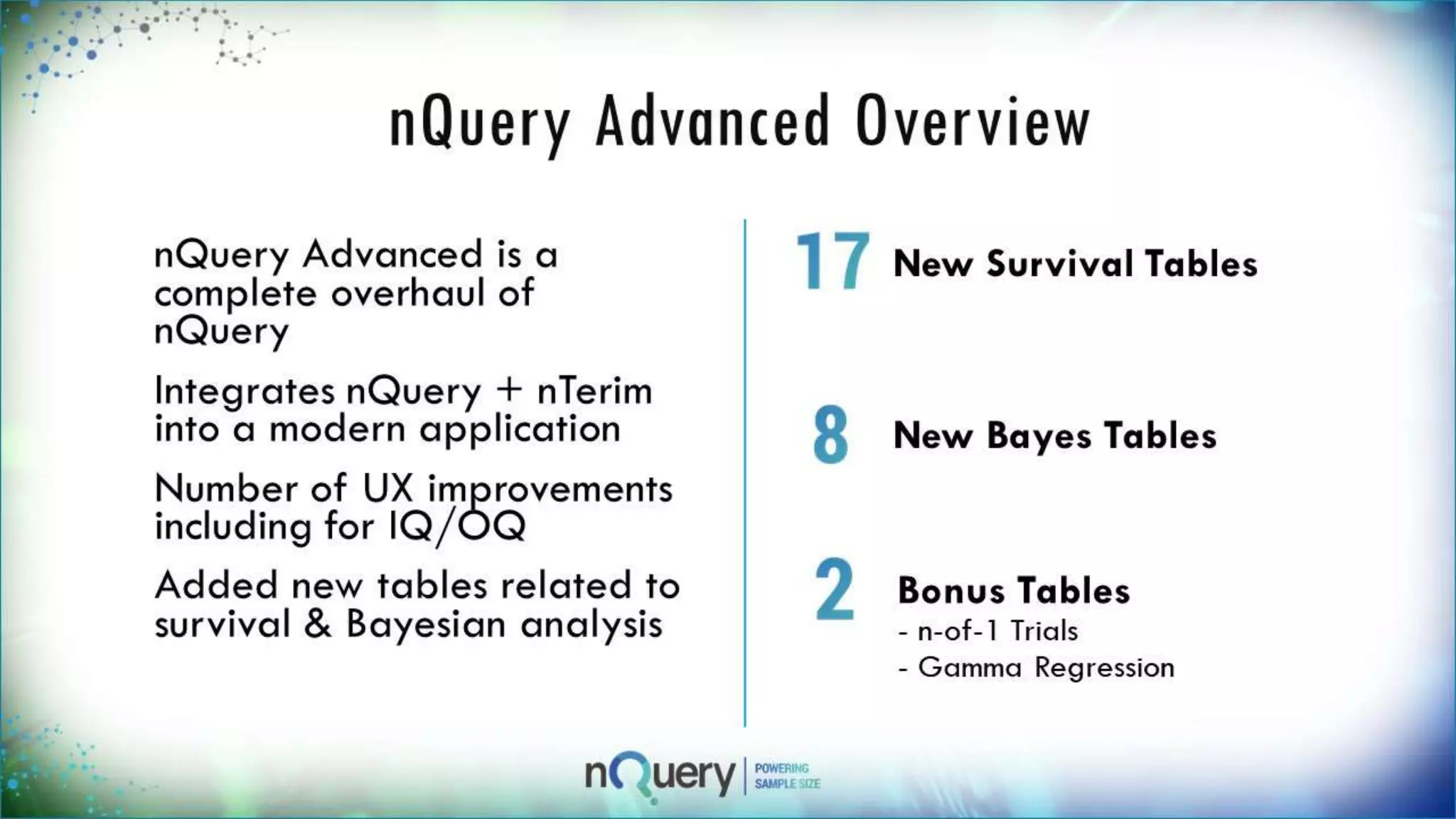

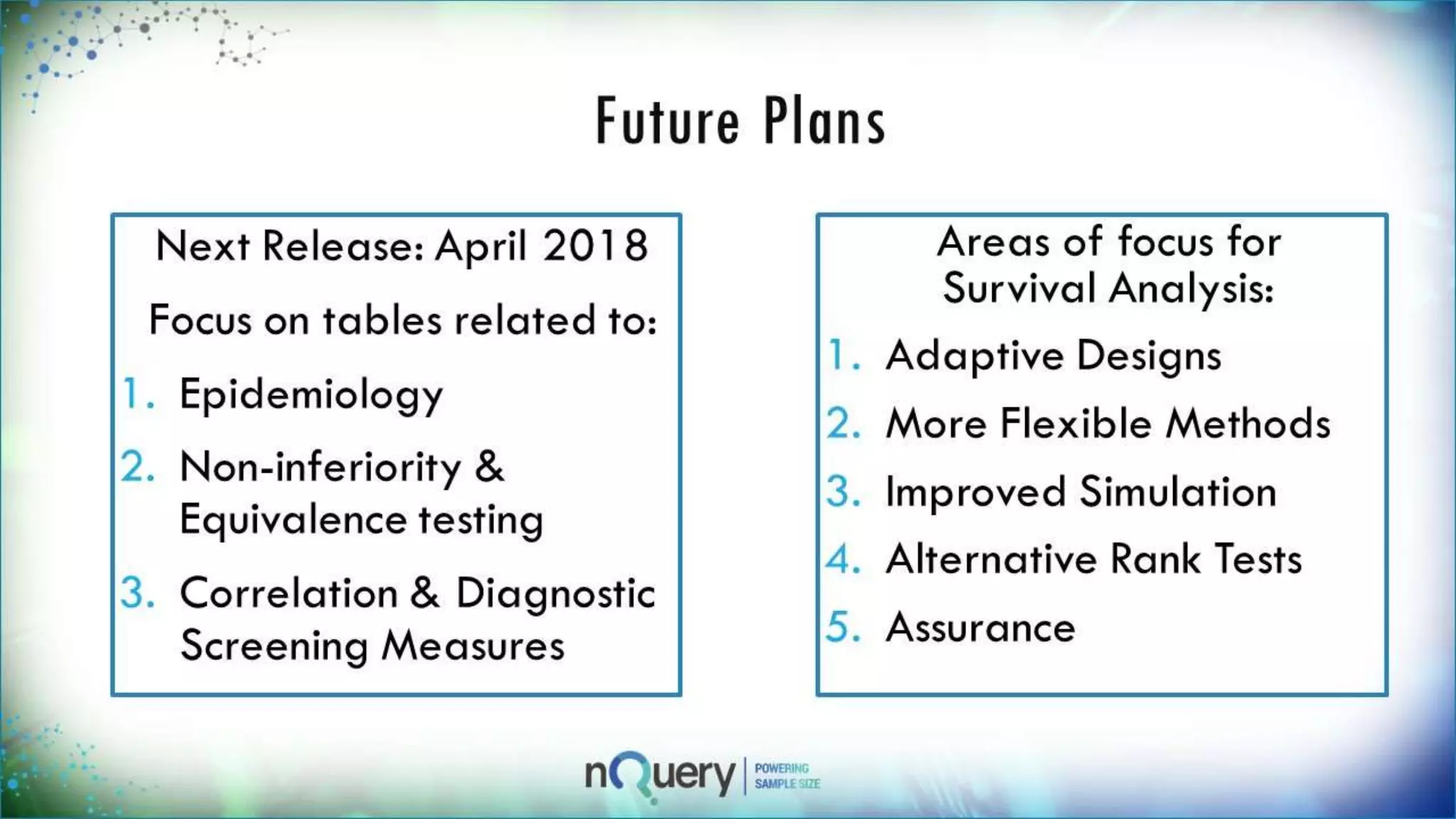

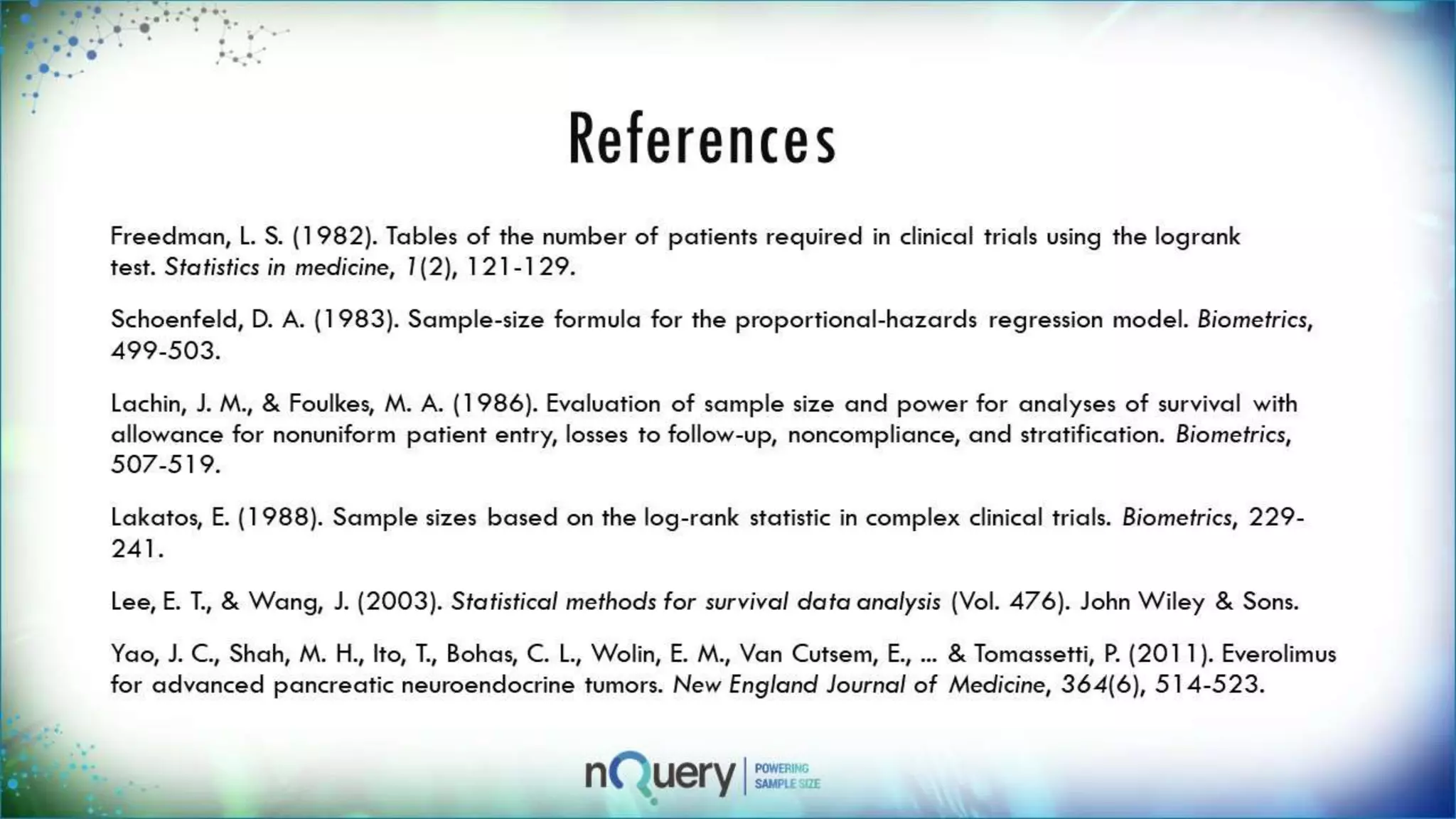

This webinar presentation introduced sample size determination for survival analysis. It discussed how to estimate the appropriate sample size, key considerations for survival analysis including expected survival curves and handling dropouts. It demonstrated an example in nQuery software to calculate the sample size needed for a clinical trial to show a risk reduction in progression-free survival between treatment arms. The webinar concluded with plans to further enhance survival analysis capabilities in nQuery and addressed questions from participants.