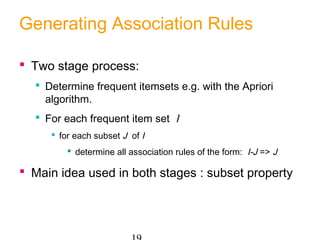

This document covers association rules and frequent itemset mining. It defines the key concepts: transactions, frequent itemsets, support, confidence, and the subset property. It introduces the Apriori algorithm, which uses the subset property to find frequent itemsets efficiently, and then covers association rule generation and measures such as lift. Examples illustrate both frequent itemset mining and rule generation. Finally, it mentions applications of association rules, such as market basket analysis, along with the challenges of applying the resulting insights.
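
To make the concepts named above concrete, here is a minimal Python sketch of support, confidence, lift, and a level-wise Apriori search. It is an illustration under stated assumptions, not the document's own implementation: the toy `transactions` and the function names `support`, `apriori`, `confidence`, and `lift` are all hypothetical. The sketch uses the standard definitions: support is the fraction of transactions containing an itemset, confidence of A → B is support(A ∪ B) / support(A), and lift is that confidence divided by support(B). The subset property (every subset of a frequent itemset is itself frequent) is what allows candidates to be pruned before they are counted.

```python
from itertools import combinations

# Hypothetical toy transactions, invented for illustration only.
transactions = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = frozenset(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)

def apriori(transactions, min_support):
    """Level-wise search for frequent itemsets; returns {itemset: support}."""
    items = sorted({item for t in transactions for item in t})
    current = [frozenset([i]) for i in items]  # size-1 candidates
    frequent = {}
    k = 1
    while current:
        # Count step: keep candidates that meet the support threshold.
        level = {}
        for candidate in current:
            s = support(candidate, transactions)
            if s >= min_support:
                level[candidate] = s
        frequent.update(level)
        # Join step: merge frequent k-itemsets into (k+1)-item candidates.
        k += 1
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step (subset property): drop any candidate that has an
        # infrequent (k-1)-item subset, since it cannot be frequent.
        current = [
            c for c in candidates
            if all(frozenset(s) in level for s in combinations(c, k - 1))
        ]
    return frequent

def confidence(antecedent, consequent, transactions):
    """support(A ∪ B) / support(A); assumes the antecedent occurs at least once."""
    both = set(antecedent) | set(consequent)
    return support(both, transactions) / support(antecedent, transactions)

def lift(antecedent, consequent, transactions):
    """Confidence of A → B divided by support(B); assumes B occurs at least once."""
    return confidence(antecedent, consequent, transactions) / support(consequent, transactions)

frequent_itemsets = apriori(transactions, min_support=0.5)
```

On this toy data with `min_support=0.5`, the three single items and the pairs {bread, milk} and {bread, butter} are frequent, while {milk, butter} is not, so the three-item candidate is pruned without being counted. The rule butter → bread has confidence 1.0 and a lift of about 1.33, meaning bread appears with butter more often than its overall frequency would suggest.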