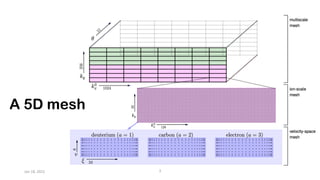

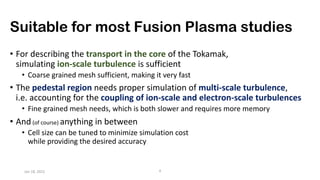

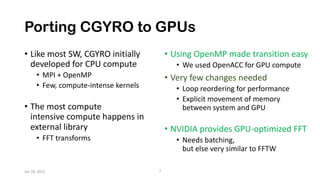

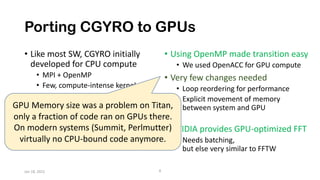

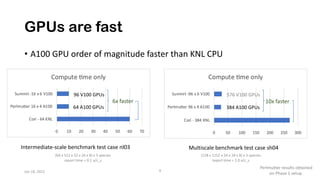

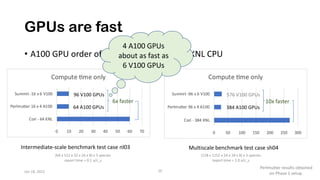

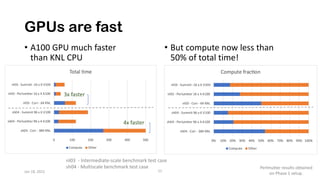

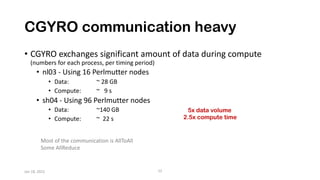

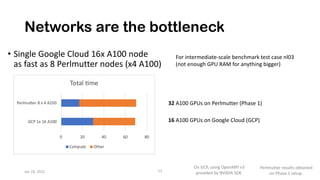

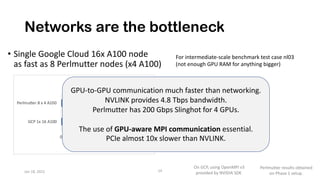

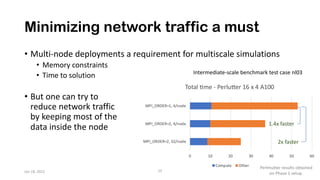

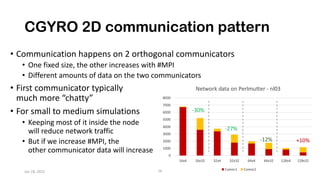

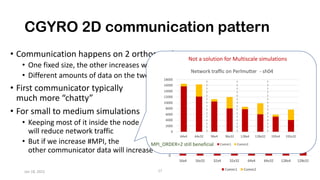

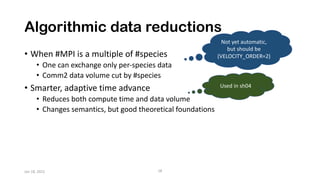

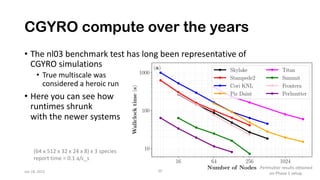

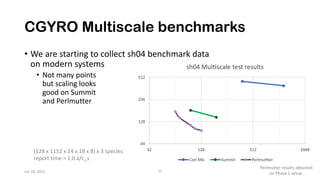

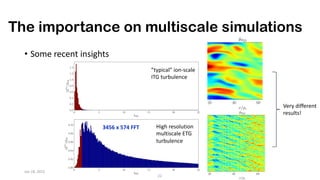

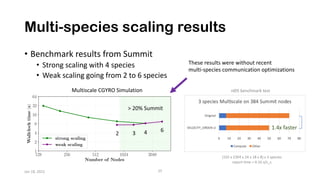

The document discusses performance optimization of the CGYRO solver for multiscale turbulence simulations of fusion plasmas, emphasizing its design for efficient execution on modern high-performance computing systems. The transition to GPU computing significantly reduced compute times and improved the scalability of simulations, aided by algorithmic improvements that reduce communication costs. Future work includes ensuring compatibility with new hardware and further optimizing the algorithms for complex multi-species simulations under burning-plasma conditions.