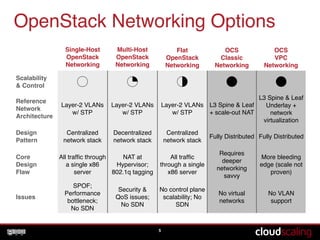

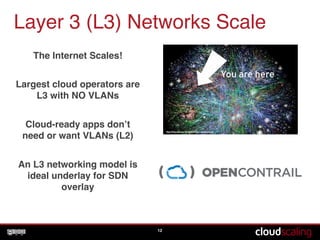

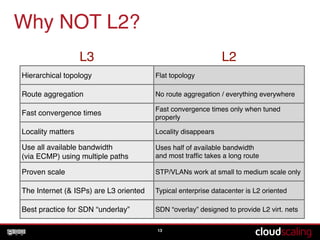

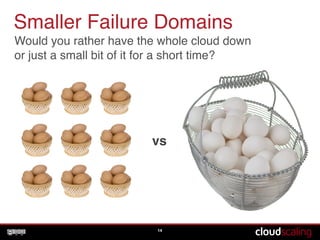

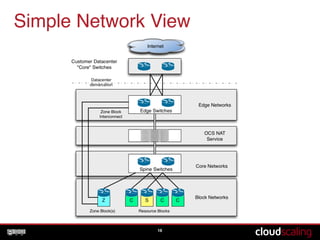

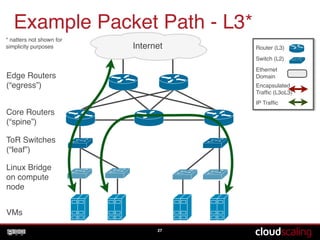

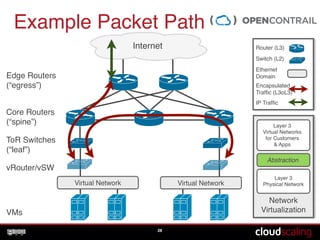

(1) The document describes an OpenStack networking architecture that scales to over 1,000 servers without using Neutron. It uses a layer 3 spine-and-leaf topology with no VLANs.
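As a rough illustration of what a routed, VLAN-free spine-and-leaf design implies for addressing, the sketch below carves one supernet into a routed subnet per leaf (rack). The supernet `10.0.0.0/16`, the `/26` per-leaf size, the rack count, and the `leaf-NN` names are all assumptions chosen for the example; the slides do not state the actual address plan.

```python
import ipaddress


def plan_leaf_subnets(supernet: str, racks: int, prefixlen: int) -> dict:
    """Assign one routed subnet per leaf switch (hypothetical naming scheme)."""
    net = ipaddress.ip_network(supernet)
    subnets = net.subnets(new_prefix=prefixlen)
    plan = {}
    for rack in range(racks):
        try:
            plan[f"leaf-{rack:02d}"] = next(subnets)
        except StopIteration:
            raise ValueError(
                f"{supernet} cannot hold {racks} subnets of /{prefixlen}"
            ) from None
    return plan


# Assumed sizing: 32 racks, each with a /26 (62 usable addresses).
plan = plan_leaf_subnets("10.0.0.0/16", racks=32, prefixlen=26)
usable_total = sum(subnet.num_addresses - 2 for subnet in plan.values())
print(plan["leaf-00"], plan["leaf-01"], usable_total)
```

Because each leaf owns its own prefix, the spine only needs to route between prefixes and no layer 2 domain spans racks, which is the property that removes the need for VLANs in a design like this.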

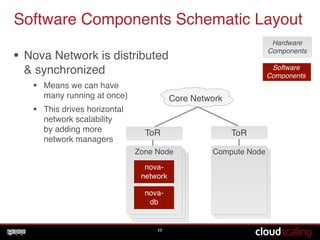

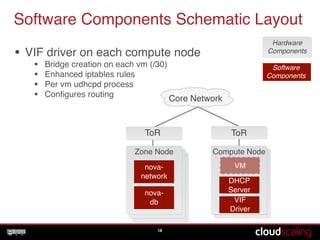

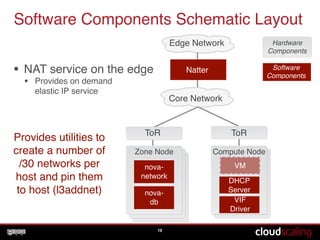

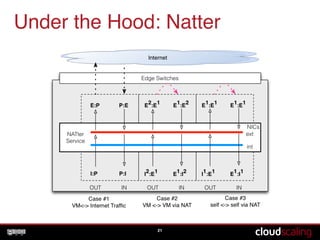

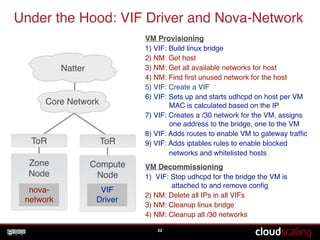

(2) Key components include a distributed Nova network, NAT services on the edge, and a VIF driver that sets up routing and DHCP for each VM.
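The per-VM work of such a VIF driver can be sketched as follows. This is not the authors' driver: the `VmPort` type and both helper functions are hypothetical, and the use of an iproute2 host route plus a dnsmasq `dhcp-host` entry is an assumption about tooling that the slides do not confirm. The functions only build the command and config line; they do not execute anything.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class VmPort:
    """Hypothetical description of one VM interface on a hypervisor."""
    name: str  # VM hostname
    mac: str   # VM MAC address
    ip: str    # VM IPv4 address
    tap: str   # host-side tap device


def host_route_command(port: VmPort) -> list:
    """Build an iproute2 command that routes the VM's /32 to its tap device."""
    addr = ipaddress.IPv4Address(port.ip)  # raises on an invalid address
    return ["ip", "route", "replace", f"{addr}/32", "dev", port.tap]


def dhcp_host_line(port: VmPort) -> str:
    """Build a dnsmasq static lease entry (assumes dnsmasq serves DHCP)."""
    return f"dhcp-host={port.mac},{port.ip},{port.name}"


port = VmPort(name="vm-1", mac="fa:16:3e:00:00:01", ip="10.0.0.70", tap="tap0")
print(" ".join(host_route_command(port)))
print(dhcp_host_line(port))
```

Routing each VM as a host route on its own hypervisor keeps the setup local to the compute node, which fits the distributed model described above.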

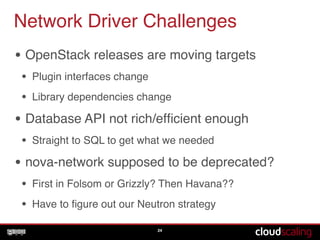

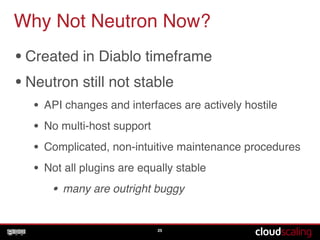

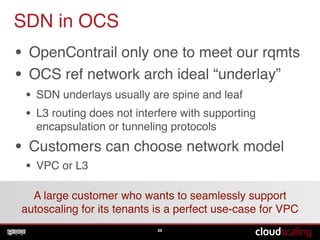

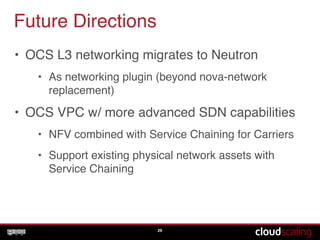

(3) Challenges included OpenStack being a moving target and Neutron being immature. The architecture provides a solid underlay for SDN and for future integration with Neutron.