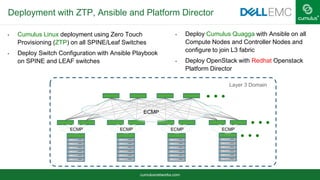

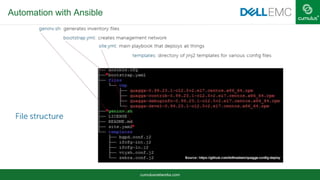

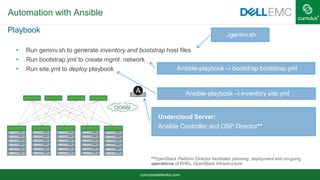

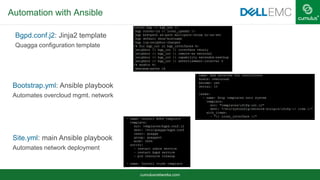

This presentation describes how to build a scalable Layer 3 network for private cloud environments using BGP on a Clos topology, whose main advantages are scalability and predictable latency. It covers Cumulus Linux and automation tools such as Ansible for configuration and deployment, and walks through a sample deployment with Dell EMC hardware and OpenStack. The key takeaways are that eBGP works well as a data center routing protocol and that Layer 3 networking simplifies deployments.

![cumulusnetworks.com

RFC 5549 in Action

leaf01# sh ip route

Codes: K - kernel route, C - connected, S - static, R - RIP,

O - OSPF, I - IS-IS, B - BGP, P - PIM, T - Table, v - VNC,

V - VPN,

> - selected route, * - FIB route

K>* 0.0.0.0/0 via 192.168.0.254, eth0

B>* 172.16.0.1/32 [20/0] via fe80::4638:39ff:fe00:5c, swp1, 00:08:03

B>* 172.16.0.2/32 [20/0] via fe80::4638:39ff:fe00:2b, swp2, 00:08:03

B>* 172.16.0.3/32 [20/0] via fe80::4638:39ff:fe00:3c, swp3, 00:08:03

C>* 172.16.1.1/32 is directly connected, lo

B>* 172.16.1.2/32 [20/0] via fe80::4638:39ff:fe00:5c, swp1, 00:08:03

* via fe80::4638:39ff:fe00:2b, swp2, 00:08:03

via fe80::4638:39ff:fe00:3c, swp3, 00:08:03

B>* 172.16.1.3/32 [20/0] via fe80::4638:39ff:fe00:5c, swp1, 00:08:03

* via fe80::4638:39ff:fe00:2b, swp2, 00:08:03

via fe80::4638:39ff:fe00:3c, swp3, 00:08:03](https://image.slidesharecdn.com/cumulusdellwebinarfinal1-161129210340/85/Building-Scalable-Data-Center-Networks-10-320.jpg)
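The route table above shows IPv4 prefixes resolved through IPv6 link-local next hops, which is the behavior RFC 5549 enables. As a rough illustration, the Python sketch below parses a few lines copied from that output and lists the IPv4 prefixes whose next hops are all link-local IPv6 addresses. The function names (`parse_routes`, `rfc5549_prefixes`) and the regular expressions are assumptions made for this example, not part of Quagga or Cumulus Linux, and the parsing handles only the line shapes seen on the slide.

```python
import ipaddress
import re

# Sample lines copied from the slide's `sh ip route` output.
ROUTES = """\
K>* 0.0.0.0/0 via 192.168.0.254, eth0
B>* 172.16.0.1/32 [20/0] via fe80::4638:39ff:fe00:5c, swp1, 00:08:03
C>* 172.16.1.1/32 is directly connected, lo
B>* 172.16.1.2/32 [20/0] via fe80::4638:39ff:fe00:5c, swp1, 00:08:03
* via fe80::4638:39ff:fe00:2b, swp2, 00:08:03
via fe80::4638:39ff:fe00:3c, swp3, 00:08:03
"""

# Hypothetical patterns: a route line starts with a code letter and flags,
# followed by an IPv4 prefix; next hops appear as "via <address>, <interface>".
PREFIX_RE = re.compile(r"^[A-Z]\S*\s+(\d+\.\d+\.\d+\.\d+/\d+)")
NEXTHOP_RE = re.compile(r"via\s+([0-9A-Fa-f:.]+),\s+(\S+?)(?:,|$)")


def parse_routes(text):
    """Map each prefix to a list of (next-hop address, interface) pairs."""
    routes = {}
    current = None
    for line in text.splitlines():
        prefix = PREFIX_RE.match(line)
        if prefix:
            current = prefix.group(1)
            routes[current] = []
        hop = NEXTHOP_RE.search(line)
        if hop and current is not None:
            # Continuation lines carry extra next hops for the last prefix seen.
            address = ipaddress.ip_address(hop.group(1))
            routes[current].append((address, hop.group(2)))
    return routes


def rfc5549_prefixes(routes):
    """Return IPv4 prefixes whose next hops are all IPv6 link-local."""
    result = []
    for prefix, hops in routes.items():
        is_v4 = ipaddress.ip_network(prefix).version == 4
        if is_v4 and hops and all(a.version == 6 and a.is_link_local for a, _ in hops):
            result.append(prefix)
    return result


routes = parse_routes(ROUTES)
for prefix in rfc5549_prefixes(routes):
    interfaces = [interface for _, interface in routes[prefix]]
    print(prefix, "via", ", ".join(interfaces))
```

The kernel default route and the connected loopback are excluded because their next hop is either an IPv4 address or absent, so only the BGP-learned prefixes remain.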

![Cumulus Quagga Logging

Logs: log file /var/log/quagga/quagga.log

sudo journalctl -f -u quagga

Oct 28 21:31:44 leaf01 quagga[1076]: Starting Quagga monitor daemon: watchquagga.

Oct 28 21:31:44 leaf01 quagga[1076]: Exiting from the script

Oct 28 21:31:44 leaf01 watchquagga[1130]: watchquagga 0.99.24+cl3eau5 watching [zebra bgpd ], mode [phased zebra restart]

Oct 28 21:31:45 leaf01 watchquagga[1130]: bgpd state -> up : connect succeeded

Oct 28 21:31:45 leaf01 watchquagga[1130]: zebra state -> up : connect succeeded

2016/11/03 16:49:26.613476 BGP: %ADJCHANGE: neighbor swp1 Up

2016/11/03 16:49:26.613527 BGP: %ADJCHANGE: neighbor swp2 Up

2016/11/03 16:49:26.613545 BGP: %ADJCHANGE: neighbor swp3 Up](https://image.slidesharecdn.com/cumulusdellwebinarfinal1-161129210340/85/Building-Scalable-Data-Center-Networks-13-320.jpg)
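The `%ADJCHANGE` lines in the log above are the quickest signal of a BGP session coming up. A minimal Python sketch for pulling those events out of log text follows. It assumes each line has the shape shown on the slide (timestamp, `BGP: %ADJCHANGE: neighbor`, neighbor name, state word); the helper names `adjacency_events` and `last_state` are hypothetical and chosen for this example only.

```python
import re
from datetime import datetime

# Sample lines copied from the slide's quagga.log excerpt.
LOG = """\
2016/11/03 16:49:26.613476 BGP: %ADJCHANGE: neighbor swp1 Up
2016/11/03 16:49:26.613527 BGP: %ADJCHANGE: neighbor swp2 Up
2016/11/03 16:49:26.613545 BGP: %ADJCHANGE: neighbor swp3 Up
"""

# Assumed line shape: "<date> <time> BGP: %ADJCHANGE: neighbor <name> <state>".
ADJ_RE = re.compile(
    r"^(\d{4}/\d{2}/\d{2} \d{2}:\d{2}:\d{2}\.\d+) "
    r"BGP: %ADJCHANGE: neighbor (\S+) (\S+)"
)


def adjacency_events(text):
    """Return (timestamp, neighbor, state) tuples for each ADJCHANGE line."""
    events = []
    for line in text.splitlines():
        match = ADJ_RE.match(line)
        if match:
            stamp = datetime.strptime(match.group(1), "%Y/%m/%d %H:%M:%S.%f")
            events.append((stamp, match.group(2), match.group(3)))
    return events


def last_state(events):
    """Return the most recent state seen for each neighbor."""
    state = {}
    for _, neighbor, status in sorted(events):
        state[neighbor] = status
    return state


for neighbor, status in last_state(adjacency_events(LOG)).items():
    print(neighbor, status)
```

Lines that do not match the pattern, such as the `watchquagga` startup messages, are skipped rather than treated as errors.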

![Troubleshooting BGP

Show ip bgp summary

leaf01# show ip bgp summary

BGP router identifier 1.1.1.1, local AS number 65001 vrf-id 0

BGP table version 2

RIB entries 5, using 640 bytes of memory

Peers 2, using 42 KiB of memory

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

spine01(swp1) 4 65000 99 100 0 0 0 00:04:37 1

spine02(swp2) 4 65000 46 48 0 0 0 00:02:02 1

spine03(swp3) 4 65000 87 88 0 0 0 00:01:04 1

Total number of neighbors 3

leaf01# show ip bgp nei spine01

BGP neighbor on swp1: fe80::4638:39ff:fe00:5c, remote AS 65000, local AS 65001, external link

Hostname: spine01

BGP version 4, remote router ID 10.10.2.1

[snip]](https://image.slidesharecdn.com/cumulusdellwebinarfinal1-161129210340/85/Building-Scalable-Data-Center-Networks-14-320.jpg)
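When checking many switches, the neighbor table from `show ip bgp summary` can be read programmatically. The Python sketch below parses the table rows copied from the slide and reports, per peer, whether the session is established. It relies on the last column holding a prefix count for an established session and a state name otherwise; the function name `parse_summary` and the dictionary keys are assumptions made for this example.

```python
# Neighbor table copied from the slide's `show ip bgp summary` output.
SUMMARY = """\
Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd
spine01(swp1) 4 65000 99 100 0 0 0 00:04:37 1
spine02(swp2) 4 65000 46 48 0 0 0 00:02:02 1
spine03(swp3) 4 65000 87 88 0 0 0 00:01:04 1
"""


def parse_summary(text):
    """Return one dict per neighbor row in the summary table."""
    peers = []
    for line in text.splitlines():
        # Ten columns; the last keeps any trailing words (multi-word states).
        fields = line.split(None, 9)
        if len(fields) != 10:
            continue
        if not (fields[1].isdigit() and fields[2].isdigit()):
            continue  # header row or an unrelated line
        last = fields[9].strip()
        established = last.isdigit()
        peers.append({
            "neighbor": fields[0],
            "remote_as": int(fields[2]),
            "up_down": fields[8],
            "established": established,
            "prefixes": int(last) if established else None,
            "state": "Established" if established else last,
        })
    return peers


for peer in parse_summary(SUMMARY):
    print(peer["neighbor"], peer["state"], peer["prefixes"])
```

A peer whose `established` flag is false is the natural place to continue with `show ip bgp nei` for that neighbor.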