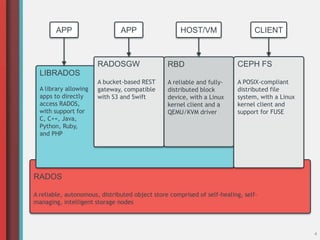

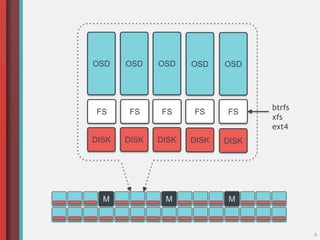

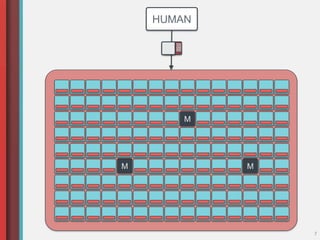

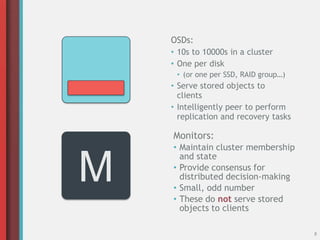

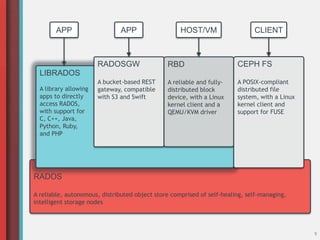

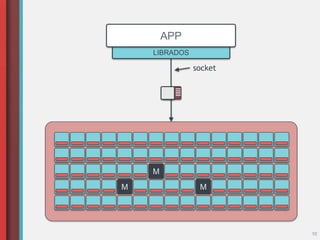

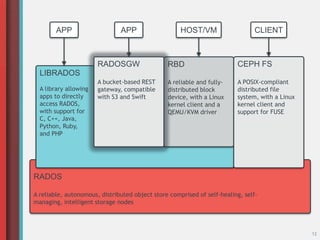

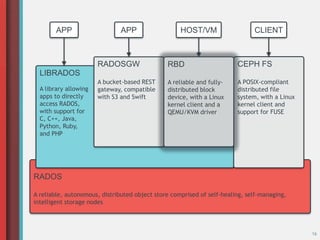

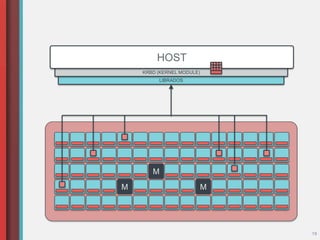

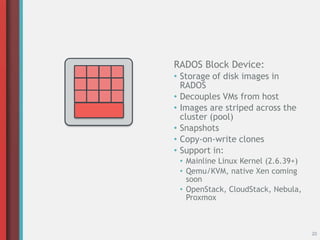

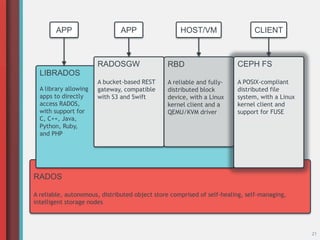

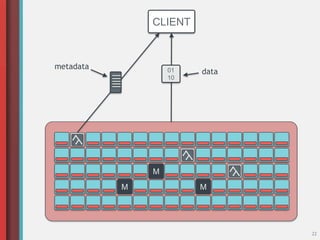

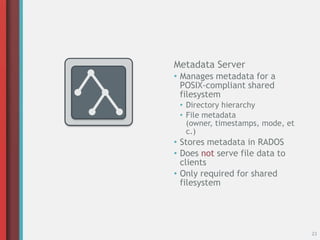

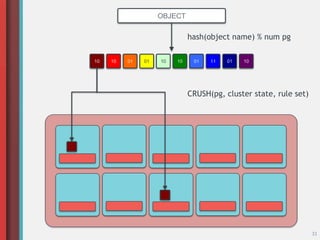

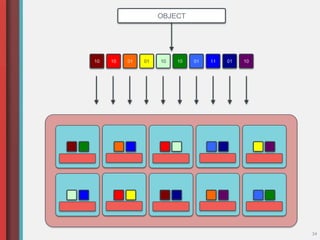

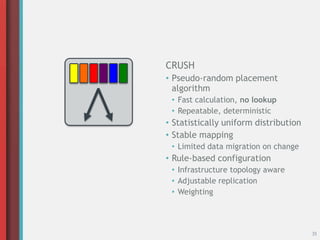

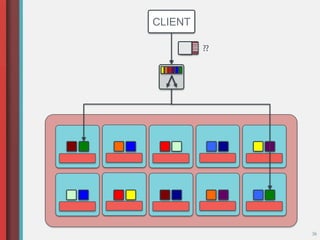

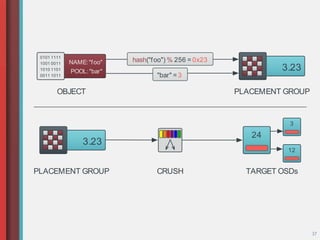

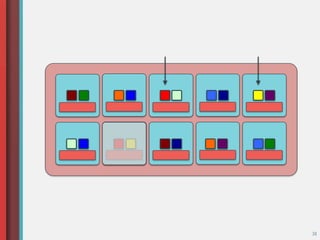

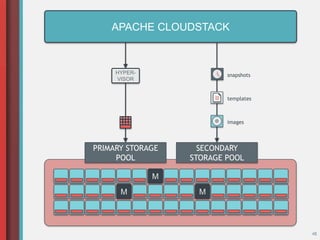

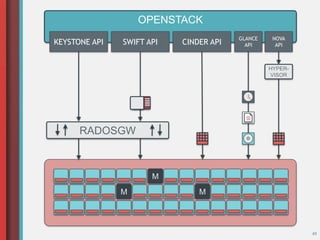

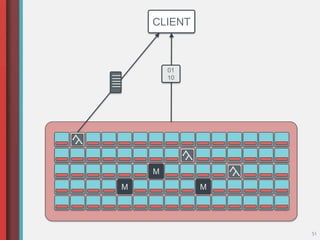

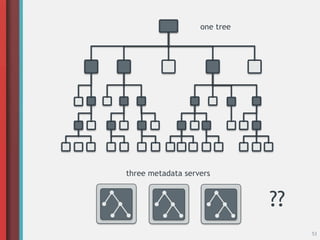

Ceph is an open-source distributed storage system that provides object, block, and file storage in a single unified cluster. It uses CRUSH, a pseudo-random data distribution algorithm, to compute where data and metadata are stored across the cluster. Because any client can calculate a location rather than look it up in a central table, the design avoids a single point of failure and supports high performance, reliability, and availability. Typical uses include virtual machine images (block storage), shared file systems (CephFS), and S3- and Swift-compatible object storage through the RADOS Gateway. Ceph also integrates with cloud platforms such as OpenStack and CloudStack.
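
To make the idea of computed placement concrete, here is a minimal Python sketch. It is not the CRUSH algorithm itself: real CRUSH works on a weighted, hierarchical cluster map with placement rules and placement groups. The sketch substitutes plain rendezvous (highest-random-weight) hashing, which shares the key property that placement is a deterministic function of the object name and the device list. The names `place` and `_score`, and the `osd.N` labels used as inputs, are hypothetical and chosen only for illustration.

```python
import hashlib


def _score(object_name: str, osd: str) -> int:
    """Deterministic pseudo-random score for an (object, device) pair."""
    digest = hashlib.sha256(f"{object_name}:{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, osds: list[str], replicas: int = 3) -> list[str]:
    """Pick `replicas` distinct devices for an object by rendezvous hashing.

    Illustrative stand-in for CRUSH: every caller with the same device
    list computes the same answer, so no central lookup table is needed.
    """
    if replicas > len(osds):
        raise ValueError("not enough devices for the requested replica count")
    ranked = sorted(osds, key=lambda osd: _score(object_name, osd), reverse=True)
    return ranked[:replicas]


if __name__ == "__main__":
    devices = [f"osd.{i}" for i in range(6)]
    print(place("vm-image-001", devices))
```

With this scheme, removing a device that does not hold a given object leaves that object's placement unchanged, which loosely mirrors why computed placement limits data movement when a cluster changes.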