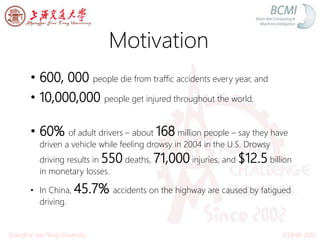

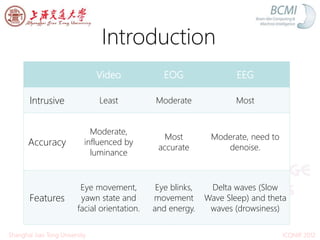

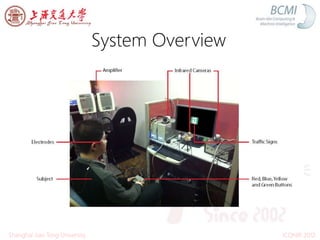

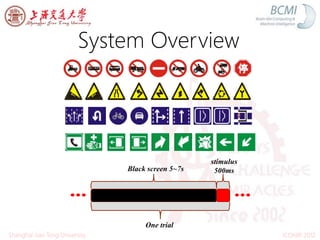

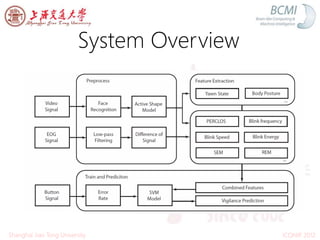

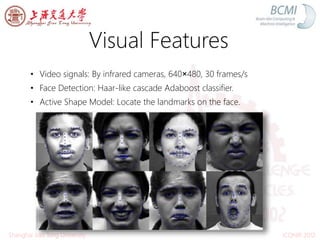

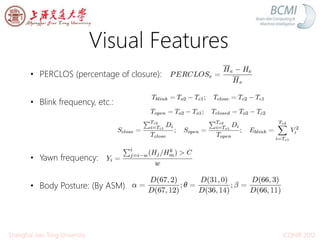

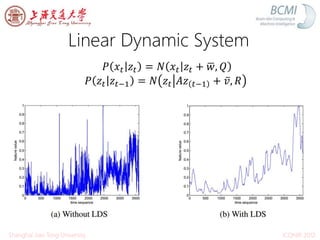

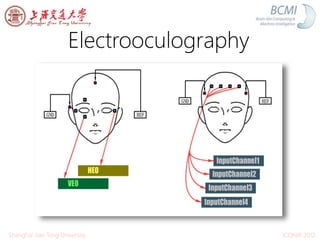

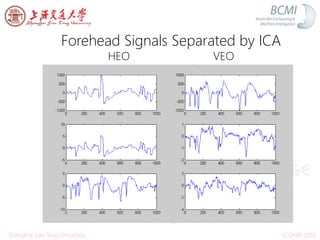

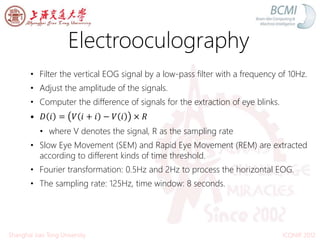

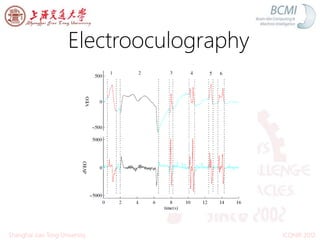

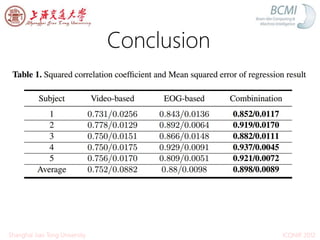

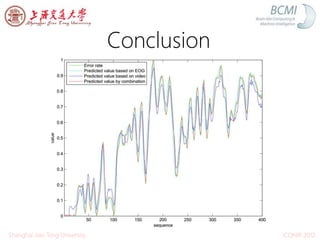

The document describes a system for detecting driver drowsiness from video and electrooculography (EOG) features, motivated by the high incidence of traffic accidents caused by fatigued driving. The video analysis uses eye movement, yawn state, and facial orientation as features, and EOG signals are processed alongside them to improve detection accuracy. Future work aims to make the system more robust and to add more comprehensive features for assessing driver vigilance.