This document discusses convolutional neural networks for image classification and their application to the Kaggle National Data Science Bowl competition. It provides an overview of CNNs and their effectiveness for computer vision tasks. It then details various CNN architectures, preprocessing techniques, and ensembling methods that were tested on the competition dataset, achieving a top score of 0.609 log loss. The document concludes with highlights of the winning team's solution, including novel pooling methods and knowledge distillation.

![LeNet-5](https://image.slidesharecdn.com/kaggle-plankton-150325055010-conversion-gate01/75/Convolutional-neural-networks-for-image-classification-evidence-from-Kaggle-National-Data-Science-Bowl-12-2048.jpg)

LeNet-5 architecture.[6] No other layer types are necessary. The idea of building the network from convolutional layers only was first proposed by LeCun[7] in 1989 and was recently revived by Springenberg et al. in “The All Convolutional Net”.[8]

[6] http://eblearn.sourceforge.net/beginner_tutorial2_train.html

[7] https://www.facebook.com/yann.lecun/posts/10152766574417143

[8] J. T. Springenberg et al. “Striving for Simplicity: The All Convolutional Net”. In: ArXiv e-prints (2014). arXiv: 1412.6806 [cs.LG].
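The all-convolutional view rests on a simple identity. A fully connected layer applied to a C×H×W feature map computes exactly what a convolution with C×H×W kernels computes at its single valid position. The minimal NumPy sketch below checks this numerically. `conv2d_valid` and `fully_connected` are hypothetical helpers written for illustration, and the shapes are arbitrary.

```python
import numpy as np


def conv2d_valid(x, w):
    """Naive stride-1 'valid' convolution (cross-correlation, as in CNN libraries).

    x: input feature map, shape (C_in, H, W)
    w: kernels, shape (C_out, C_in, kH, kW)
    returns: shape (C_out, H - kH + 1, W - kW + 1)
    """
    _, h, wd = x.shape
    c_out, _, kh, kw = w.shape
    out = np.zeros((c_out, h - kh + 1, wd - kw + 1))
    for i in range(h - kh + 1):
        for j in range(wd - kw + 1):
            patch = x[:, i:i + kh, j:j + kw]
            # Contract over (C_in, kH, kW) for every output channel at once
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out


def fully_connected(x, weights):
    """Dense layer on the flattened feature map; weights has shape (n_out, C*H*W)."""
    return weights @ x.ravel()


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 5, 5))           # a 16-channel 5x5 feature map
w_fc = rng.standard_normal((10, 16 * 5 * 5))  # dense layer with 10 outputs

dense_out = fully_connected(x, w_fc)
# The same weights, viewed as ten 16x5x5 kernels, give a 10x1x1 convolution output
conv_out = conv2d_valid(x, w_fc.reshape(10, 16, 5, 5))
print(np.allclose(dense_out, conv_out[:, 0, 0]))  # True
```

By the same reasoning, a dense layer applied at every spatial position is a 1×1 convolution. Springenberg et al. likewise replace max-pooling layers with convolutions of larger stride.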

![Regularization methods](https://image.slidesharecdn.com/kaggle-plankton-150325055010-conversion-gate01/75/Convolutional-neural-networks-for-image-classification-evidence-from-Kaggle-National-Data-Science-Bowl-21-2048.jpg)

Table 5: 5-layer network experiments with 64×64 input images and LeakyReLU activations. Every run uses horizontal and vertical mirroring plus scaling and rotation as augmentation.

| Augmentation | Variant | Val. log loss |
|---|---|---|
| h+v mirror, scale + rot | vanilla | 1.08 |
| h+v mirror, scale + rot | PReLU[10] (but slows training down a lot) | 1.03 |
| h+v mirror, scale + rot | BatchNorm[11] | 1.10 |
| h+v mirror, scale + rot | StochPool[12] | 0.98 |

[10] K. He et al. “Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification”. In: ArXiv e-prints (2015). arXiv: 1502.01852 [cs.CV].

[11] S. Ioffe and C. Szegedy. “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift”. In: ArXiv e-prints (2015). arXiv: 1502.03167 [cs.LG].

[12] M. D. Zeiler and R. Fergus. “Stochastic Pooling for Regularization of Deep Convolutional Neural Networks”. In: ArXiv e-prints (2013). arXiv: 1301.3557 [cs.LG].
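Stochastic pooling has the lowest validation log loss in Table 5. In Zeiler and Fergus's formulation, the activations a_i of each pooling region are normalized into probabilities p_i = a_i / Σ_k a_k. During training, one activation is sampled per region. At test time, the probability-weighted average Σ_i p_i a_i is used instead.

The minimal single-channel NumPy sketch below makes three assumptions:
- Activations are non-negative, as with the ReLU units of the original paper. The slide does not say how the negative outputs of LeakyReLU were handled.
- Regions are 2×2 and non-overlapping.
- The function name is hypothetical.

```python
import numpy as np


def stochastic_pool(x, size=2, train=True, rng=None):
    """Stochastic pooling over non-overlapping size x size regions of one channel.

    x: (H, W) array of non-negative activations; H and W must be divisible by size.
    """
    if rng is None:
        rng = np.random.default_rng()
    h, w = x.shape
    k = size * size
    # Rearrange into (H/size, W/size, size*size): one row of activations per region
    regions = (x.reshape(h // size, size, w // size, size)
                .transpose(0, 2, 1, 3)
                .reshape(h // size, w // size, k))
    totals = regions.sum(axis=-1, keepdims=True)
    # p_i = a_i / sum_k a_k; an all-zero region gets a uniform distribution (its output is 0 anyway)
    safe_totals = np.where(totals > 0, totals, 1.0)
    probs = np.where(totals > 0, regions / safe_totals, 1.0 / k)
    if not train:
        # Test time: probability-weighted average of each region's activations
        return (probs * regions).sum(axis=-1)
    # Training: sample one location per region from the multinomial given by probs
    cum = probs.cumsum(axis=-1)
    u = rng.random(cum.shape[:-1] + (1,)) * cum[..., -1:]
    idx = np.minimum((cum <= u).sum(axis=-1), k - 1)  # guard against float round-off
    return np.take_along_axis(regions, idx[..., None], axis=-1)[..., 0]


x = np.array([[1., 3., 0., 0.],
              [0., 0., 0., 0.],
              [2., 2., 5., 0.],
              [0., 0., 0., 5.]])
print(stochastic_pool(x, train=False))  # region-wise weighted averages 2.5, 0.0, 2.0, 5.0
print(stochastic_pool(x, train=True, rng=np.random.default_rng(0)))
```

For a multi-channel feature map, apply the function to each channel separately.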

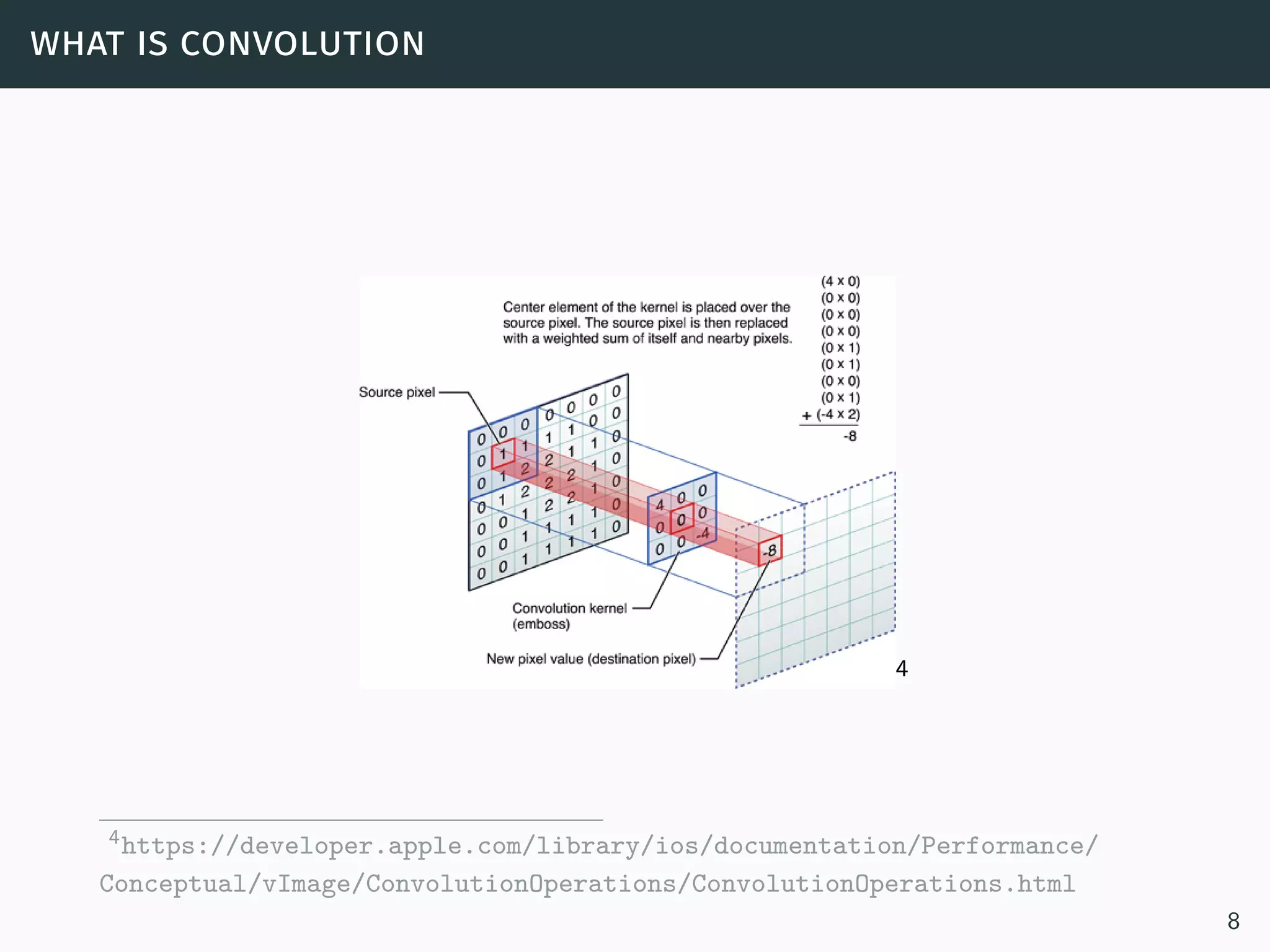

![GoogLeNet](https://image.slidesharecdn.com/kaggle-plankton-150325055010-conversion-gate01/75/Convolutional-neural-networks-for-image-classification-evidence-from-Kaggle-National-Data-Science-Bowl-26-2048.jpg)

GoogLeNet architecture.[13]

[13] C. Szegedy et al. “Going Deeper with Convolutions”. In: ArXiv e-prints (2014). arXiv: 1409.4842 [cs.CV].
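GoogLeNet is built from Inception modules. Each module runs parallel 1×1, 3×3 and 5×5 convolutions and a 3×3 max-pooling branch, then concatenates the outputs along the channel axis. 1×1 convolutions placed before the 3×3 and 5×5 convolutions, and after the pooling, keep the channel count down.

The sketch below does only the bookkeeping: output width and weight count, with biases ignored. It compares the reduced module against the "naïve" module without reductions. The widths for inception (3a) are those listed by Szegedy et al.; the helper names are hypothetical.

```python
def conv_weights(c_in, c_out, k):
    """Weights in a k x k convolution from c_in to c_out channels (biases ignored)."""
    return c_in * c_out * k * k


def inception_module(c_in, n1x1, n3x3_reduce, n3x3, n5x5_reduce, n5x5, pool_proj):
    """Output channels and weight count of an Inception module with 1x1 reductions."""
    out_channels = n1x1 + n3x3 + n5x5 + pool_proj  # branch outputs are concatenated
    weights = (conv_weights(c_in, n1x1, 1)
               + conv_weights(c_in, n3x3_reduce, 1) + conv_weights(n3x3_reduce, n3x3, 3)
               + conv_weights(c_in, n5x5_reduce, 1) + conv_weights(n5x5_reduce, n5x5, 5)
               + conv_weights(c_in, pool_proj, 1))  # 1x1 projection after max pooling
    return out_channels, weights


def naive_module(c_in, n1x1, n3x3, n5x5):
    """Same branches without 1x1 reductions; the pooling branch passes all c_in channels."""
    out_channels = n1x1 + n3x3 + n5x5 + c_in
    weights = (conv_weights(c_in, n1x1, 1) + conv_weights(c_in, n3x3, 3)
               + conv_weights(c_in, n5x5, 5))
    return out_channels, weights


# inception (3a): 192 input channels; branches 64 | 96->128 | 16->32 | pool->32
print(inception_module(192, 64, 96, 128, 16, 32, 32))  # (256, 163328)
print(naive_module(192, 64, 128, 32))                  # (416, 387072)
```

The reductions cut the weight count by more than half, and they also stop the pooling branch from inflating the output width as modules are stacked.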

![Internal ensemble](https://image.slidesharecdn.com/kaggle-plankton-150325055010-conversion-gate01/75/Convolutional-neural-networks-for-image-classification-evidence-from-Kaggle-National-Data-Science-Bowl-28-2048.jpg)

Internal ensemble: take the mean of all auxiliary classifiers instead of just throwing them away.[14] In the tables, “clf on X” is the classifier attached to layer X, and “average” is the mean of those classifiers.

Table 6: GoogLeNet, validation loss

| Name | Public LB |
|---|---|
| clf on inc3 | 0.722 |
| clf on inc4a | 0.754 |
| clf on inc4b | 0.757 |
| clf on inc5b | 0.855 |
| average | 0.693 |

Table 7: VGGNet, validation loss

| Name | Public LB |
|---|---|
| clf on pool4 | 0.762 |
| clf on pool5 | 0.657 |
| clf on fc7 | 0.707 |
| average | 0.630 |

[14] J. Xie, B. Xu, and Z. Chuang. “Horizontal and Vertical Ensemble with Deep Representation for Classification”. In: ArXiv e-prints (2013). arXiv: 1306.2759 [cs.LG].
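Averaging the heads amounts to averaging their predicted class probabilities. This is safe because log loss is convex in the predicted probabilities. By Jensen's inequality, the log loss of the averaged prediction is never larger than the mean of the heads' individual losses. It can still be worse than the best single head, although in Tables 6 and 7 it beats all of them.

The minimal NumPy sketch below assumes each head outputs softmax probabilities. The function names, the toy numbers and the clipping constant `eps` are illustrative assumptions.

```python
import numpy as np


def multiclass_log_loss(y_true, probs, eps=1e-15):
    """Mean multiclass log loss.

    y_true: (N,) integer class labels; probs: (N, K) predicted class probabilities.
    Probabilities are clipped to [eps, 1 - eps] and renormalized per row.
    """
    p = np.clip(probs, eps, 1 - eps)
    p = p / p.sum(axis=1, keepdims=True)
    return -np.mean(np.log(p[np.arange(len(y_true)), y_true]))


def internal_ensemble(head_probs):
    """Average the softmax outputs of several classifier heads of one network."""
    return np.mean(np.stack(head_probs), axis=0)


y = np.array([0, 1])
heads = [np.array([[0.8, 0.2], [0.4, 0.6]]),  # e.g. an auxiliary head
         np.array([[0.6, 0.4], [0.2, 0.8]])]  # e.g. the final head
per_head = [multiclass_log_loss(y, p) for p in heads]
ensemble = multiclass_log_loss(y, internal_ensemble(heads))
print(per_head, ensemble)  # the ensemble loss is lower than either head's here
```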

![VGGNet](https://image.slidesharecdn.com/kaggle-plankton-150325055010-conversion-gate01/75/Convolutional-neural-networks-for-image-classification-evidence-from-Kaggle-National-Data-Science-Bowl-30-2048.jpg)

VGGNet architectures.[16] Differences from the original:
- dropout (0.3) in the convolutional layers
- SPP pooling for pool5
- LeakyReLU activations
- auxiliary classifiers

[16] K. Simonyan and A. Zisserman. “Very Deep Convolutional Networks for Large-Scale Image Recognition”. In: ArXiv e-prints (Sept. 2014). arXiv: 1409.1556 [cs.CV].
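Two of the listed changes, dropout in the convolutional layers and LeakyReLU, are short enough to sketch. This minimal NumPy sketch makes three assumptions that the slide does not specify:
- 0.3 is the drop probability, not the keep probability.
- Dropout zeroes individual activations rather than whole feature maps.
- The LeakyReLU slope is 0.01.

The function names are hypothetical.

```python
import numpy as np


def leaky_relu(x, alpha=0.01):
    """LeakyReLU: x for x > 0, alpha * x otherwise (alpha is an assumed slope)."""
    return np.where(x > 0, x, alpha * x)


def conv_dropout(x, p=0.3, train=True, rng=None):
    """Inverted dropout on a conv feature map of shape (C, H, W).

    Each activation is zeroed independently with probability p during training and the
    survivors are scaled by 1 / (1 - p), so no rescaling is needed at test time.
    """
    if not train or p == 0:
        return x
    if rng is None:
        rng = np.random.default_rng()
    keep = 1.0 - p
    mask = rng.random(x.shape) < keep
    return x * mask / keep


rng = np.random.default_rng(0)
fmap = leaky_relu(rng.standard_normal((64, 32, 32)))
out = conv_dropout(fmap, p=0.3, rng=rng)
print((out == 0).mean())  # fraction of dropped activations, close to 0.3
```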

![Spatial pyramid pooling](https://image.slidesharecdn.com/kaggle-plankton-150325055010-conversion-gate01/75/Convolutional-neural-networks-for-image-classification-evidence-from-Kaggle-National-Data-Science-Bowl-31-2048.jpg)

Spatial pyramid pooling.[17]

[17] K. He et al. “Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition”. In: ArXiv e-prints (2014). arXiv: 1406.4729 [cs.CV].
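Spatial pyramid pooling replaces the last pooling layer with max pooling over a fixed pyramid of grids. The result is a fixed-length vector for any input size, which is what allows it to stand in for pool5 in the VGGNet variant described above.

The minimal NumPy sketch below makes two assumptions:
- The pyramid levels are (4, 2, 1). The levels used in these experiments are not given.
- Bin edges are floor(i·H/n) to ceil((i+1)·H/n). He et al. specify their bins through window and stride sizes instead.

The function names are hypothetical.

```python
import numpy as np


def ceil_div(a, b):
    """Integer ceiling of a / b for non-negative a and positive b."""
    return -(-a // b)


def spatial_pyramid_pool(fmap, levels=(4, 2, 1)):
    """Spatial pyramid max pooling of a (C, H, W) feature map.

    Level n splits the map into an n x n grid of bins and max-pools each bin, so the
    output length is C * sum(n * n for n in levels) whatever H and W are.
    """
    _, h, w = fmap.shape
    pooled = []
    for n in levels:
        for i in range(n):
            # Rows floor(i*h/n) .. ceil((i+1)*h/n) - 1; a bin is never empty
            r0, r1 = (i * h) // n, ceil_div((i + 1) * h, n)
            for j in range(n):
                c0, c1 = (j * w) // n, ceil_div((j + 1) * w, n)
                pooled.append(fmap[:, r0:r1, c0:c1].max(axis=(1, 2)))
    return np.concatenate(pooled)


rng = np.random.default_rng(0)
for shape in [(256, 13, 13), (256, 9, 17)]:
    print(spatial_pyramid_pool(rng.standard_normal(shape)).shape)  # (5376,) both times
```

The 1×1 level is plain global max pooling, so the last C entries of the vector are the per-channel maxima.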