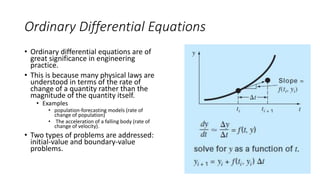

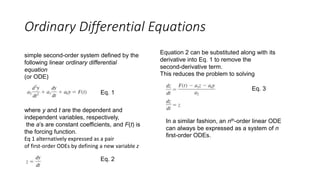

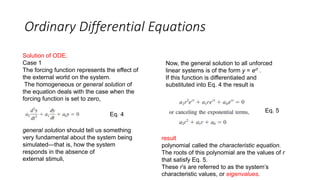

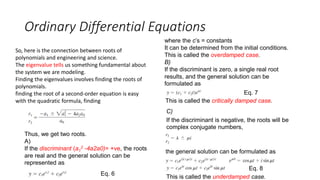

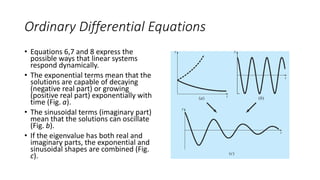

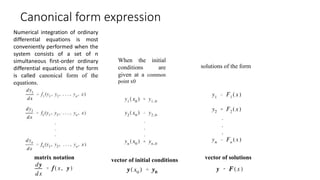

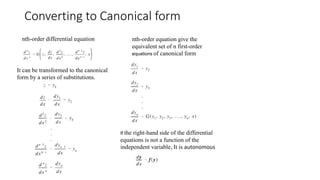

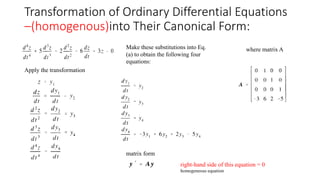

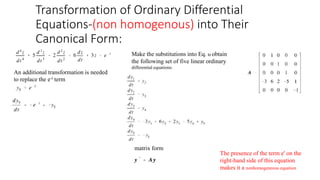

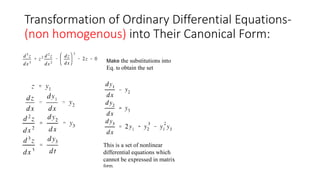

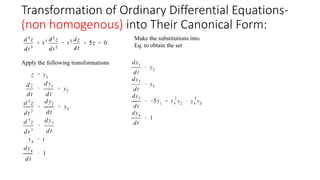

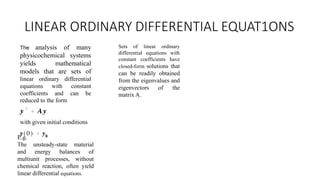

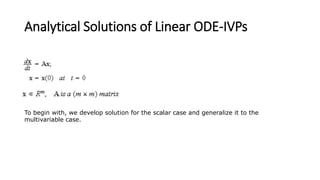

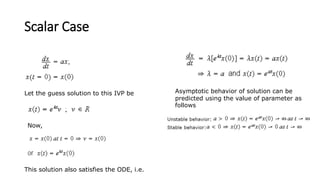

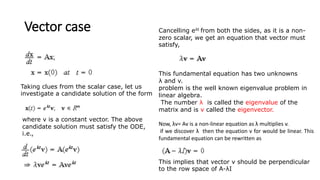

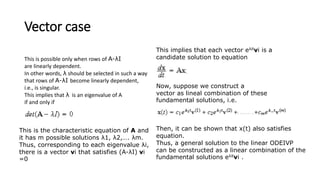

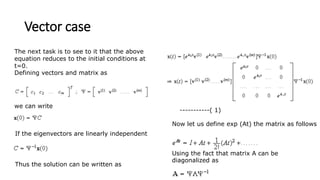

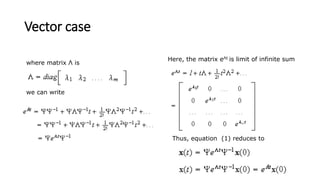

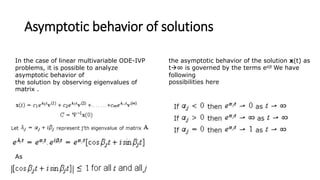

This document discusses analytical solutions of linear ordinary differential equation initial value problems (ODE-IVPs). It begins by introducing the scalar and vector cases of linear ODE-IVPs. For the scalar case, it shows that the solution has the exponential form $e^{at}$, where $a$ is the scalar coefficient. For the vector case, it shows that the fundamental solutions have the form $e^{\lambda t} v$, where $\lambda$ is an eigenvalue of the coefficient matrix $A$ and $v$ is the corresponding eigenvector. It then discusses how to determine the eigenvalues by solving the characteristic equation of $A$, $\det(A - \lambda I) = 0$, and how to obtain the eigenvector belonging to each eigenvalue. Finally, it expresses the general solution as a linear combination of the fundamental solutions $e^{\lambda t} v$, with the coefficients chosen so that the initial conditions are satisfied.
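The procedure summarized above can be illustrated with a minimal Python sketch for a 2×2 system $dx/dt = Ax$, $x(0) = x_0$. This is not taken from the document itself: the function names `eig_2x2` and `solve_linear_ivp` are hypothetical, and the sketch assumes that $A$ is real with two distinct real eigenvalues. It finds the eigenvalues from the characteristic equation $\lambda^2 - \mathrm{tr}(A)\,\lambda + \det(A) = 0$, builds an eigenvector for each, and then picks the coefficients of the linear combination so that the initial condition holds.

```python
import math


def eig_2x2(A):
    """Eigenpairs of a real 2x2 matrix from its characteristic equation.

    Assumption of this sketch: two distinct real eigenvalues.
    """
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    # Discriminant of lambda^2 - trace*lambda + det = 0.
    disc = trace * trace - 4.0 * det
    if disc <= 0:
        raise ValueError("sketch handles distinct real eigenvalues only")
    root = math.sqrt(disc)
    pairs = []
    for lam in ((trace + root) / 2.0, (trace - root) / 2.0):
        # Solve (A - lam*I) v = 0 using an off-diagonal entry that is nonzero.
        if b != 0:
            v = (b, lam - a)
        elif c != 0:
            v = (lam - d, c)
        else:
            # Diagonal A: the eigenvectors are the coordinate axes.
            v = (1.0, 0.0) if abs(lam - a) < abs(lam - d) else (0.0, 1.0)
        pairs.append((lam, v))
    return pairs


def solve_linear_ivp(A, x0, t):
    """Evaluate x(t) = c1*exp(lam1*t)*v1 + c2*exp(lam2*t)*v2 with x(0) = x0."""
    (lam1, v1), (lam2, v2) = eig_2x2(A)
    # Coefficients from c1*v1 + c2*v2 = x0, solved with Cramer's rule.
    det_v = v1[0] * v2[1] - v2[0] * v1[1]
    c1 = (x0[0] * v2[1] - v2[0] * x0[1]) / det_v
    c2 = (v1[0] * x0[1] - x0[0] * v1[1]) / det_v
    e1, e2 = math.exp(lam1 * t), math.exp(lam2 * t)
    return (
        c1 * e1 * v1[0] + c2 * e2 * v2[0],
        c1 * e1 * v1[1] + c2 * e2 * v2[1],
    )
```

For example, with the illustrative matrix `A = [[0.0, 1.0], [-2.0, -3.0]]` (eigenvalues $-1$ and $-2$) and `x0 = (1.0, 0.0)`, calling `solve_linear_ivp(A, x0, t)` evaluates $x_1(t) = 2e^{-t} - e^{-2t}$ and $x_2(t) = -2e^{-t} + 2e^{-2t}$. Repeated or complex eigenvalues need a different treatment and are deliberately left out of this sketch.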