The document discusses MPEG's work on standards for augmented reality (AR) applications. It gives an overview of MPEG, its history of creating multimedia standards, and its AR-related technologies, such as scene description, graphics compression, and sensors and actuators. It then outlines MPEG's vision for an Augmented Reality Application Format (ARAF) that combines these technologies to enable end-to-end AR experiences. Finally, it demonstrates ARAF through examples and exercises built around an AR quiz and an augmented book.

![MPEG 3DG Report

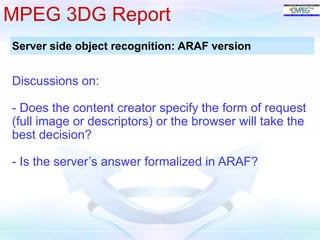

Server side object recognition: a real system*

Client Server

Query

image

[Extraction]

Descriptors

[Detection]

Key points

HTTP POST

(binary descriptor +

key points)

Query

descriptors

DB

descriptors

Matchin

g

ID

Correspondin

g Information

Error/no message

Data as String

Parse and

display the

answer

Decod

e

(5.2)

Decod

e

(1)

(2.2)

(2.1)

(3.1)

(3.2)

HTTP

Response

Descriptors,

images and

information

[DB]

(4)

(5.1)

(6)

(7)

(8’)

(8’’)

(10) (9)

Binary

Data

* Wine recognizer : GooT and IMT](https://image.slidesharecdn.com/mpegaraftutorialismar-141109073006-conversion-gate02/85/Mpeg-ARAF-tutorial-ISMAR-2014-39-320.jpg)
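In the server-side recognition flow on this slide, the client posts binary descriptors and key points, and the server matches them against its DB to return an ID with the corresponding information, or an error. The matching step can be sketched roughly as follows. This is a minimal illustration, not the GooT/IMT implementation, and it rests on several assumptions. Descriptors are binary strings stored as Python ints. Matching is a brute-force Hamming-distance nearest-neighbour search with a ratio test and per-object voting. The response is a hypothetical `ID|info` string. The names `match_query`, `db`, `info`, the thresholds, and the sample data are all invented for illustration.

```python
from collections import Counter


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_query(query_descs, db, info, max_dist=64, ratio=0.8, min_votes=3):
    """Return 'ID|info' for the best-matching DB object, or an error string.

    query_descs: list of int descriptors sent by the client (hypothetical format).
    db:   dict mapping object ID -> list of int descriptors (hypothetical layout).
    info: dict mapping object ID -> information string returned to the client.
    """
    # Flatten the DB so each descriptor remembers which object it belongs to.
    flat = [(obj_id, d) for obj_id, descs in db.items() for d in descs]
    votes = Counter()
    for q in query_descs:
        if not flat:
            break
        # Brute-force search: sort all DB descriptors by distance to q.
        dists = sorted((hamming(q, d), obj_id) for obj_id, d in flat)
        best_dist, best_id = dists[0]
        second_dist = dists[1][0] if len(dists) > 1 else None
        # Reject matches that are too far away.
        if best_dist > max_dist:
            continue
        # Ratio test: keep only matches clearly better than the runner-up.
        if second_dist is not None and best_dist >= ratio * second_dist:
            continue
        votes[best_id] += 1
    if not votes:
        return "ERROR: no match"
    obj_id, count = votes.most_common(1)[0]
    if count < min_votes:
        return "ERROR: no match"
    return f"{obj_id}|{info[obj_id]}"


# Hypothetical 16-bit descriptors; real binary descriptors are usually longer.
db = {
    "wine_A": [0b1111_0000_1111_0000, 0b1010_1010_1010_1010, 0b0000_1111_0000_1111],
    "wine_B": [0b1111_1111_0000_0000, 0b0101_0101_0101_0101, 0b1100_1100_1100_1100],
}
info = {"wine_A": "info for wine A", "wine_B": "info for wine B"}
# Each query descriptor differs by one bit from a wine_A descriptor.
query = [0b1111_0000_1111_0001, 0b1010_1010_1010_1011, 0b0000_1111_0000_1110]

print(match_query(query, db, info, max_dist=4, min_votes=2))  # -> wine_A|info for wine A
```

The brute-force search compares every query descriptor with every DB descriptor, so its cost grows linearly with the DB size. A deployed recognizer would more likely use an approximate index over the DB descriptors. The voting threshold (`min_votes`) is the point at which the server chooses between the "corresponding information" and "error/no match" branches of the diagram.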