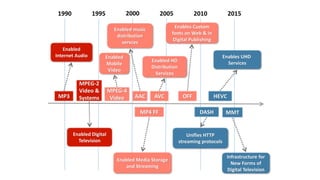

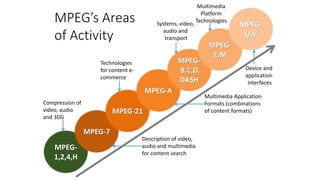

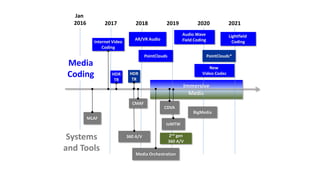

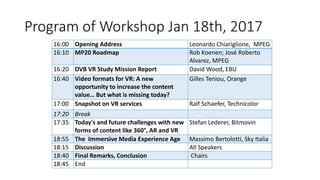

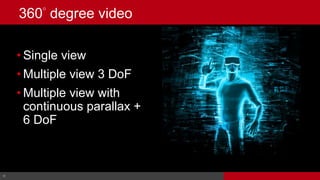

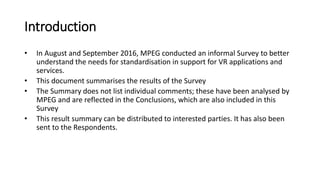

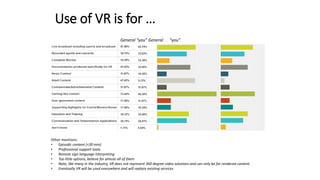

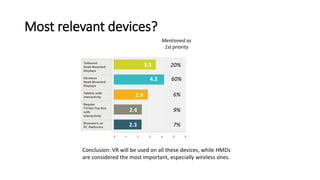

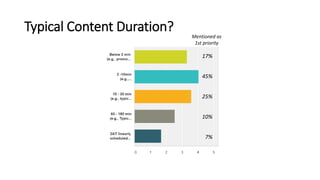

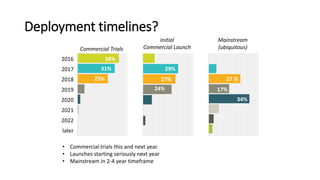

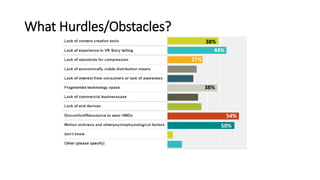

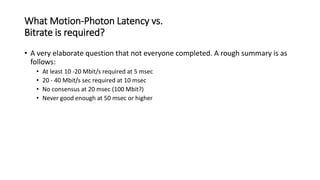

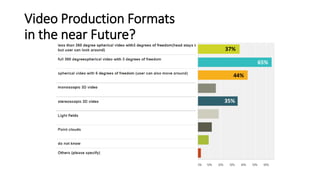

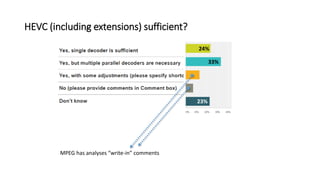

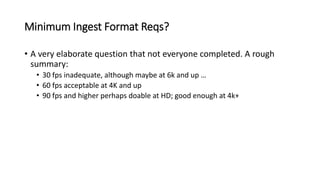

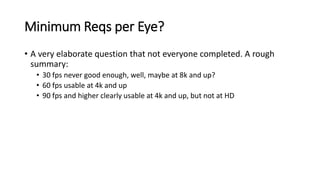

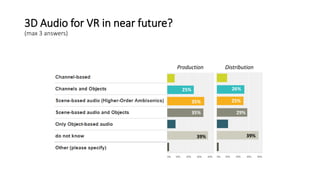

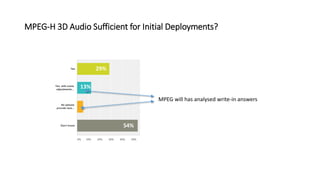

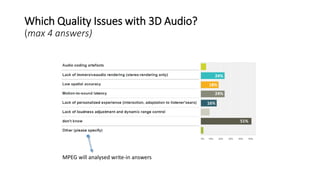

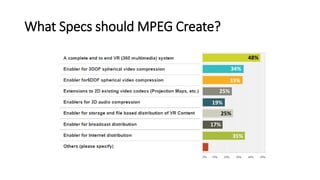

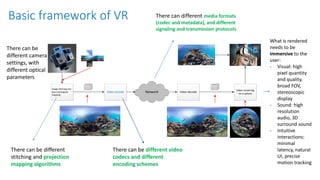

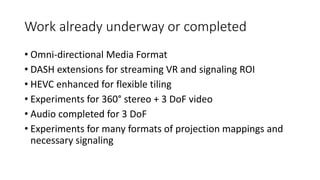

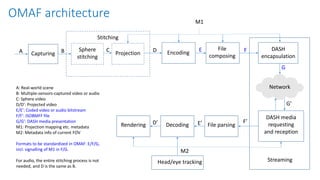

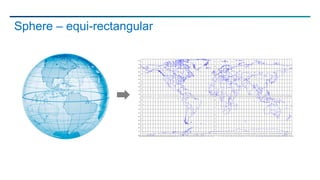

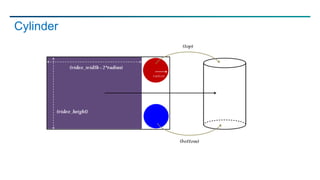

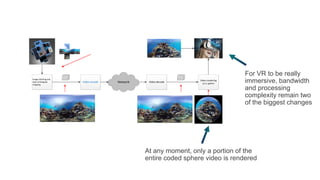

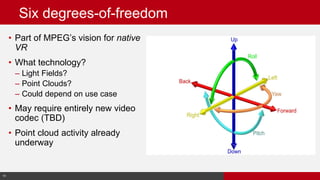

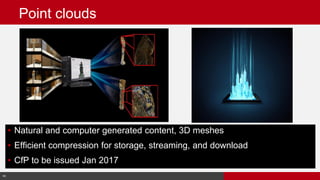

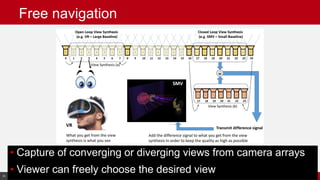

This document describes the MPEG group's work on standards for immersive media, with a focus on virtual reality (VR). It outlines the collaboration among accredited participants from various countries and presents the results of a survey on the industry's needs for VR standardization, covering video and audio coding, delivery methods, and anticipated deployment timelines. The goal is a comprehensive set of specifications that supports high-quality VR experiences and addresses the significant challenges facing the industry.