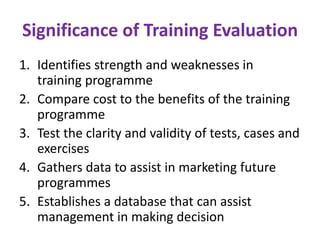

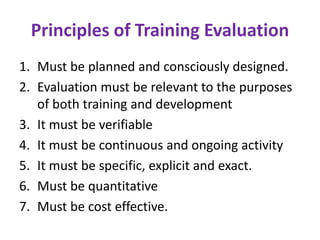

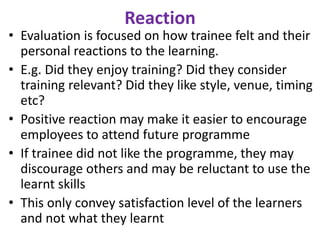

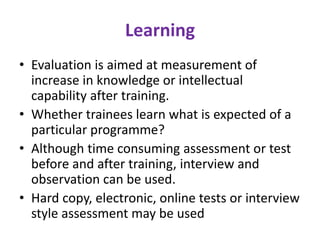

This document discusses training evaluation, including its meaning and significance. It describes Kirkpatrick's four-level model of training evaluation (reaction, learning, behavior, and results), as well as return on investment (ROI). It explains the different types of evaluation (formative and summative) and the methods used to collect evaluation data. Finally, it outlines the principles and designs of training evaluation and offers suggestions for improving evaluation practice.