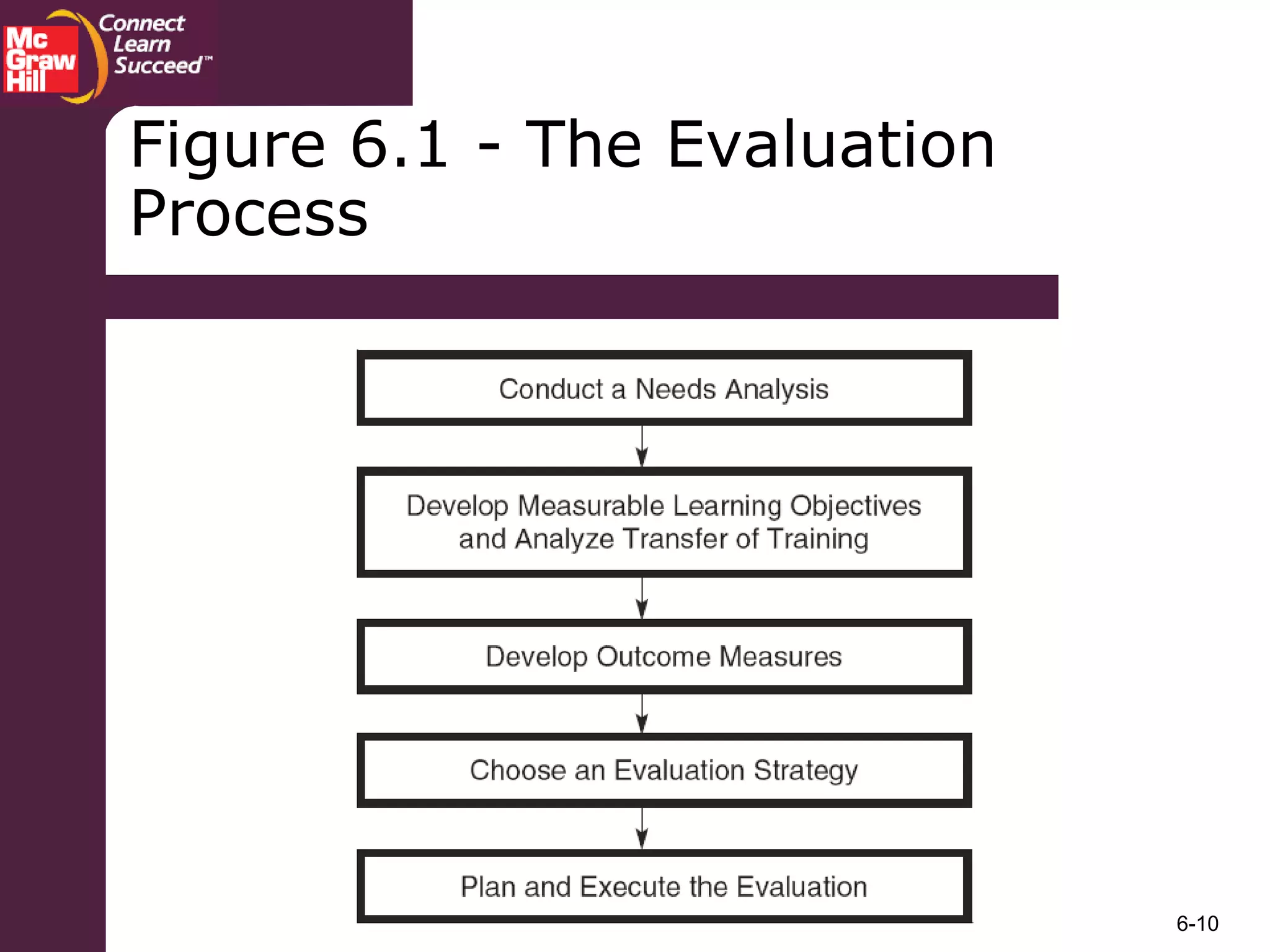

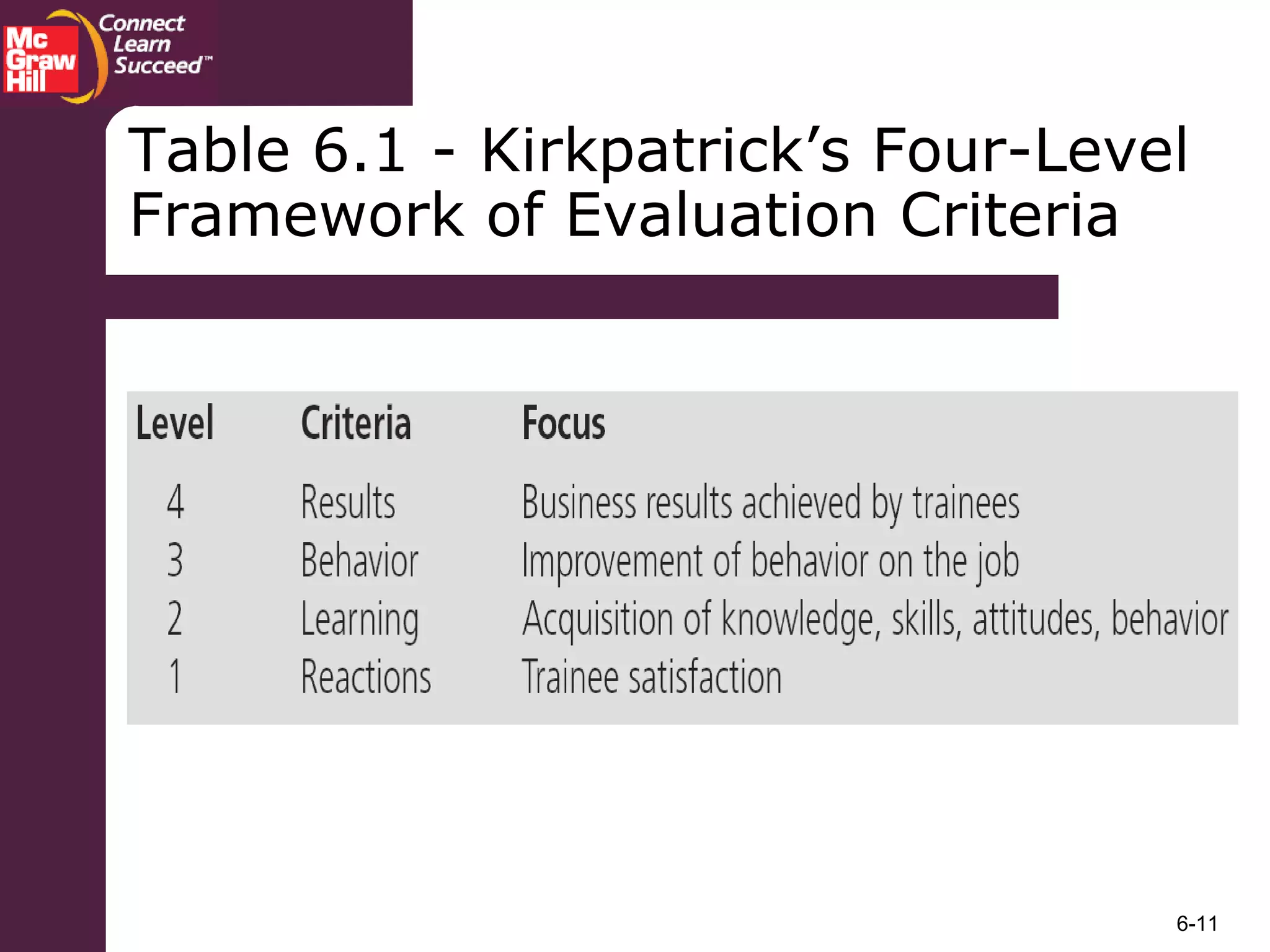

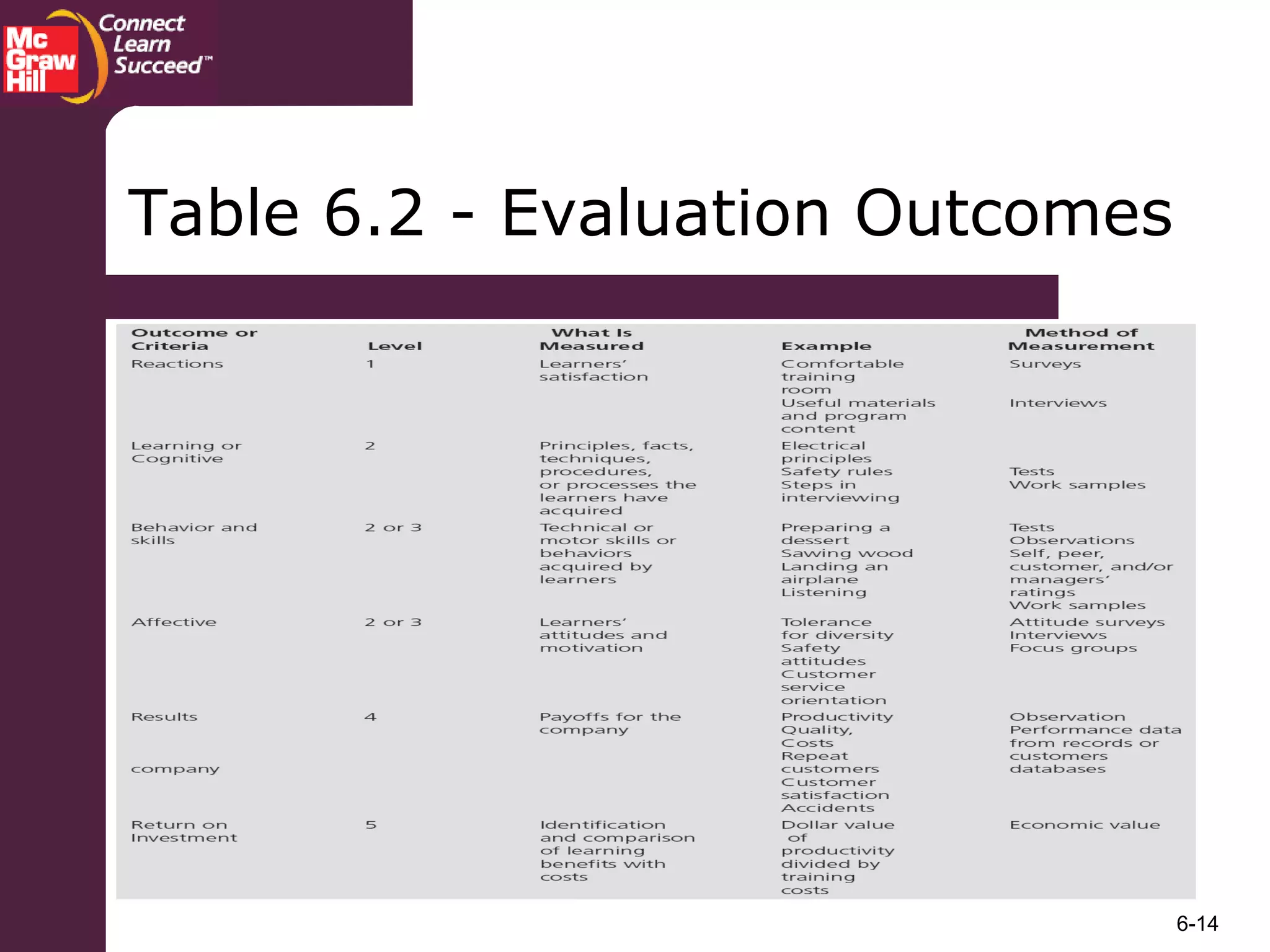

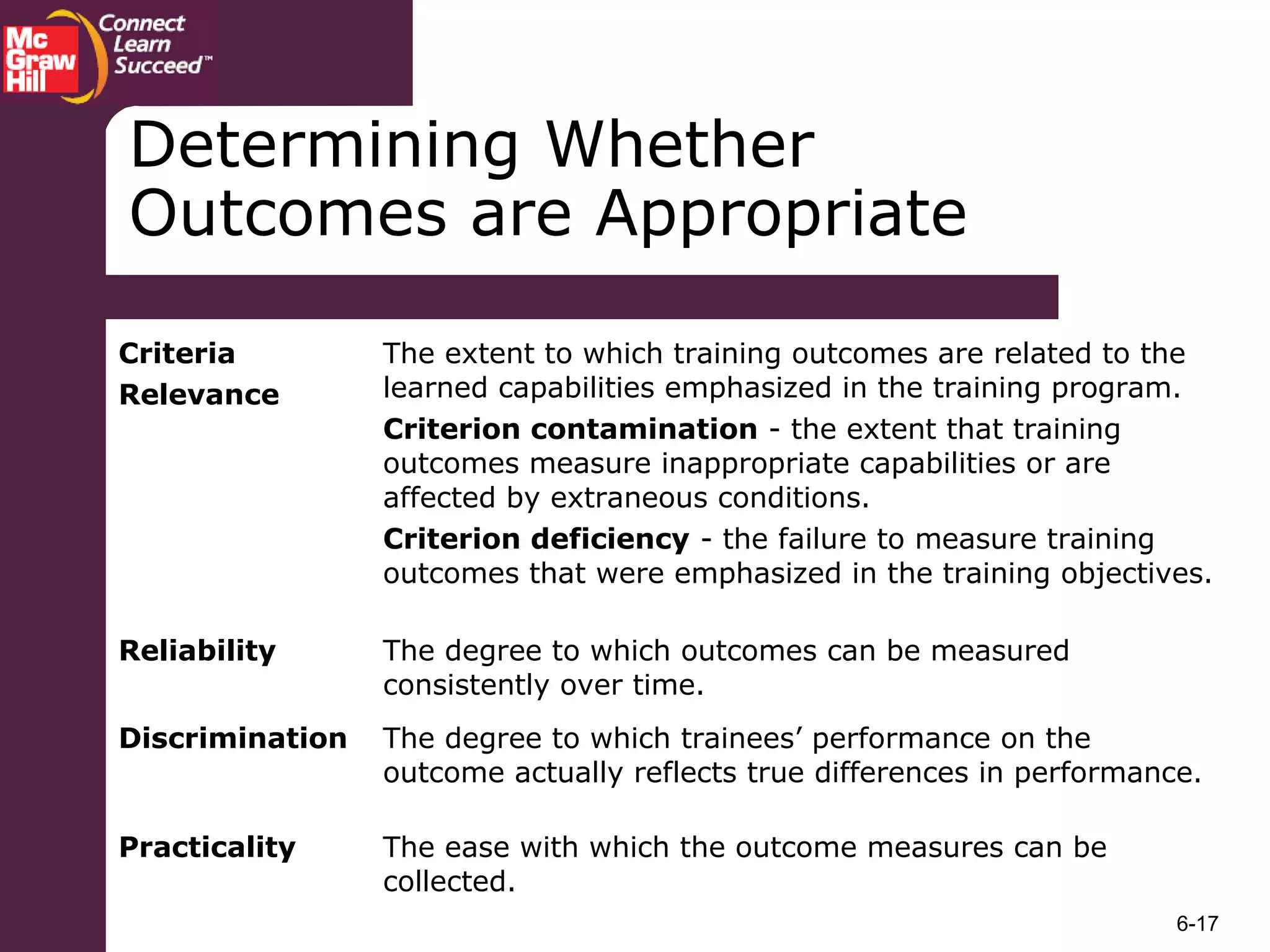

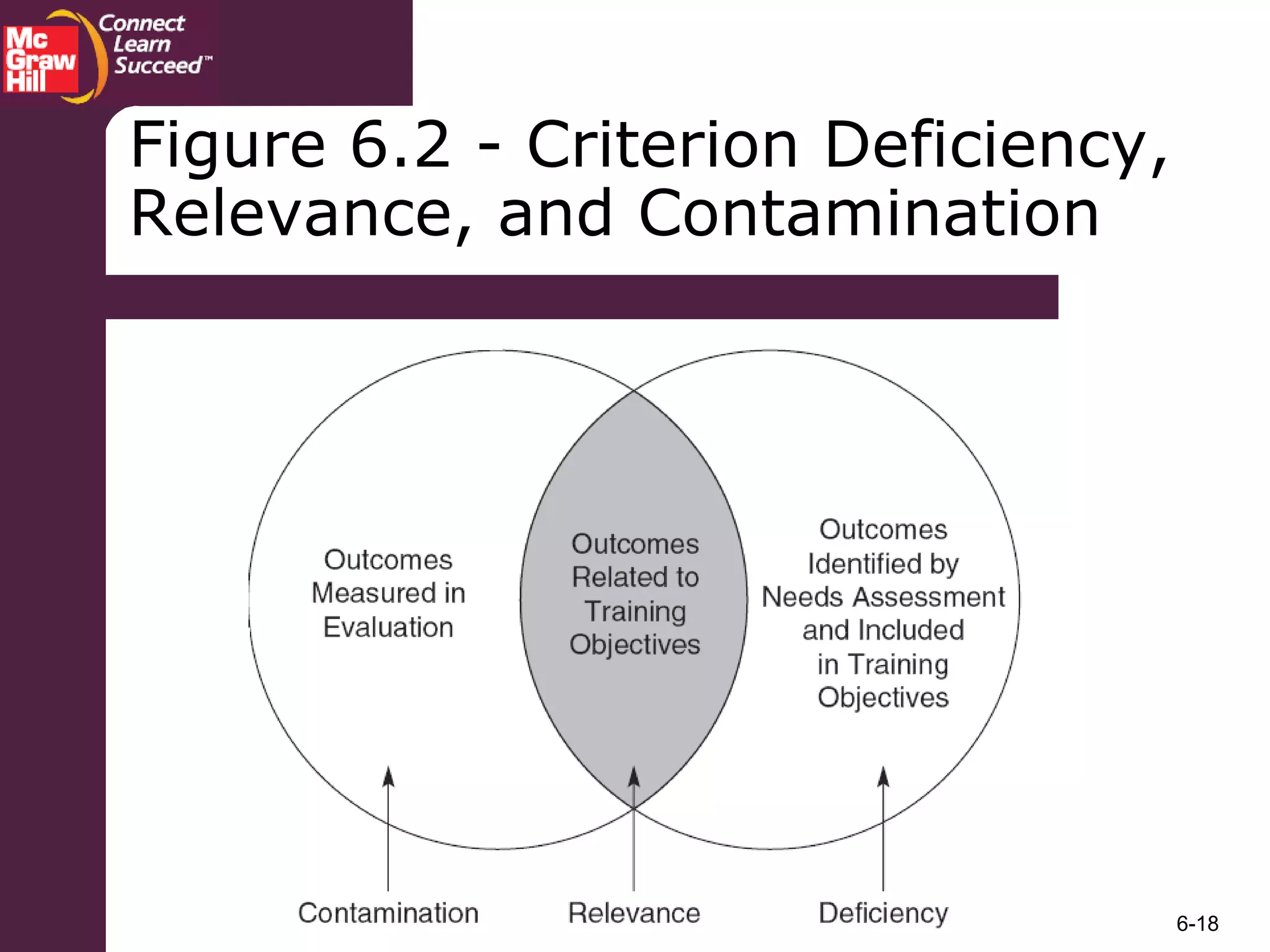

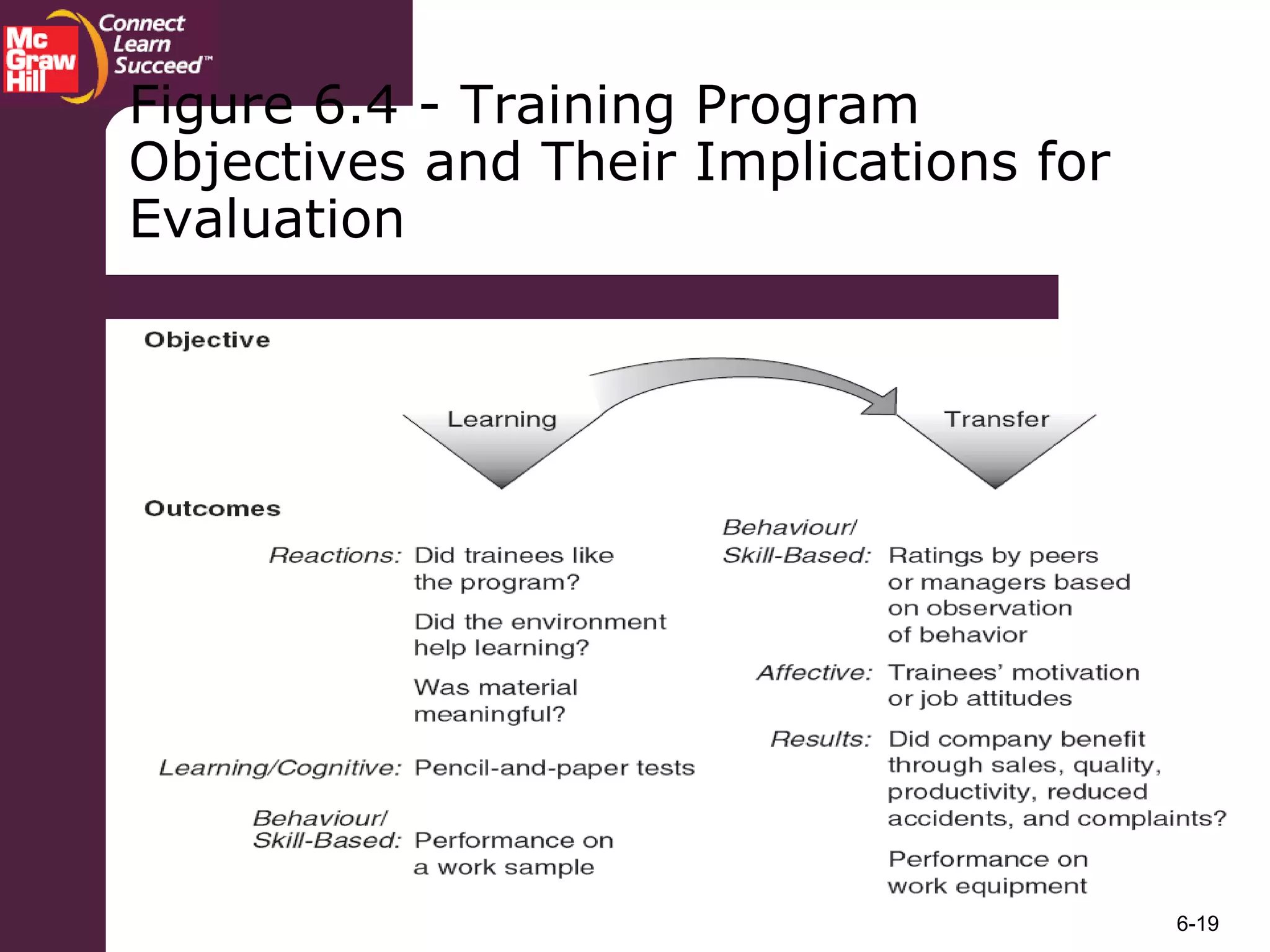

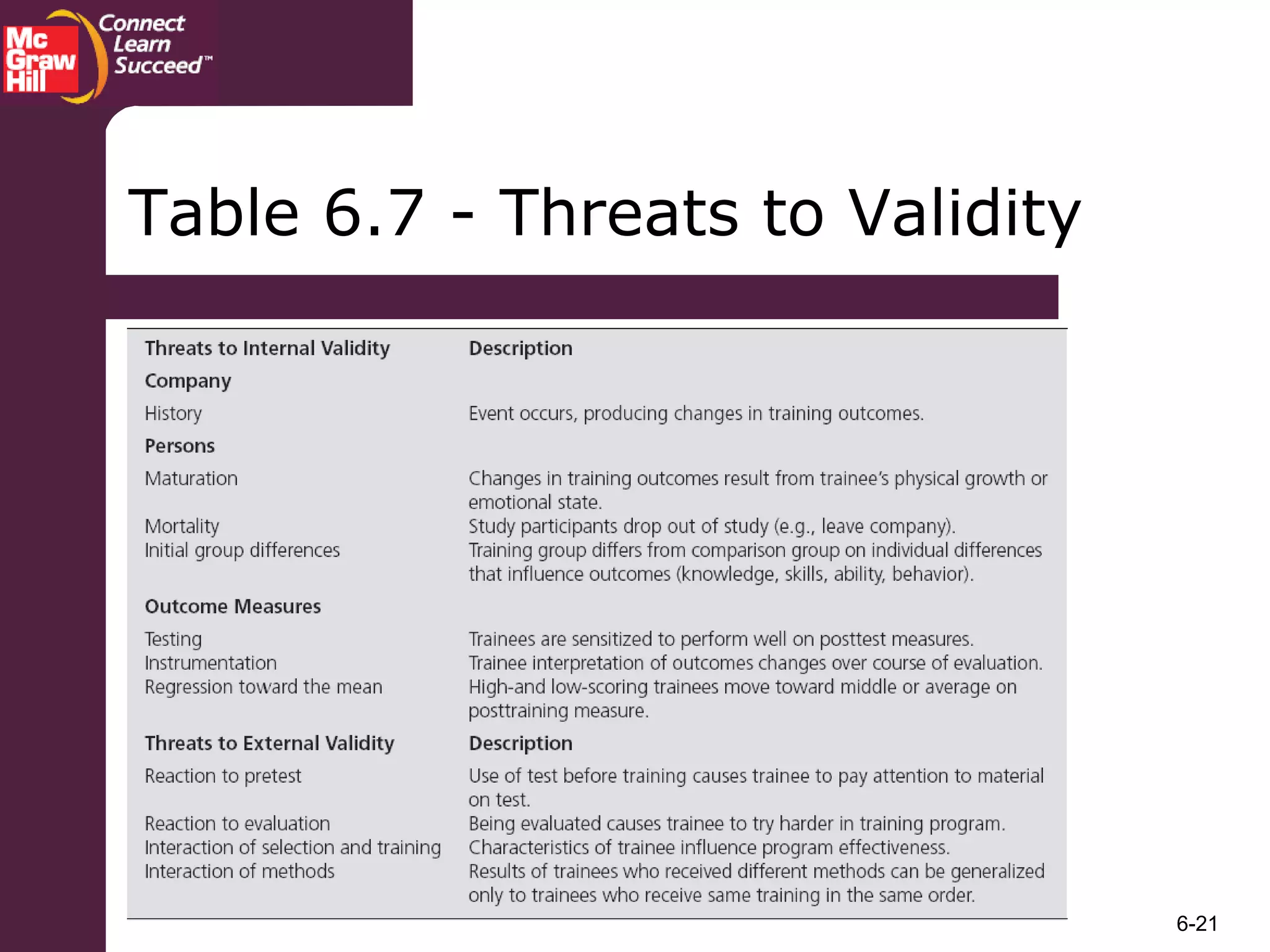

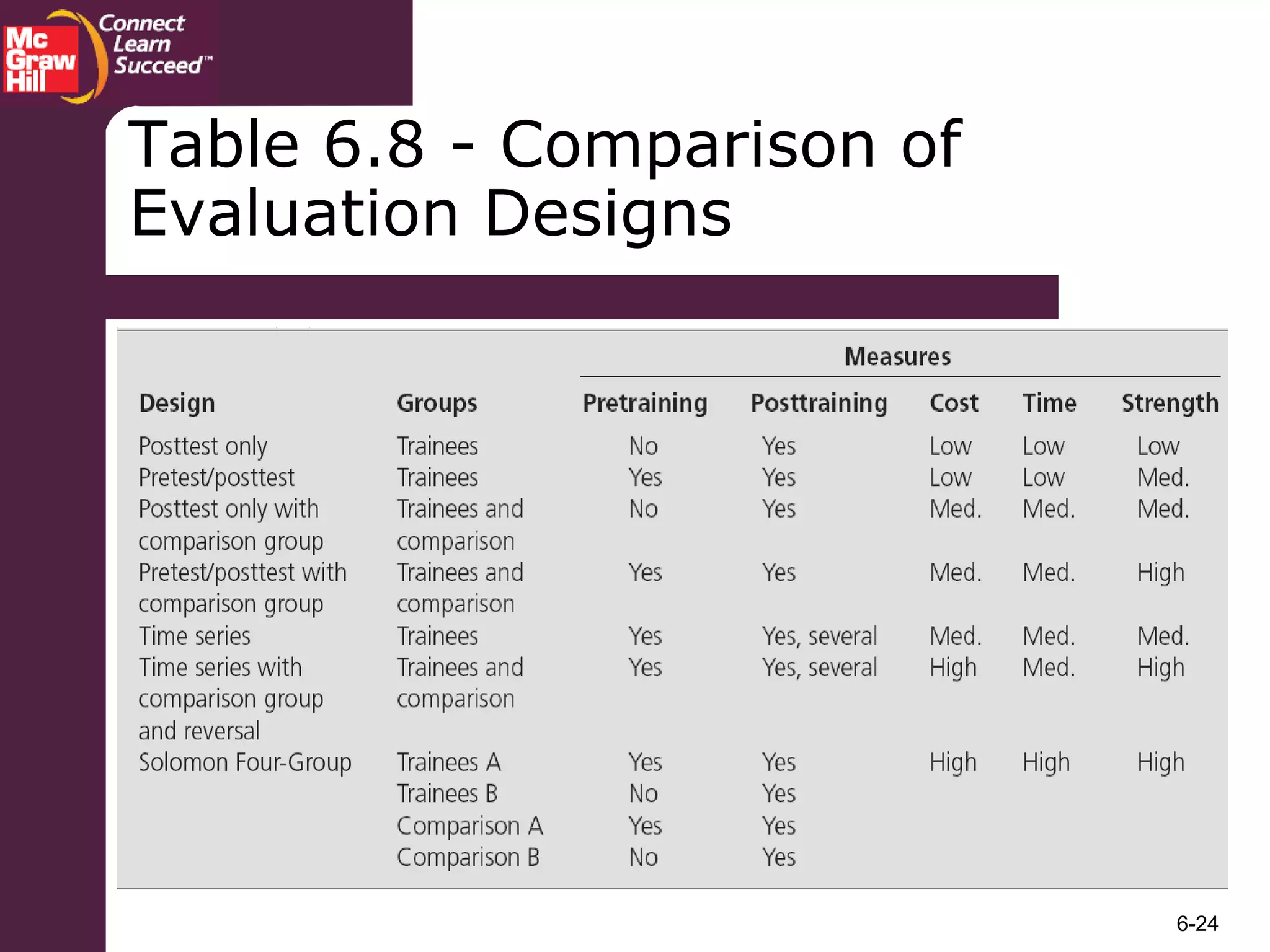

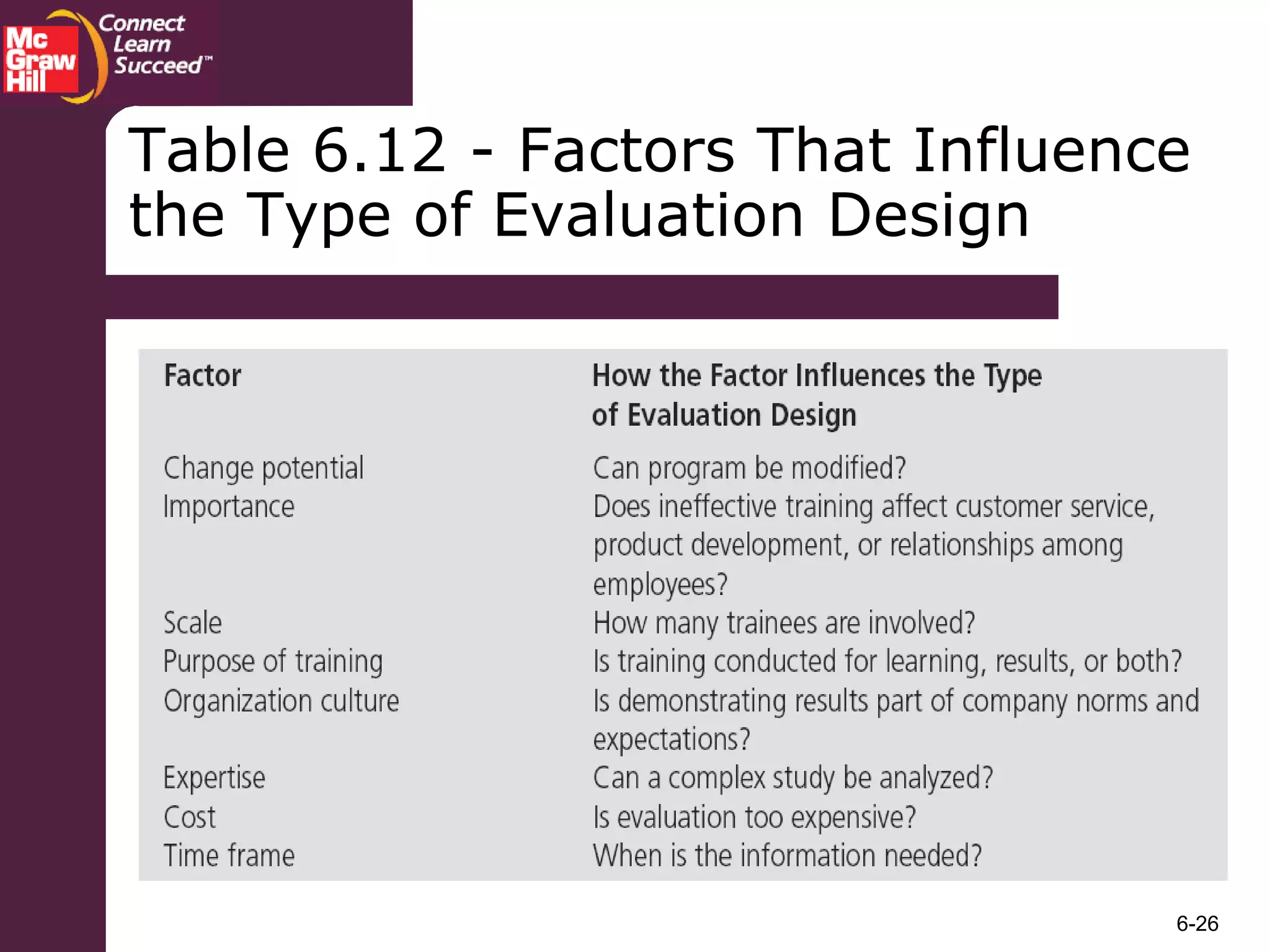

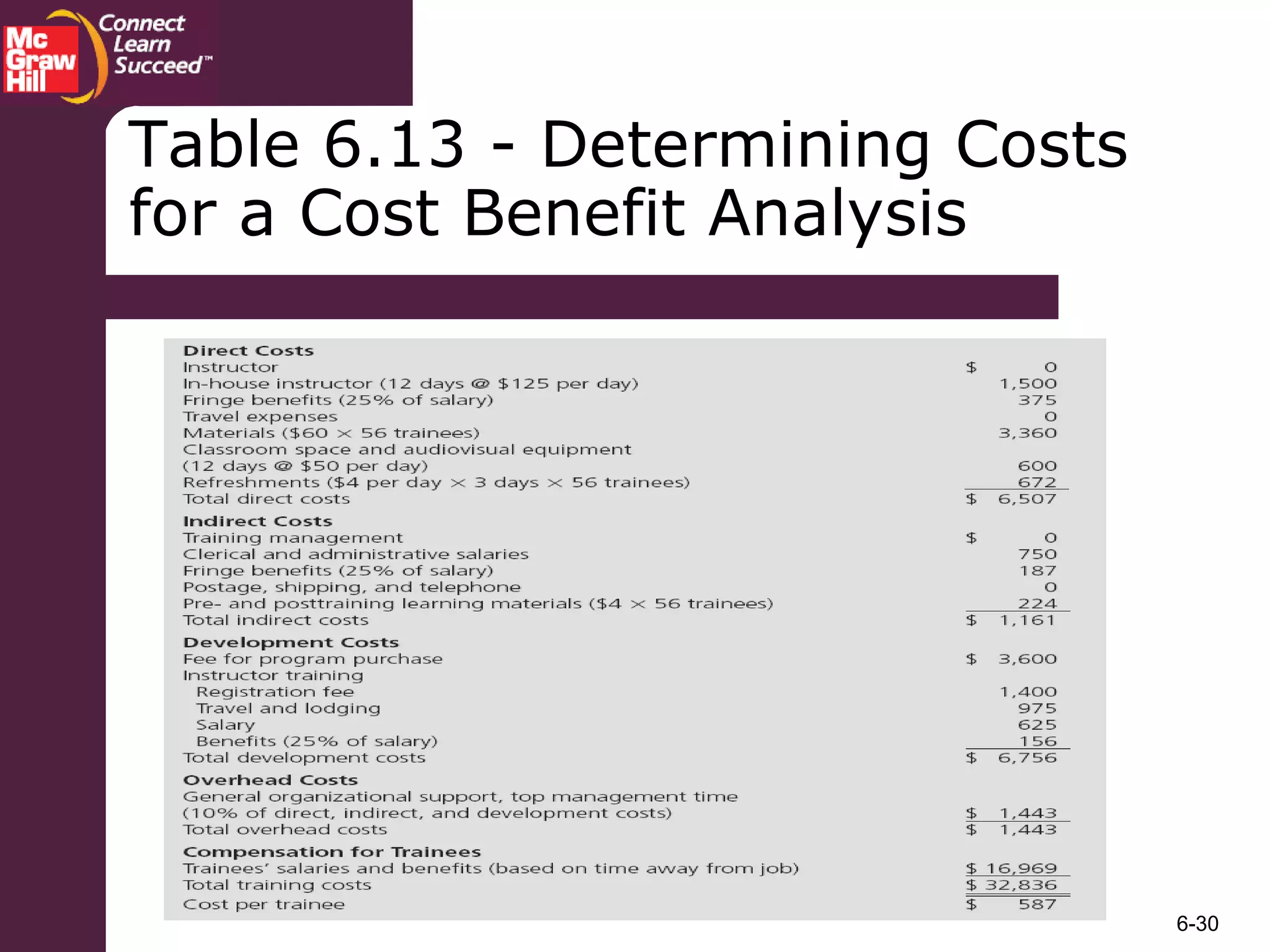

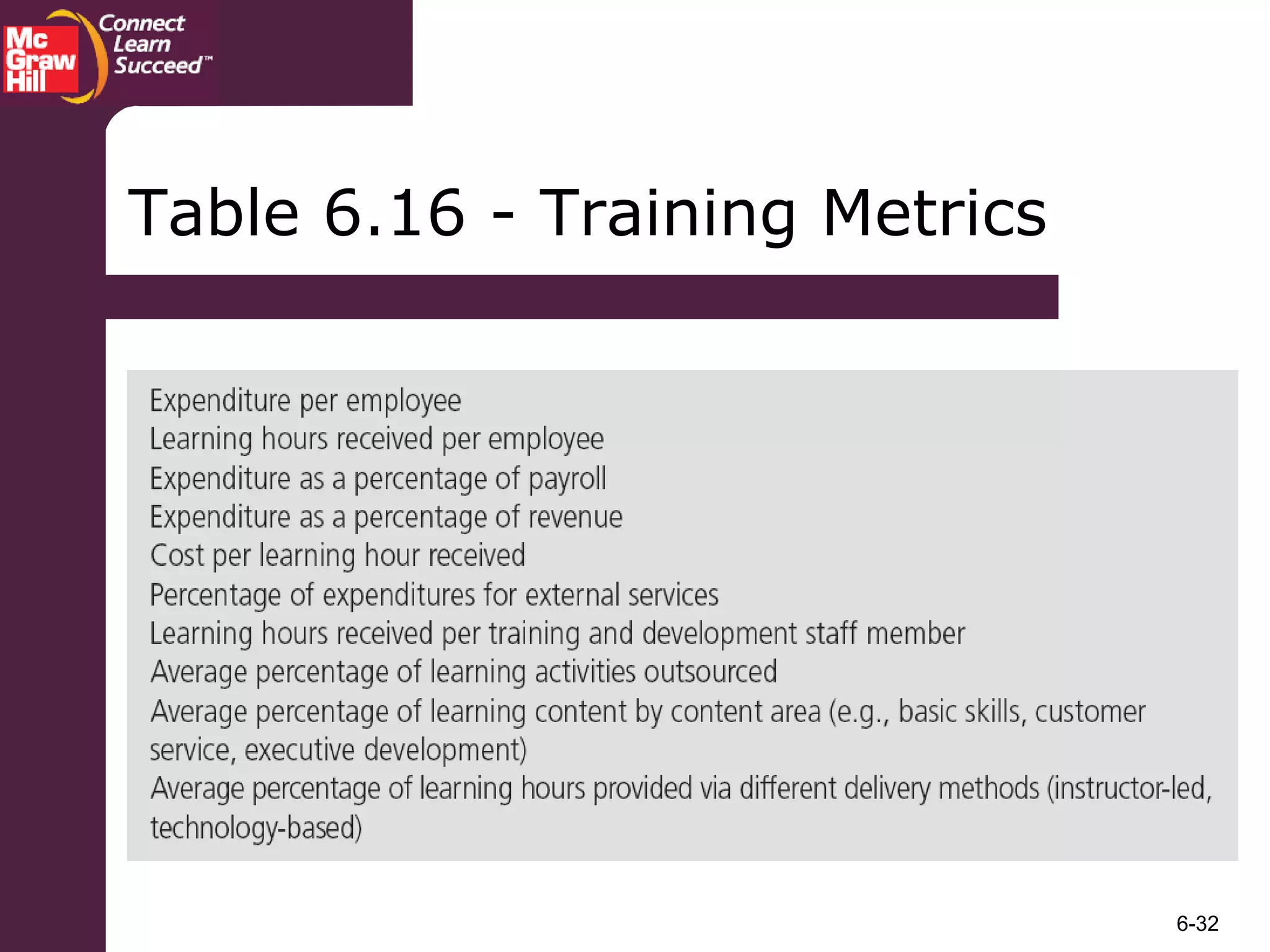

This document discusses training evaluation and return on investment (ROI) analysis. It defines key terms such as training effectiveness, training outcomes, and formative and summative evaluation. It describes Kirkpatrick's four-level model for evaluating training: reaction, learning, behavior, and results. The document outlines different evaluation designs and explains how to measure costs and benefits in order to calculate ROI. It emphasizes the importance of evaluating training programs to demonstrate their benefits and identify areas for improvement.
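
The cost-and-benefit step can be illustrated with a short sketch. The summary does not say which ROI formula the document uses, so two common conventions are assumed here: a benefit-cost ratio (benefits divided by costs) and a net ROI percentage (net benefits divided by costs, times 100). The function names and the dollar figures are hypothetical and chosen only for illustration.

```python
def benefit_cost_ratio(benefits: float, costs: float) -> float:
    """Return total benefits divided by total costs (assumed convention)."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return benefits / costs


def roi_percent(benefits: float, costs: float) -> float:
    """Return net benefits as a percentage of costs (assumed convention)."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs * 100.0


if __name__ == "__main__":
    # Hypothetical figures: a program costing 40,000 that yields 100,000 in benefits.
    program_costs = 40_000.0
    program_benefits = 100_000.0

    print(benefit_cost_ratio(program_benefits, program_costs))  # 2.5
    print(roi_percent(program_benefits, program_costs))         # 150.0
```

Under these assumed figures, each unit of currency spent on training returns 2.5 units in benefits, which is a net return of 150%. Which convention applies depends on how the evaluating organization defines ROI, so the result should always be reported alongside the formula used.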