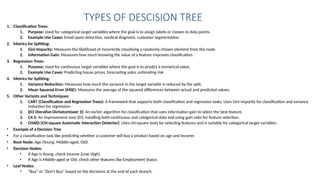

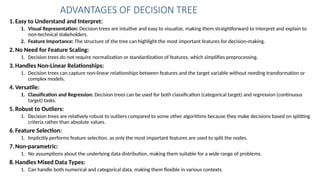

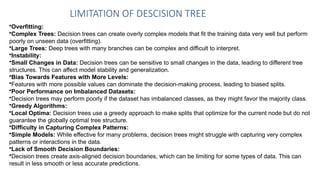

This document gives an overview of decision trees, a supervised machine learning algorithm for classification and regression tasks with a flowchart-like structure of nodes, branches, and leaves. It explains how decision trees work: how the data is split on feature values, and how metrics such as Gini impurity and information gain are used to choose each split. It also gives examples of applications in domains such as healthcare and finance. Finally, it discusses the advantages and limitations of decision trees, including overfitting, instability (small changes in the training data can produce a very different tree), and difficulty capturing complex patterns.
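To make the split metrics concrete, here is a minimal Python sketch of Gini impurity, entropy, and information gain. The function names and the example class counts (9 "Play" / 5 "Don't Play", split by Weather into Sunny/Overcast/Rainy) are illustrative assumptions, not data taken from the slides.

```python
from collections import Counter
from math import log2


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels):
    """Shannon entropy in bits: sum of -p * log2(p) over the classes present."""
    n = len(labels)
    return sum(-(c / n) * log2(c / n) for c in Counter(labels).values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child subsets."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


# Hypothetical example: 14 samples split by the "Weather" feature.
P, D = "Play", "Don't Play"
parent = [P] * 9 + [D] * 5
sunny = [P] * 2 + [D] * 3
overcast = [P] * 4
rainy = [P] * 3 + [D] * 2

print("Gini(parent):", round(gini(parent), 3))  # 0.459
print("Entropy(parent):", round(entropy(parent), 3))  # 0.94
print("Gain(Weather):", round(information_gain(parent, [sunny, overcast, rainy]), 3))  # 0.247
```

A tree-growing algorithm would evaluate every candidate feature in this way and split on the one with the highest information gain (or, with Gini, the largest weighted decrease in impurity), then repeat the process on each child subset.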

![Visual Representation of a Simple Decision Tree](https://image.slidesharecdn.com/aimachinelearning-31140523010-bds302-240828170448-5063fabd/85/Ai-Machine-learning-31140523010-BDS302-pptx-6-320.jpg)

Visual representation of a simple decision tree:

- **Weather**
  - Sunny → **Humidity**
    - High → Don't Play
    - Normal → Play
  - Overcast (no further split shown)
  - Rainy → **Wind**
    - Strong → Don't Play
    - Weak → Play
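The weather tree can be encoded as nested dictionaries and applied to a new sample by following one branch per internal node until a leaf is reached. This is a minimal sketch: the dictionary layout and the `predict` helper are hypothetical. The figure does not show a label for the Overcast branch, so it is **assumed** here to be "Play", as in the classic play-tennis example.

```python
# Internal node: {"feature": name, "branches": {feature_value: subtree}}.
# Leaf: a plain class-label string.
TREE = {
    "feature": "Weather",
    "branches": {
        "Sunny": {
            "feature": "Humidity",
            "branches": {"High": "Don't Play", "Normal": "Play"},
        },
        "Overcast": "Play",  # ASSUMPTION: leaf label not shown in the figure
        "Rainy": {
            "feature": "Wind",
            "branches": {"Strong": "Don't Play", "Weak": "Play"},
        },
    },
}


def predict(tree, sample):
    """Walk from the root to a leaf, testing one feature per internal node."""
    node = tree
    while isinstance(node, dict):
        value = sample[node["feature"]]  # KeyError if the sample lacks the feature
        node = node["branches"][value]   # KeyError if the value was never seen
    return node


print(predict(TREE, {"Weather": "Sunny", "Humidity": "High", "Wind": "Weak"}))
print(predict(TREE, {"Weather": "Rainy", "Humidity": "High", "Wind": "Weak"}))
```

Each prediction touches only the features on one root-to-leaf path (here at most two tests), which is why a trained tree is cheap to evaluate and easy to read as a set of if/else rules.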