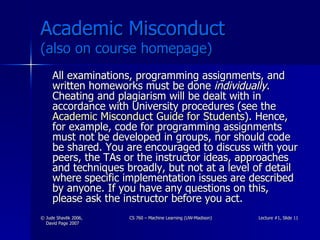

This document outlines the syllabus for a machine learning course. It introduces the instructor, teaching assistant, required textbook, and meeting schedule. It describes the course style as primarily algorithmic and experimental, covering many ML subfields. The goals are to understand what a learning system should do and how existing systems work. Background knowledge in languages, AI topics, and math is assumed, but no prior ML experience is needed. Requirements include biweekly programming homework, a midterm exam, and a final project. Grading will be based on homework, exam, project, and discussion participation. Policies on late homework and academic misconduct are also provided.

![Broad Paradigms of Machine Learning. Inducing Functions from I/O Pairs: decision trees (e.g., Quinlan’s C4.5 [1993]); connectionism / neural networks (e.g., backprop); nearest-neighbor methods; genetic algorithms; SVMs. Learning without Feedback/Teacher: conceptual clustering; self-organizing systems; discovery systems. Not in Mitchell’s textbook (covered in CS 776).](https://image.slidesharecdn.com/mllecture1ppt3517/85/MLlecture1-ppt-18-320.jpg)

![Standard Feature Types for representing training examples: a source of “domain knowledge”. Nominal: no relationship among possible values, e.g., color ∈ {red, blue, green} (vs. color = 1000 Hertz). Linear (or Ordered): possible values of the feature are totally ordered, e.g., size ∈ {small, medium, large} (discrete), weight ∈ [0…500] (continuous). Hierarchical: possible values are partially ordered in an ISA hierarchy, e.g., for shape: shape → closed → {polygon → {triangle, square}, continuous → {circle, ellipse}}.](https://image.slidesharecdn.com/mllecture1ppt3517/85/MLlecture1-ppt-39-320.jpg)
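The three feature types on this slide differ in which comparisons between values are meaningful. The following is a minimal sketch in Python, not taken from the course materials: the names `COLORS`, `SIZES`, `ISA_PARENT`, `size_less_than`, and `is_a` are hypothetical, and the parent links assume the shape hierarchy reads shape → closed → polygon/continuous → leaf shapes.

```python
from typing import Optional

# Nominal: values support only equality tests; there is no order among them.
COLORS = {"red", "blue", "green"}

# Linear (ordered), discrete: position in the list defines the total order.
SIZES = ["small", "medium", "large"]

# Hierarchical: child -> parent links of an ISA hierarchy.
# The exact tree layout is an assumption based on the slide's example.
ISA_PARENT = {
    "closed": "shape",
    "polygon": "closed",
    "continuous": "closed",
    "triangle": "polygon",
    "square": "polygon",
    "circle": "continuous",
    "ellipse": "continuous",
}


def size_less_than(a: str, b: str) -> bool:
    """Ordered comparison, meaningful only for linear features."""
    return SIZES.index(a) < SIZES.index(b)


def is_a(value: str, ancestor: str) -> bool:
    """Return True if `value` equals `ancestor` or lies below it in the hierarchy."""
    node: Optional[str] = value
    while node is not None:
        if node == ancestor:
            return True
        node = ISA_PARENT.get(node)  # None once we step past the root
    return False
```

With this layout, `is_a("triangle", "polygon")` holds while `is_a("circle", "polygon")` does not, which is the kind of partial order a learner can exploit when generalizing over a hierarchical feature.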

![David Jensen’s group at UMass applies Naïve Bayes and other ML algorithms to the IMDB. Task 1, opening weekend box-office receipts > $2 million: 25 attributes, accuracy = 83.3%, default accuracy = 56% (default algo?). Task 2, movie is drama?: 12 attributes, accuracy = 71.9%, default accuracy = 51%. http://kdl.cs.umass.edu/proximity/about.html](https://image.slidesharecdn.com/mllecture1ppt3517/85/MLlecture1-ppt-45-320.jpg)
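The slide leaves open what the "default algo" is. One common reading, assumed here and not confirmed by the source, is a baseline that always predicts the most frequent class, so its accuracy equals that class's share of the examples. A minimal sketch follows; `majority_baseline_accuracy` is a hypothetical helper and the labels are toy values, not the IMDB data.

```python
from collections import Counter
from typing import Hashable, Sequence


def majority_baseline_accuracy(labels: Sequence[Hashable]) -> float:
    """Accuracy of always predicting the most frequent label."""
    if not labels:
        raise ValueError("labels must be non-empty")
    # most_common(1) returns a one-element list of (label, count) pairs.
    _, count = Counter(labels).most_common(1)[0]
    return count / len(labels)


# Toy labels (assumption): 56 positive and 44 negative examples.
toy_labels = [True] * 56 + [False] * 44
baseline = majority_baseline_accuracy(toy_labels)
```

Under this reading, a reported accuracy is only informative relative to the baseline: 83.3% against a 56% default is a much larger gain than the raw number alone suggests.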