This document summarizes a machine learning lecture given by William H. Hsu. A three-sentence summary follows.

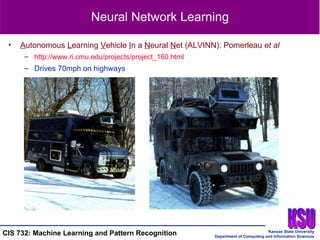

The lecture outlined the course information, including format, exams, homework and grading. It provided an overview of machine learning topics to be covered, such as learning algorithms, models and methodologies. Examples of applications of machine learning were also discussed, such as database mining, reasoning, and acting.

![Course Information and Administrivia. Instructor: William H. Hsu. E-mail: [email_address]. Office phone: (785) 532-6350 x29; home phone: (785) 539-7180; ICQ 28651394. Office hours: Wed/Thu/Fri (213 Nichols); by appointment, Tue. Grading: assignments (8): 32%, paper reviews (13): 10%, midterm: 20%, final: 25%, class participation: 5%; lowest homework score and lowest 3 paper summary scores dropped. Homework: eight assignments, written (5) and programming (3), drop lowest; late policy: due on Fridays; cheating: don’t do it, see class web page for policy. Project option: CIS 690/890 project option for graduate students; term paper or semester research project; sign up by 09 Feb 2007.](https://image.slidesharecdn.com/original3923/85/original-3-320.jpg)

![Class Resources. Web page (required): http://www.kddresearch.org/Courses/Spring-2007/CIS732; lecture notes (MS PowerPoint XP, PDF); homeworks (MS PowerPoint XP, PDF); exam and homework solutions (MS PowerPoint XP, PDF); class announcements (students' responsibility to follow) and grade postings. Course notes at Copy Center (required). Course web group: mirror of announcements from class web page; discussions (instructor and other students); dated research announcements (seminars, conferences, calls for papers). Mailing list (automatic): [email_address]; sign-up sheet; reminders, related research announcements.](https://image.slidesharecdn.com/original3923/85/original-4-320.jpg)