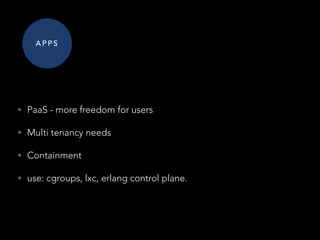

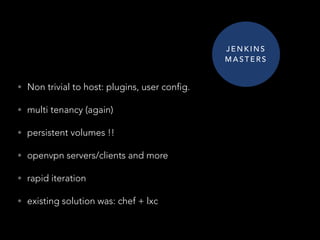

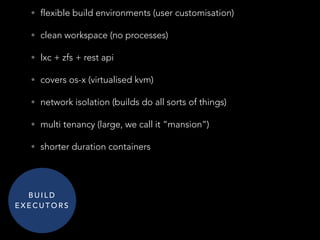

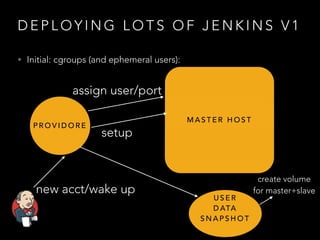

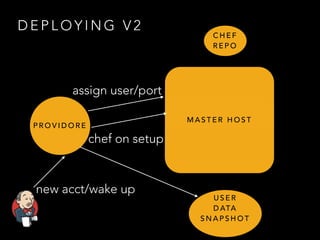

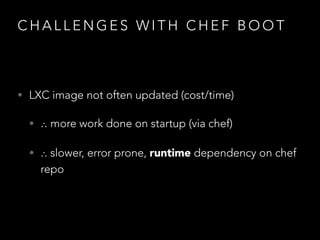

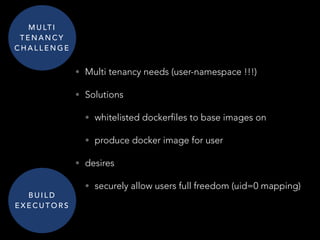

- Three disparate approaches to containerization (cgroups, LXC, and Docker) evolved at the company over time

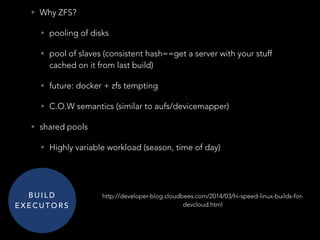

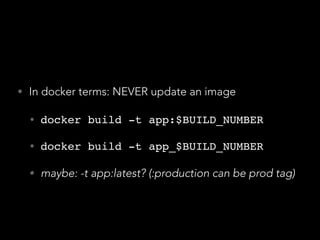

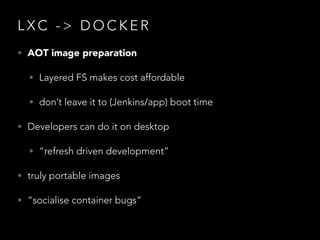

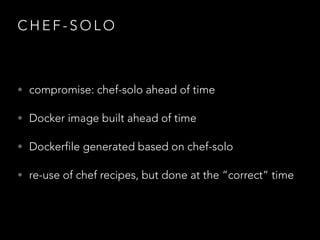

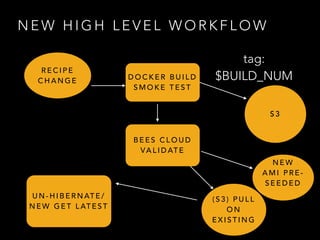

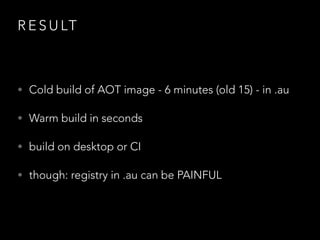

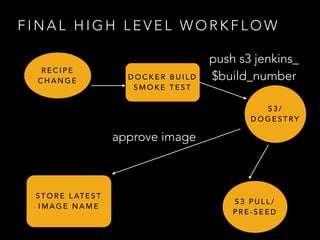

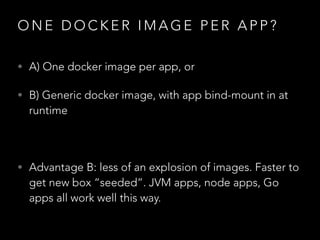

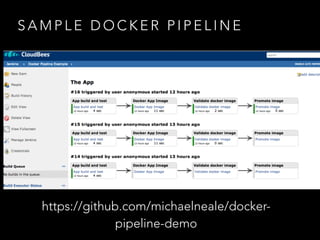

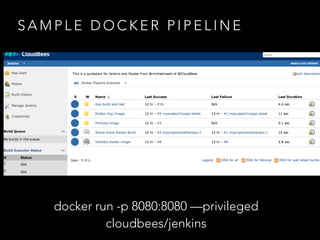

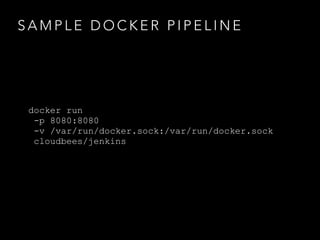

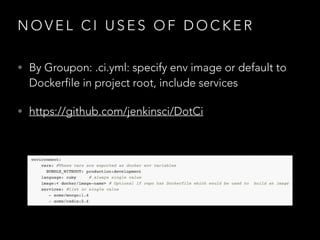

- Docker provided a unified standard and improved the workflow, for example by allowing container images to be built ahead of time

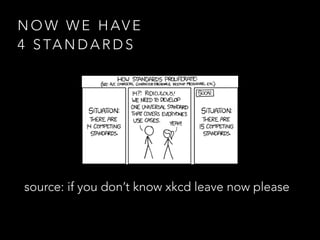

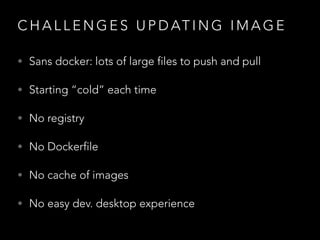

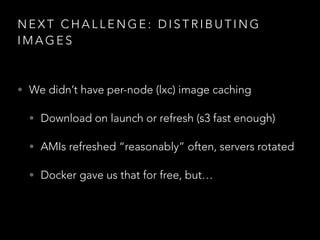

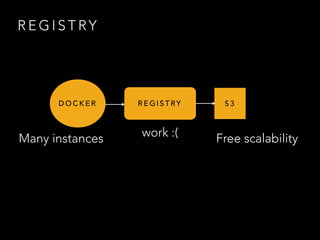

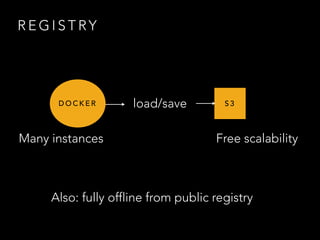

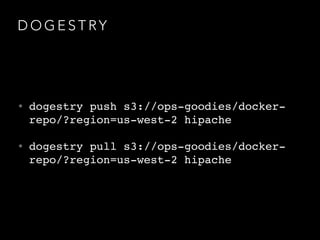

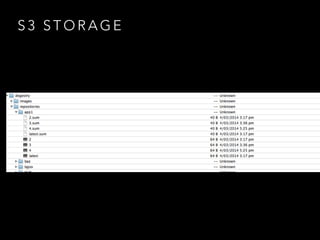

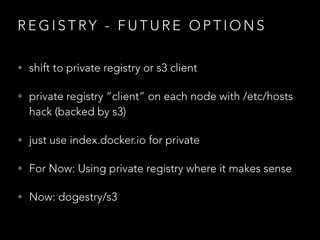

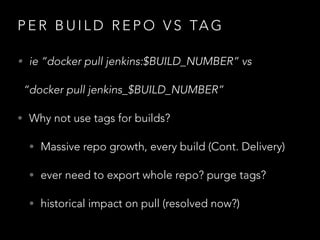

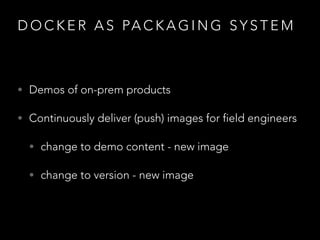

- Challenges remained in distributing and storing the large number of container images produced by continuous delivery