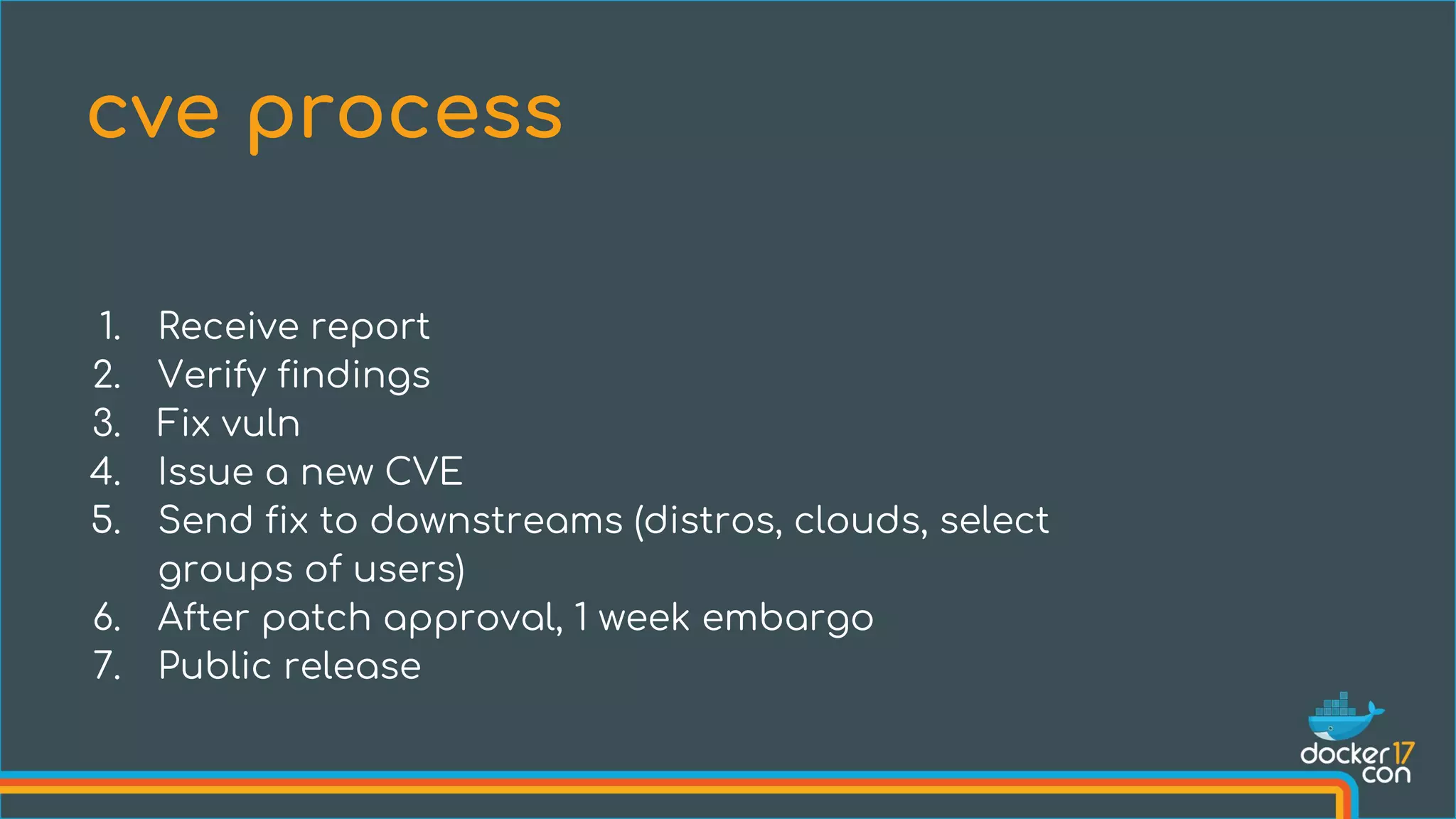

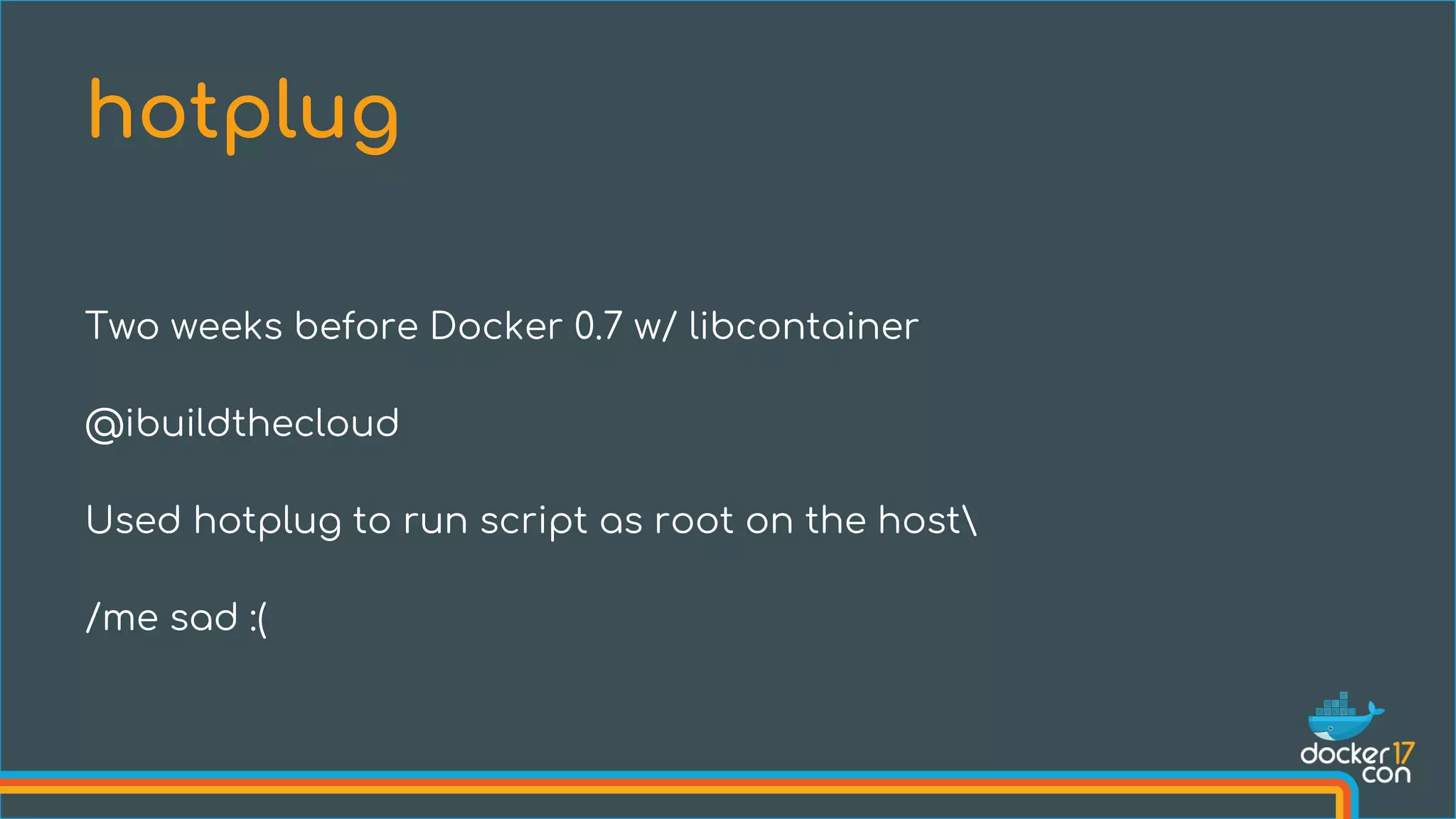

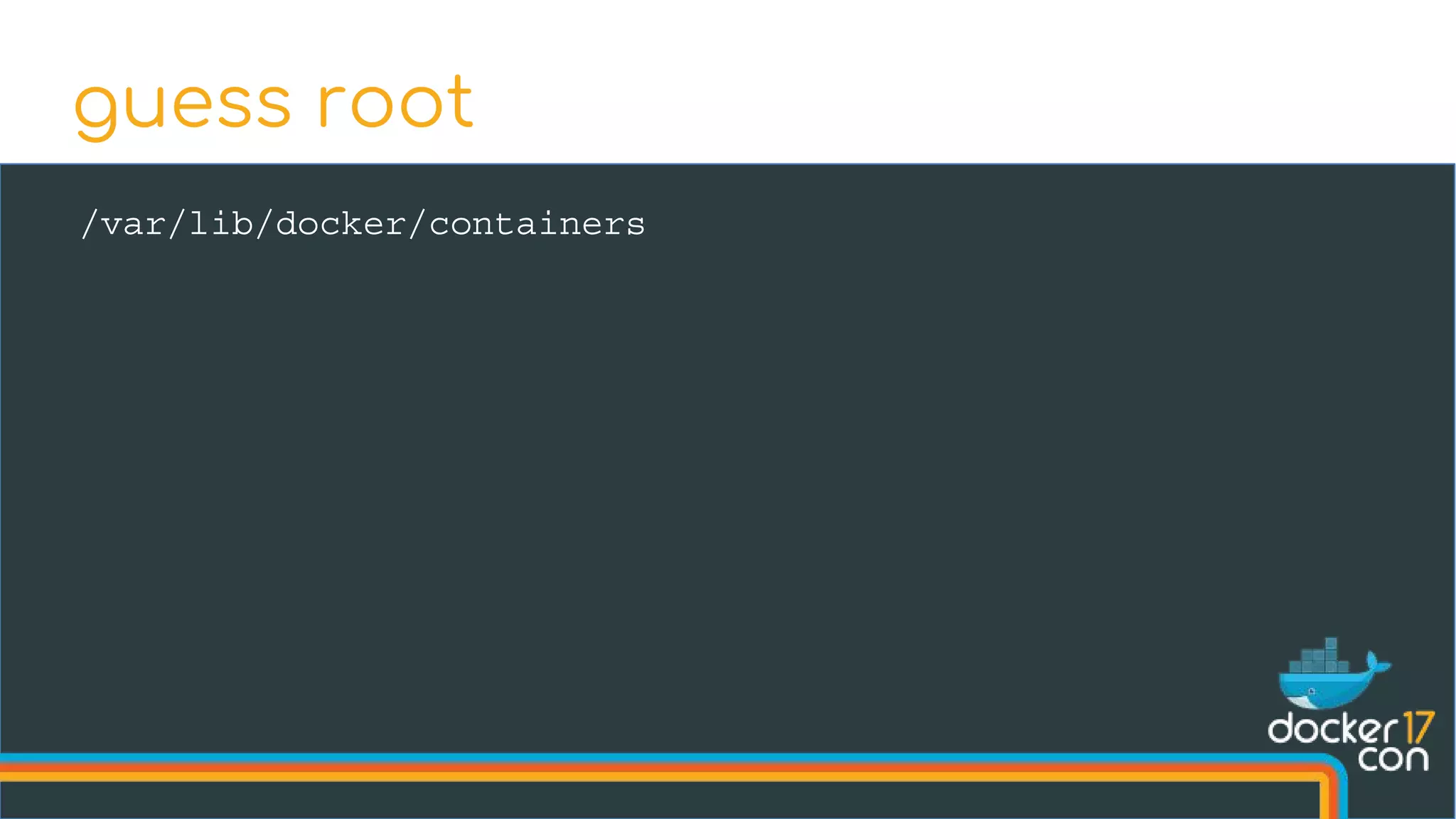

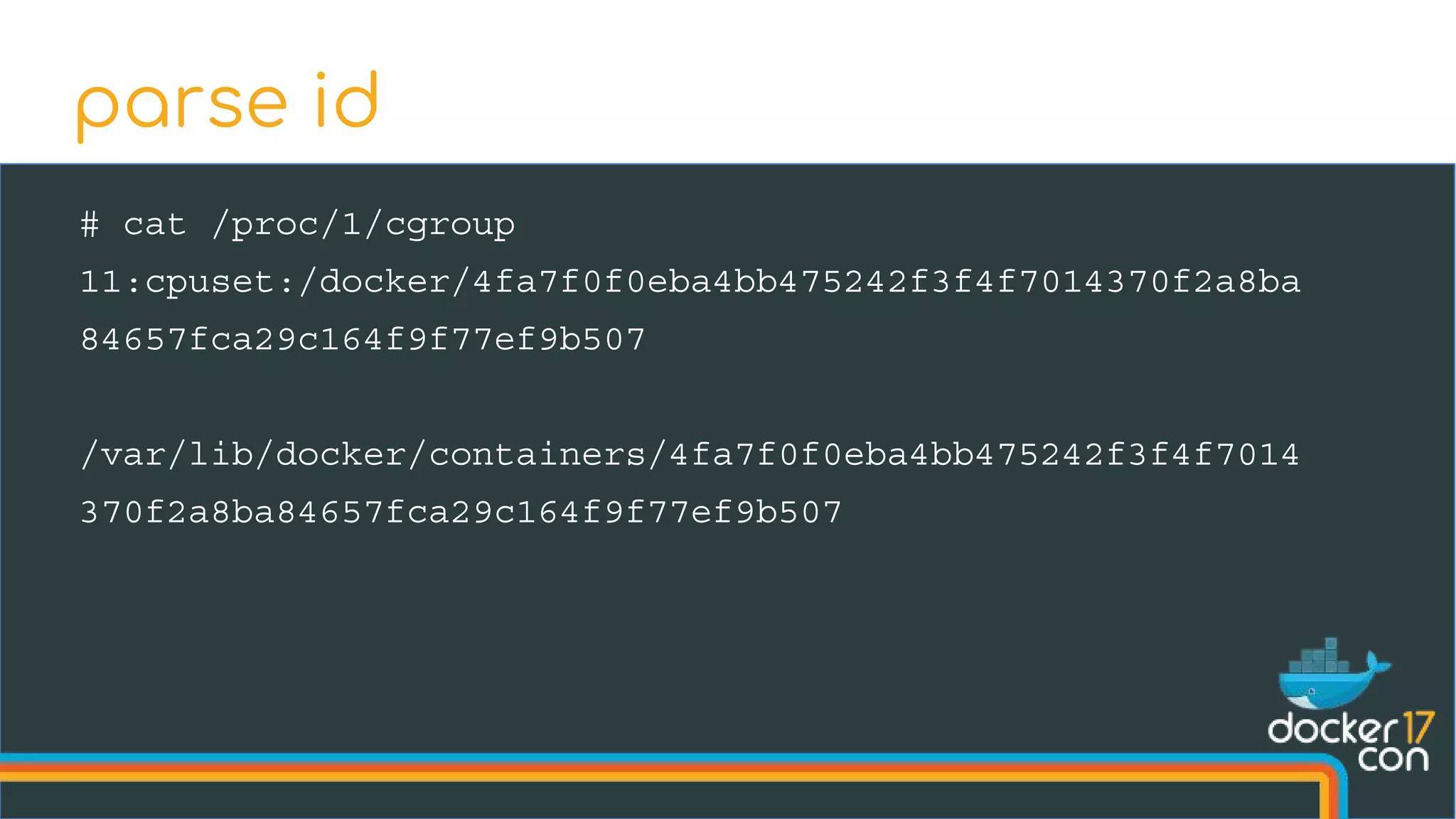

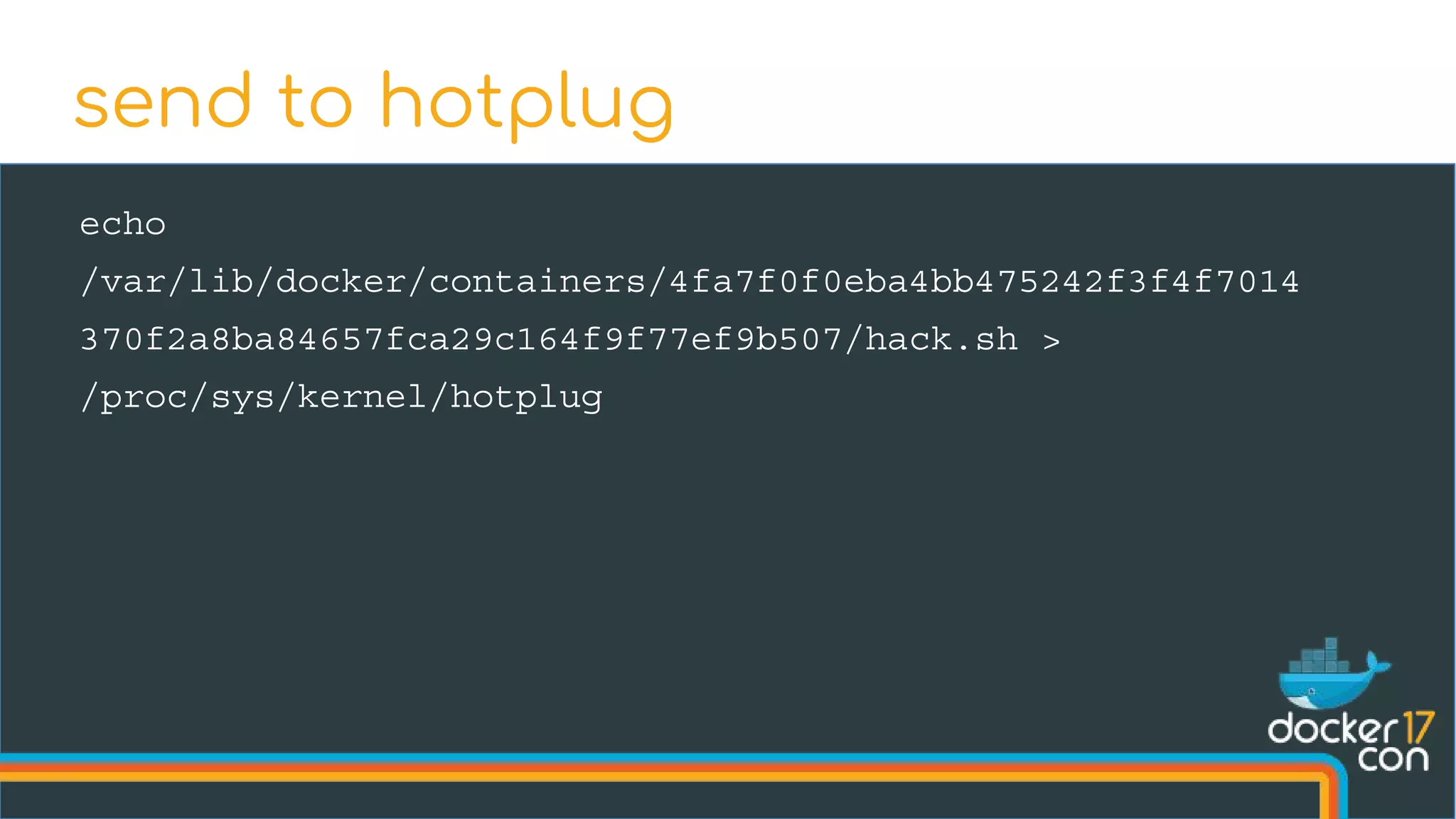

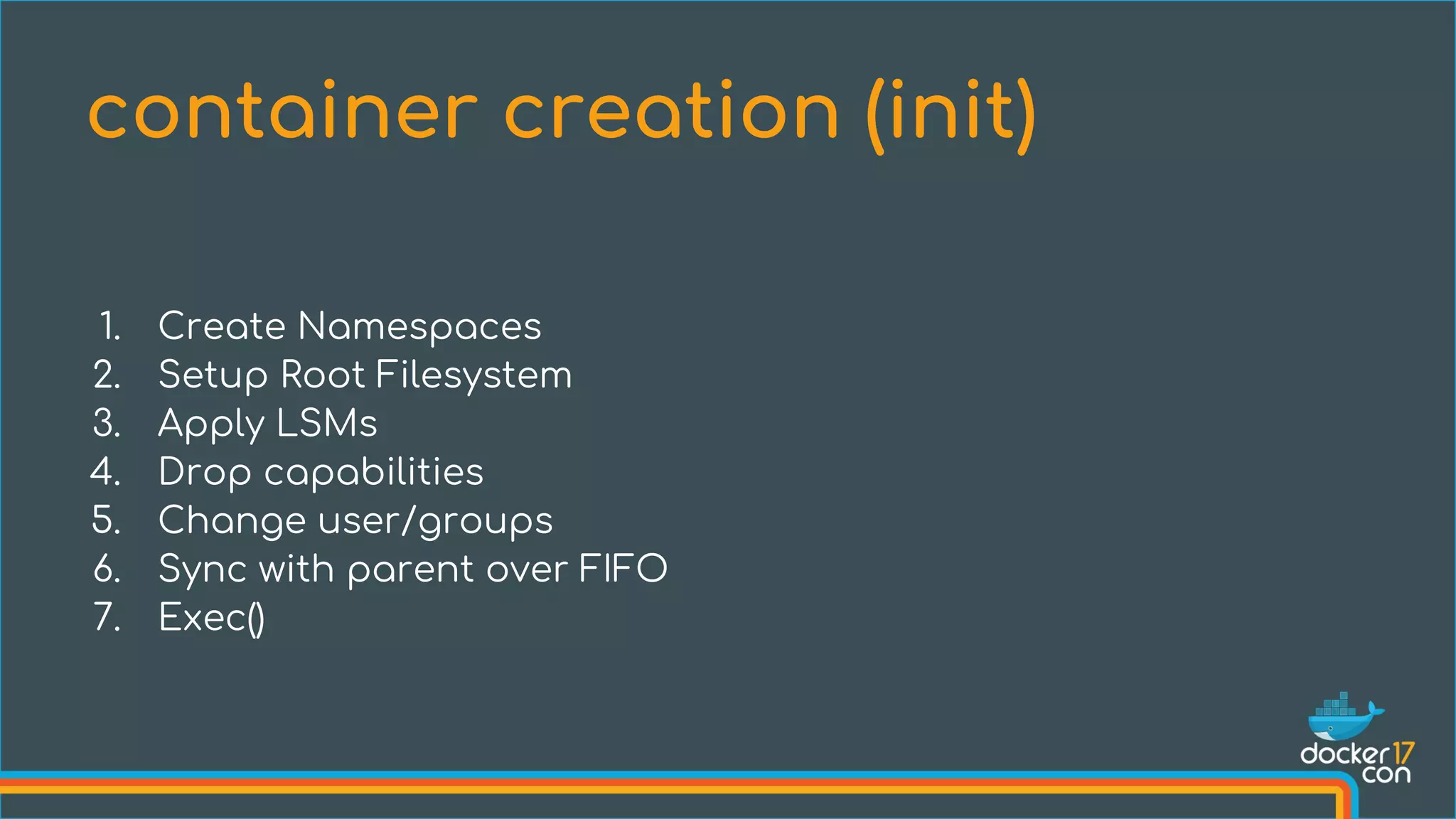

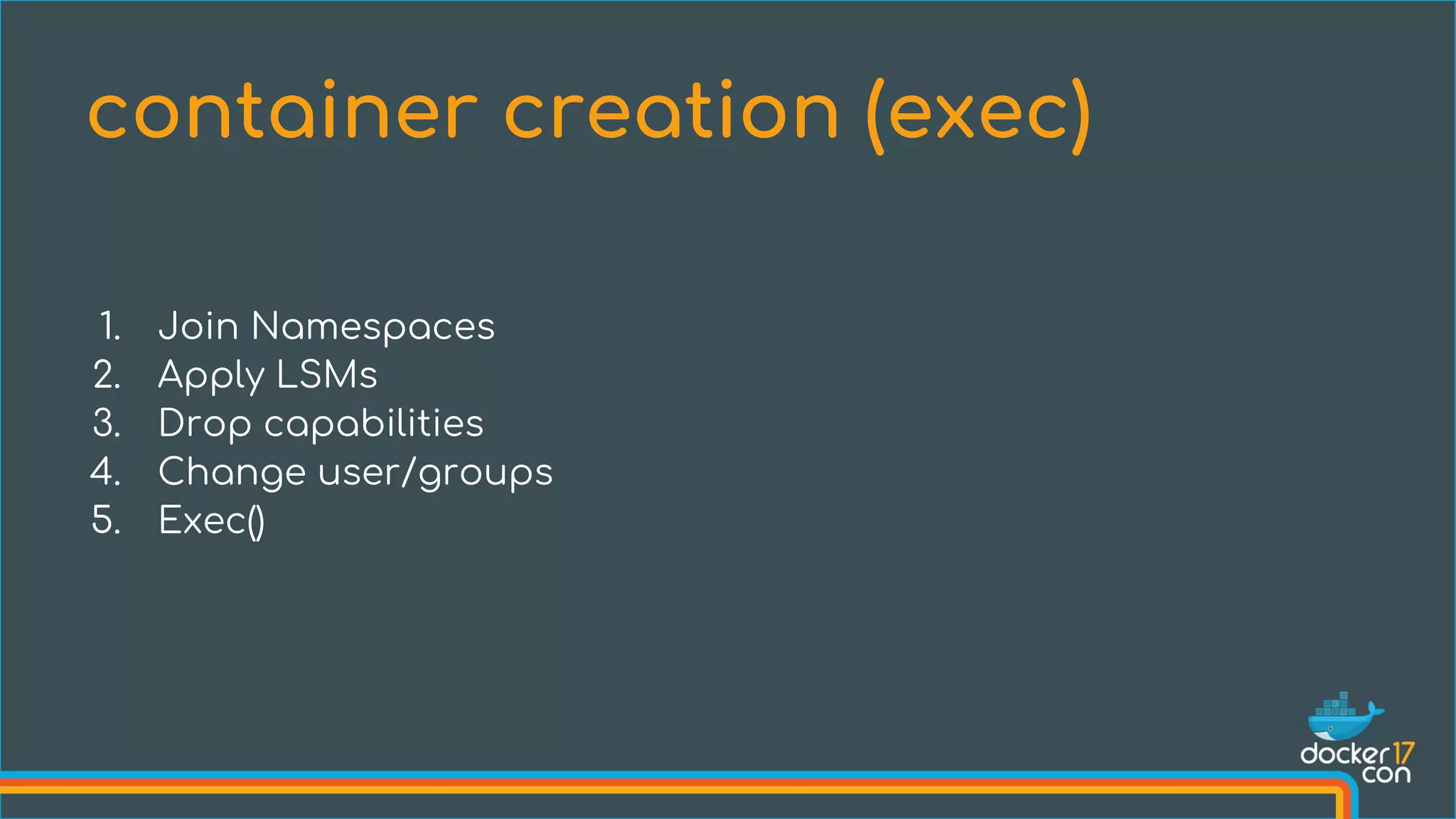

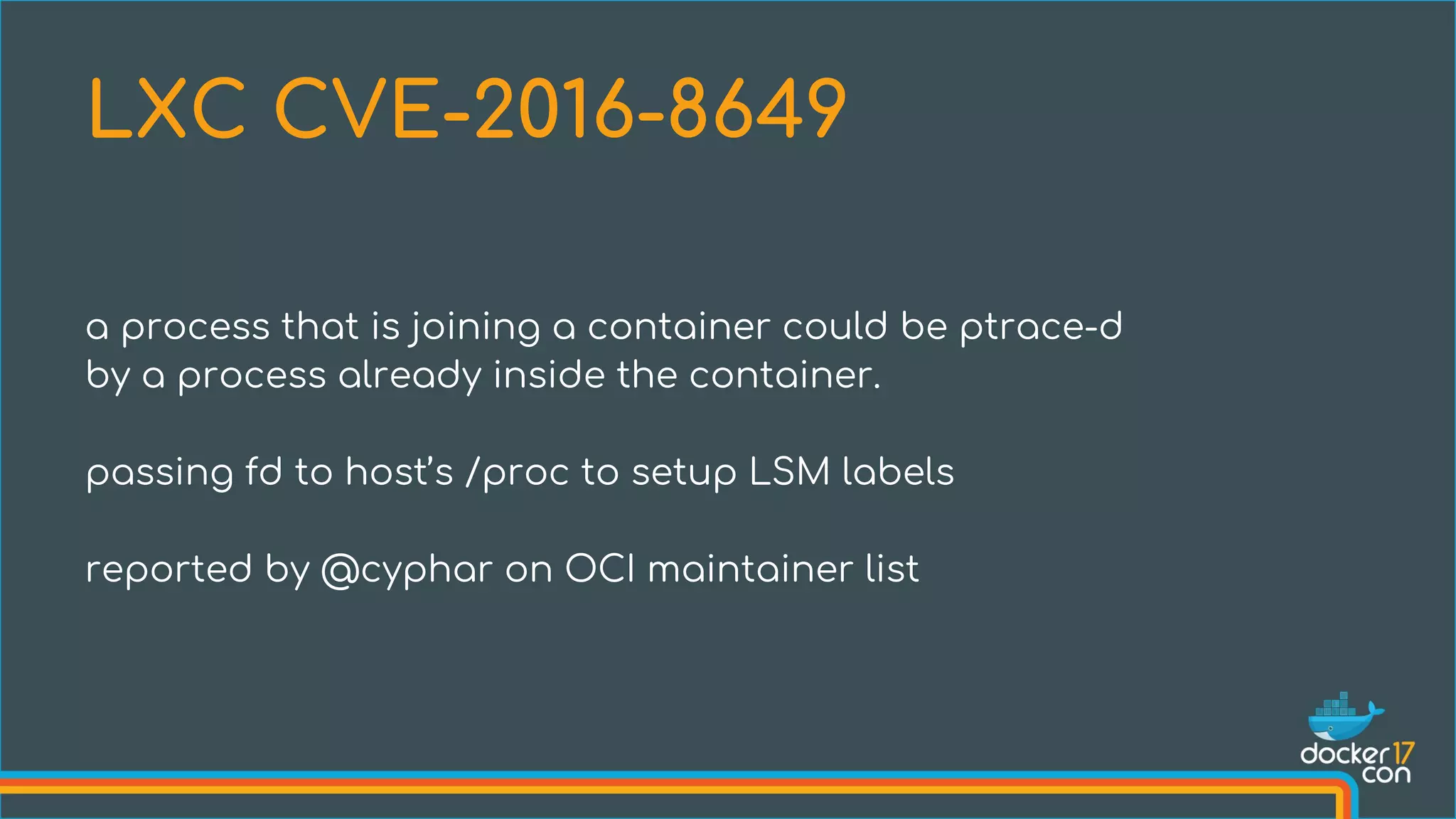

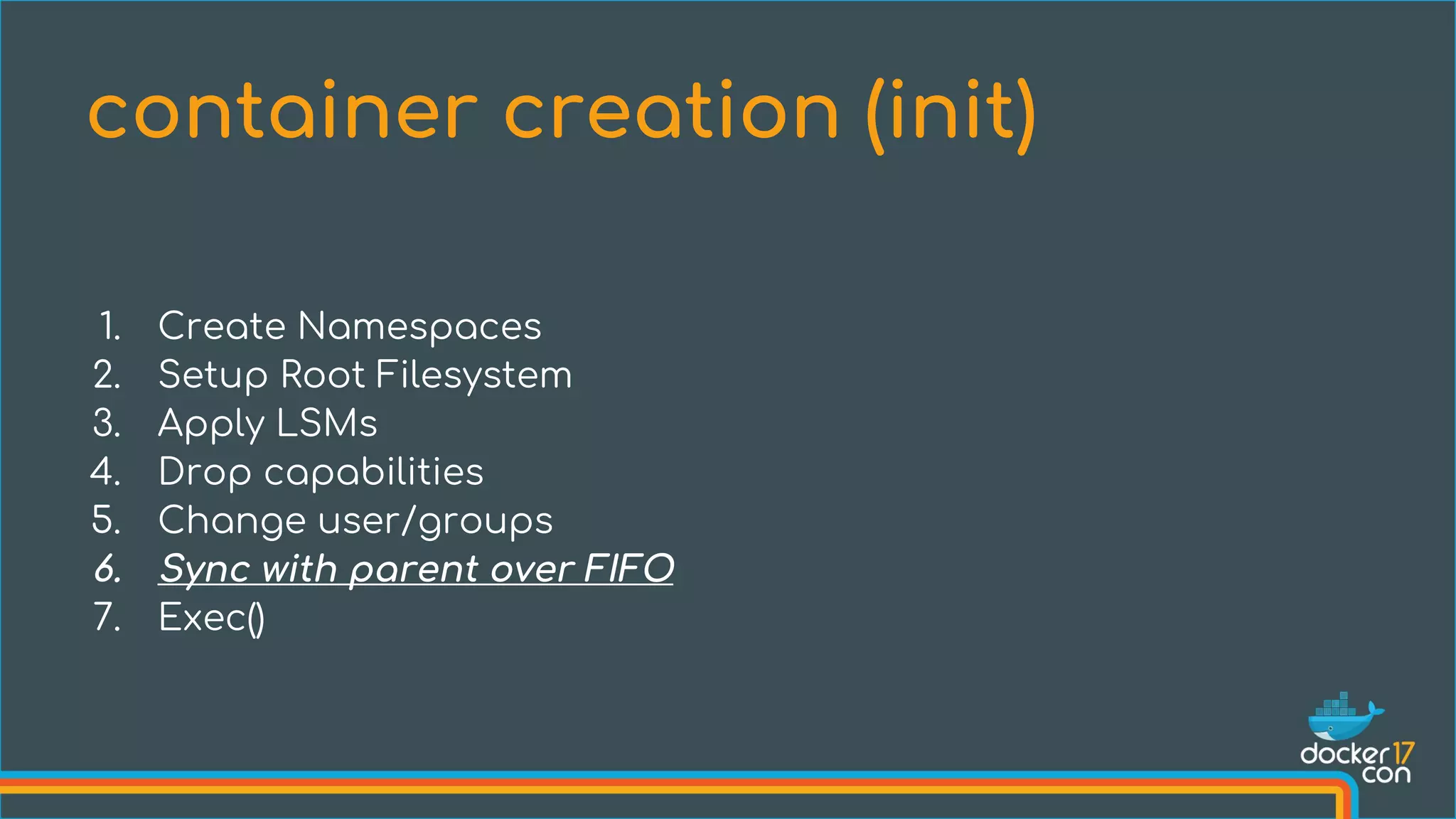

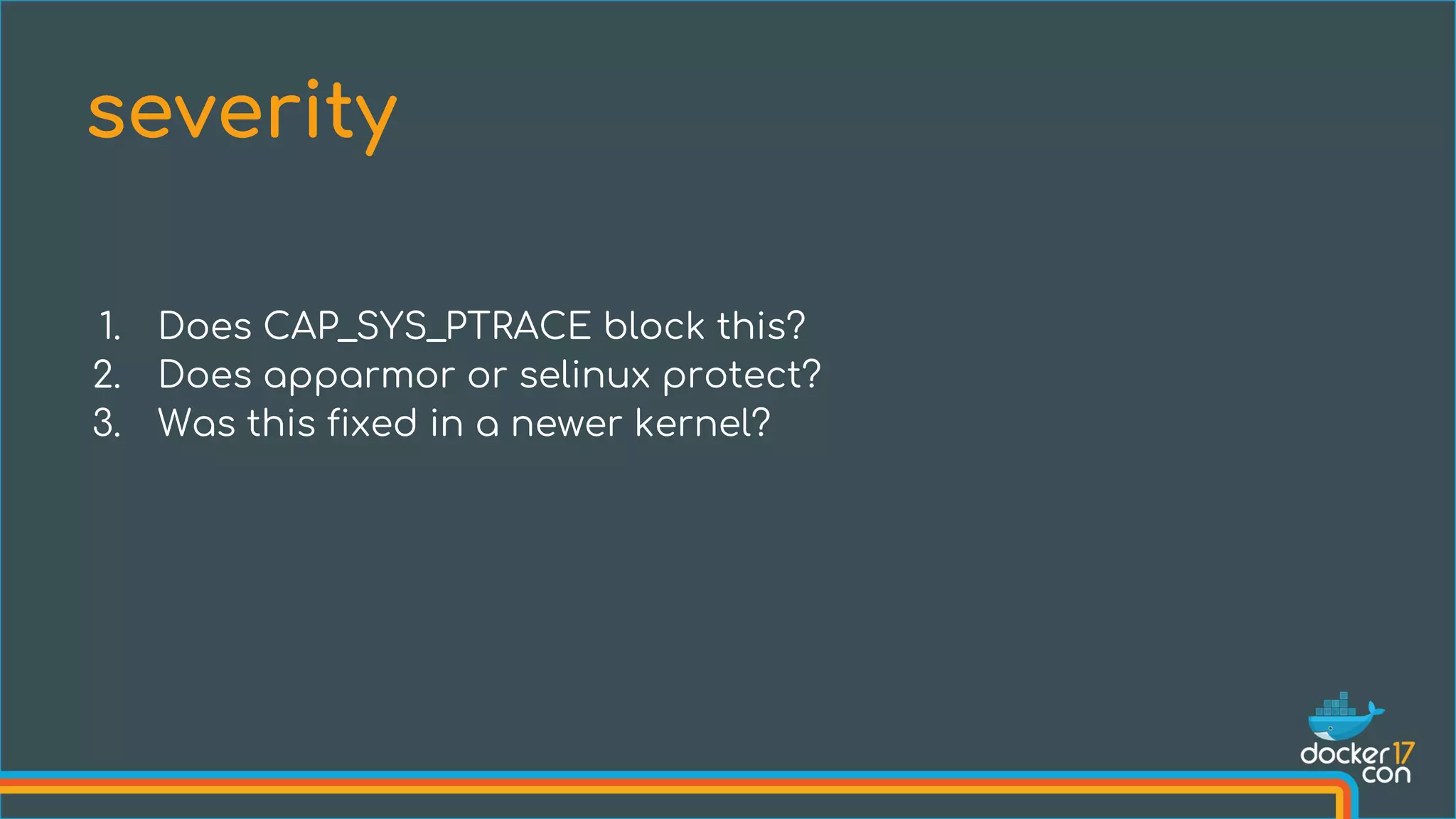

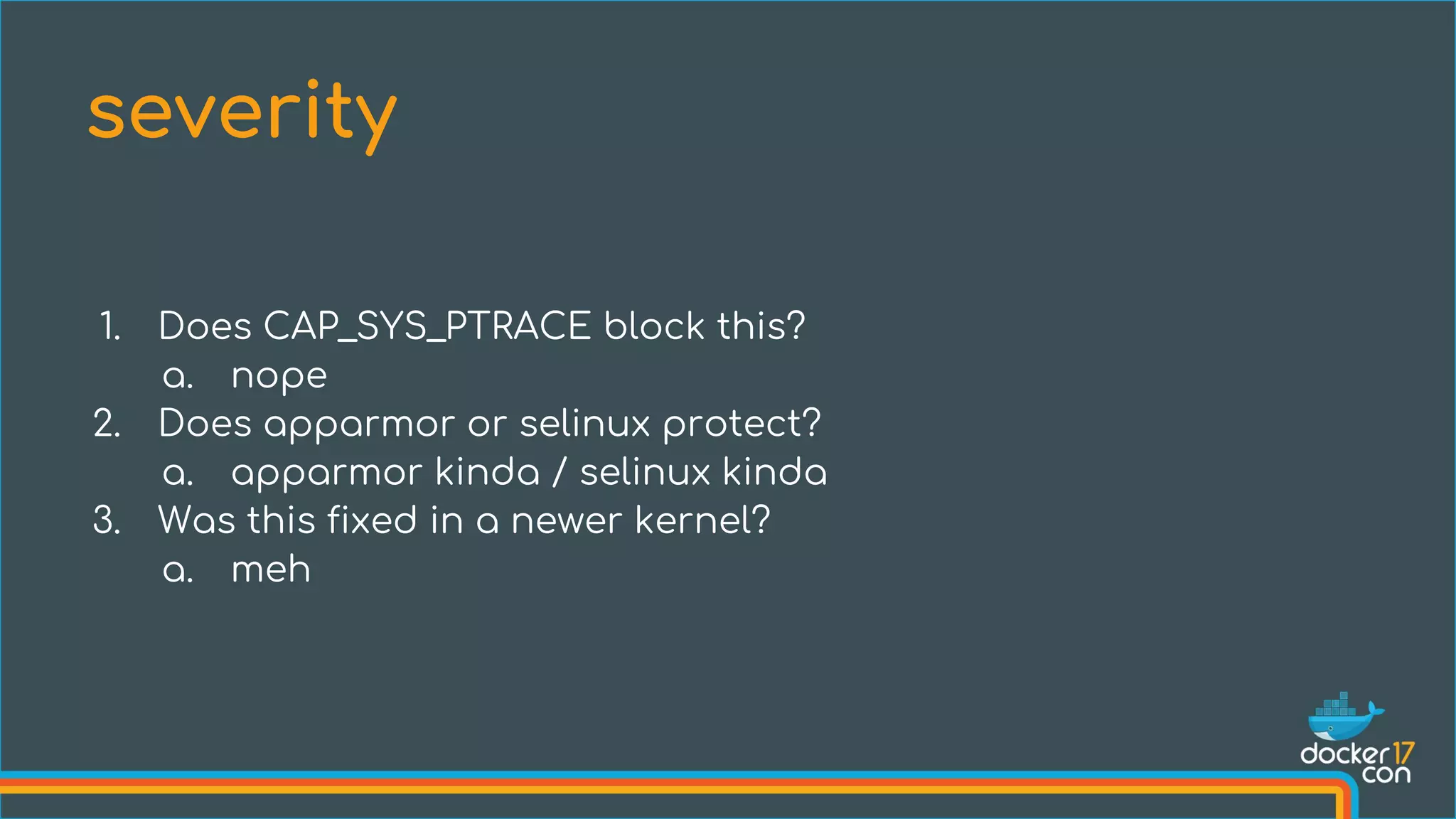

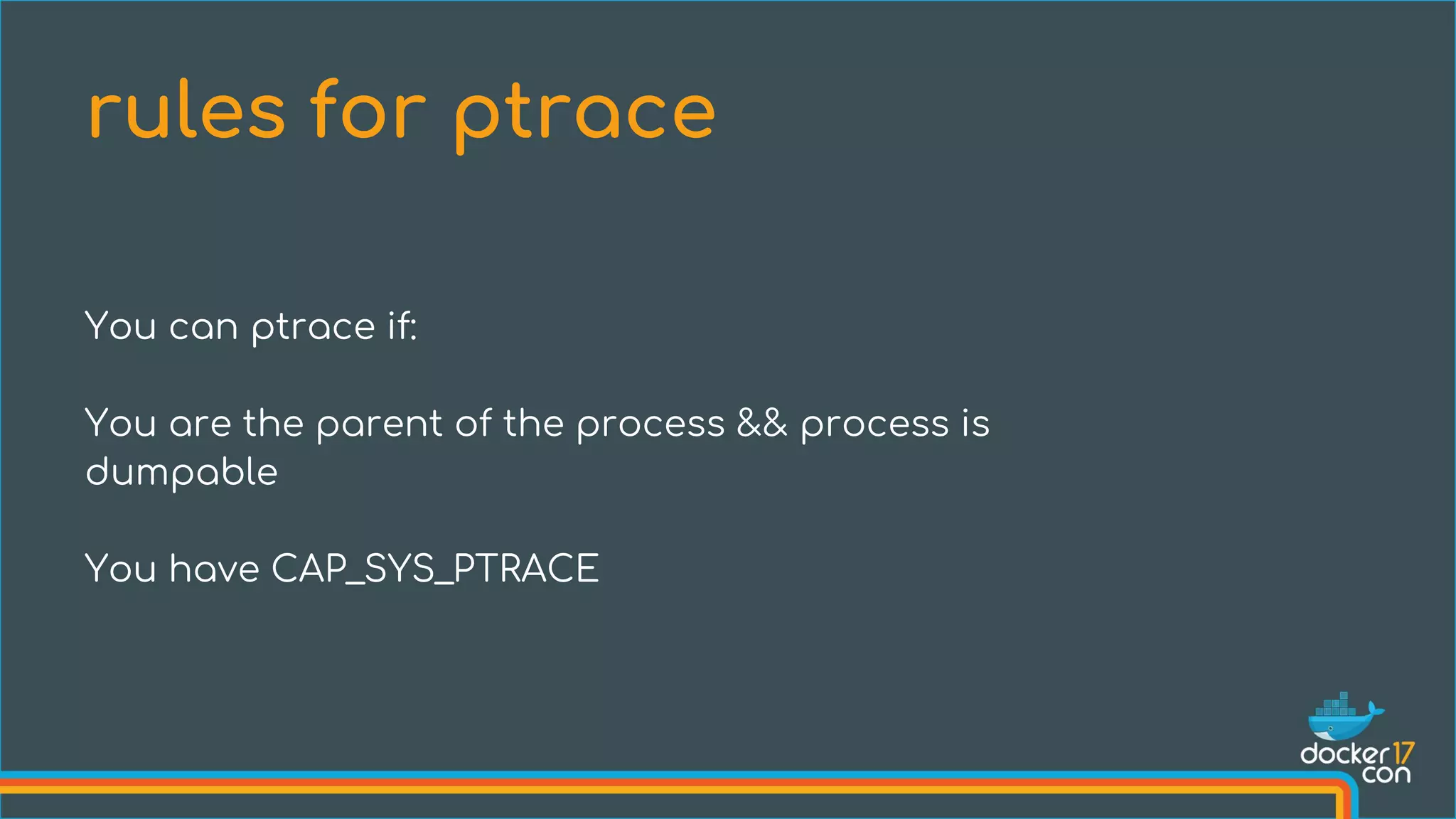

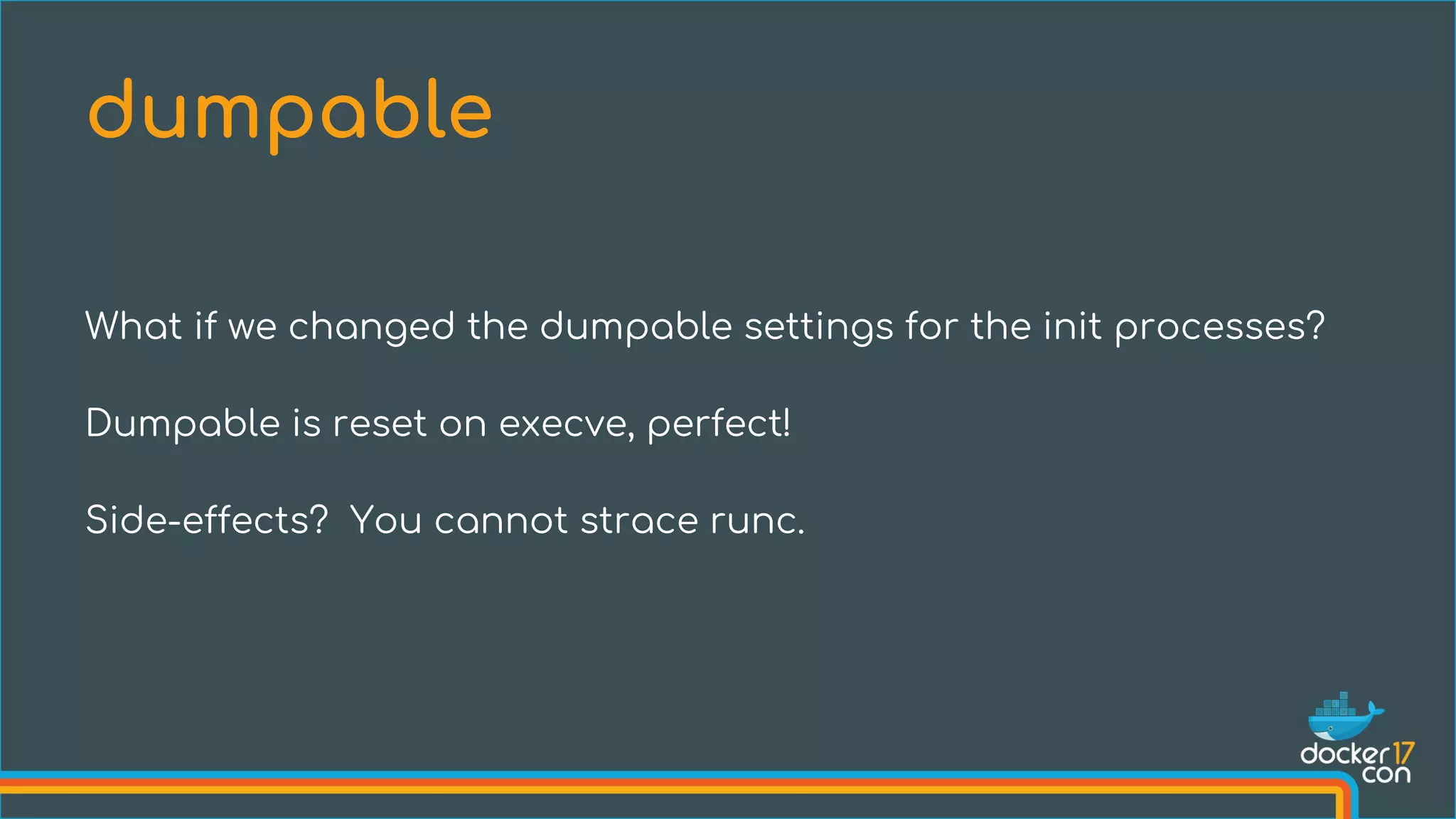

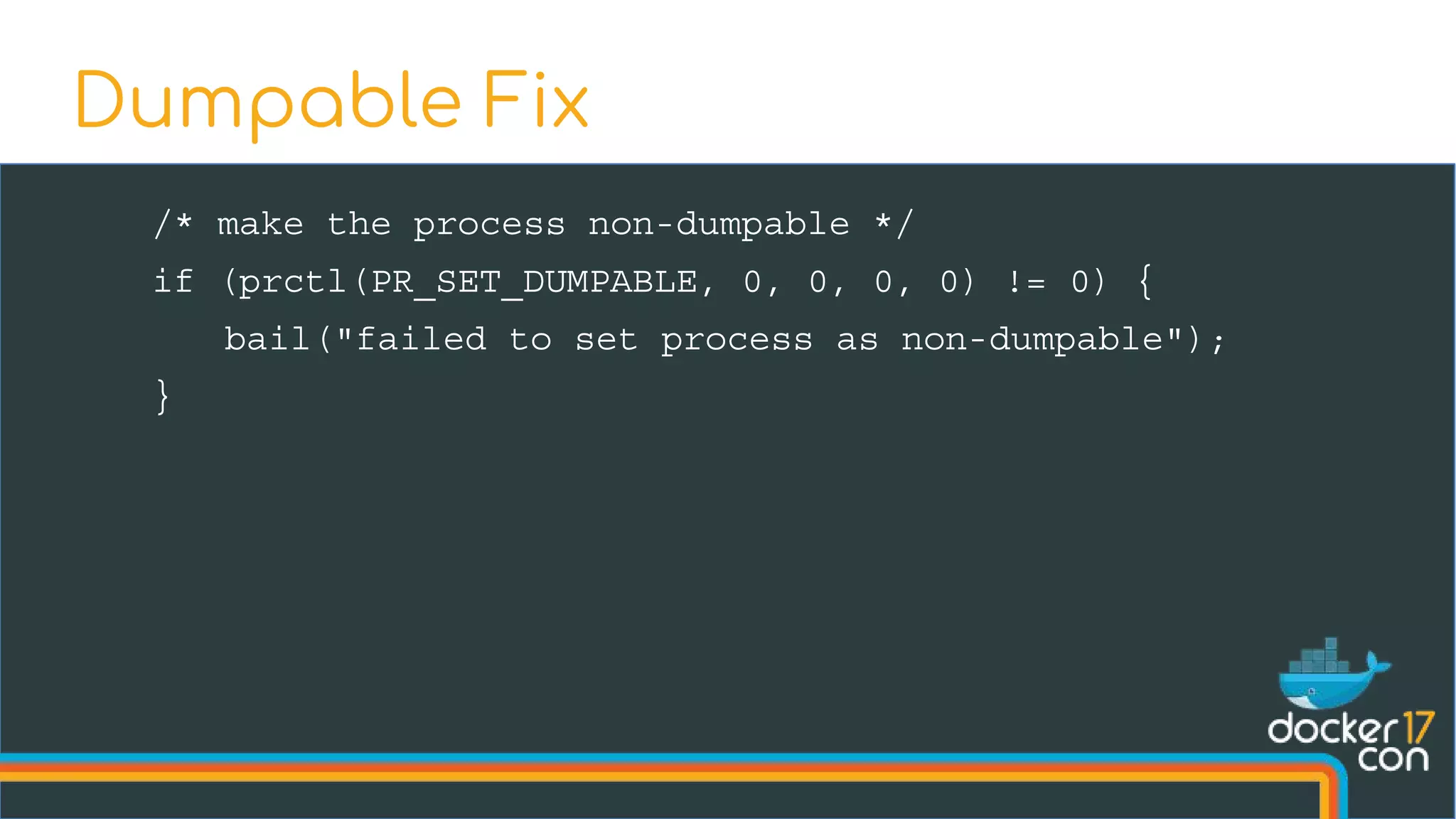

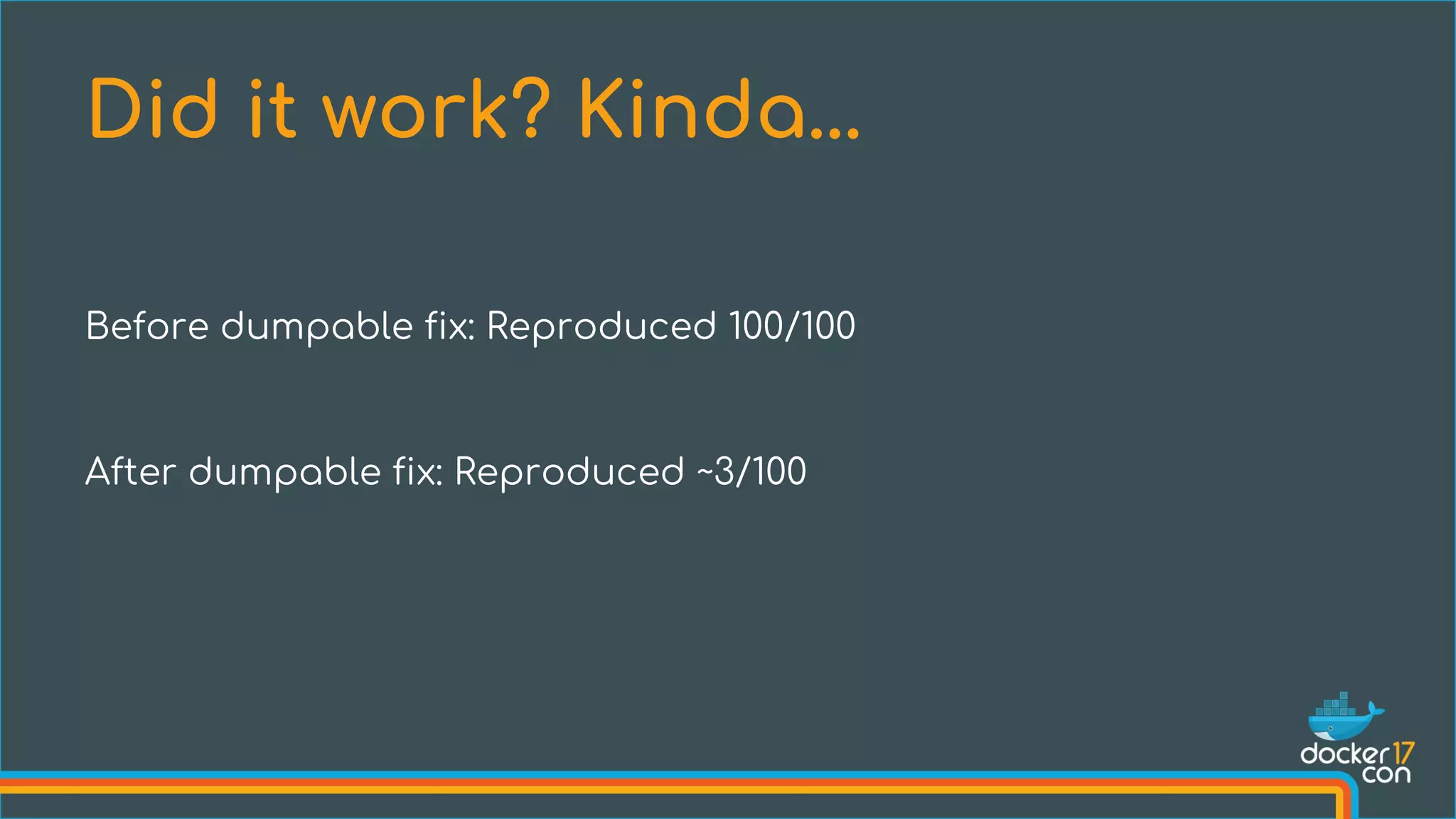

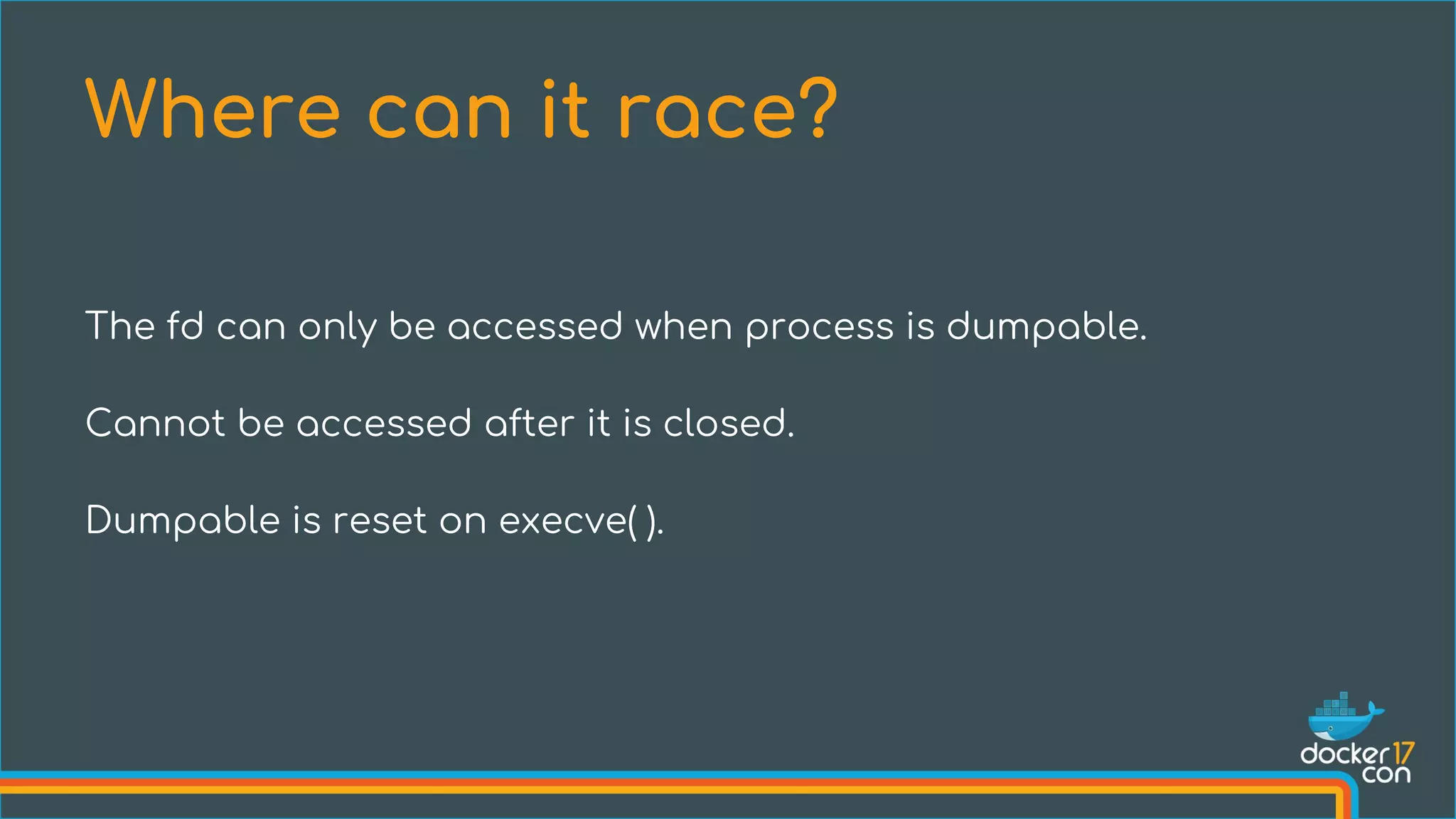

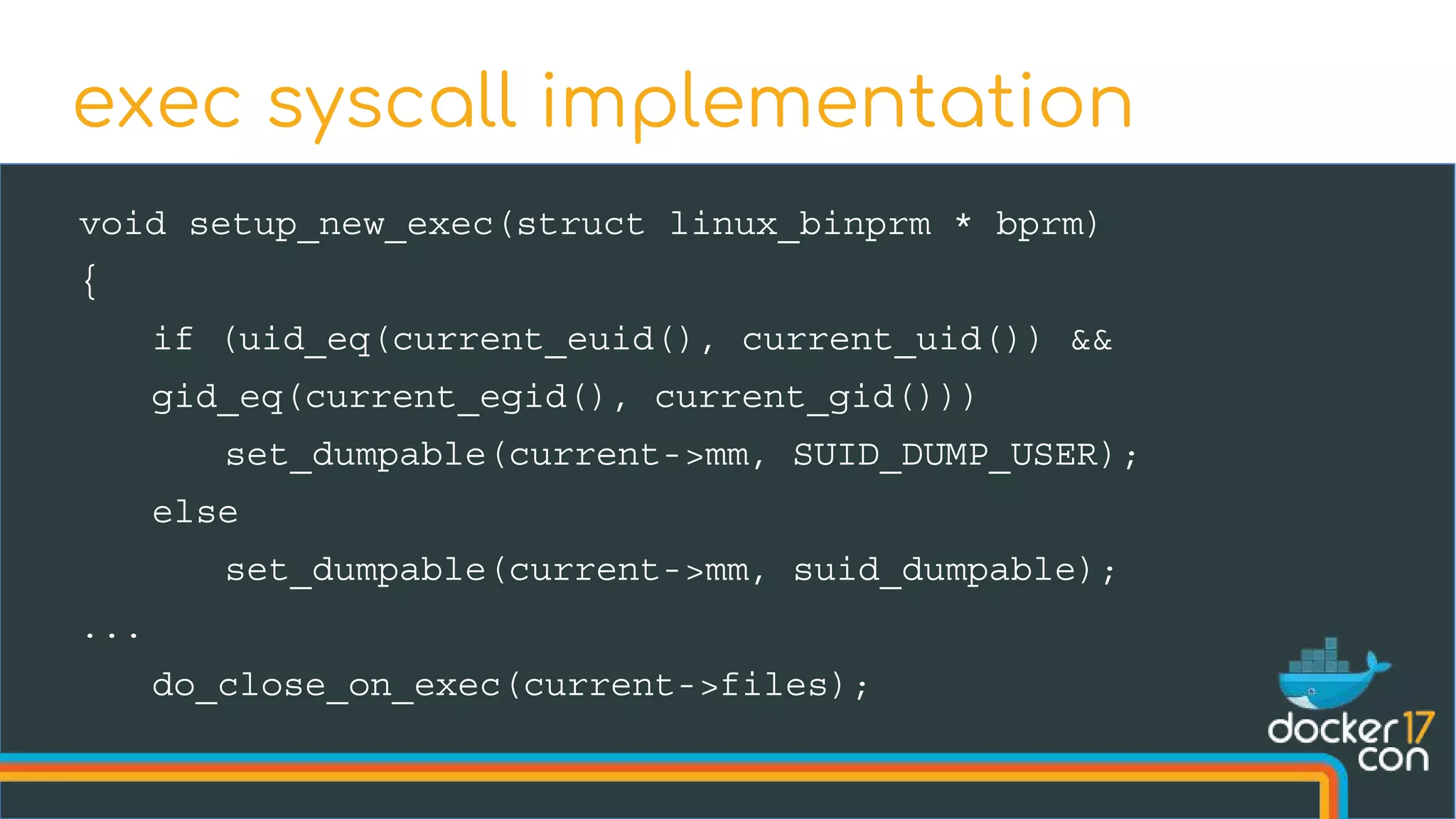

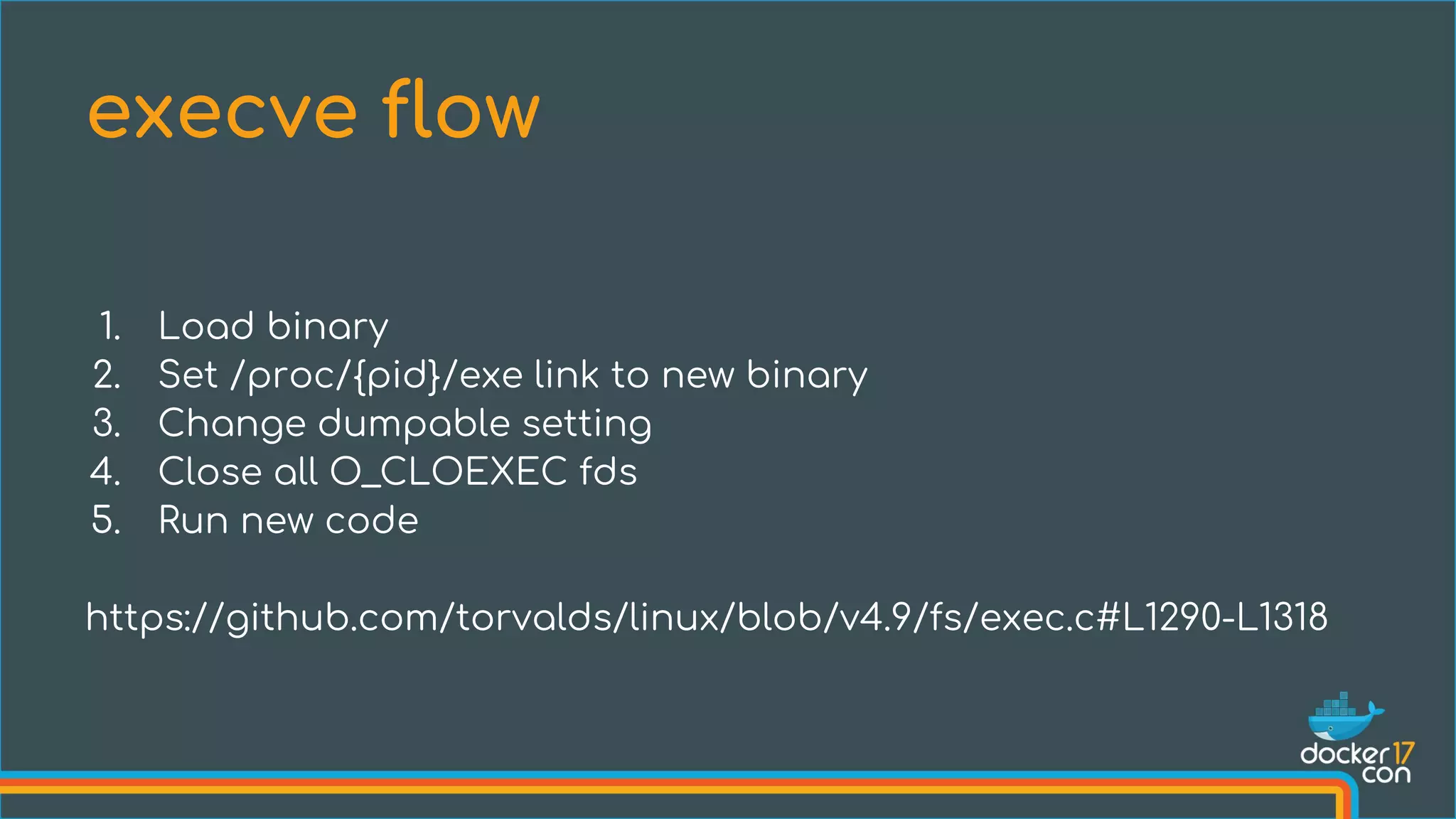

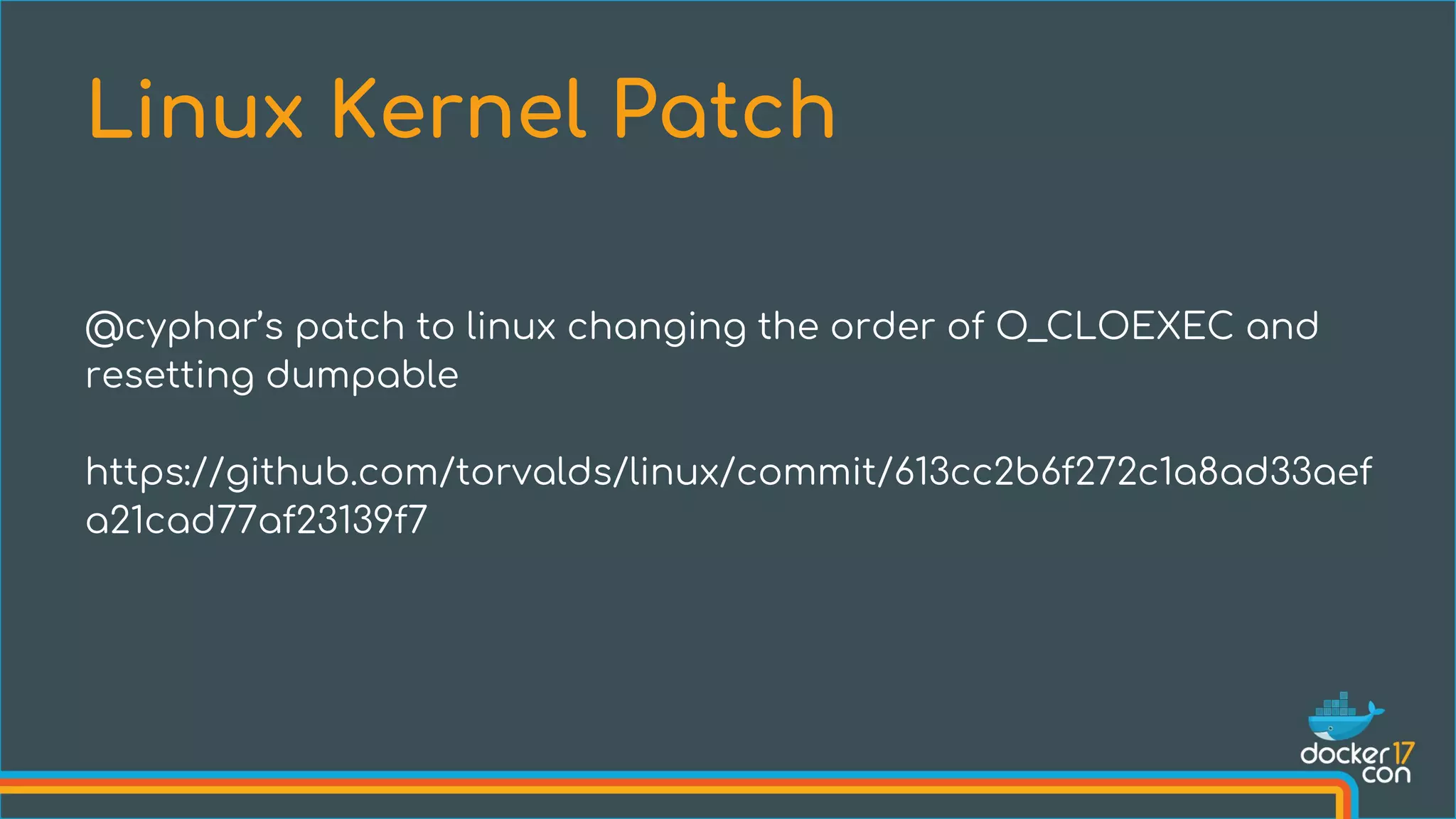

This presentation explains why Common Vulnerabilities and Exposures (CVEs) matter for securing container environments and outlines the CVE process: how vulnerabilities are reported, verified, and fixed. It examines specific runc vulnerabilities, the implications of ptrace for container security, the proposed fixes, and the role of the process dumpable setting in mitigating these issues. Its central message is that container security is an ongoing process rather than a one-time effort.
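
To make the dumpable setting concrete, here is a minimal sketch of how a Linux process can read and clear its own dumpable flag through the `prctl(2)` system call. When the flag is 0, the kernel refuses `PTRACE_ATTACH` requests from unprivileged processes, which is why the flag matters for ptrace-based attacks. The helper names `get_dumpable` and `set_dumpable` are hypothetical, and the summary does not say how runc itself applies the setting, so this only illustrates the kernel mechanism, not the actual fix. It assumes Linux and calls libc via `ctypes`.

```python
import ctypes
import os

# Option numbers from <linux/prctl.h>.
PR_GET_DUMPABLE = 3
PR_SET_DUMPABLE = 4

# Load the C library already linked into the interpreter (Linux only).
_libc = ctypes.CDLL(None, use_errno=True)
_libc.prctl.restype = ctypes.c_int
_libc.prctl.argtypes = [ctypes.c_int] + [ctypes.c_ulong] * 4


def get_dumpable() -> int:
    """Return the calling process's dumpable flag (0 means not dumpable)."""
    result = _libc.prctl(PR_GET_DUMPABLE, 0, 0, 0, 0)
    if result < 0:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err))
    return result


def set_dumpable(enabled: bool) -> None:
    """Set (True) or clear (False) the calling process's dumpable flag."""
    if _libc.prctl(PR_SET_DUMPABLE, 1 if enabled else 0, 0, 0, 0) != 0:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err))
```

A privileged helper that is about to enter a less trusted context could call `set_dumpable(False)` first, so that unprivileged processes in that context cannot attach to it with ptrace. Note that the kernel resets the flag in some situations, for example on `execve`, so the placement of the call matters.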