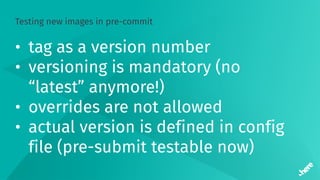

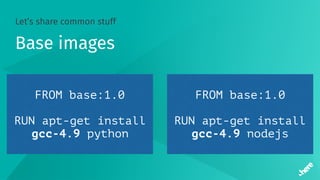

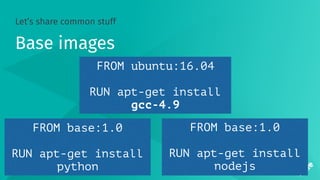

The document provides insights on using Docker in continuous integration (CI) environments, detailing the evolution from naive implementations to more structured and efficient workflows. It covers challenges faced, improvements made over time, and best practices for utilizing Docker effectively while minimizing issues such as image size and dependency management. Ultimately, the author emphasizes the importance of monitoring and refining CI processes to maintain stability and enhance productivity.

![Timeouts: "docker pull" times out](https://image.slidesharecdn.com/5dockercihere2-170630120940/85/Docker-CI-HERE-Technologies-34-320.jpg)

Timeouts: `docker pull my_image:1.0` times out while layers are still downloading:

- `b6f892: Downloading [===========> ] XX MB/YY MB`
- `55010f: Downloading [============> ] XX MB/YY MB`
- `2955fb: Downloading [=============> ] XX MB/YY MB`
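The slide shows only the symptom. A common CI mitigation is to bound each pull with a timeout and retry with exponential backoff. The Python sketch below assumes that approach. The function name `pull_with_retry` and the default attempt count, timeout, and backoff values are all hypothetical and do not come from the talk. The `run` and `sleep` parameters can be injected, so the retry logic can be exercised without a Docker daemon.

```python
import subprocess
import time
from typing import Callable


def pull_with_retry(
    image: str,
    attempts: int = 3,
    timeout_s: float = 300.0,
    backoff_s: float = 5.0,
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run `docker pull IMAGE`, retrying on timeout or non-zero exit.

    Hypothetical helper: returns the 1-based attempt number that succeeded,
    or raises RuntimeError once every attempt has failed.
    """
    cmd = ["docker", "pull", image]
    for attempt in range(1, attempts + 1):
        try:
            # subprocess.run kills the child and raises TimeoutExpired
            # if it has not finished within timeout_s seconds.
            result = run(cmd, timeout=timeout_s, check=False)
            if result.returncode == 0:
                return attempt
        except subprocess.TimeoutExpired:
            pass  # treat a hung pull like a failed one and retry
        if attempt < attempts:
            # Exponential backoff: backoff_s, 2*backoff_s, 4*backoff_s, ...
            sleep(backoff_s * 2 ** (attempt - 1))
    raise RuntimeError(f"docker pull {image} failed after {attempts} attempts")
```

For example, `pull_with_retry("my_image:1.0", attempts=3, timeout_s=300)` returns the number of the attempt that succeeded. When the timeout expires, `subprocess.run` kills only the `docker` CLI process. Whether the daemon also abandons the in-flight download is outside this sketch's control.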

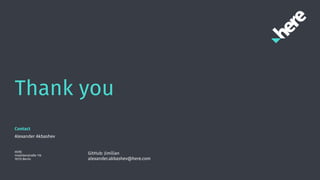

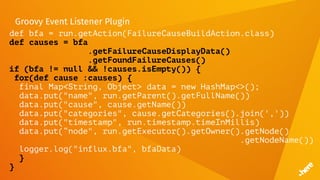

![Build Failure Analyzer Plugin: Docker error patterns](https://image.slidesharecdn.com/5dockercihere2-170630120940/85/Docker-CI-HERE-Technologies-64-320.jpg)

Build Failure Analyzer Plugin: regular expressions for recurring Docker daemon errors:

- `docker: Error response from daemon: linux runtime spec devices: .+`
- `docker: Error response from daemon: rpc error: code = 2 desc = "containerd: container did not start before the specified timeout"`
- `docker: Error response from daemon: Cannot start container [0-9a-f]+: lstat .+`
- `docker: Error response from daemon: shim error: context deadline exceeded.+`
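To illustrate how these patterns could sort a console log into failure causes, here is a minimal Python sketch. The four regular expressions are copied from the slide. The cause names (such as `runtime-spec-devices`) and the helper functions are hypothetical. The sketch uses `re.search`, which matches anywhere in a line. The plugin's own matching rules may differ, for example if it requires the whole line to match, so treat this as an approximation.

```python
import re
from typing import Dict, Iterable, Optional

# The four regexes are copied from the slide; the cause names are hypothetical.
DOCKER_FAILURE_PATTERNS = {
    "runtime-spec-devices": re.compile(
        r"docker: Error response from daemon: linux runtime spec devices: .+"),
    "containerd-start-timeout": re.compile(
        r'docker: Error response from daemon: rpc error: code = 2 desc = '
        r'"containerd: container did not start before the specified timeout"'),
    "cannot-start-lstat": re.compile(
        r"docker: Error response from daemon: Cannot start container "
        r"[0-9a-f]+: lstat .+"),
    "shim-deadline": re.compile(
        r"docker: Error response from daemon: shim error: "
        r"context deadline exceeded.+"),
}


def classify_line(line: str) -> Optional[str]:
    """Return the first cause whose pattern occurs anywhere in the line."""
    for cause, pattern in DOCKER_FAILURE_PATTERNS.items():
        if pattern.search(line):
            return cause
    return None


def classify_log(lines: Iterable[str]) -> Dict[str, int]:
    """Count matching lines per cause across a whole console log."""
    counts: Dict[str, int] = {}
    for line in lines:
        cause = classify_line(line)
        if cause is not None:
            counts[cause] = counts.get(cause, 0) + 1
    return counts
```

Counting causes per build, rather than stopping at the first match, makes it easier to see which daemon error dominates across many CI runs.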

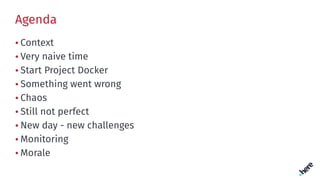

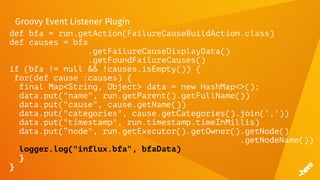

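![FluentD configuration routing influx.bfa events to InfluxDB](https://image.slidesharecdn.com/5dockercihere2-170630120940/85/Docker-CI-HERE-Technologies-70-320.jpg)

FluentD: events tagged `influx.bfa` are caught by `<match influx.bfa>` and written through a `<store>` of `@type influxdb` with these settings:

- `host influxdb.internal`
- `port 8086`
- `dbname bfs`
- `tag_keys ["name","node","cause","categories"]`
- `timestamp_tag timestamp`
- `time_precision ms`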
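This configuration sends every event tagged `influx.bfa` to InfluxDB. The `tag_keys` option of the influxdb output names the record keys that are written as InfluxDB tags. The Python sketch below builds such an event and an HTTP request carrying it. It assumes a FluentD `in_http` source listening at a hypothetical `http://fluentd.internal:9880`, since no `<source>` appears on the slide. The `count` field, the comma-joined `categories` string, and the epoch-millisecond `timestamp` value are also assumptions, chosen to fit `timestamp_tag timestamp` and `time_precision ms`.

```python
import json
import time
import urllib.request
from typing import Iterable, Optional

# Hypothetical in_http endpoint; the slide shows only the <match> side.
FLUENTD_HTTP_URL = "http://fluentd.internal:9880"
# Tag taken from <match influx.bfa> on the slide.
BFA_TAG = "influx.bfa"


def bfa_event(name: str, node: str, cause: str,
              categories: Iterable[str], ts_ms: Optional[int] = None) -> dict:
    """Build one failure record; name/node/cause/categories match tag_keys."""
    return {
        "name": name,
        "node": node,
        "cause": cause,
        # Assumption: categories flattened into one comma-separated tag value.
        "categories": ",".join(categories),
        # Assumption: epoch milliseconds, to fit time_precision ms.
        "timestamp": ts_ms if ts_ms is not None else int(time.time() * 1000),
        # Hypothetical numeric field; InfluxDB points need at least one field.
        "count": 1,
    }


def build_request(event: dict) -> urllib.request.Request:
    """POST the event as JSON; in_http takes the FluentD tag from the URL path."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        f"{FLUENTD_HTTP_URL}/{BFA_TAG}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending the event is then `urllib.request.urlopen(build_request(event), timeout=5)`. Building the request separately from sending it keeps the record format testable without a running FluentD.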

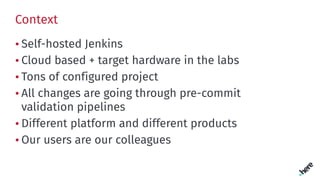

![FluentD configuration (same slide text as the previous FluentD slide)](https://image.slidesharecdn.com/5dockercihere2-170630120940/85/Docker-CI-HERE-Technologies-71-320.jpg)