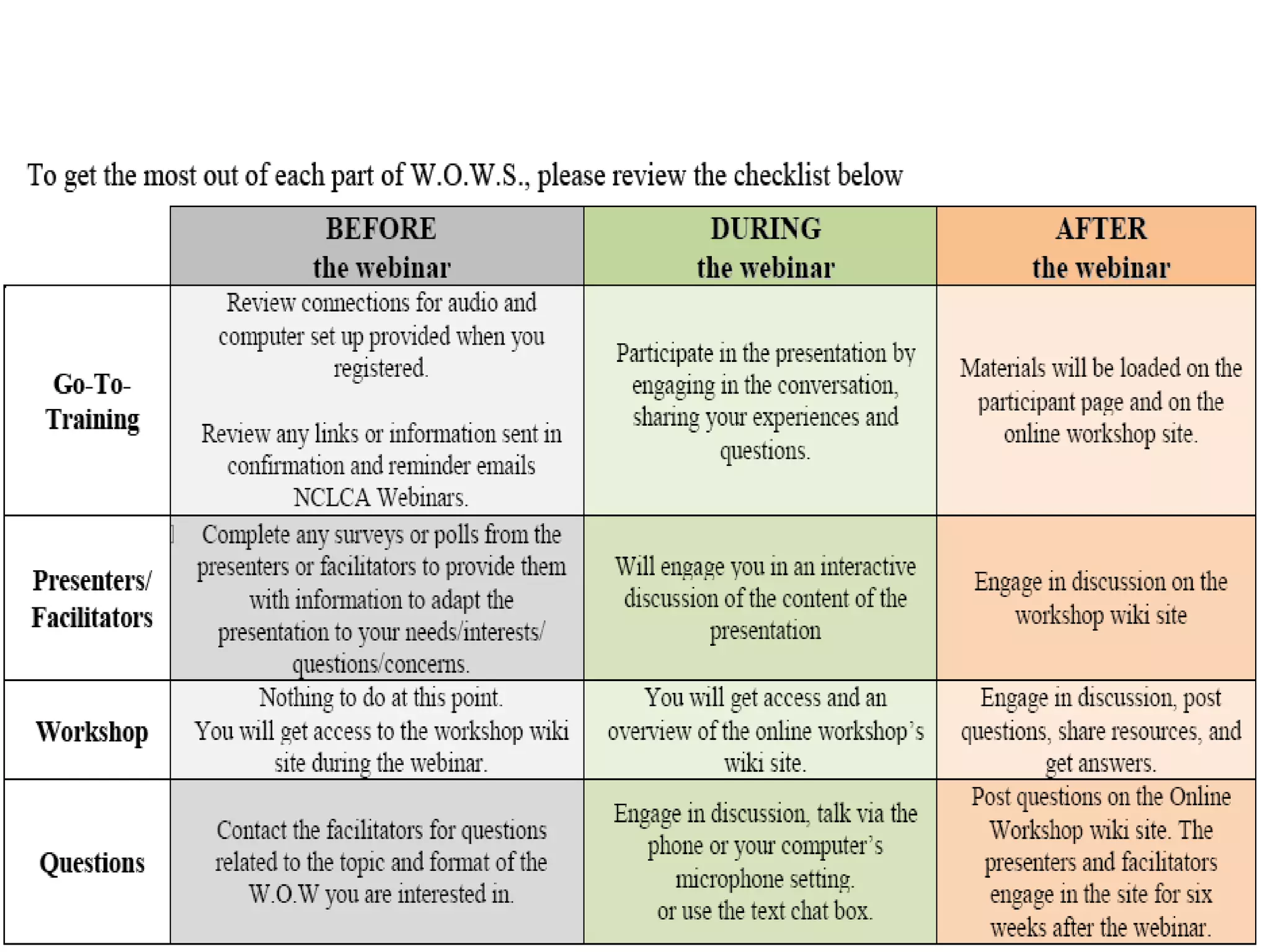

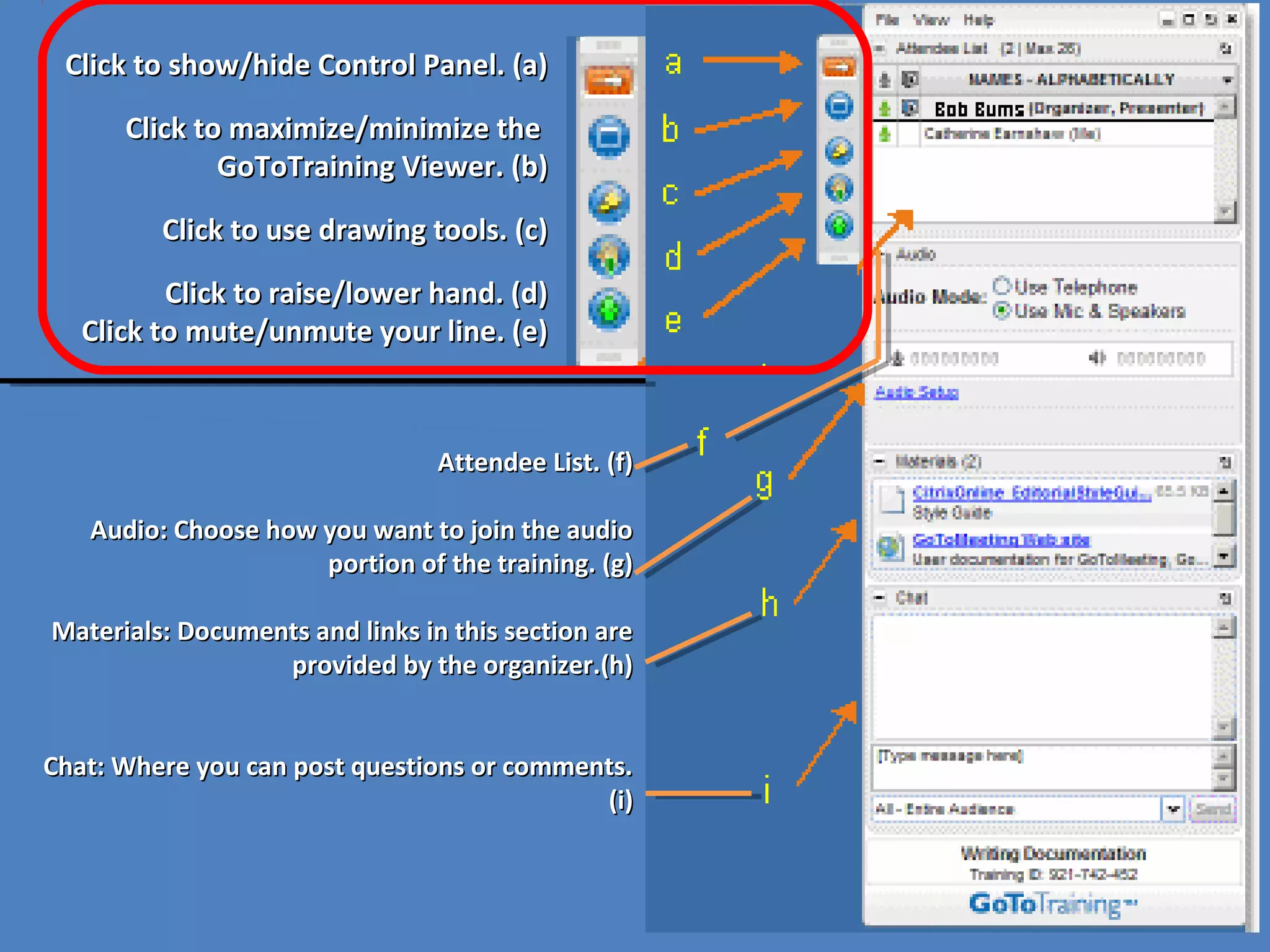

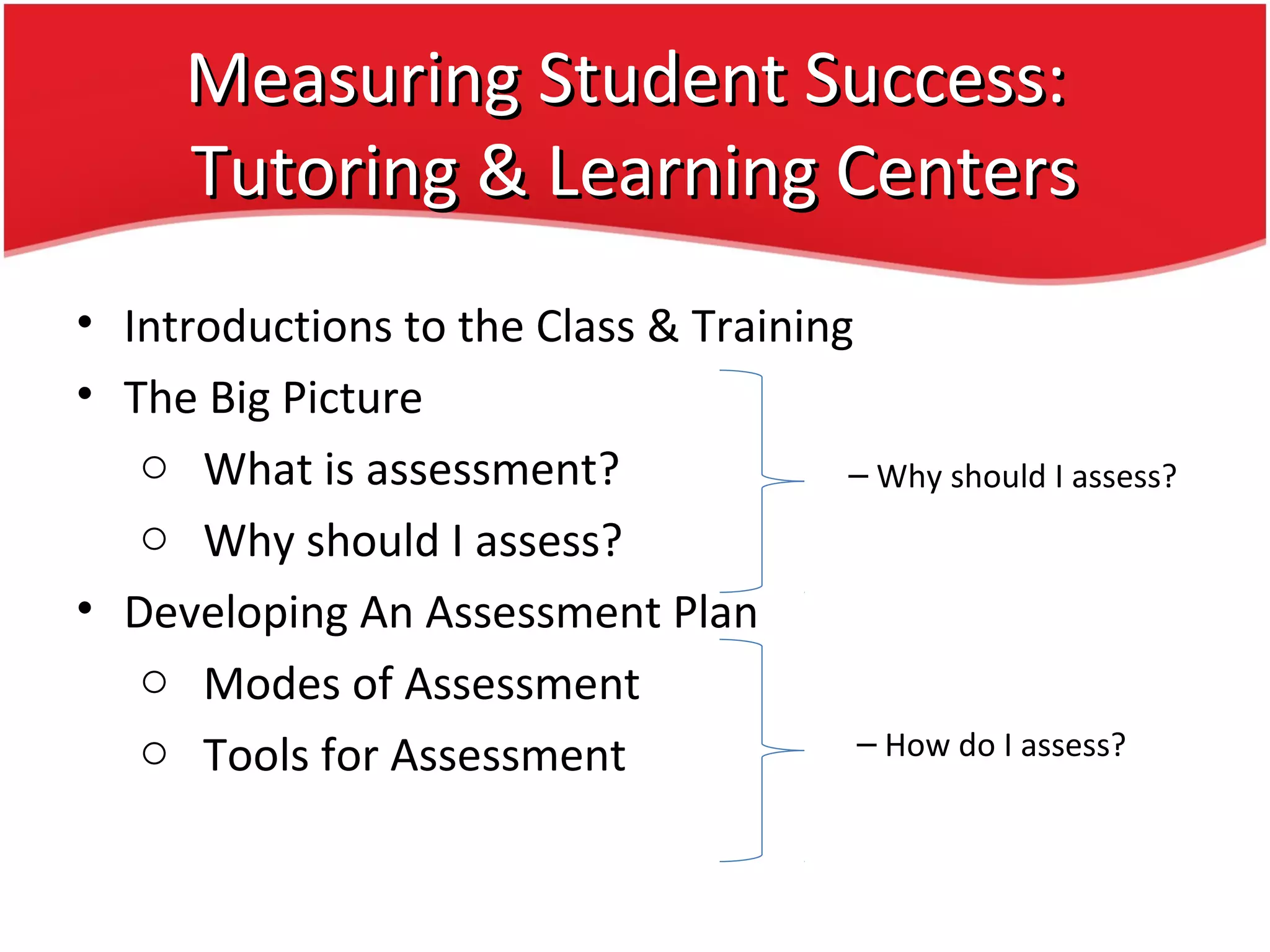

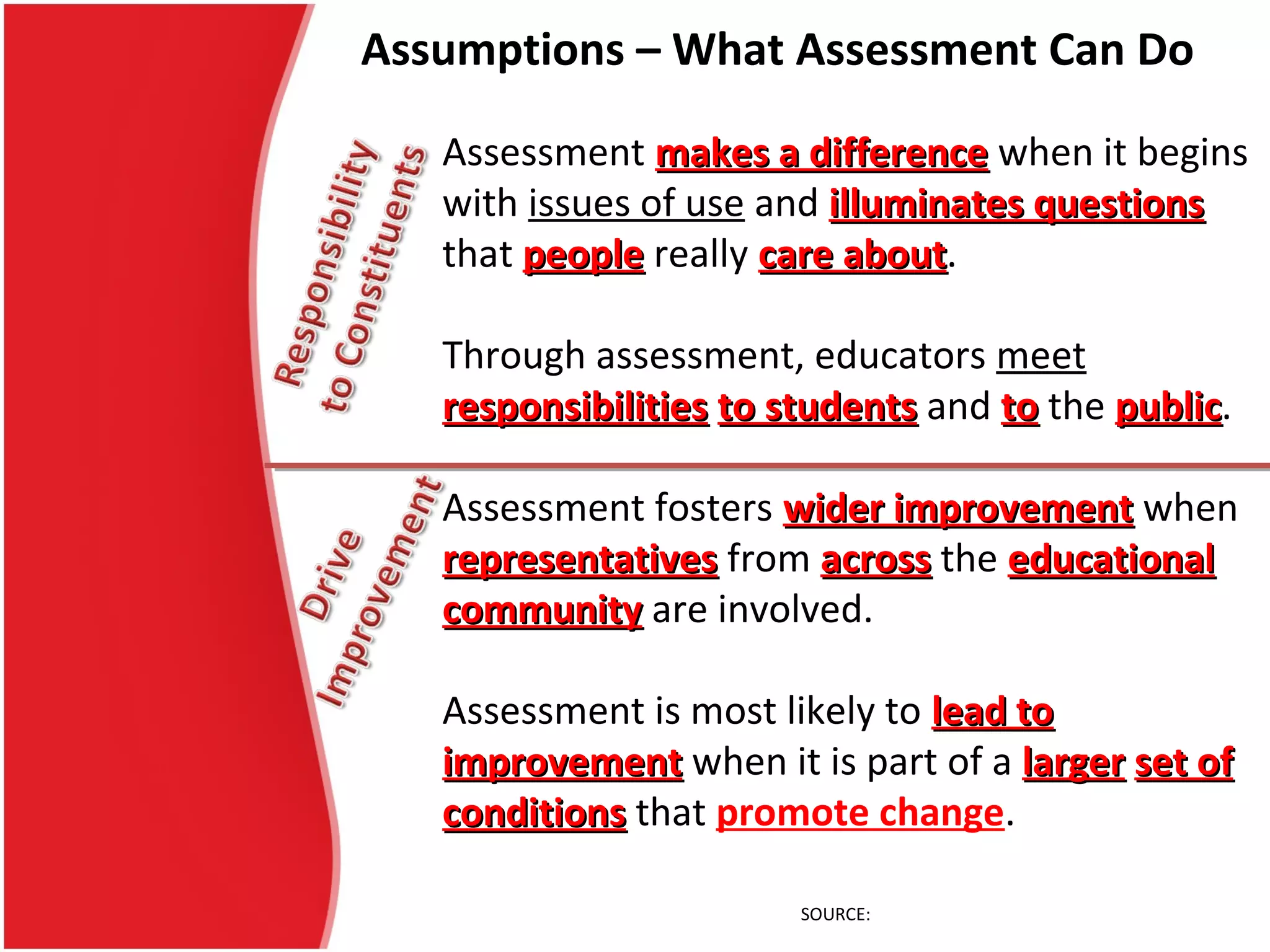

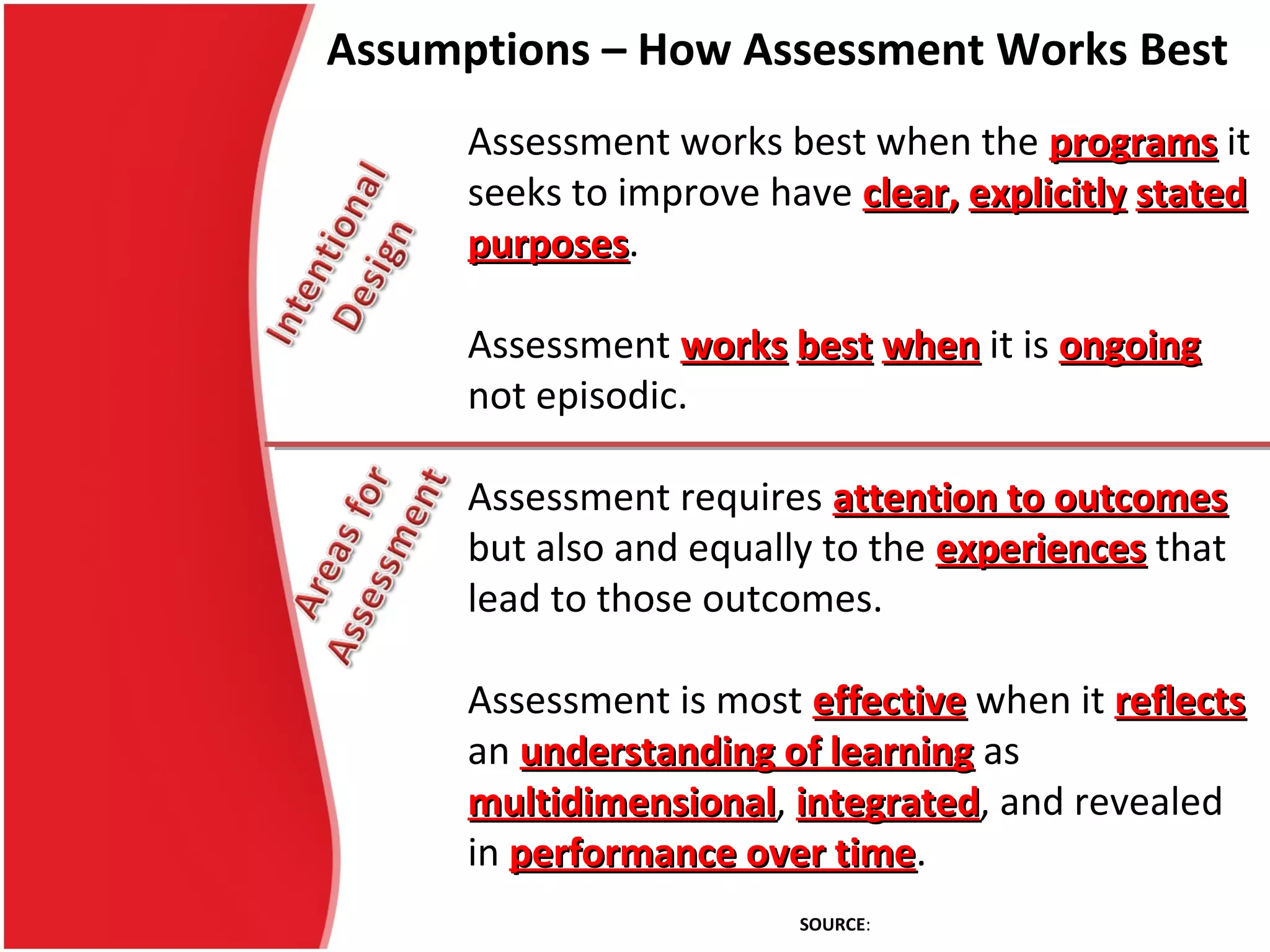

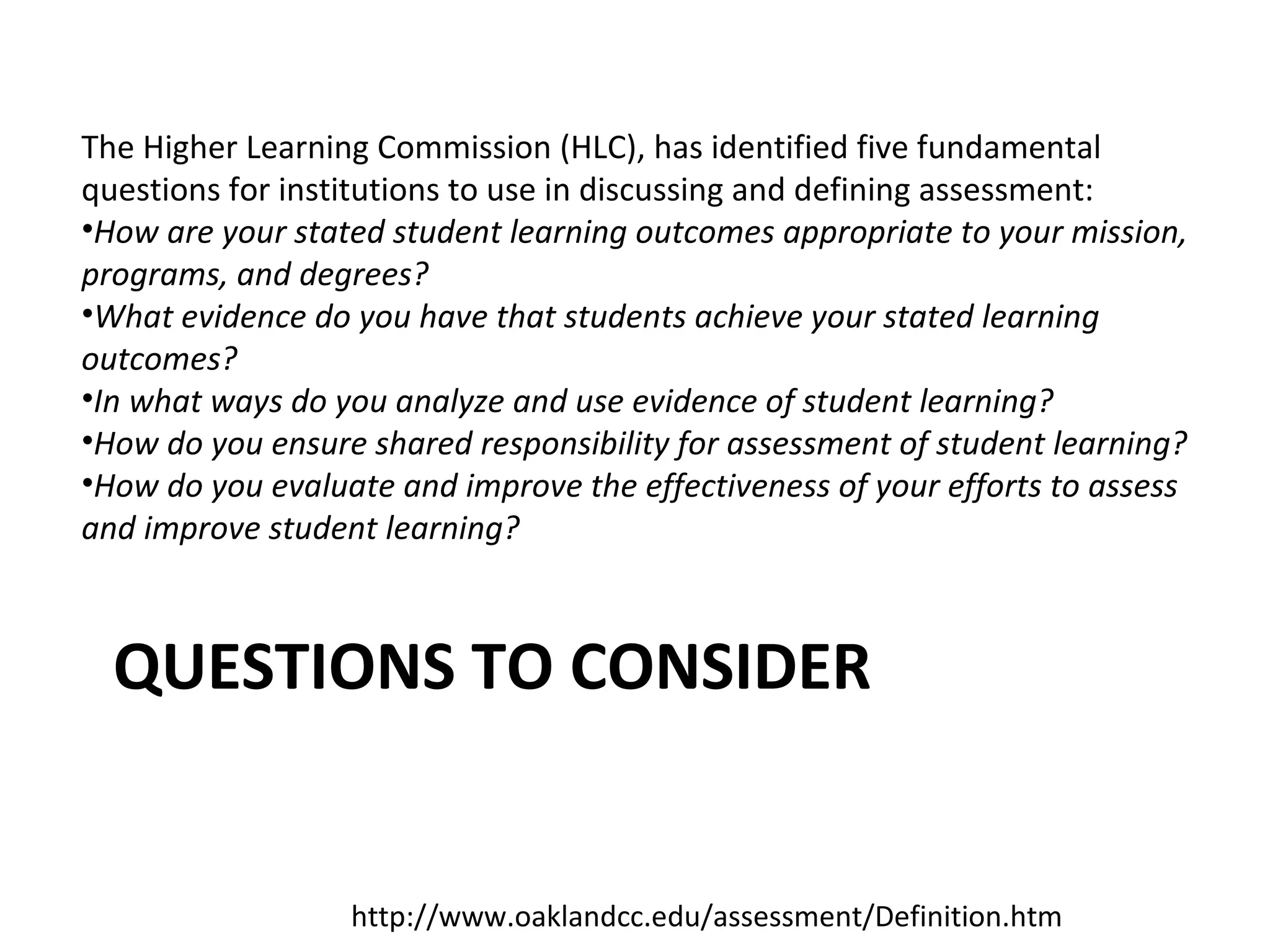

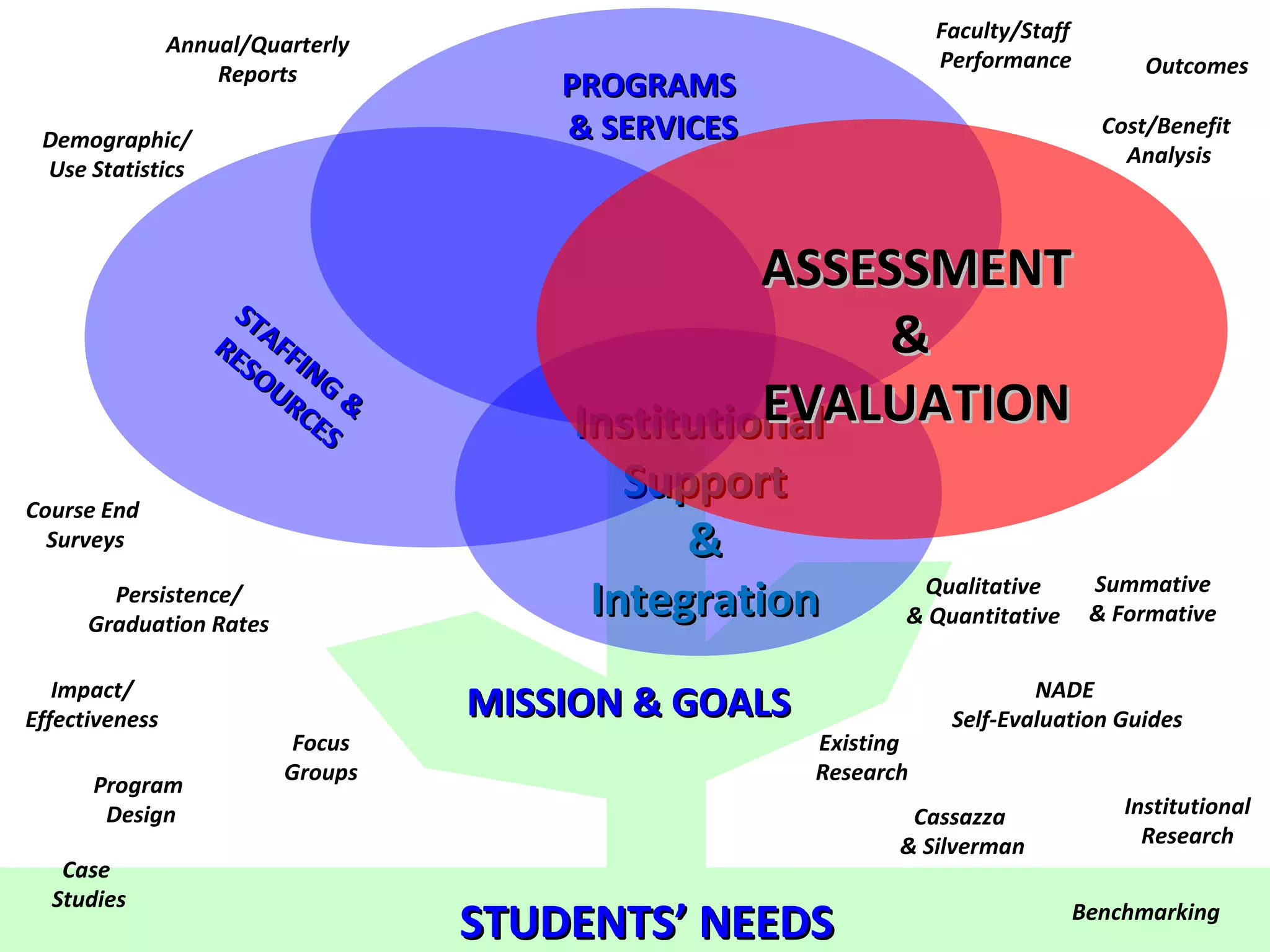

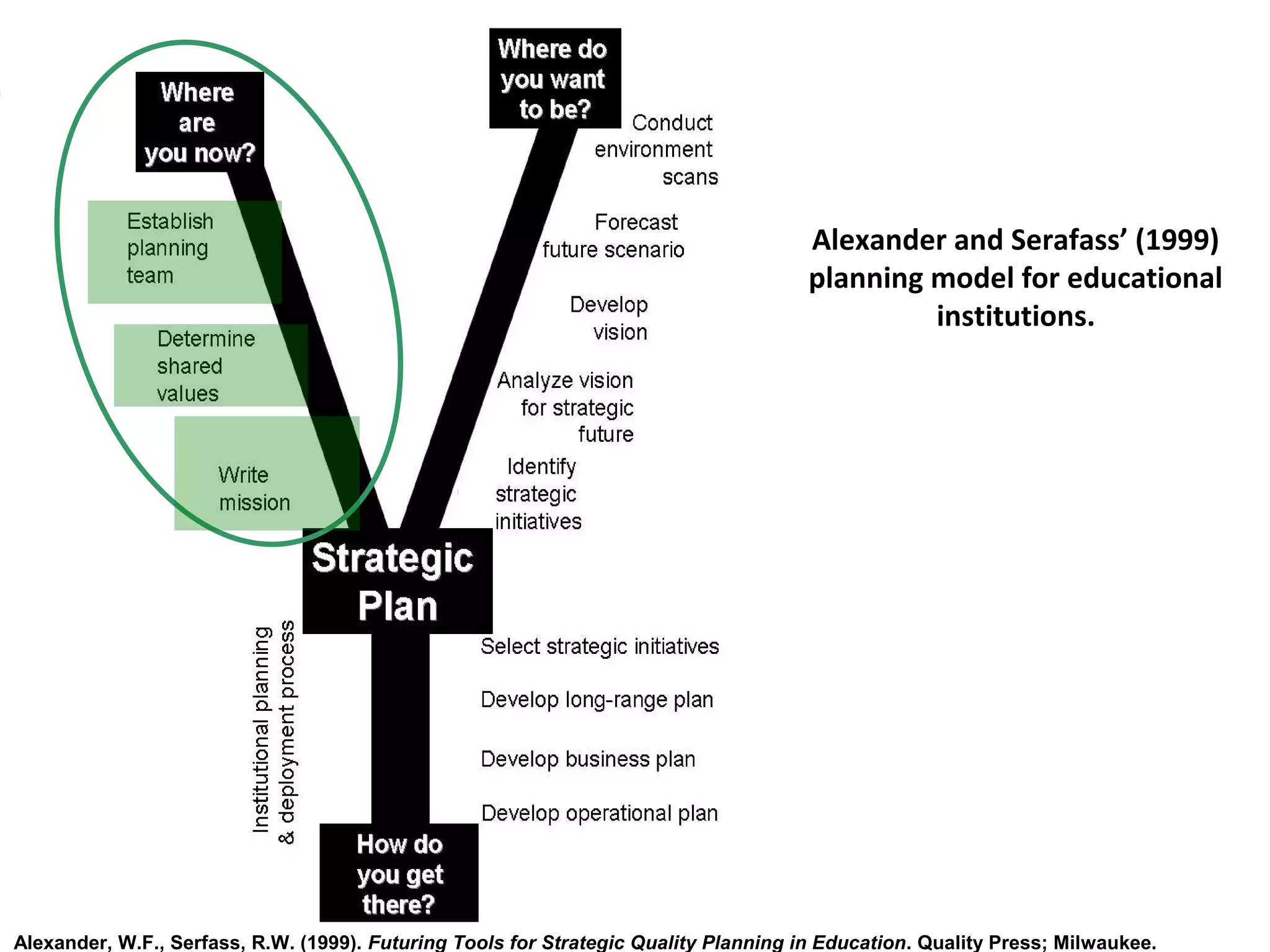

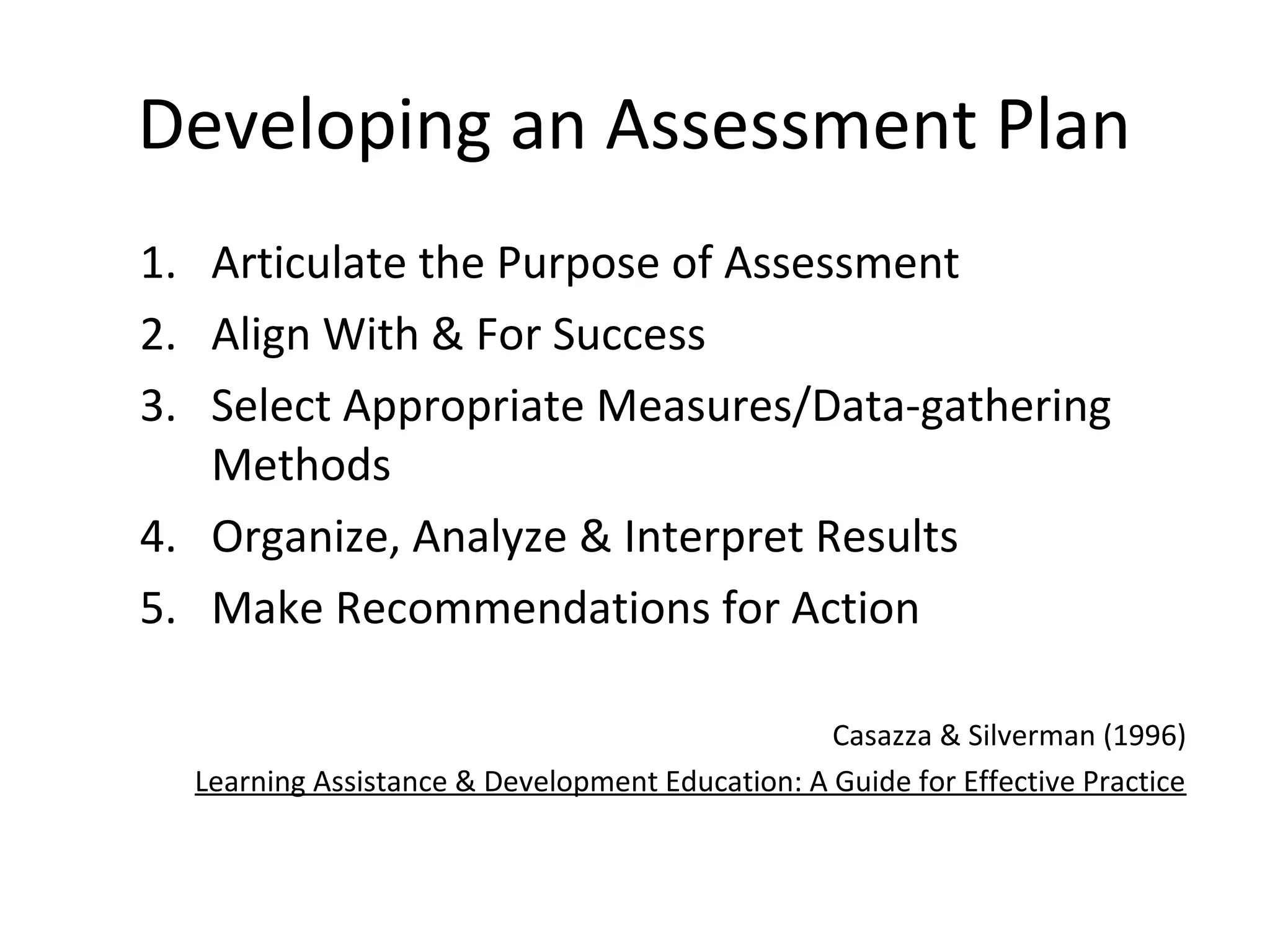

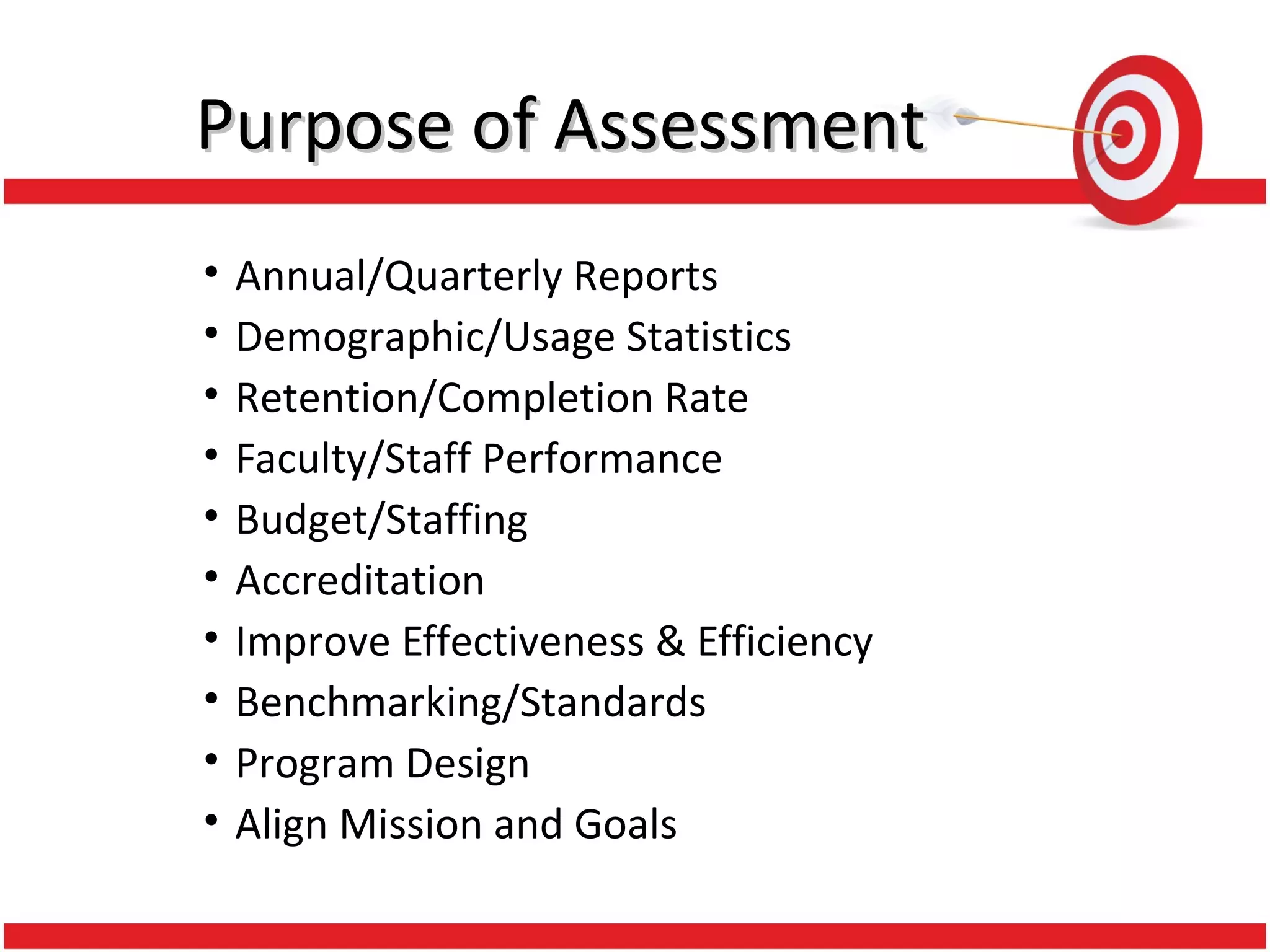

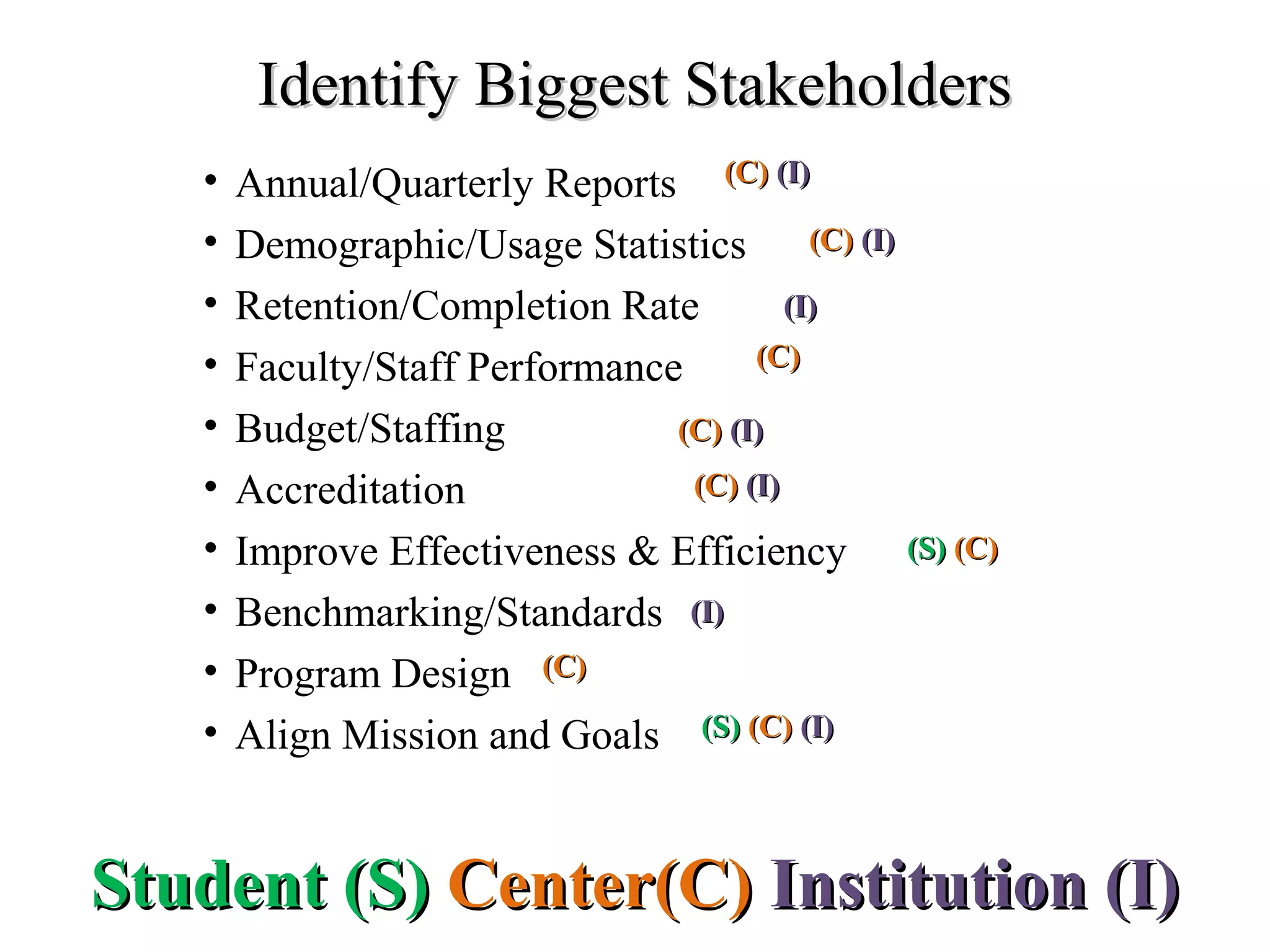

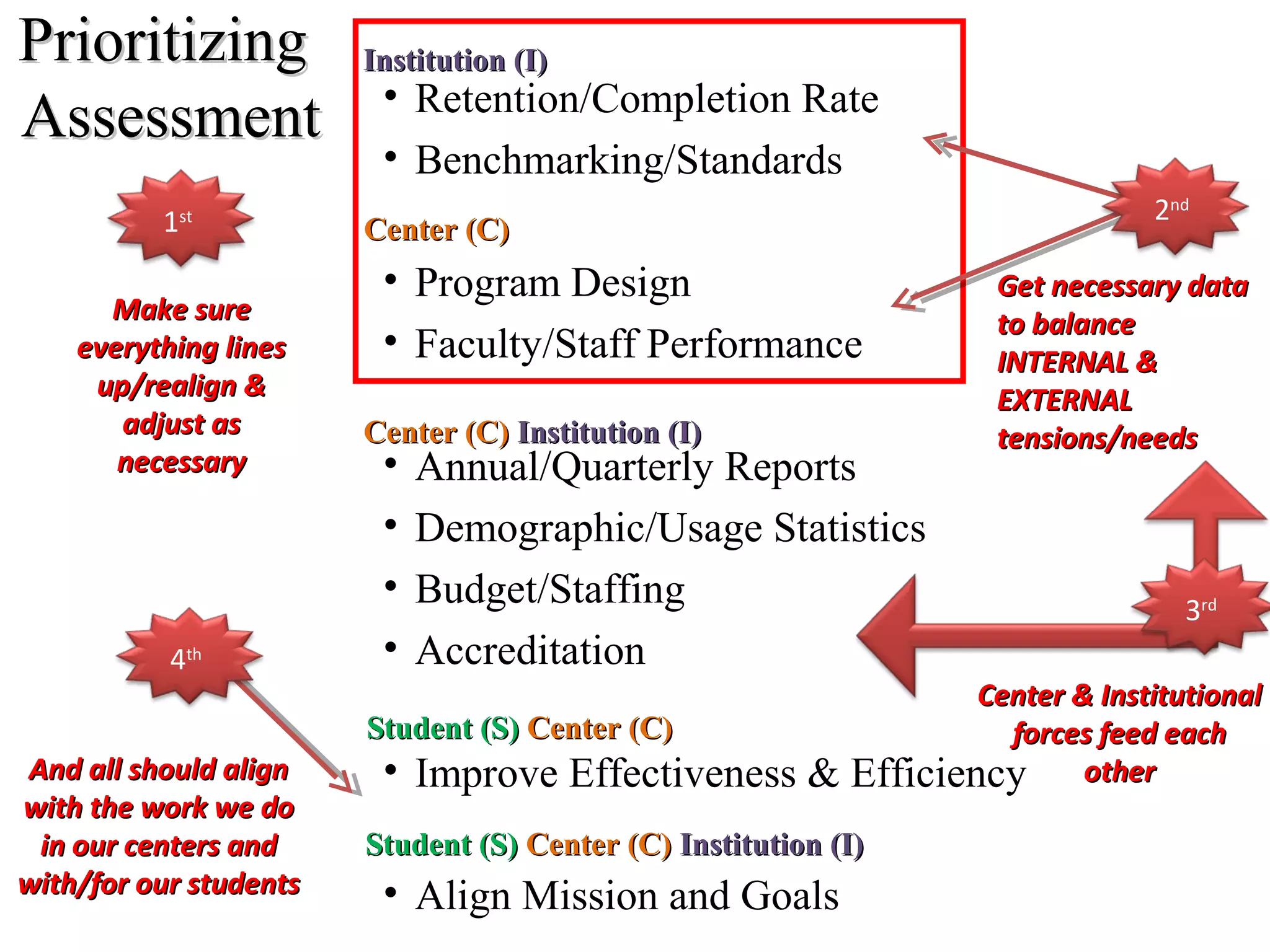

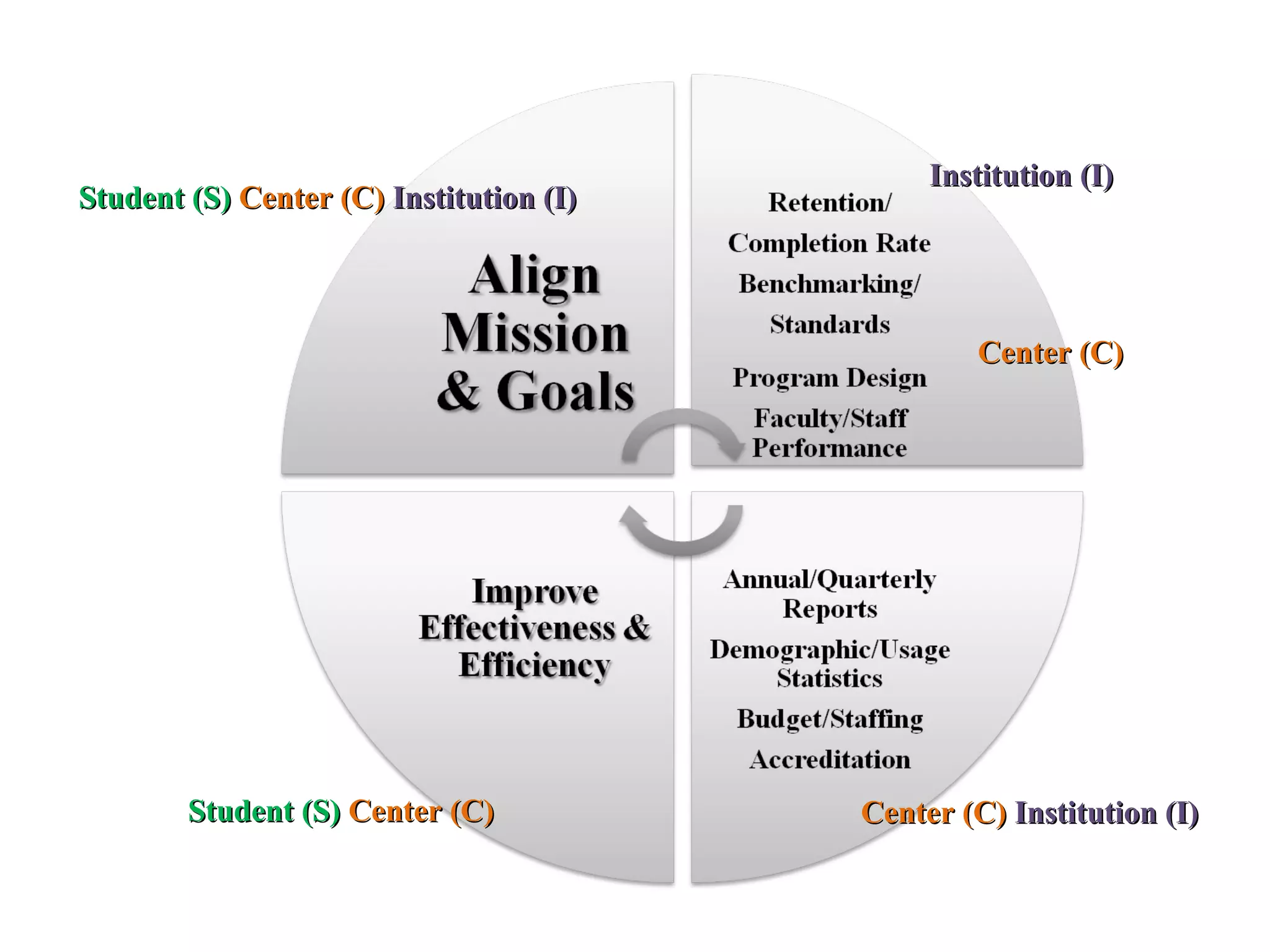

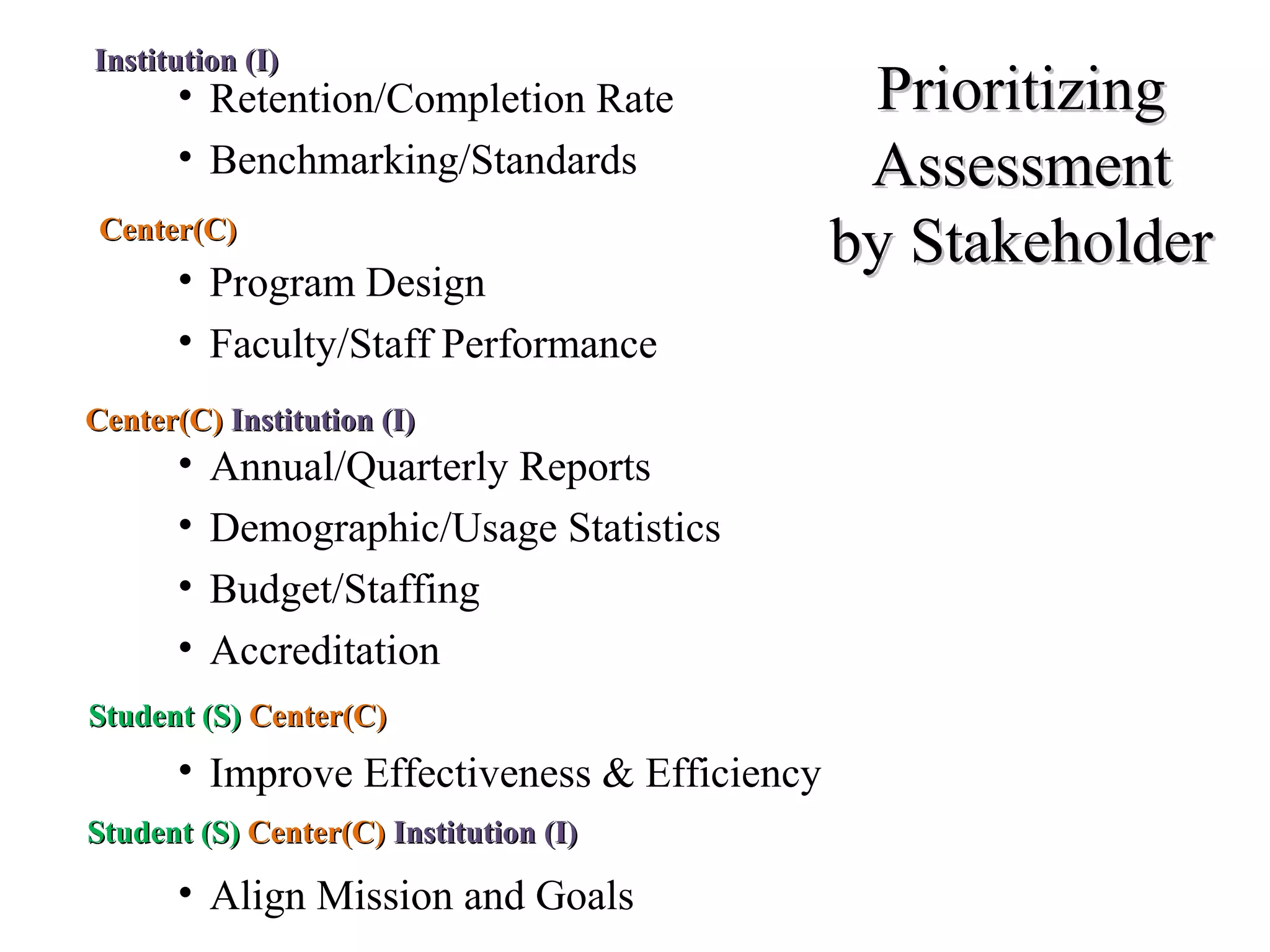

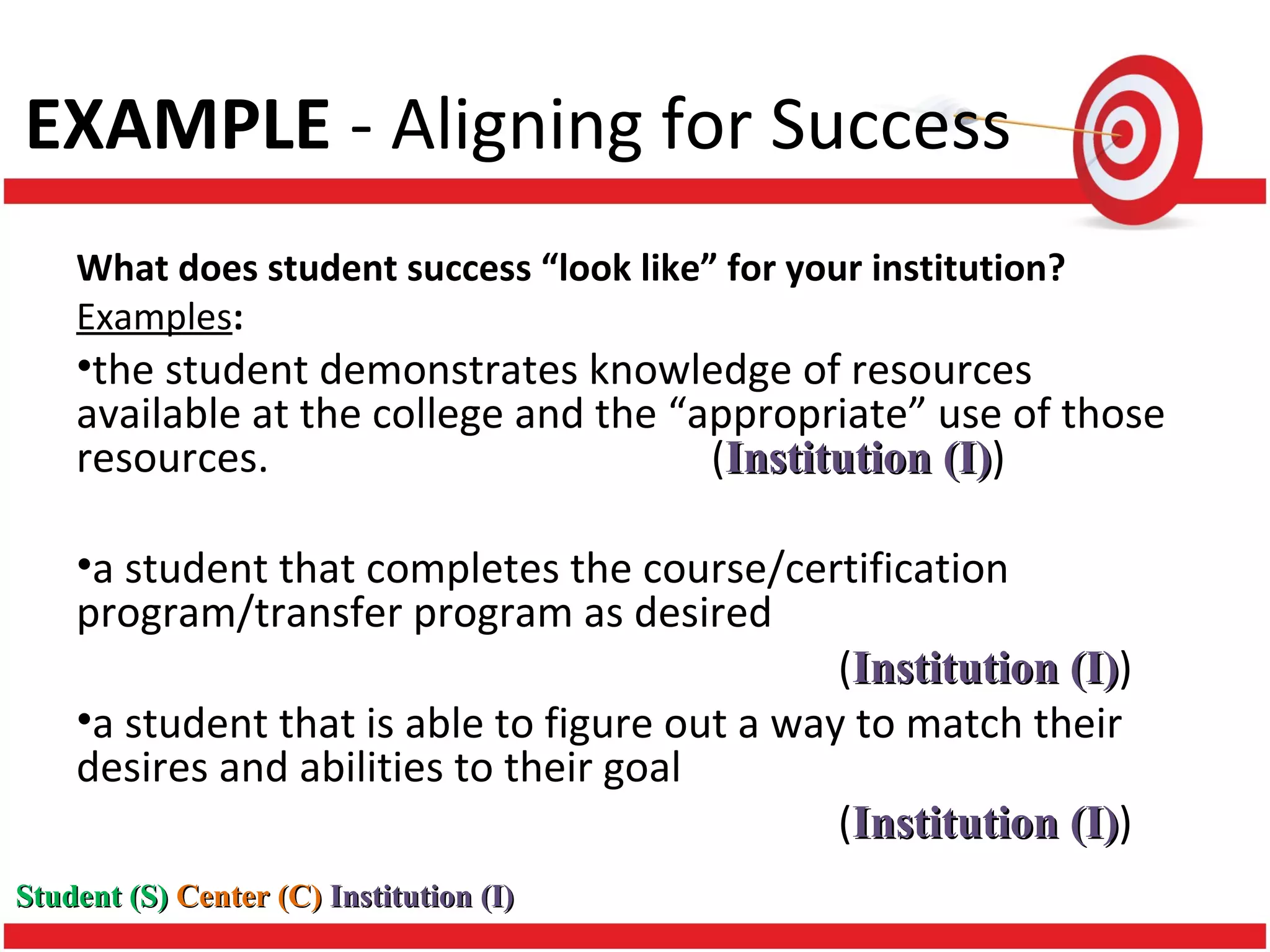

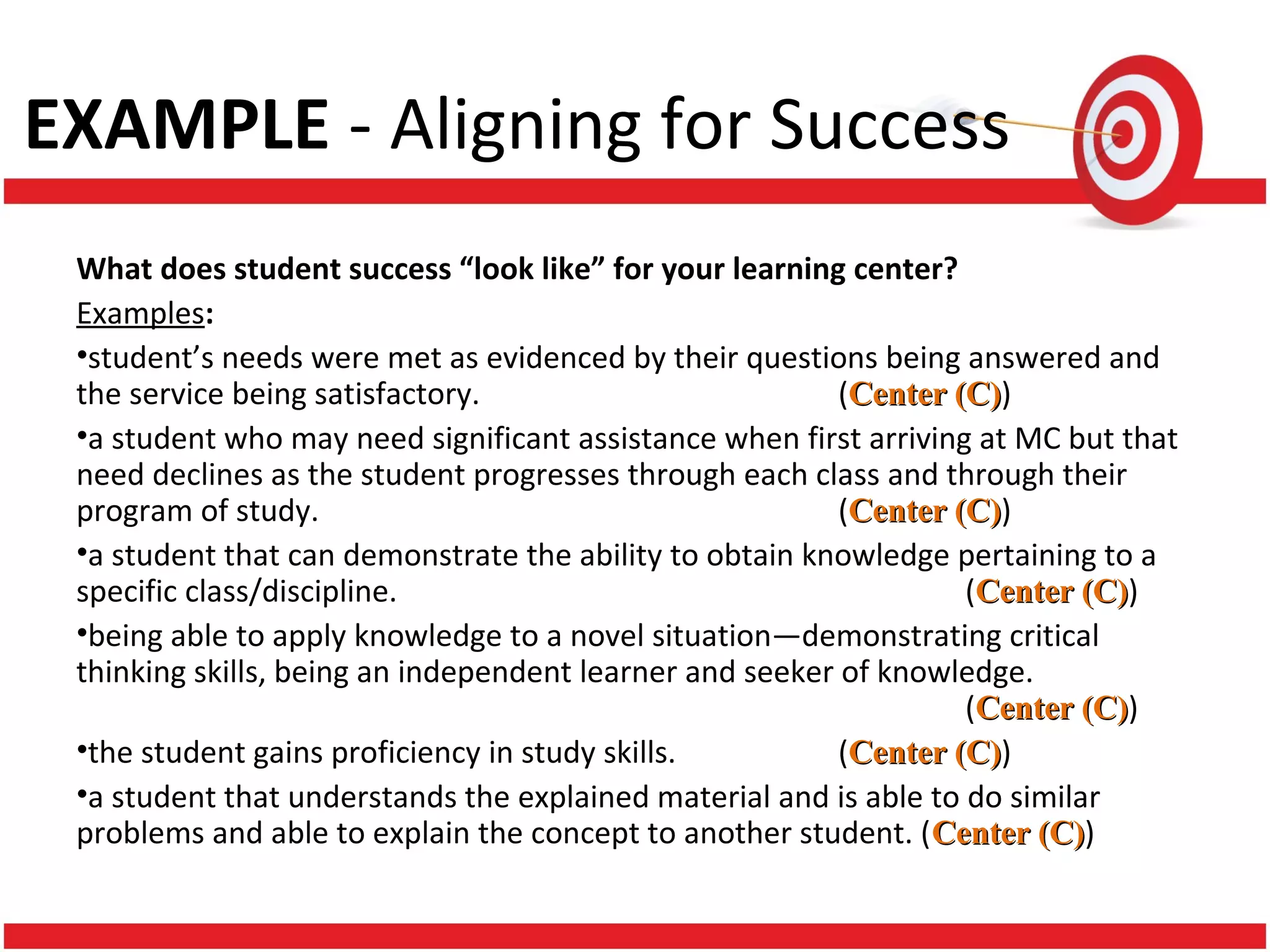

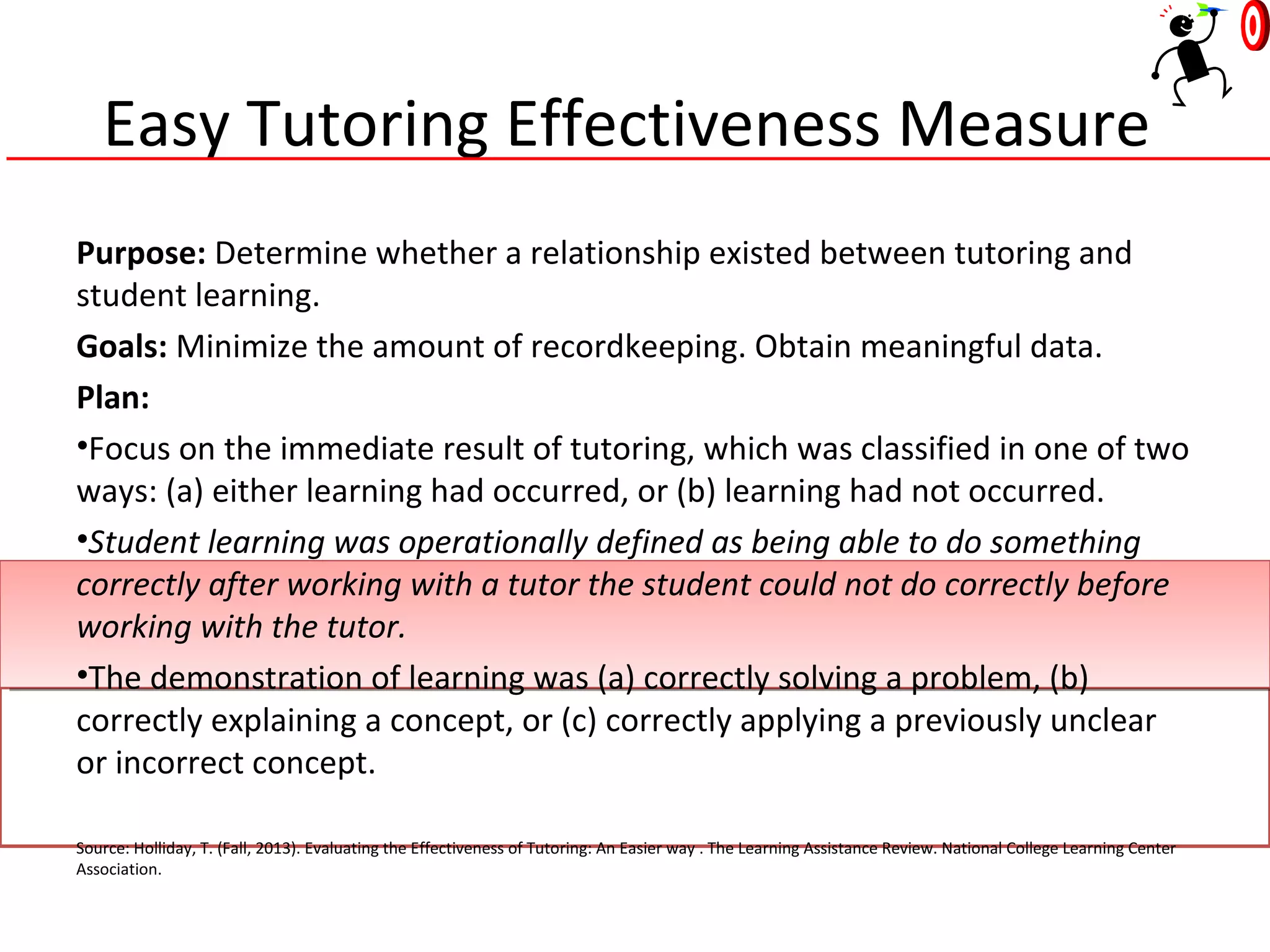

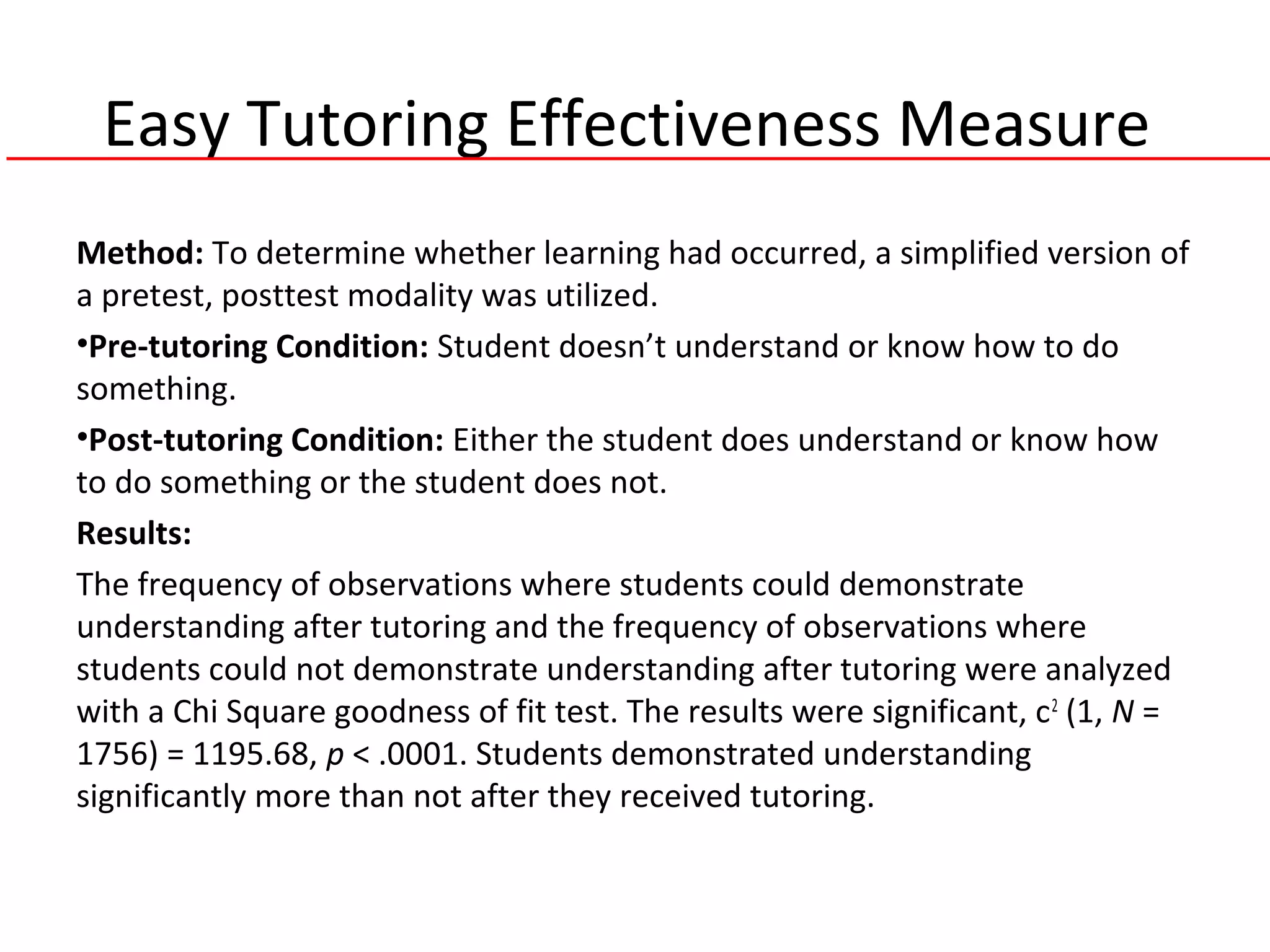

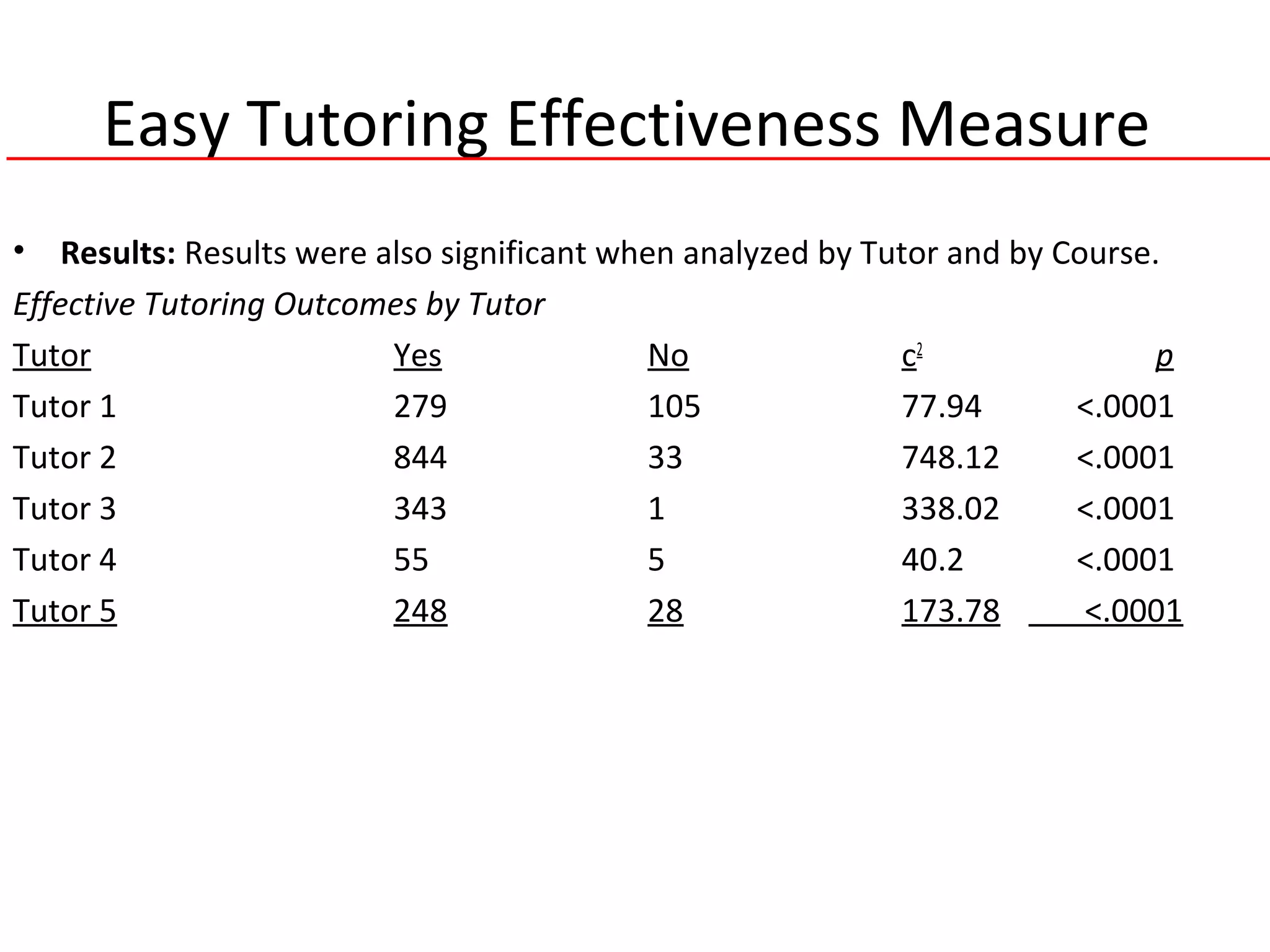

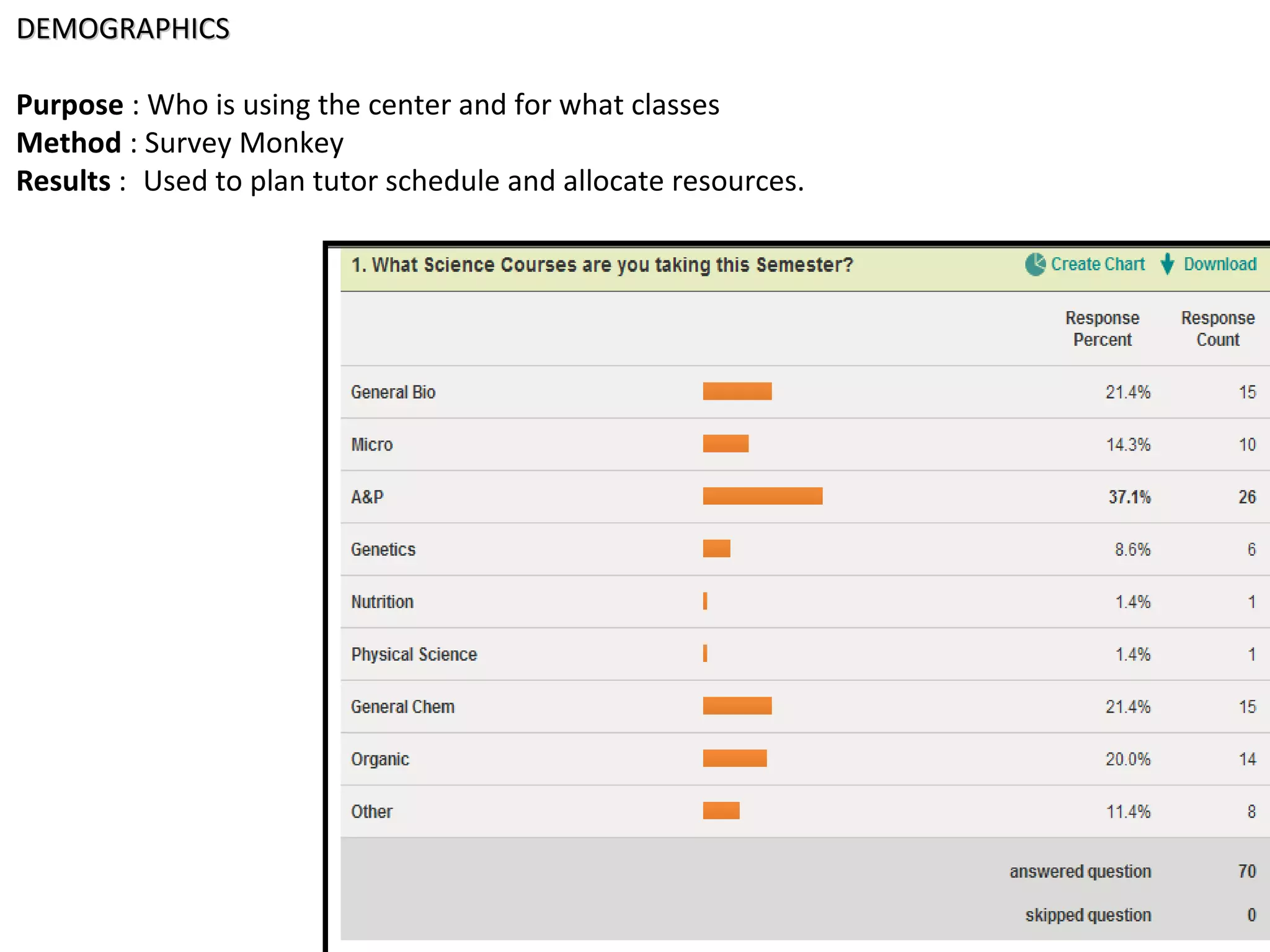

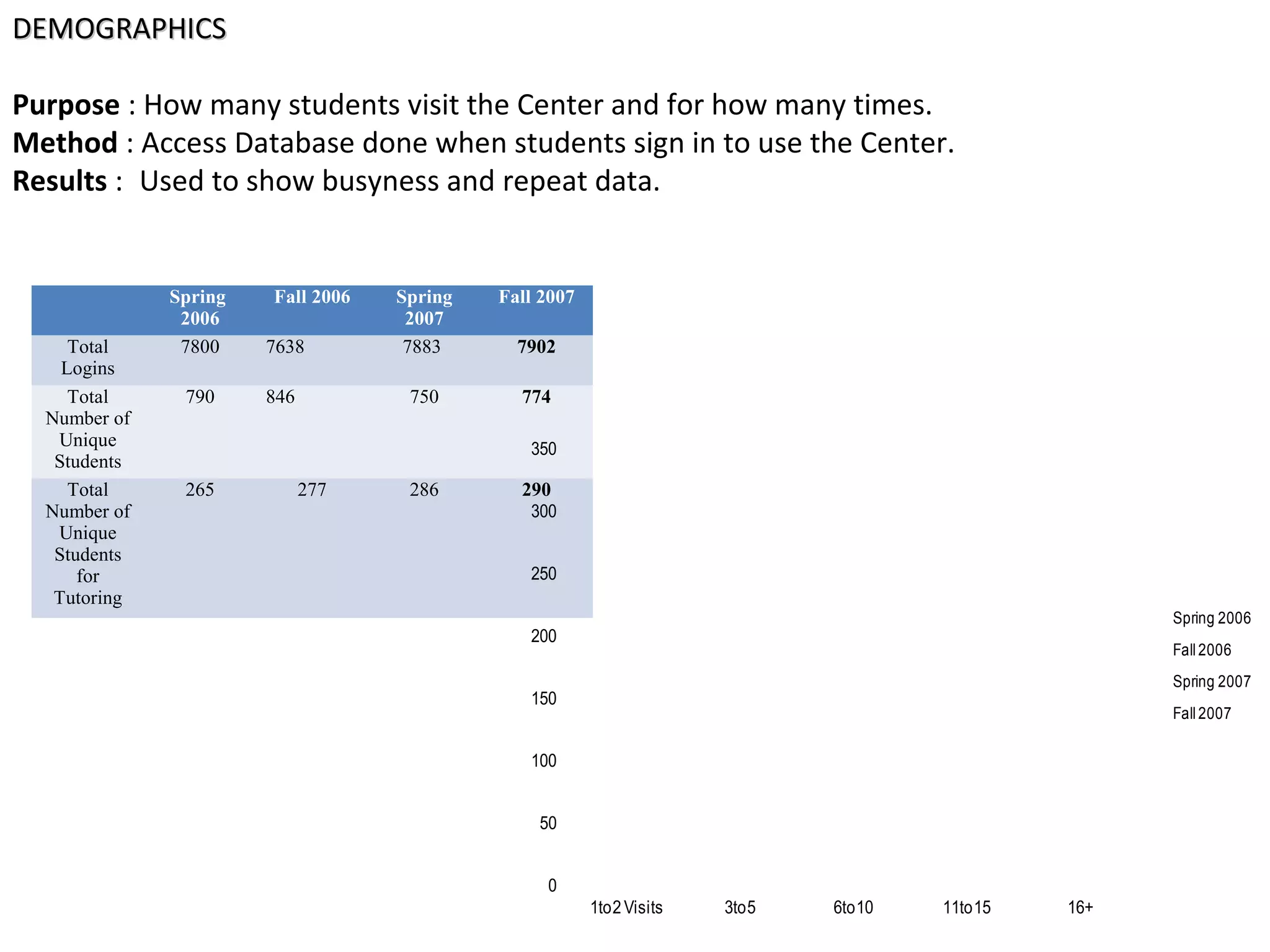

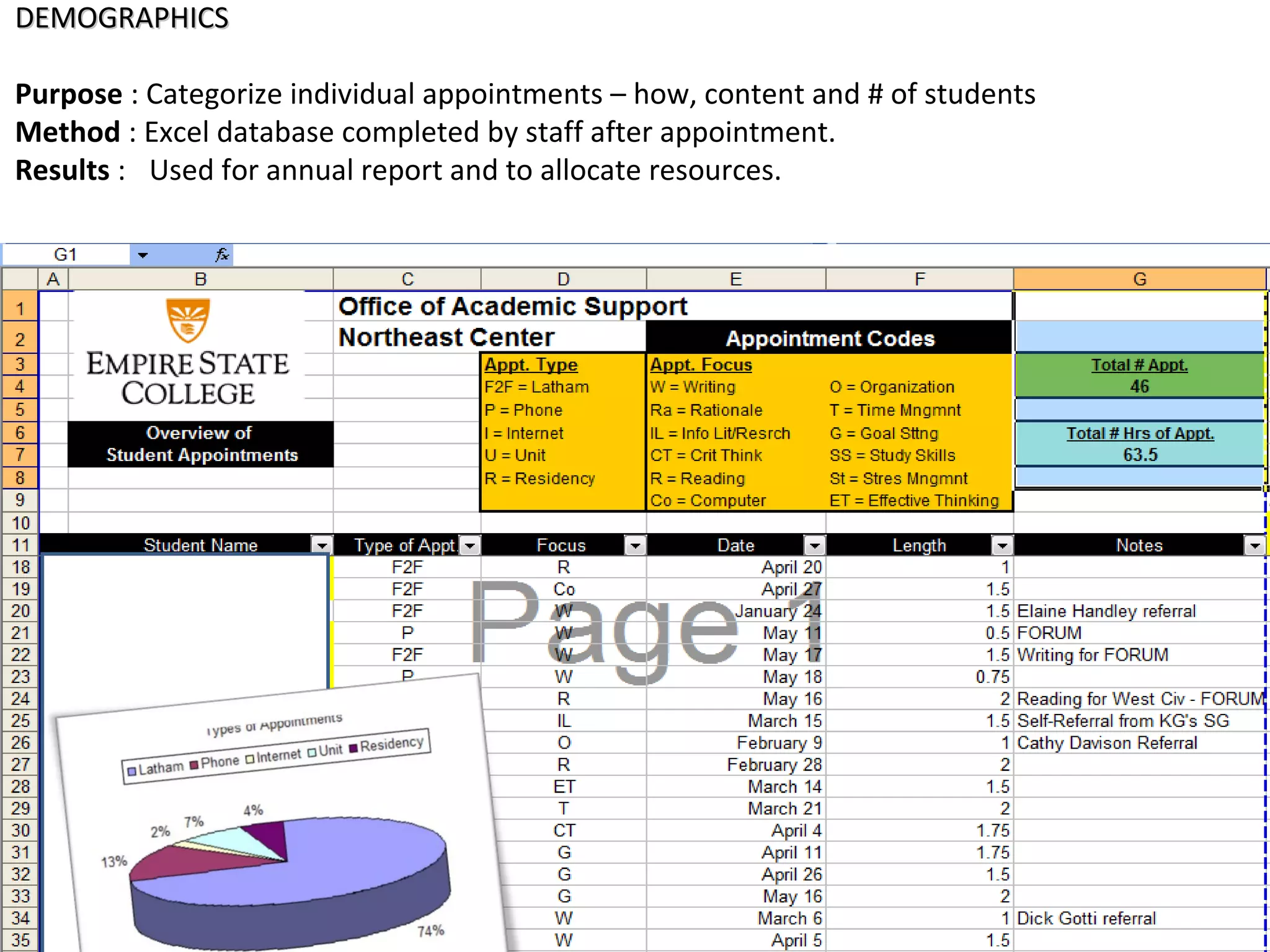

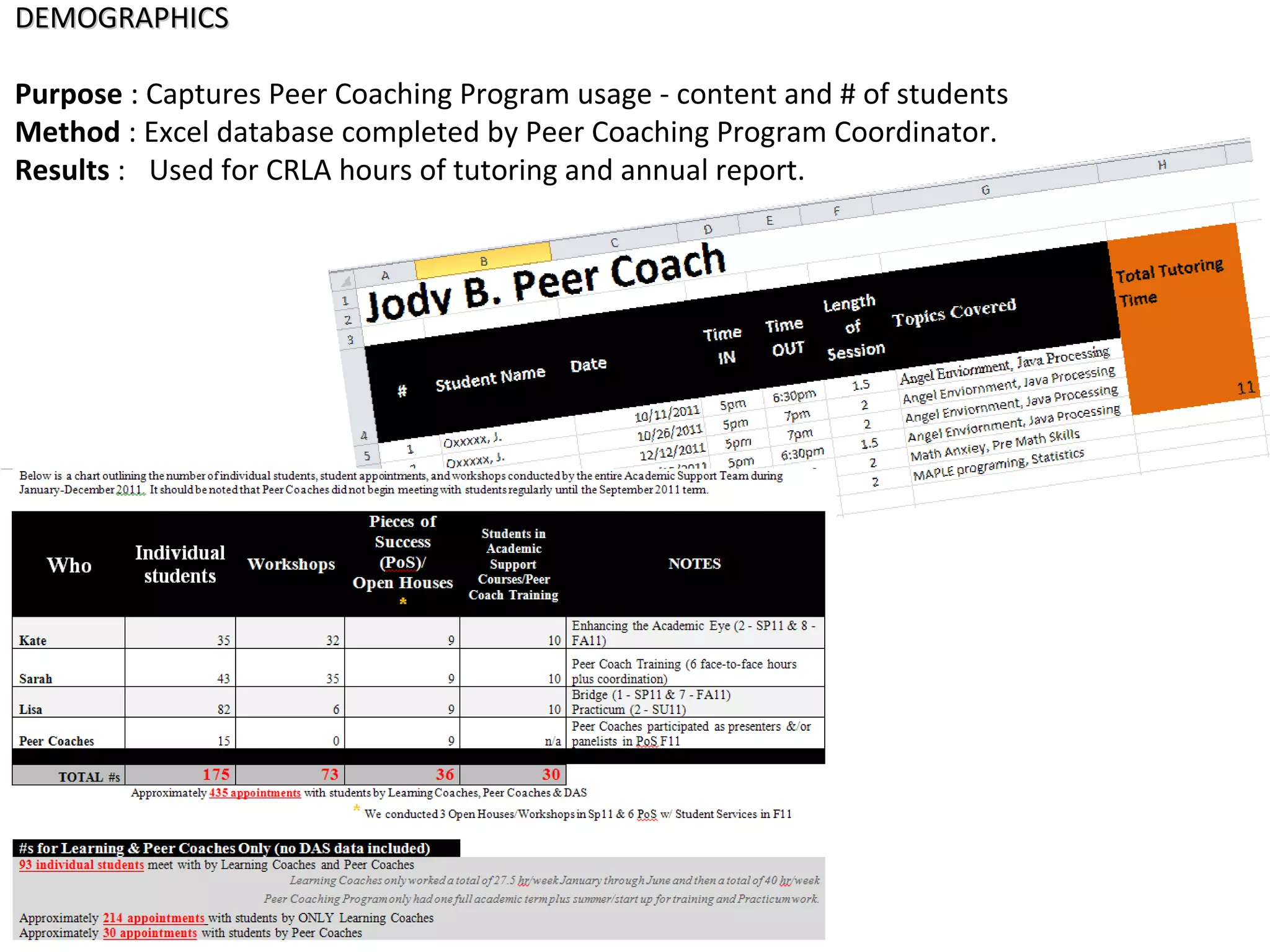

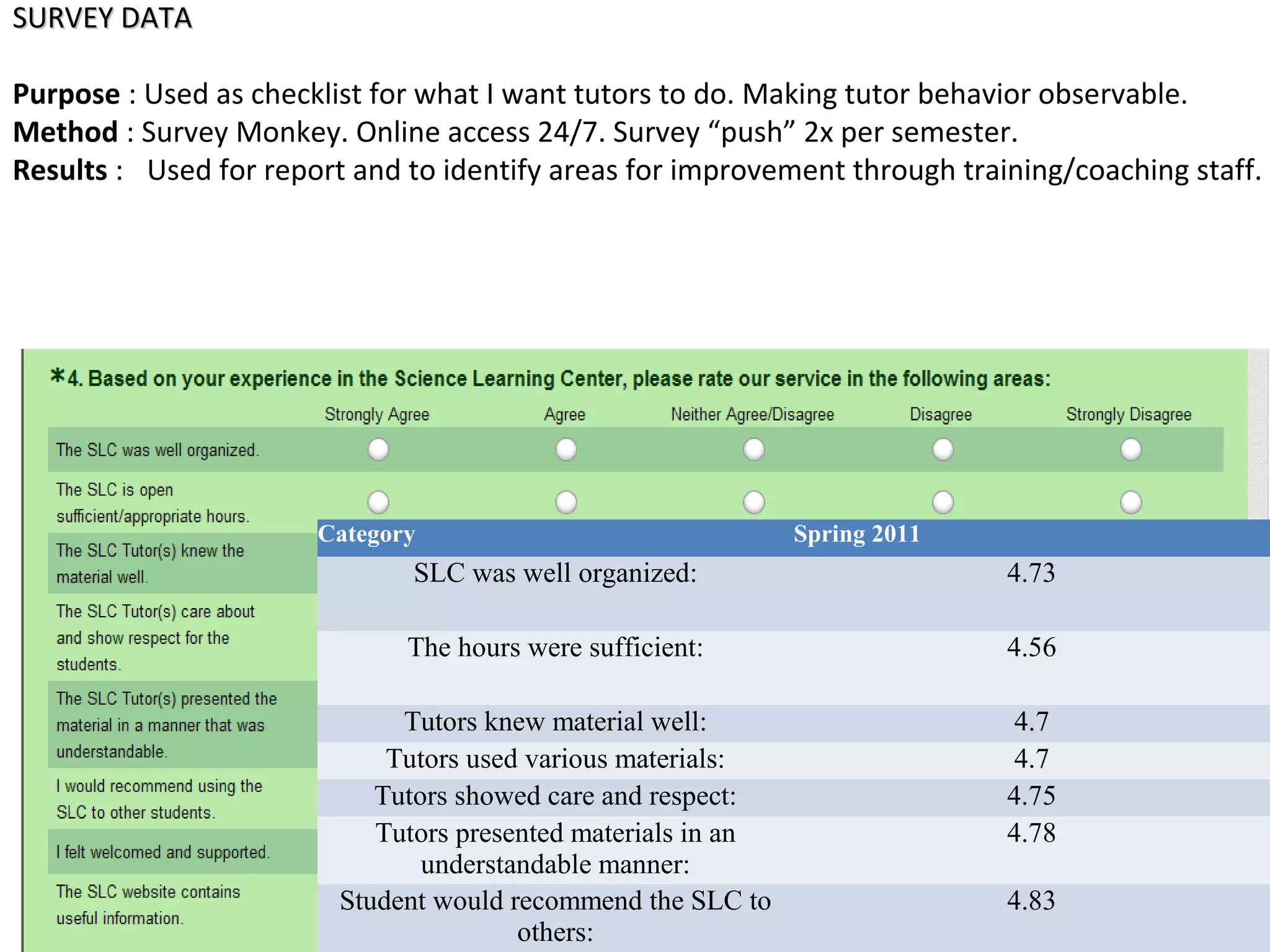

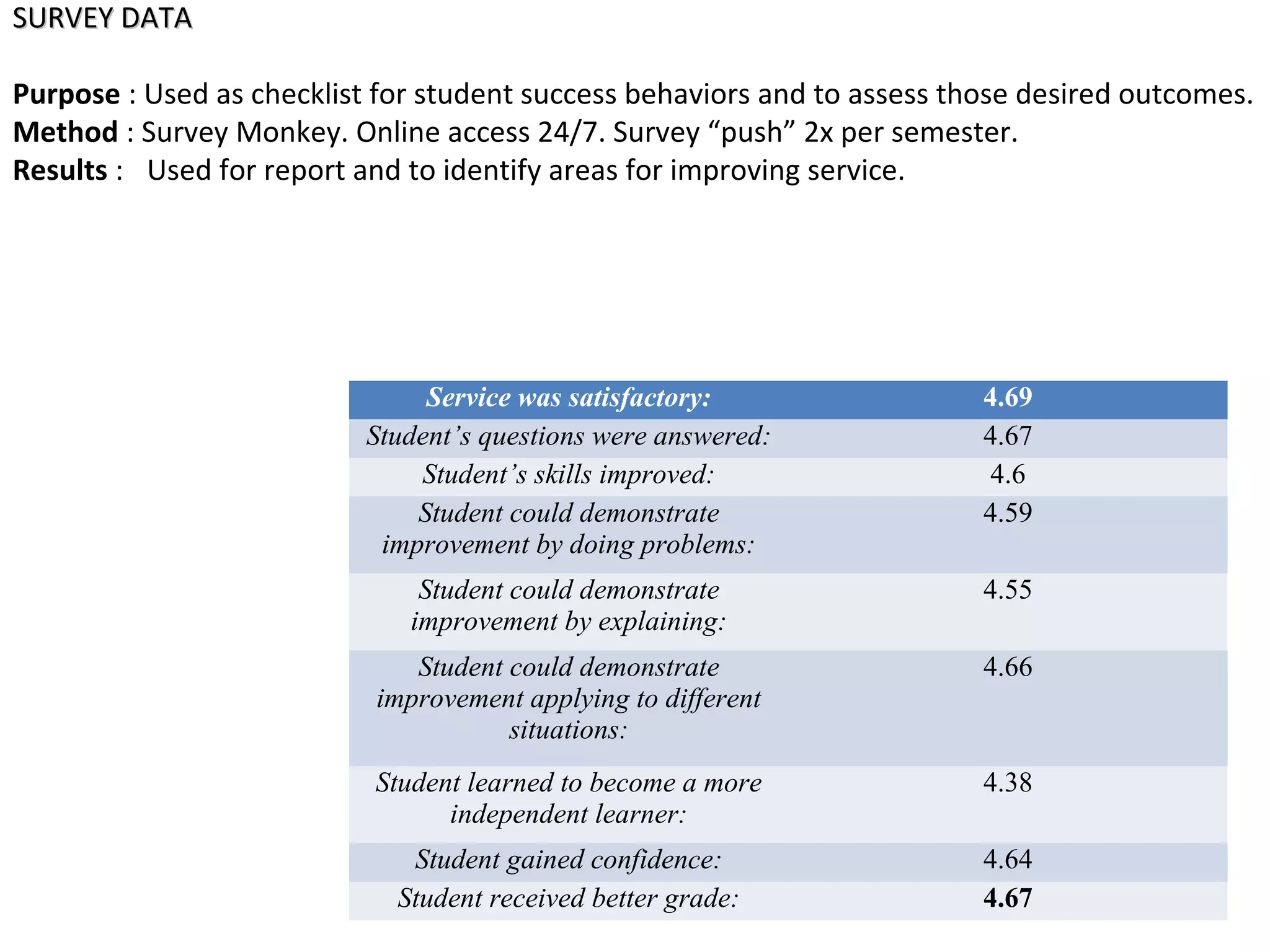

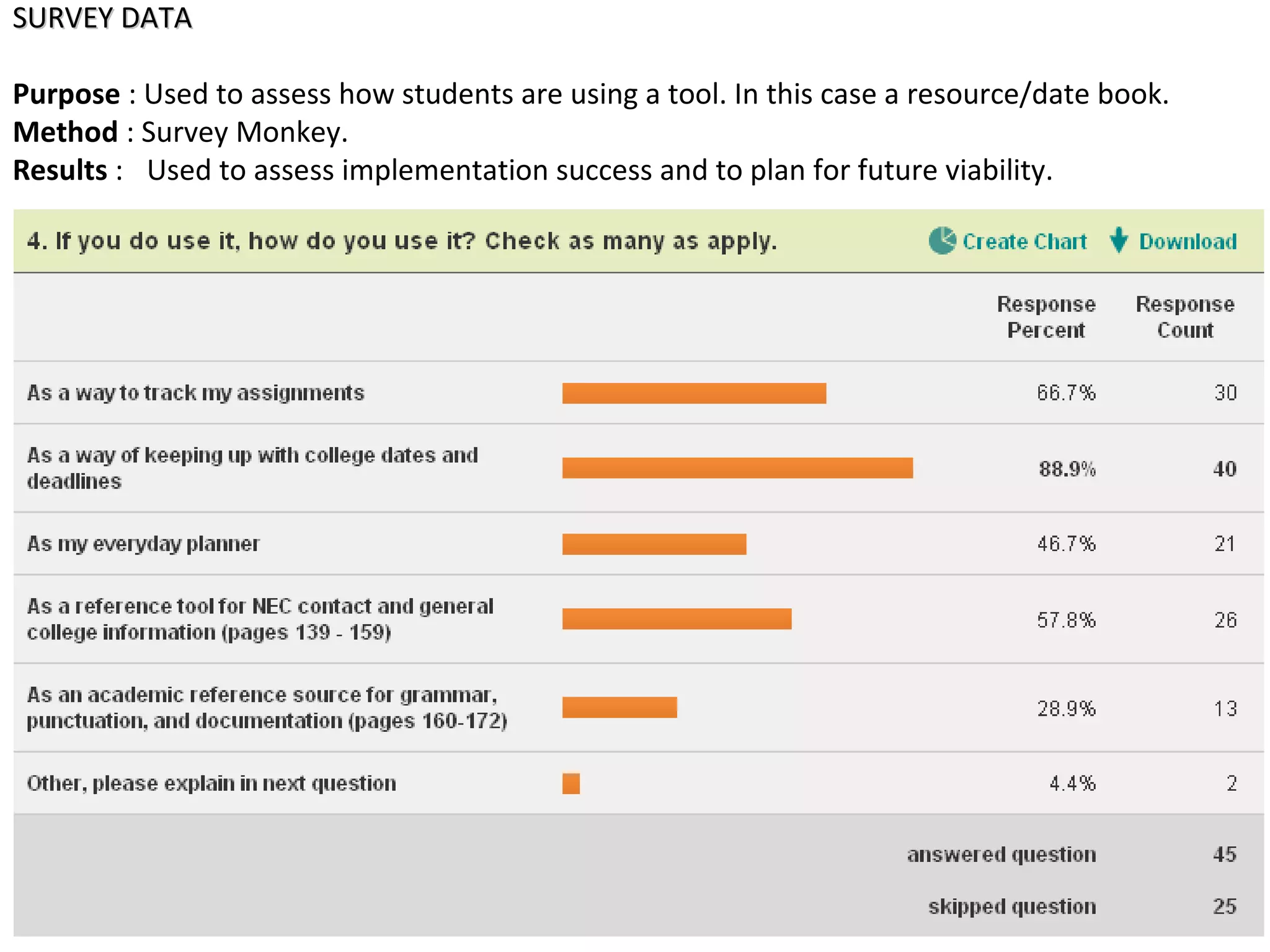

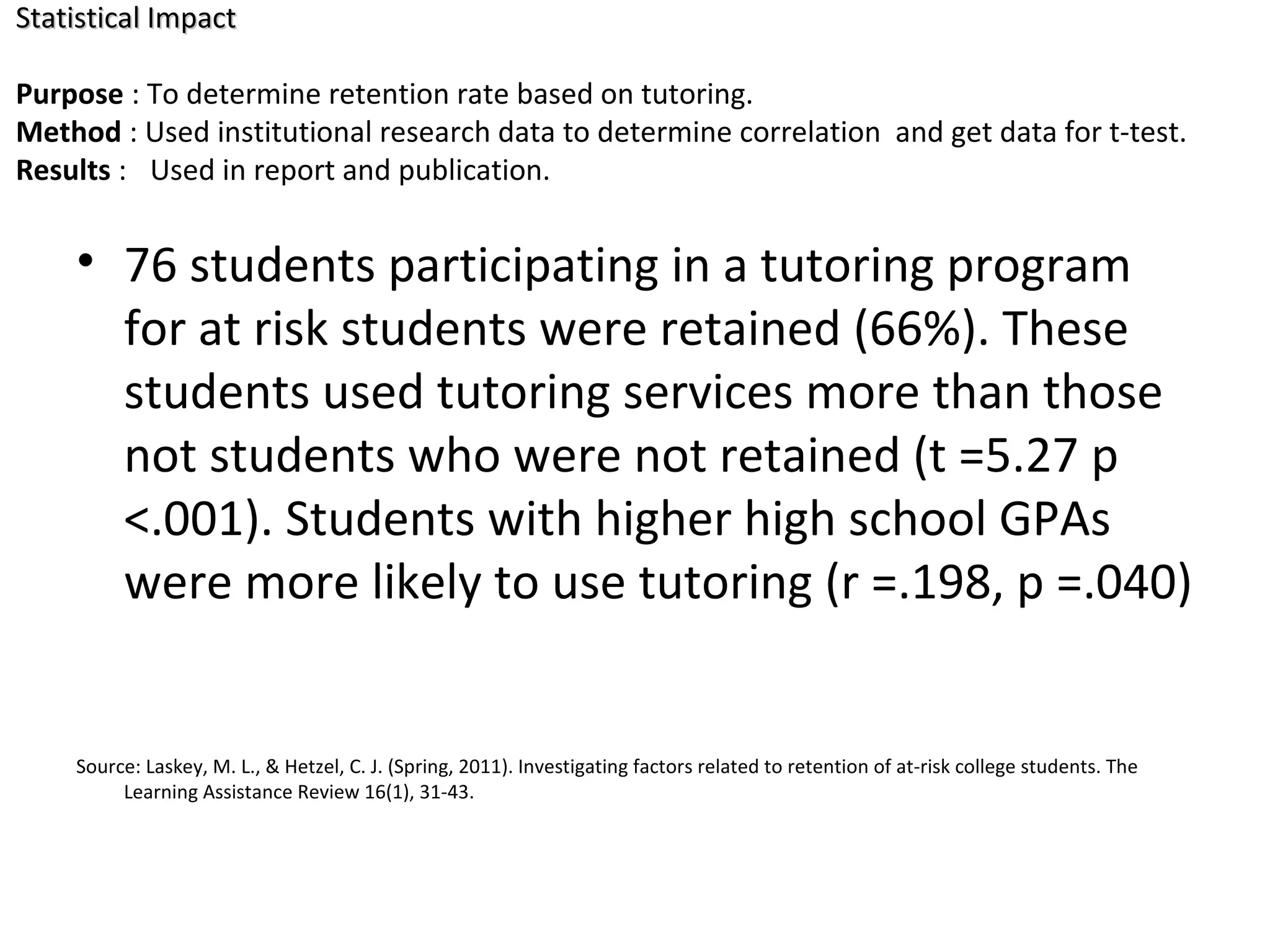

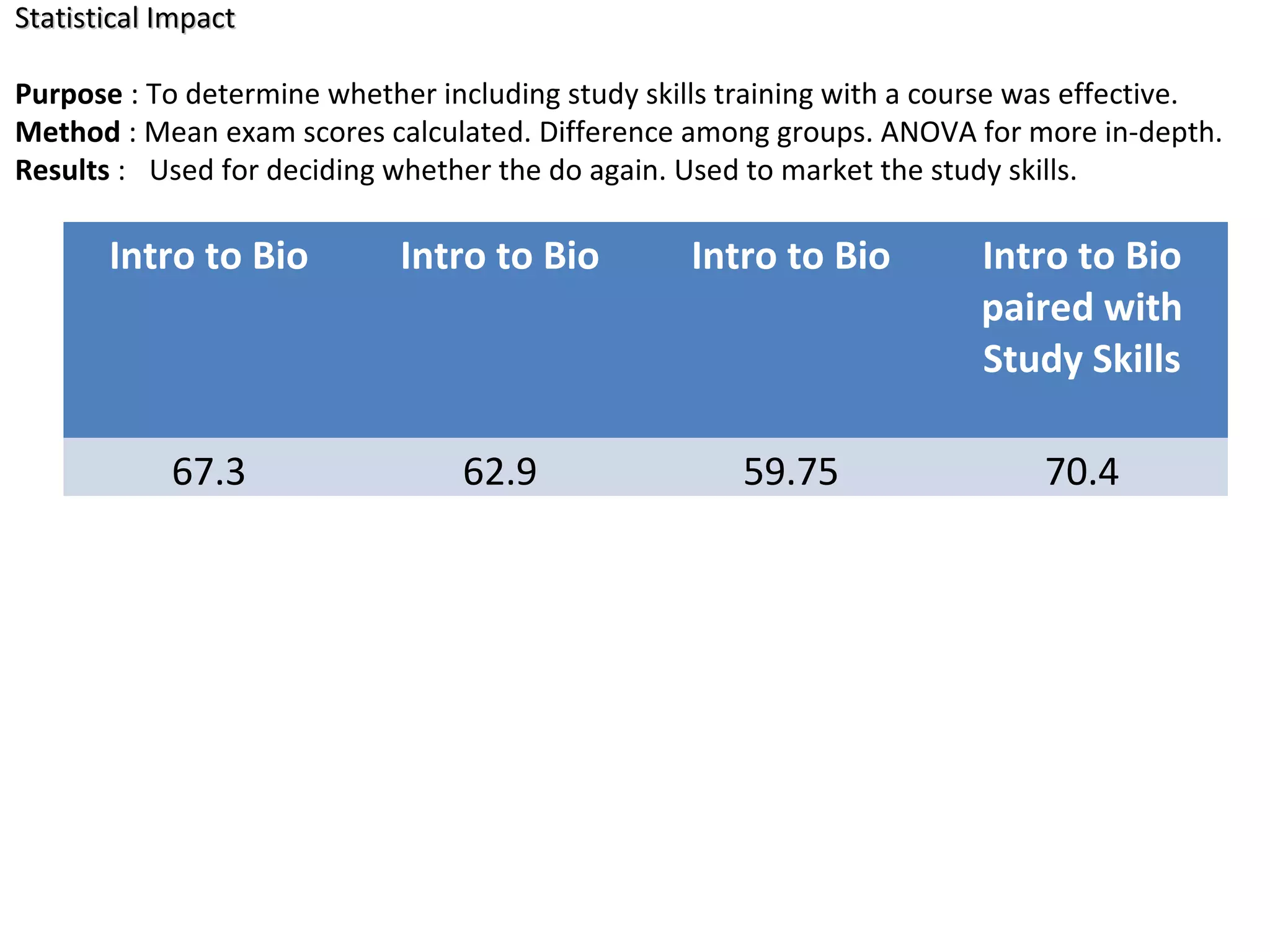

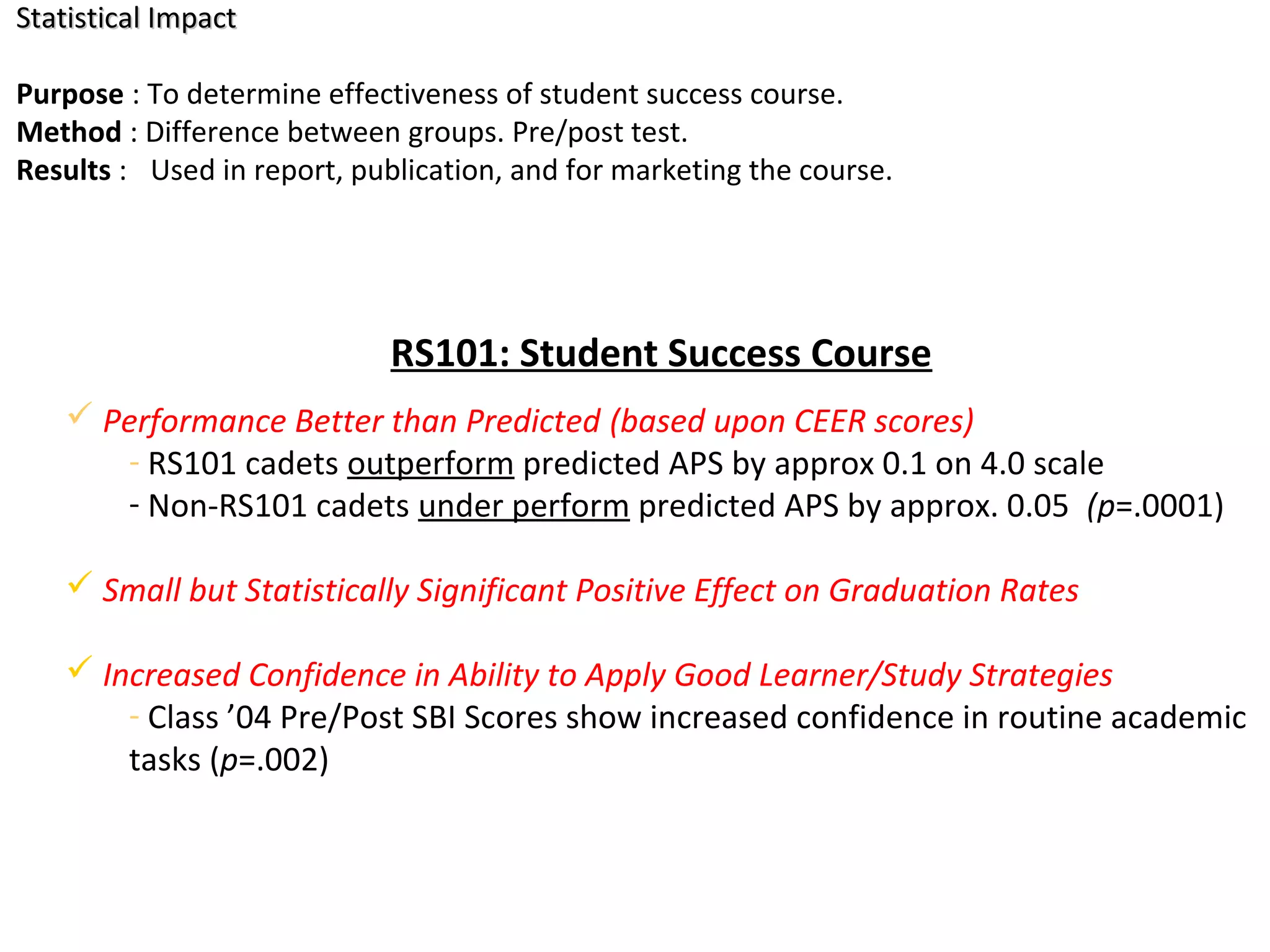

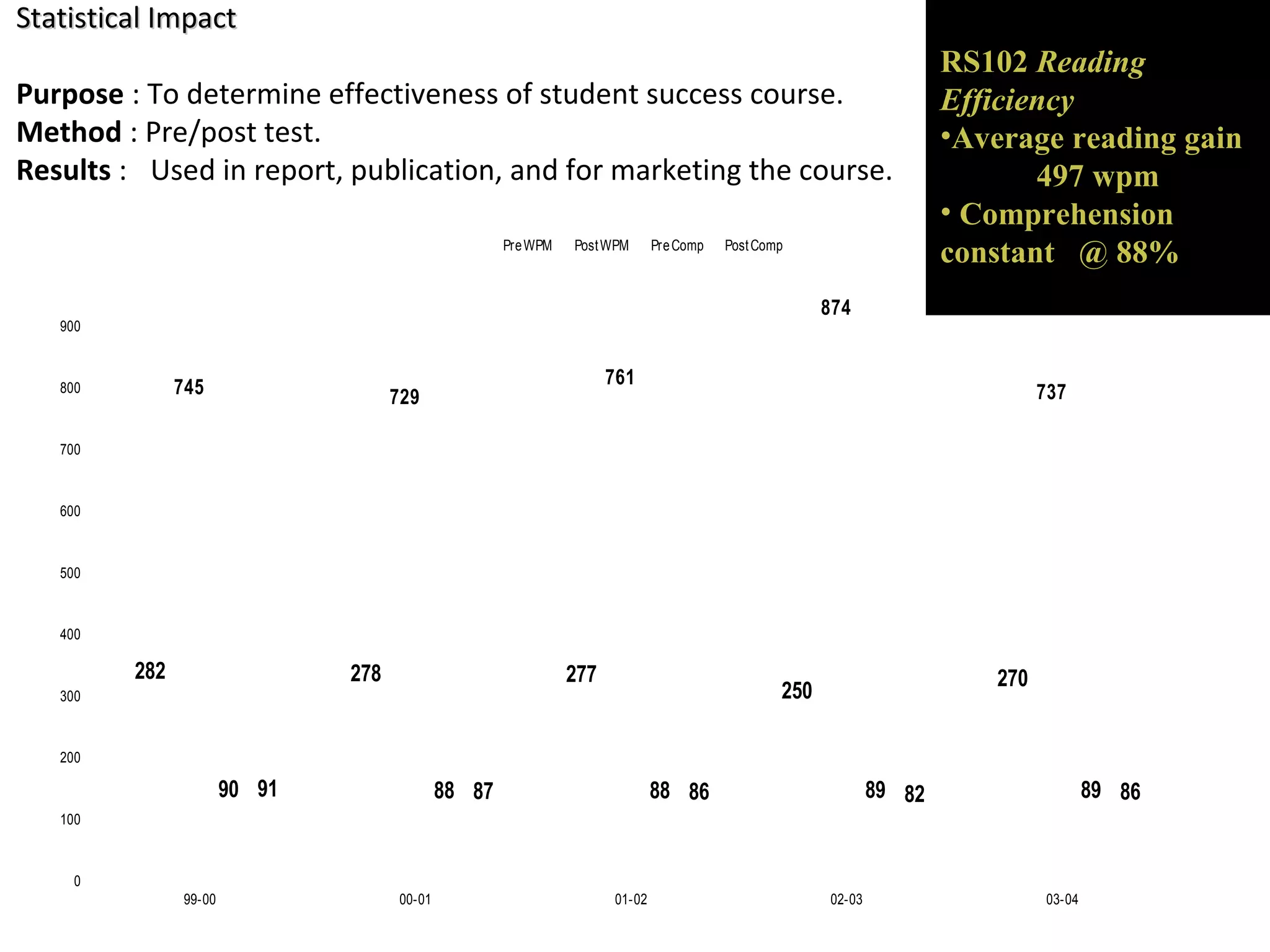

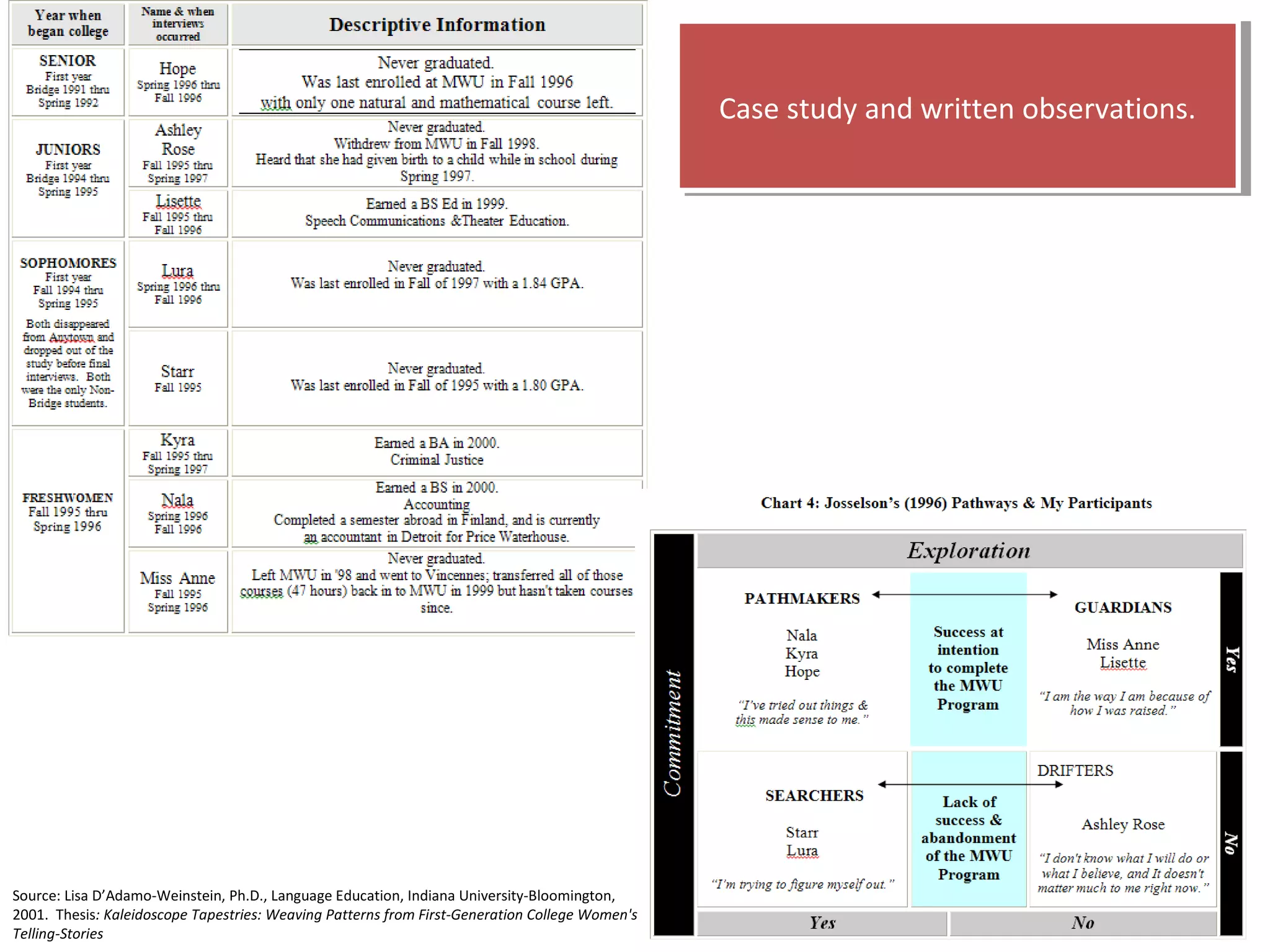

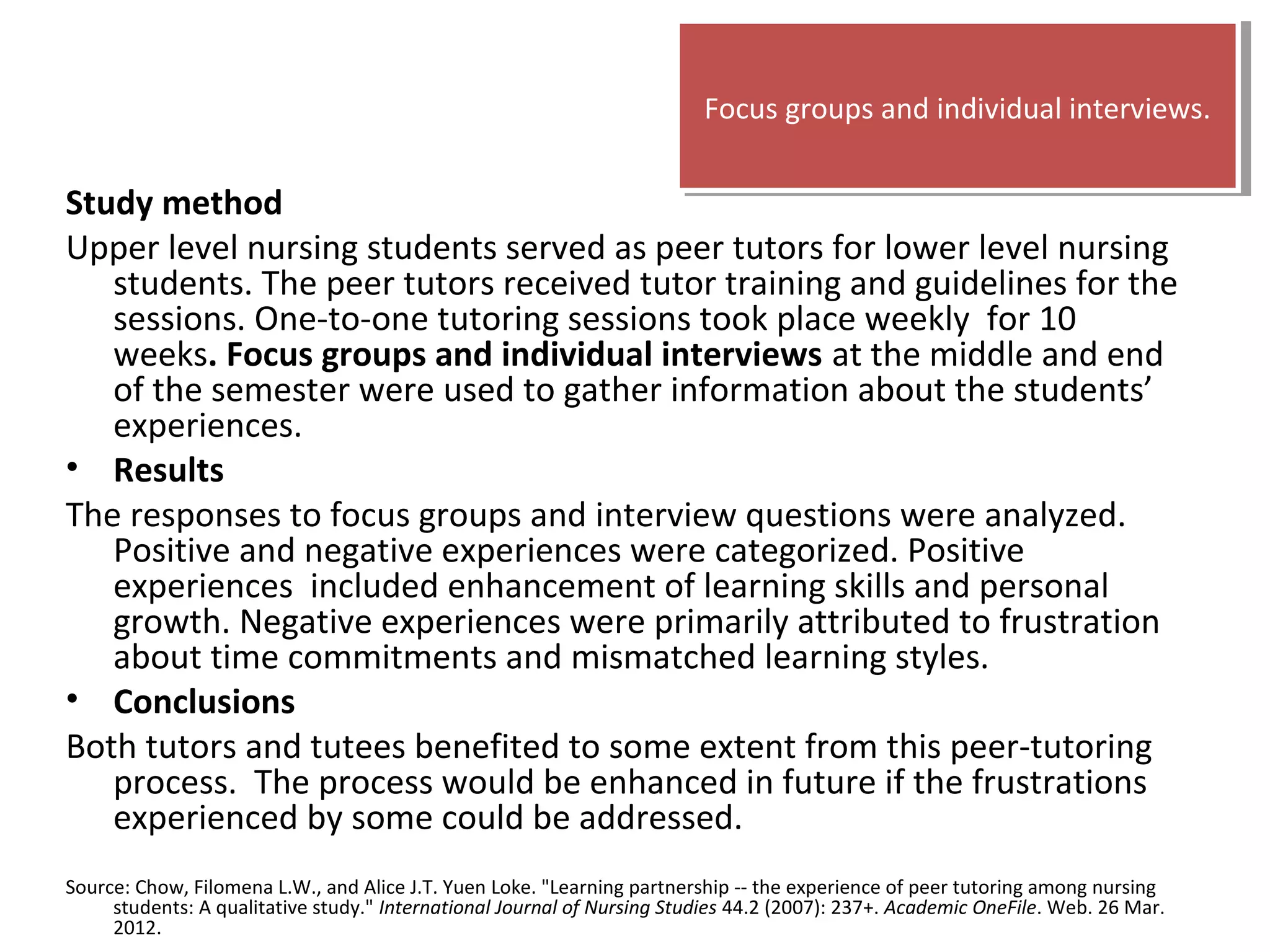

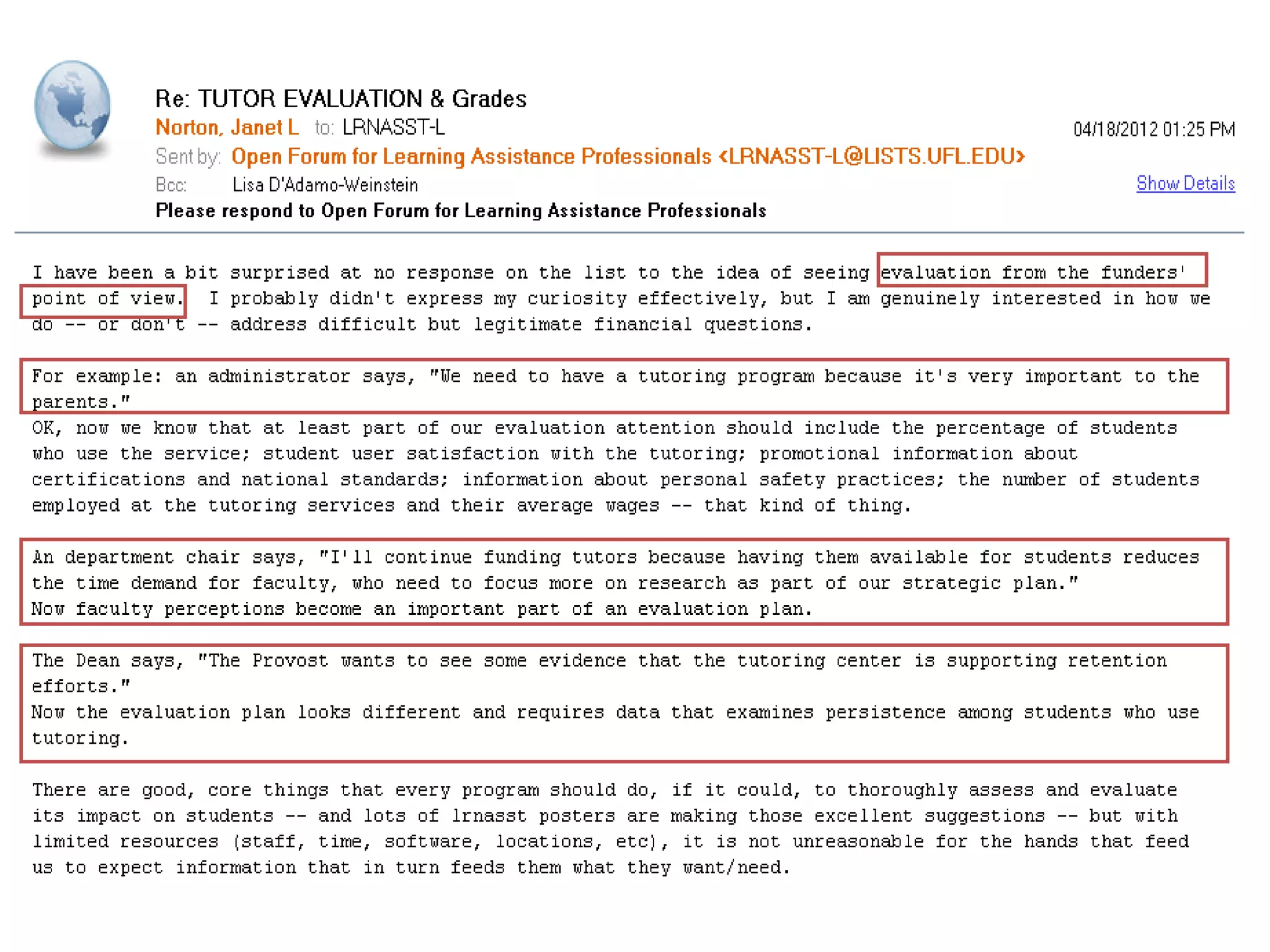

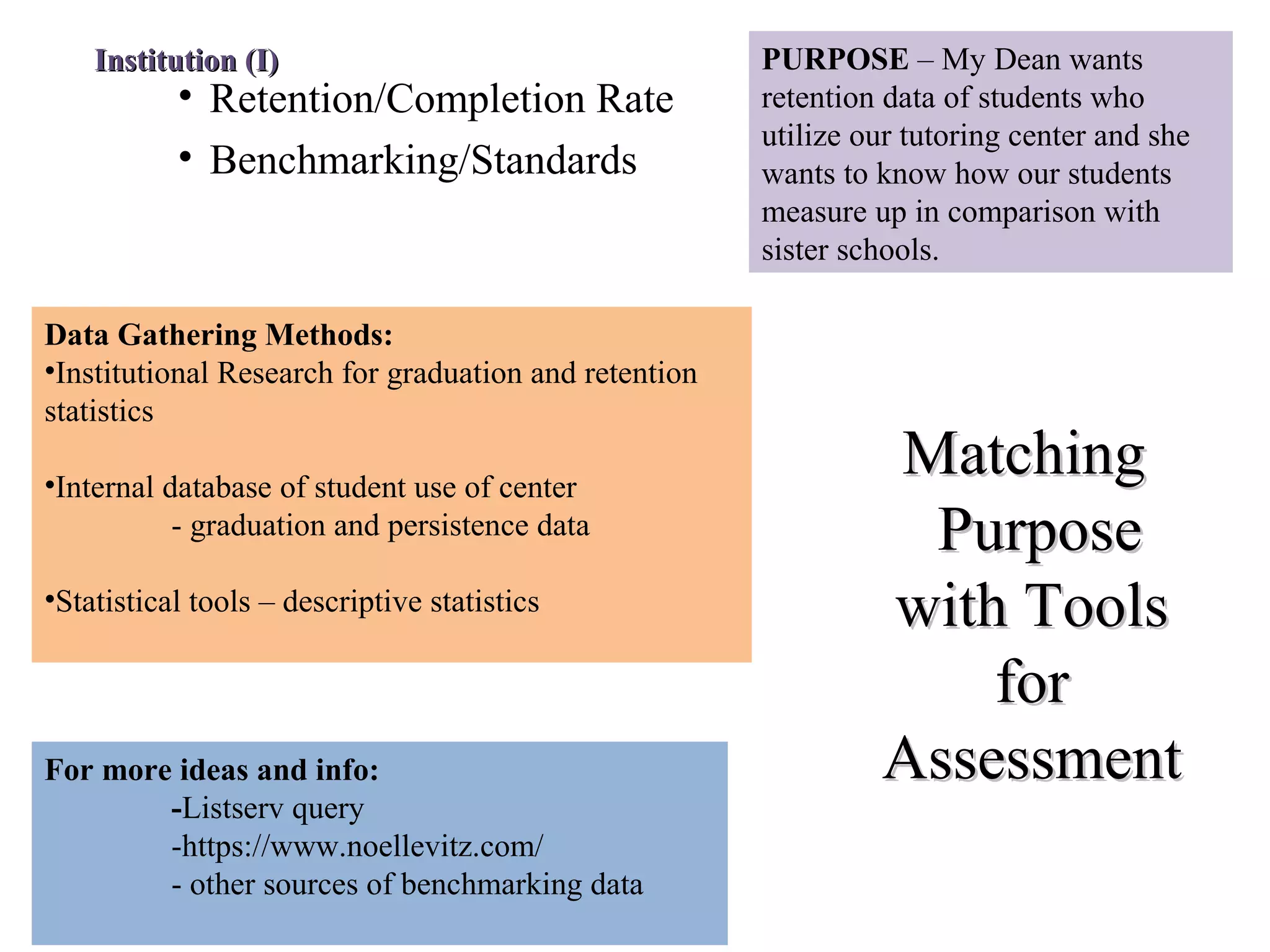

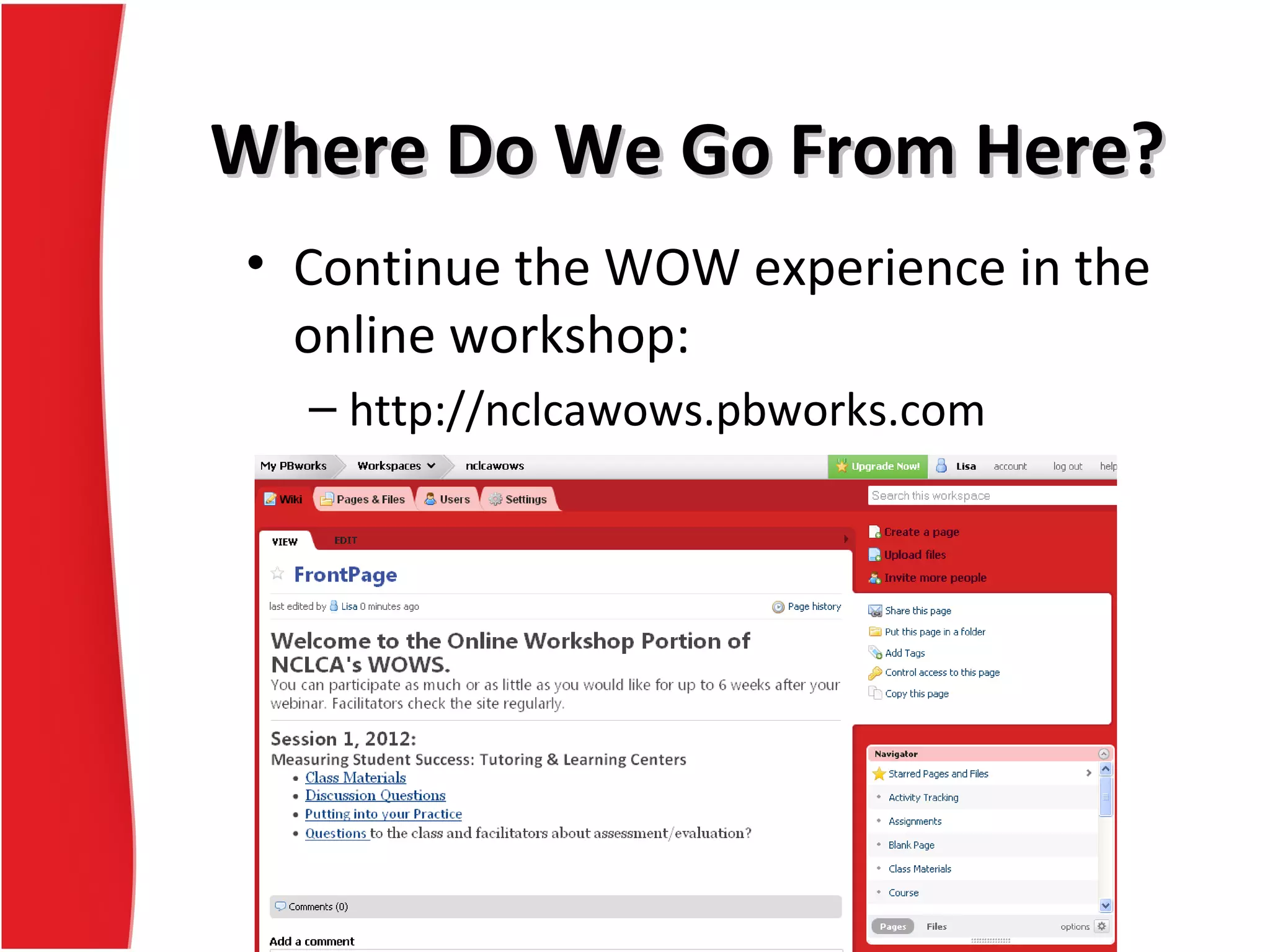

The document outlines a webinar and workshop series on assessing student success, particularly in tutoring and learning centers. It emphasizes continuous assessment processes that align educational goals with student learning outcomes, and it discusses methods for developing effective assessment plans. It presents a range of quantitative and qualitative assessment modes. It also stresses two further conditions for meaningful improvement in educational programs: aligning assessment with the institutional mission, and involving stakeholders.