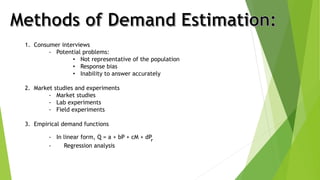

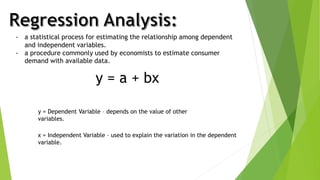

1. The document discusses demand estimation through regression analysis. Regression analysis uses empirical demand functions to estimate the relationship between a dependent variable (e.g. quantity demanded) and independent variables (e.g. price, income).

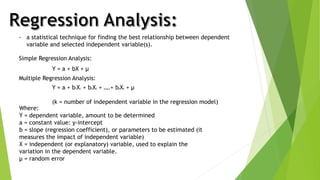

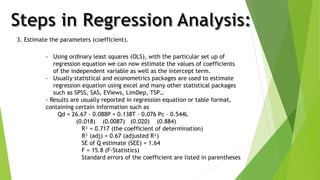

2. Simple and multiple regression analysis are explained. Simple regression uses one independent variable while multiple regression uses two or more. The Ordinary Least Squares method is commonly used to estimate coefficients.

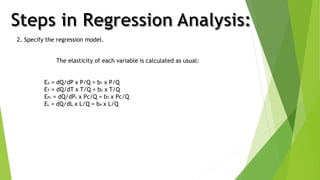

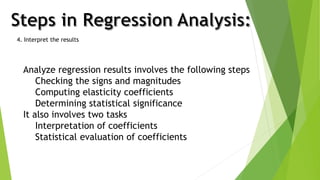

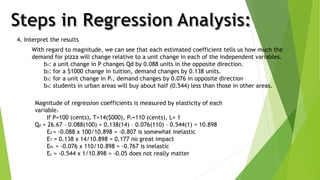

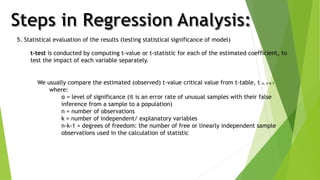

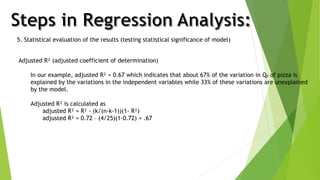

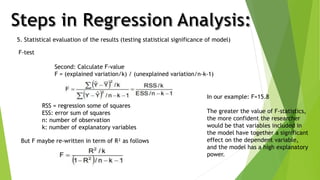

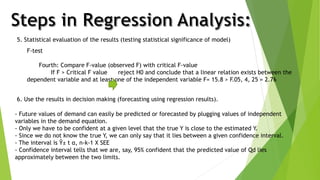

3. The key steps in regression analysis are: specifying the model, estimating coefficients, interpreting results through statistical tests of significance, and using results for decision making like forecasting.