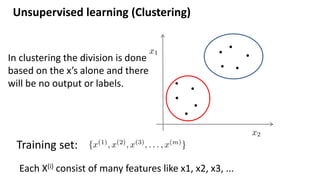

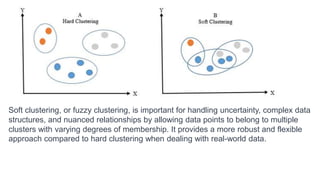

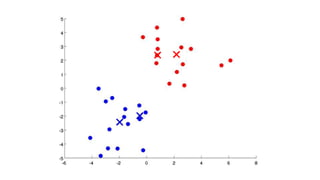

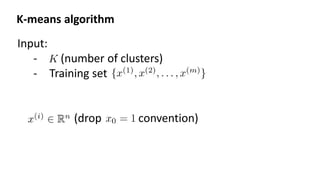

The document discusses clustering as a form of unsupervised learning, contrasting it with supervised learning, which relies on labeled data. It outlines several clustering methods, including k-means, hierarchical clustering, and DBSCAN, and covers hard versus soft clustering, distance metrics, and how to choose the number of clusters. It also presents real-world applications such as customer segmentation and weighs the pros and cons of k-means.
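To make k-means, hard clustering, and distance metrics concrete, here is a minimal pure-Python sketch of Lloyd's-style k-means. It is not the implementation from the slides. It assumes Euclidean distance and random initialization from the data points, and the function names `kmeans`, `euclidean`, and `inertia` are hypothetical choices for illustration.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean (L2) distance, assumed here as the distance metric."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def kmeans(points: List[Point], k: int, max_iter: int = 100, seed: int = 0):
    """Hypothetical helper: Lloyd's-style k-means with hard assignments.

    Returns (centroids, labels), where labels[i] is the cluster index of points[i].
    """
    rng = random.Random(seed)
    # Assumption: initialize by picking k distinct data points at random.
    centroids = [tuple(p) for p in rng.sample(points, k)]
    labels = None

    def nearest(p: Point) -> int:
        # Hard clustering: each point belongs to exactly one cluster.
        return min(range(k), key=lambda c: euclidean(p, centroids[c]))

    for _ in range(max_iter):
        # Assignment step: attach every point to its closest centroid.
        new_labels = [nearest(p) for p in points]
        if new_labels == labels:
            break  # assignments did not change, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, labels


def inertia(points: List[Point], centroids, labels) -> float:
    """Within-cluster sum of squared distances (lower means tighter clusters)."""
    return sum(euclidean(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))
```

Running `kmeans` for several values of k and plotting `inertia` against k is one way to apply the elbow heuristic for choosing the number of clusters. Because the result depends on the initial centroids, practical implementations usually restart from several seeds and keep the run with the lowest inertia.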