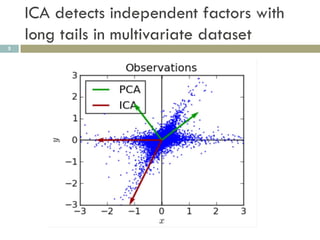

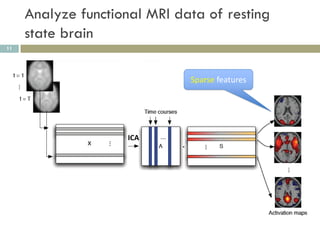

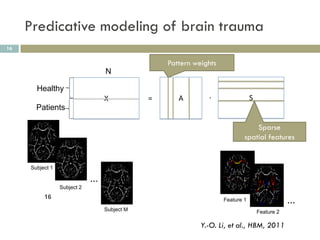

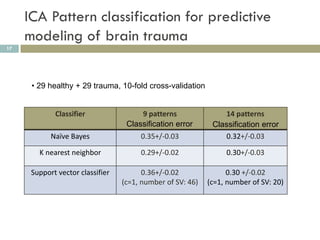

1) Independent component analysis (ICA) can extract sparse, independent features from medical imaging data to build predictive models of conditions such as brain trauma.

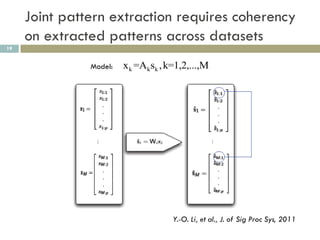

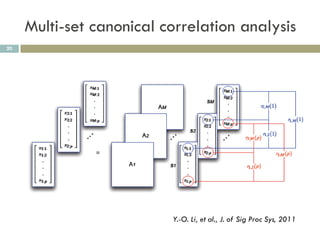

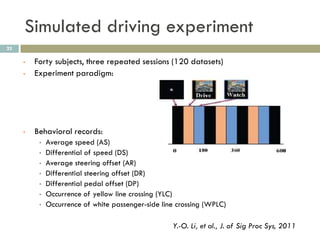

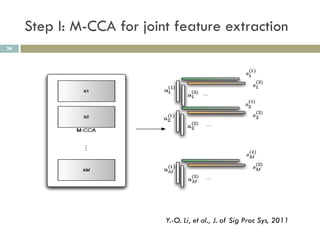

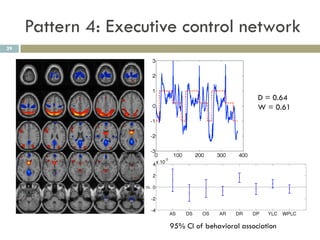

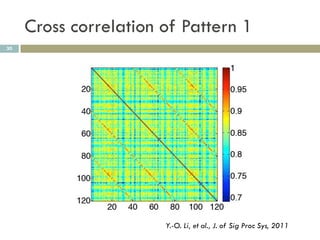

2) Multi-set canonical correlation analysis (MCCA) identifies joint patterns across multiple datasets, such as functional MRI scans from a simulated driving experiment.

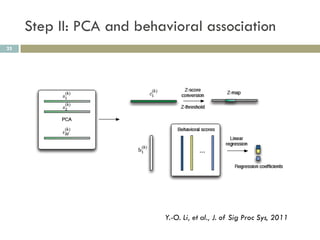

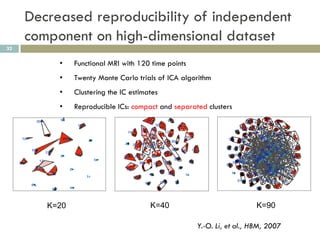

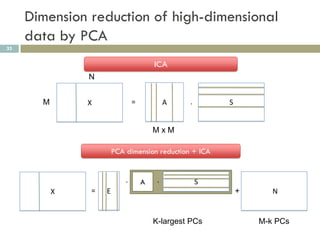

3) Dimension reduction with principal component analysis (PCA) improves ICA by mitigating the problems of high dimensionality, which makes the extracted patterns more reproducible.

![ICA by maximum likelihood estimation

8

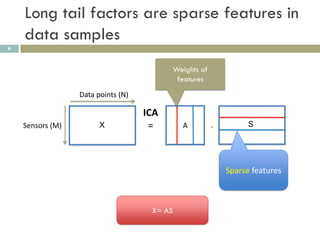

Transformation of multivariate random variable: x = As

p(s 1, s2 , ... , sM )

p(x 1,x 2 , ... , x M ) (1)

det(A)

Statistical independence condition of s:

p(s 1, s2 , ... , sM ) i 1 p(si )

M

(2)

Log likelihood function of x with parameter A:

log p(x 1,x 2 ,...x M ) log p([A x] i ) log det(A)

-1

i](https://image.slidesharecdn.com/machinelearningformedicalimagingdata-120311012226-phpapp01/85/Machine-learning-for-medical-imaging-data-8-320.jpg)
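As a rough illustration of the log-likelihood on this slide, the sketch below evaluates it for a 2 x 2 mixing matrix in plain Python. The source density p(s) = 1/(π cosh s), the restriction to two dimensions, and the function names are assumptions made for the example; the slides do not specify them.

```python
import math


def log_source_density(s):
    """log p(s) for the assumed heavy-tailed prior p(s) = 1 / (pi * cosh(s))."""
    # Numerically stable log(cosh(s)) that avoids overflow for large |s|.
    log_cosh = abs(s) + math.log1p(math.exp(-2.0 * abs(s))) - math.log(2.0)
    return -math.log(math.pi) - log_cosh


def ica_log_likelihood(A, samples):
    """Sum over samples of  sum_i log p([A^{-1} x]_i) - log|det(A)|  (2 x 2 case)."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("mixing matrix A must be invertible")
    total = 0.0
    for x1, x2 in samples:
        # Recover the sources s = A^{-1} x with the closed-form 2 x 2 inverse.
        s1 = (d * x1 - b * x2) / det
        s2 = (-c * x1 + a * x2) / det
        total += log_source_density(s1) + log_source_density(s2)
        total -= math.log(abs(det))
    return total


# Hypothetical observations, only to show the call.
observations = [(0.5, -1.2), (2.0, 0.3), (-0.7, 0.9)]
identity_ll = ica_log_likelihood([[1.0, 0.0], [0.0, 1.0]], observations)
scaled_ll = ica_log_likelihood([[2.0, 0.0], [0.0, 2.0]], observations)
```

A maximum likelihood ICA estimate would be the matrix A that maximizes this quantity over the data; the optimizer itself is left out of the sketch.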

![Multi-set canonical correlation analysis](https://image.slidesharecdn.com/machinelearningformedicalimagingdata-120311012226-phpapp01/85/Machine-learning-for-medical-imaging-data-21-320.jpg)

Correlation matrix of $[S_1, S_2, \ldots, S_M]$

Y.-O. Li, et al., J. of Sig Proc Sys, 2011
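To make the correlation matrix on this slide concrete, the sketch below builds the M x M matrix of pairwise Pearson correlations between one canonical variate per dataset, then scores it with the sum of squared correlations. That score is one of several objectives used in the MCCA literature; which one the slides use is not stated here, and the function names and toy data are assumptions for illustration.

```python
import math


def pearson(u, v):
    """Pearson correlation between two equal-length sequences."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    cov = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    norm_u = math.sqrt(sum((a - mean_u) ** 2 for a in u))
    norm_v = math.sqrt(sum((b - mean_v) ** 2 for b in v))
    return cov / (norm_u * norm_v)


def correlation_matrix(variates):
    """M x M matrix of correlations between the variates S_1 ... S_M."""
    m = len(variates)
    return [[pearson(variates[i], variates[j]) for j in range(m)] for i in range(m)]


def sum_of_squared_correlations(corr):
    """Sum of squared entries: larger means stronger overall cross-dataset agreement."""
    return sum(r * r for row in corr for r in row)


# Toy variates from three hypothetical datasets (not data from the slides).
toy_variates = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],
    [4.0, 3.0, 2.0, 1.0],
]
toy_corr = correlation_matrix(toy_variates)
toy_score = sum_of_squared_correlations(toy_corr)
```

In a full MCCA procedure the variates would be linear projections of each dataset, chosen so that a score of this kind is maximized; the sketch covers only the scoring step.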

![Estimation of degrees of freedom by entropy rate](https://image.slidesharecdn.com/machinelearningformedicalimagingdata-120311012226-phpapp01/85/Machine-learning-for-medical-imaging-data-35-320.jpg)

Entropy rate of a Gaussian process:

$$h(x) = \ln\sqrt{2\pi e} + \frac{1}{4\pi}\int_{-\pi}^{\pi} \ln s(\omega)\, d\omega$$

$h(x) = \ln\sqrt{2\pi e}$ iff $x[n]$ is an i.i.d. random process

$h(x) = 0.40$, $h(x) = 1.28$, $h(x) = 1.41$

Y.-O. Li, et al., HBM, 2007
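The entropy rate on this slide can be checked numerically. The sketch below integrates the log power spectrum with a midpoint rule and compares a white process against a smoother one. The unit-variance AR(1) spectrum is a hypothetical test signal chosen for the example, not something taken from the slides, and the function names are likewise assumptions.

```python
import math


def gaussian_entropy_rate(spectrum, n_points=4096):
    """h(x) = ln sqrt(2*pi*e) + (1 / (4*pi)) * integral over [-pi, pi] of ln s(w) dw."""
    step = 2.0 * math.pi / n_points
    # Midpoint rule over one full period of the spectrum.
    integral = step * sum(
        math.log(spectrum(-math.pi + (k + 0.5) * step)) for k in range(n_points)
    )
    return 0.5 * math.log(2.0 * math.pi * math.e) + integral / (4.0 * math.pi)


def ar1_spectrum(a):
    """Power spectrum of a unit-variance AR(1) process with coefficient |a| < 1."""
    return lambda w: (1.0 - a * a) / (1.0 - 2.0 * a * math.cos(w) + a * a)


# Flat spectrum: an i.i.d. (white) unit-variance process, the upper bound.
white_rate = gaussian_entropy_rate(lambda w: 1.0)

# Strongly correlated samples give a lower entropy rate.
smooth_rate = gaussian_entropy_rate(ar1_spectrum(0.9))
```

The more correlated the samples, the further the entropy rate falls below the i.i.d. value, which is the property that makes it usable as an estimate of the effective number of independent samples.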