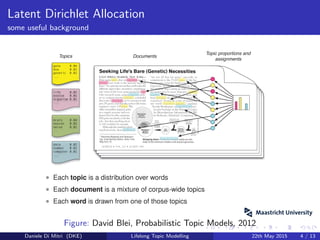

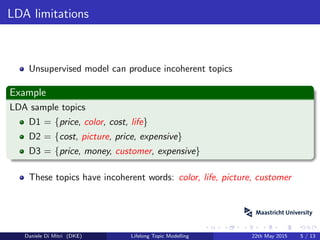

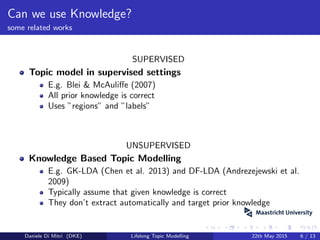

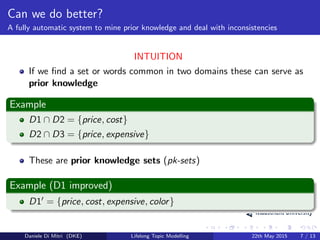

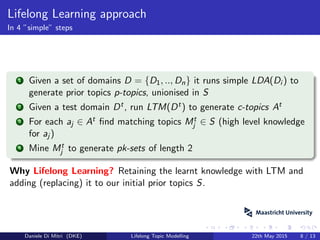

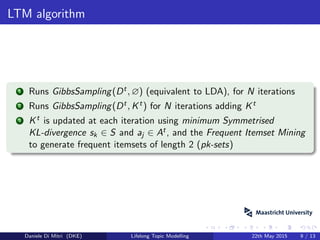

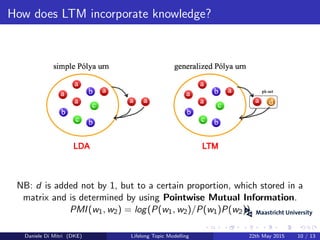

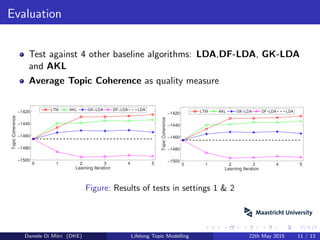

This presentation reviews a paper by Chen and Liu on lifelong topic modeling, which uses prior knowledge to improve topic modeling beyond traditional LDA. It discusses the limitations of LDA, presents a lifelong learning approach that incorporates prior knowledge automatically, and evaluates the proposed method against several baseline algorithms. It concludes with suggestions for improving the text corpora and for further testing with big data.