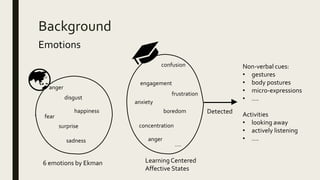

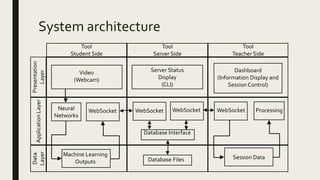

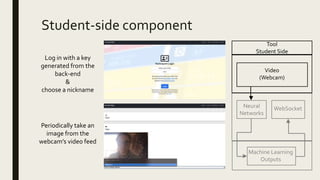

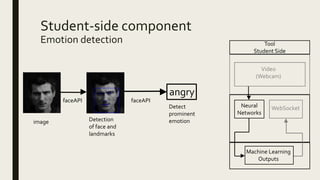

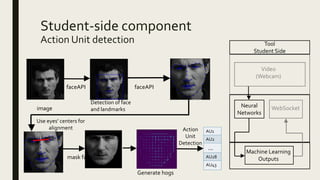

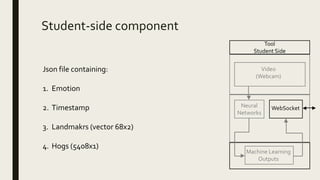

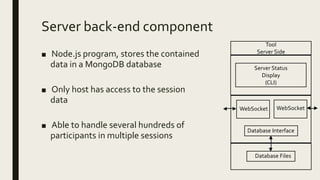

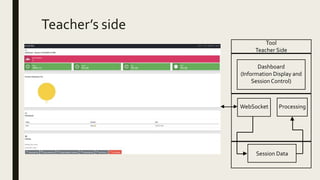

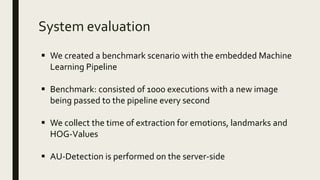

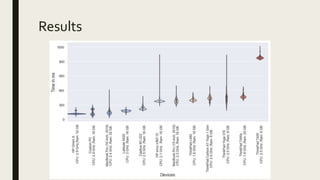

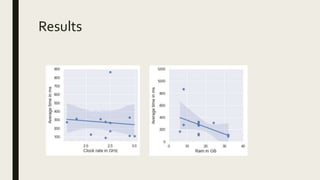

This document presents STC-Live, a privacy-preserving system for detecting students' emotional states in online synchronous learning environments. It highlights the role of non-verbal cues in student engagement and the difficulty of recognizing emotions while protecting user privacy. The system architecture uses machine learning to monitor emotional states without compromising personal data.