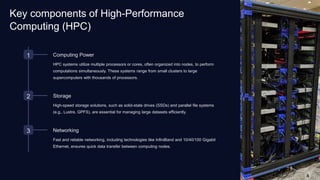

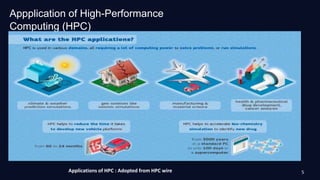

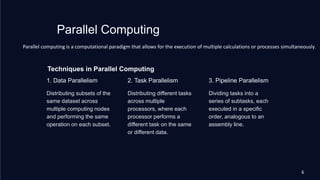

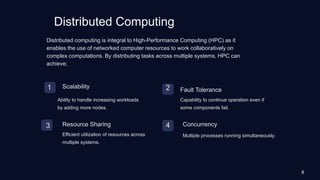

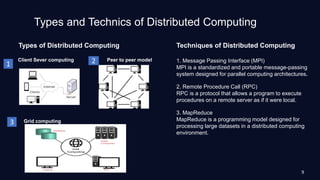

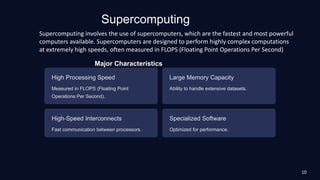

The document analyzes high-performance computing (HPC), computer architecture, and quantum computing, covering their fundamental concepts, applications, and associated technologies. It discusses computing paradigms, including parallel and distributed computing, gives examples of supercomputers, and outlines the challenges they face. It also explores quantum computing principles and algorithms, along with their implications for future technology and security.