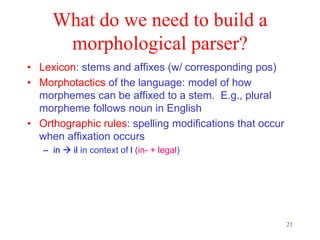

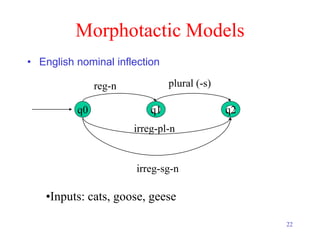

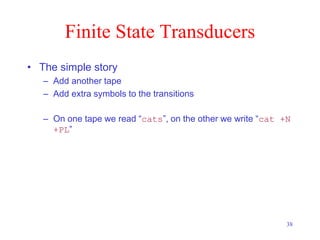

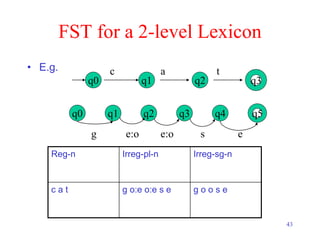

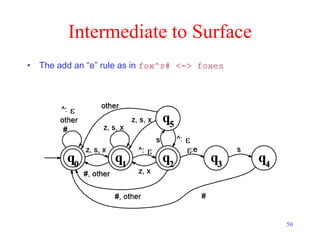

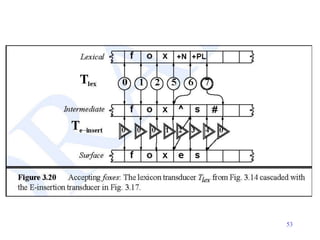

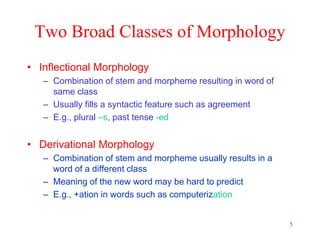

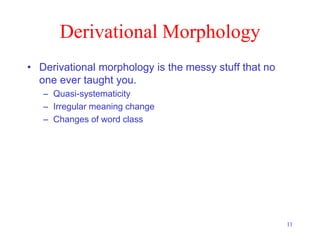

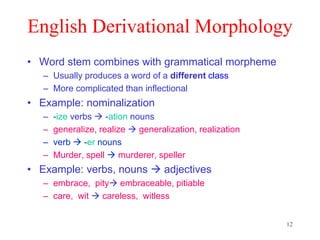

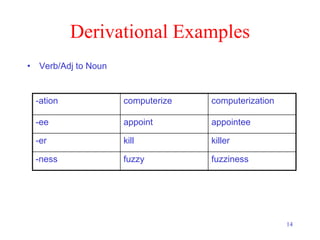

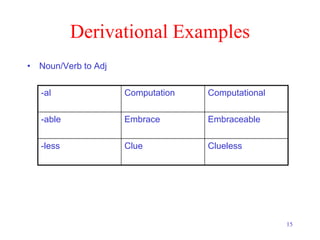

This document discusses morphology and morphological parsing. It defines morphology as the study of how words are built from morphemes, the smallest meaning-bearing units. There are two broad classes of morphology: inflectional morphology, which combines a stem with an affix and yields a word of the same class, and derivational morphology, which typically yields a word of a different class. Morphological parsing breaks a word down into its constituent morphemes. Finite-state transducers can represent morphological rules and perform parsing by mapping between the lexical level and the surface form of a word.
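To make the lexical-to-surface mapping concrete, here is a minimal Python sketch of a toy transducer for regular English noun plurals. It is an illustration under stated assumptions, not the formalism used in the slides: the stems `cat` and `dog`, the state names, and the helpers `build_fst`, `parse`, and `generate` are all hypothetical. Each arc pairs one lexical symbol with a (possibly empty) surface string, so the same arcs can be walked in either direction.

```python
from collections import defaultdict

def build_fst(stems):
    """Build toy arcs: arcs[state] -> [(lexical_symbol, surface_string, next_state)]."""
    arcs = defaultdict(list)
    final = "END"
    for stem in stems:
        state = "START"
        # Stem letters are copied unchanged between the two levels.
        for i, ch in enumerate(stem):
            nxt = f"{stem}:{i + 1}"
            arcs[state].append((ch, ch, nxt))
            state = nxt
        # Feature tags exist only on the lexical level; +PL surfaces as "s".
        arcs[state].append(("+N", "", f"{stem}:N"))
        arcs[f"{stem}:N"].append(("+SG", "", final))
        arcs[f"{stem}:N"].append(("+PL", "s", final))
    return arcs, final

def parse(arcs, final, surface):
    """Surface -> lexical: collect every path whose surface side spells the input."""
    results, stack = [], [("START", 0, "")]
    while stack:
        state, pos, lexical = stack.pop()
        if state == final and pos == len(surface):
            results.append(lexical)
            continue
        for lex, surf, nxt in arcs.get(state, []):
            if surface.startswith(surf, pos):
                sep = " " if lex.startswith("+") else ""
                stack.append((nxt, pos + len(surf), lexical + sep + lex))
    return sorted(results)

def generate(arcs, final, lexical):
    """Lexical -> surface: follow arcs whose lexical side matches each symbol."""
    stem, *tags = lexical.split()
    symbols = list(stem) + tags
    results, stack = [], [("START", 0, "")]
    while stack:
        state, i, surface = stack.pop()
        if state == final and i == len(symbols):
            results.append(surface)
            continue
        if i < len(symbols):
            for lex, surf, nxt in arcs.get(state, []):
                if lex == symbols[i]:
                    stack.append((nxt, i + 1, surface + surf))
    return sorted(results)

# Hypothetical two-word lexicon, chosen only for illustration.
arcs, final = build_fst(["cat", "dog"])
print(parse(arcs, final, "cats"))           # ['cat +N +PL']
print(generate(arcs, final, "dog +N +SG"))  # ['dog']
```

The sketch covers only the regular `-s` plural; spelling changes such as `fox` to `foxes` would need additional arcs or a separate rule layer.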

![19

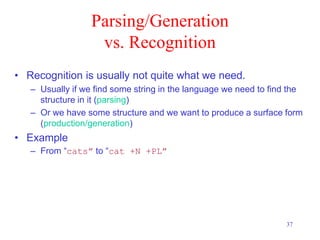

Parsing

• Taking a surface input and identifying its components

and underlying structure

• Morphological parsing: parsing a word into stem and

affixes and identifying the parts and their

relationships

– Stem and features:

• goose goose +N +SG or goose +V

• geese goose +N +PL

• gooses goose +V +3SG

– Bracketing: indecipherable [in [[de [cipher]] able]]](https://image.slidesharecdn.com/lect4-morphology-231118083821-998b0d06/85/lect4-morphology-pptx-19-320.jpg)
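The two output styles on this slide can be sketched in a few lines of Python. This is a minimal, table-driven illustration, not a general parser: the `STEMS` and `IRREGULAR_PLURALS` tables, the `analyze` and `bracket` helpers, and the nested-tuple encoding of the tree are assumptions made for the example.

```python
# Hypothetical mini-lexicon: each stem lists the word classes it can belong to.
STEMS = {"goose": {"N", "V"}}
# Irregular plural surface forms mapped to their stems.
IRREGULAR_PLURALS = {"geese": "goose"}

def analyze(word):
    """Return all stem-and-features analyses of a surface word."""
    analyses = []
    if word in STEMS:
        if "N" in STEMS[word]:
            analyses.append(f"{word} +N +SG")
        if "V" in STEMS[word]:
            analyses.append(f"{word} +V")
    if word in IRREGULAR_PLURALS:
        analyses.append(f"{IRREGULAR_PLURALS[word]} +N +PL")
    if word.endswith("s") and word[:-1] in STEMS:
        stem = word[:-1]
        if "V" in STEMS[stem]:
            analyses.append(f"{stem} +V +3SG")
        # A listed irregular plural blocks the regular -s noun plural.
        if "N" in STEMS[stem] and stem not in IRREGULAR_PLURALS.values():
            analyses.append(f"{stem} +N +PL")
    return analyses

def bracket(node):
    """Render a nested tuple of morphemes as a bracketed string."""
    if isinstance(node, str):
        return node
    return "[" + " ".join(bracket(child) for child in node) + "]"

# Structure of "indecipherable", written by hand to match the slide.
tree = ("in", (("de", ("cipher",)), "able"))

print(analyze("goose"))   # ['goose +N +SG', 'goose +V']
print(analyze("geese"))   # ['goose +N +PL']
print(analyze("gooses"))  # ['goose +V +3SG']
print(bracket(tree))      # [in [[de [cipher]] able]]
```

Note that `gooses` receives only the verb reading: the lexicon already lists `geese` as the plural of the noun, so the regular noun plural is suppressed.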