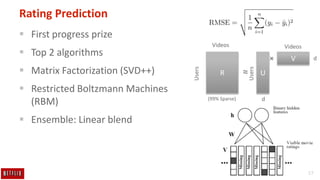

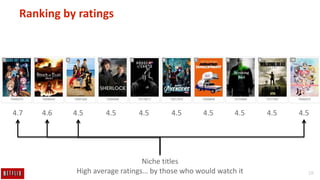

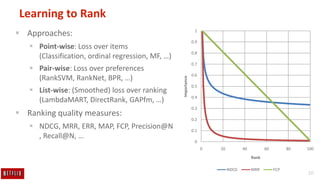

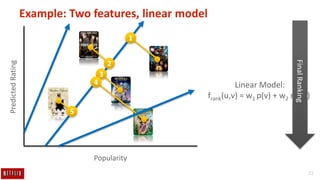

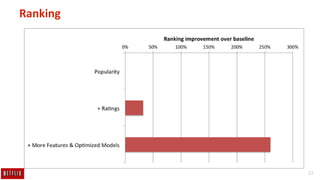

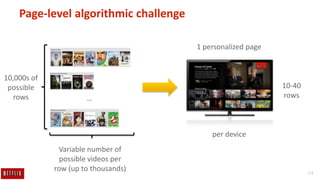

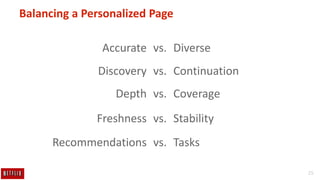

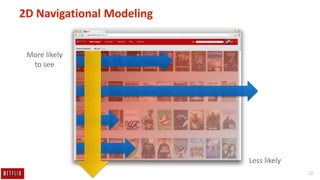

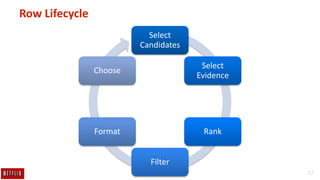

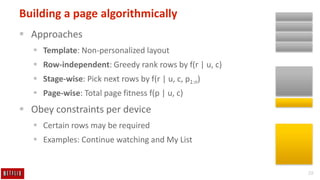

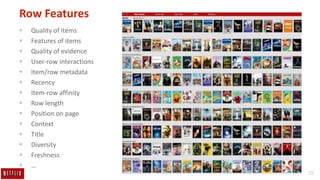

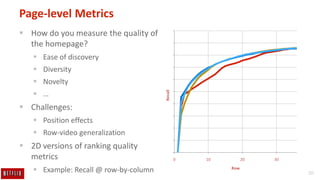

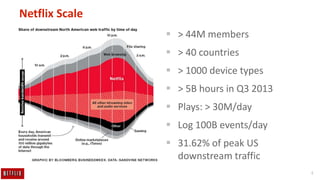

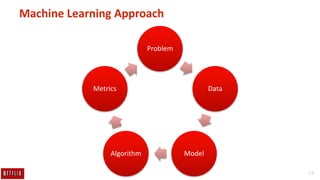

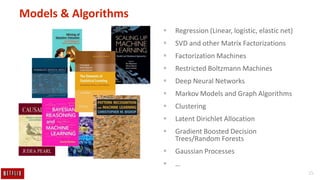

The document discusses Netflix's approach to personalized recommendations and homepage layout, detailing the algorithms and machine learning methods used to improve user satisfaction. It covers techniques such as matrix factorization and deep learning for improving content discovery, and addresses challenges such as balancing diversity and freshness. The presentation also traces the evolution of recommendations and outlines future research directions in the field.
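The summary mentions matrix factorization. As a hedged illustration of the general technique, and not Netflix's production method, here is a minimal sketch of latent-factor matrix factorization trained with stochastic gradient descent on a toy ratings list. The function names (`factorize`, `predict`, `rmse`), the hyperparameters (`k`, `lr`, `reg`, `epochs`), and the toy data are hypothetical choices made for illustration.

```python
import math
import random


def factorize(ratings, n_users, n_items, k=2, lr=0.02, reg=0.02, epochs=2000, seed=0):
    """Learn k-dimensional user factors P and item factors Q so that
    dot(P[u], Q[i]) approximates each observed rating r_ui.

    ratings: list of (user_index, item_index, rating) triples. Only observed
    entries are used, so missing ratings are never treated as zeros.
    Minimizes squared error plus L2 regularization with plain SGD.
    """
    rng = random.Random(seed)
    P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    data = list(ratings)
    for _ in range(epochs):
        rng.shuffle(data)  # visit the observed ratings in a new random order each epoch
        for u, i, r in data:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]  # use the old values in both updates
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


def predict(P, Q, u, i):
    """Predicted rating: inner product of user u's and item i's factor vectors."""
    return sum(pu * qi for pu, qi in zip(P[u], Q[i]))


def rmse(P, Q, ratings):
    """Root-mean-square error over the given (user, item, rating) triples."""
    return math.sqrt(sum((r - predict(P, Q, u, i)) ** 2 for u, i, r in ratings) / len(ratings))


if __name__ == "__main__":
    # Hypothetical toy data: 5 users x 4 items, with some ratings missing.
    ratings = [
        (0, 0, 5), (0, 1, 3), (0, 3, 1),
        (1, 0, 4), (1, 3, 1),
        (2, 0, 1), (2, 1, 1), (2, 3, 5),
        (3, 0, 1), (3, 3, 4),
        (4, 1, 1), (4, 2, 5), (4, 3, 4),
    ]
    P, Q = factorize(ratings, n_users=5, n_items=4)
    print("training RMSE:", round(rmse(P, Q, ratings), 3))
    print("predicted rating for user 1, item 1:", round(predict(P, Q, 1, 1), 2))
```

Fitting only the observed entries is the central design choice here: treating unrated titles as zero ratings would teach the model that users dislike everything they have not yet watched.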

![Slide 16: Offline/Online testing process](https://image.slidesharecdn.com/learningapersonalizedhomepagepublic-140410230812-phpapp01/85/Learning-a-Personalized-Homepage-16-320.jpg)

Offline/Online testing process: Offline testing (days) → [success] → Online A/B testing (weeks to months) → [success] → Rollout feature to all users. The slide also marks a [fail] path.
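The slide describes a two-stage gate: cheap offline tests (days) filter ideas before slower online A/B tests (weeks to months), and only ideas that succeed in both stages are rolled out to all users. The sketch below is a hypothetical illustration of that gating logic, not Netflix's tooling. The offline metric, the choice of a two-proportion z-test for the A/B stage, the 1.96 threshold, and every name (`ABResult`, `offline_gate`, `online_gate`, `decide`) are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class ABResult:
    """Counts from an A/B test on a binary outcome (hypothetical, e.g. 'member retained')."""
    control_users: int
    control_successes: int
    treatment_users: int
    treatment_successes: int


def offline_gate(candidate_metric, baseline_metric, min_lift=0.0):
    """Offline stage: pass if the candidate beats the baseline on a held-out
    metric (e.g. a ranking metric on historical data) by more than min_lift."""
    return candidate_metric - baseline_metric > min_lift


def two_proportion_z(res):
    """z-statistic comparing treatment vs. control success rates (pooled variance)."""
    p_control = res.control_successes / res.control_users
    p_treatment = res.treatment_successes / res.treatment_users
    pooled = (res.control_successes + res.treatment_successes) / (
        res.control_users + res.treatment_users
    )
    se = math.sqrt(pooled * (1 - pooled) * (1 / res.control_users + 1 / res.treatment_users))
    return (p_treatment - p_control) / se


def online_gate(res, z_crit=1.96):
    """Online stage: pass only if the treatment is better and z exceeds z_crit
    (1.96 is roughly the 5% two-sided significance threshold)."""
    return two_proportion_z(res) > z_crit


def decide(candidate_metric, baseline_metric, run_ab_test):
    """Run the cheap offline gate first; call run_ab_test() only if it passes."""
    if not offline_gate(candidate_metric, baseline_metric):
        return "fail: offline"
    if not online_gate(run_ab_test()):
        return "fail: online"
    return "rollout to all users"


if __name__ == "__main__":
    ab = ABResult(control_users=10_000, control_successes=5_000,
                  treatment_users=10_000, treatment_successes=5_300)
    print(decide(candidate_metric=0.82, baseline_metric=0.80, run_ab_test=lambda: ab))
```

Passing the A/B test as a callable mirrors the slide's time costs: an idea that fails offline never consumes weeks of online traffic.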