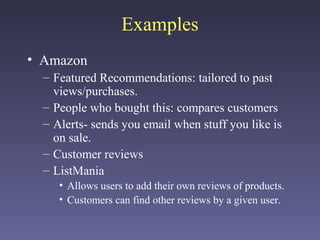

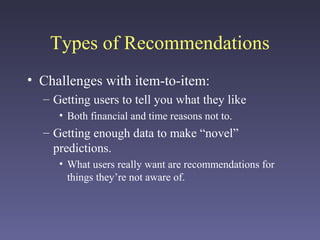

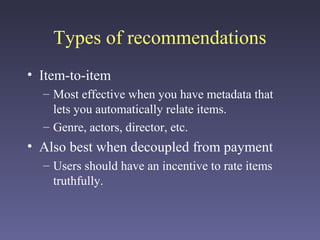

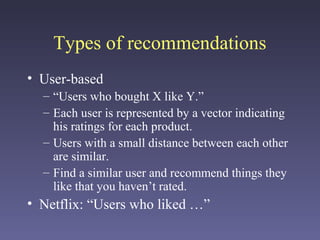

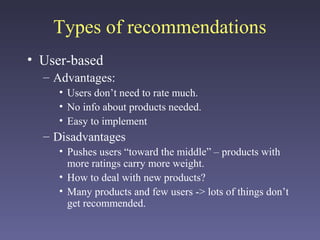

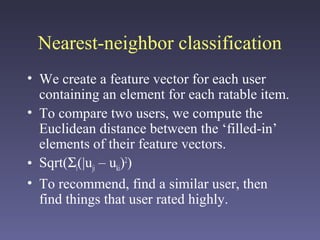

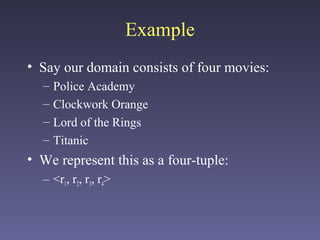

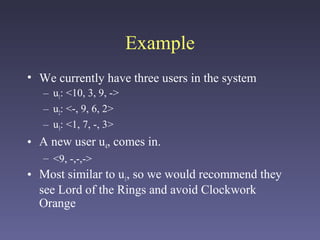

Recommender systems let online retailers tailor their sites to consumer tastes by aiding browsing and suggesting related items. This personalization is one of e-commerce's advantages over brick-and-mortar stores. Common techniques include item-to-item recommendations based on user ratings, user-to-user comparisons based on item preferences, and population-based suggestions of popular items. Challenges include obtaining enough user data, making recommendations that are novel rather than obvious, and addressing ethical issues.
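As a rough illustration of two of the techniques named above, the sketch below computes item-to-item similarity from user ratings and a population-based popularity ranking. It is a minimal example under stated assumptions, not any particular retailer's method: the `ratings` data, the function names, and the choice of cosine similarity as the similarity measure are all hypothetical and chosen only for illustration.

```python
from math import sqrt

# Hypothetical toy data (user -> {item: rating}); not taken from the source.
ratings = {
    "ana": {"book": 5, "lamp": 3, "mug": 4},
    "ben": {"book": 4, "mug": 5},
    "cy": {"lamp": 5, "pen": 4},
    "dee": {"book": 5, "mug": 4, "pen": 1},
}


def item_vectors(ratings):
    """Invert user -> item ratings into item -> {user: rating}."""
    vectors = {}
    for user, items in ratings.items():
        for item, score in items.items():
            vectors.setdefault(item, {})[user] = score
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse rating vectors (dicts)."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[u] * b[u] for u in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def similar_items(item, ratings, top_n=2):
    """Item-to-item: rank other items by how similarly users rated them."""
    vectors = item_vectors(ratings)
    target = vectors[item]
    scores = [
        (other, cosine(target, vec))
        for other, vec in vectors.items()
        if other != item
    ]
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top_n]


def popular_items(ratings, top_n=2):
    """Population-based: rank items by how many users rated them."""
    counts = {}
    for items in ratings.values():
        for item in items:
            counts[item] = counts.get(item, 0) + 1
    # Ties are broken alphabetically so the result is deterministic.
    return sorted(counts, key=lambda i: (-counts[i], i))[:top_n]


if __name__ == "__main__":
    print(similar_items("book", ratings))
    print(popular_items(ratings))
```

A user-to-user comparison could reuse the same `cosine` function on the original `ratings` rows instead of the inverted item vectors. The popularity ranking needs no per-user history at all, which is one reason it is a common fallback when little user data is available.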