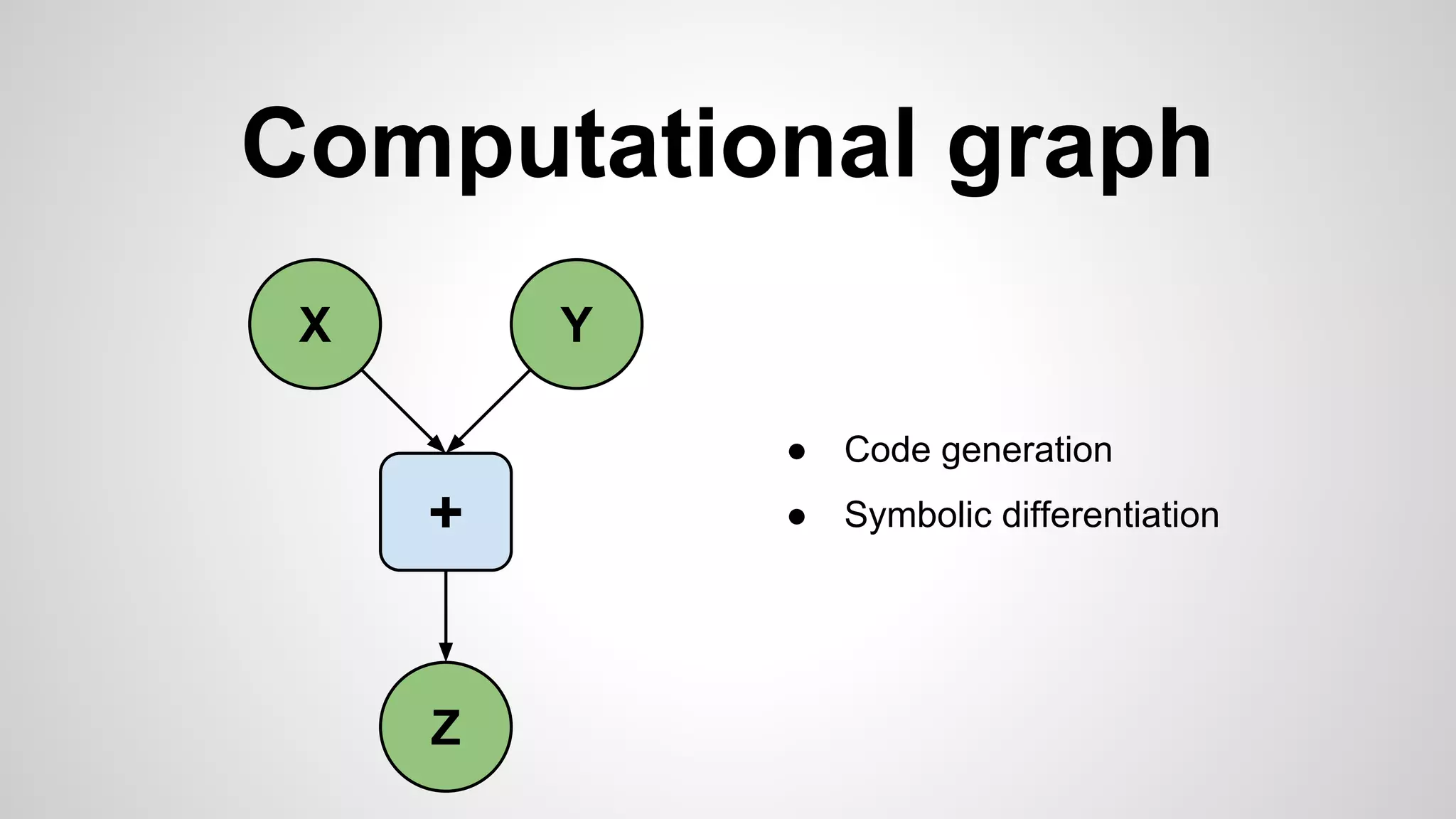

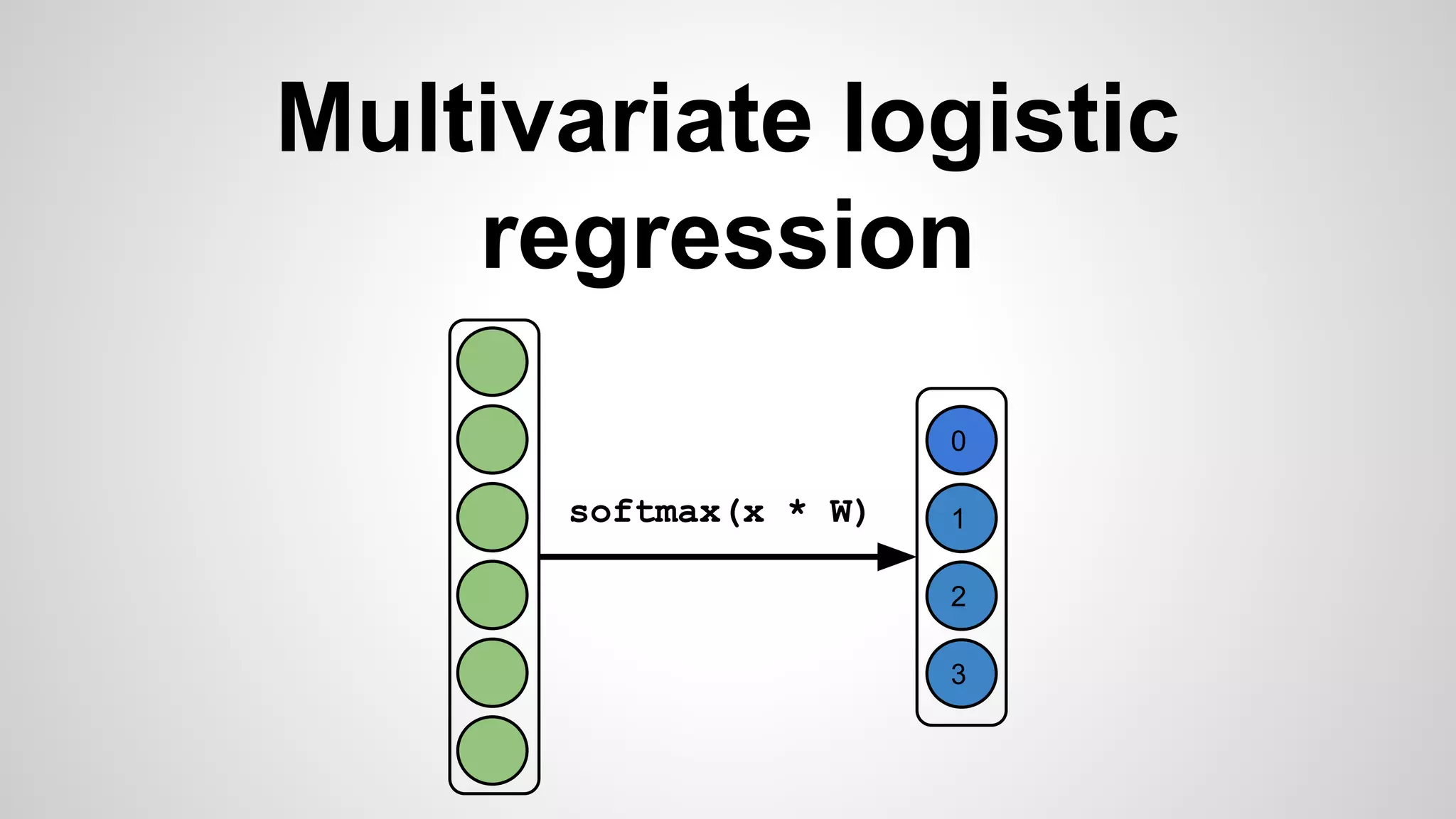

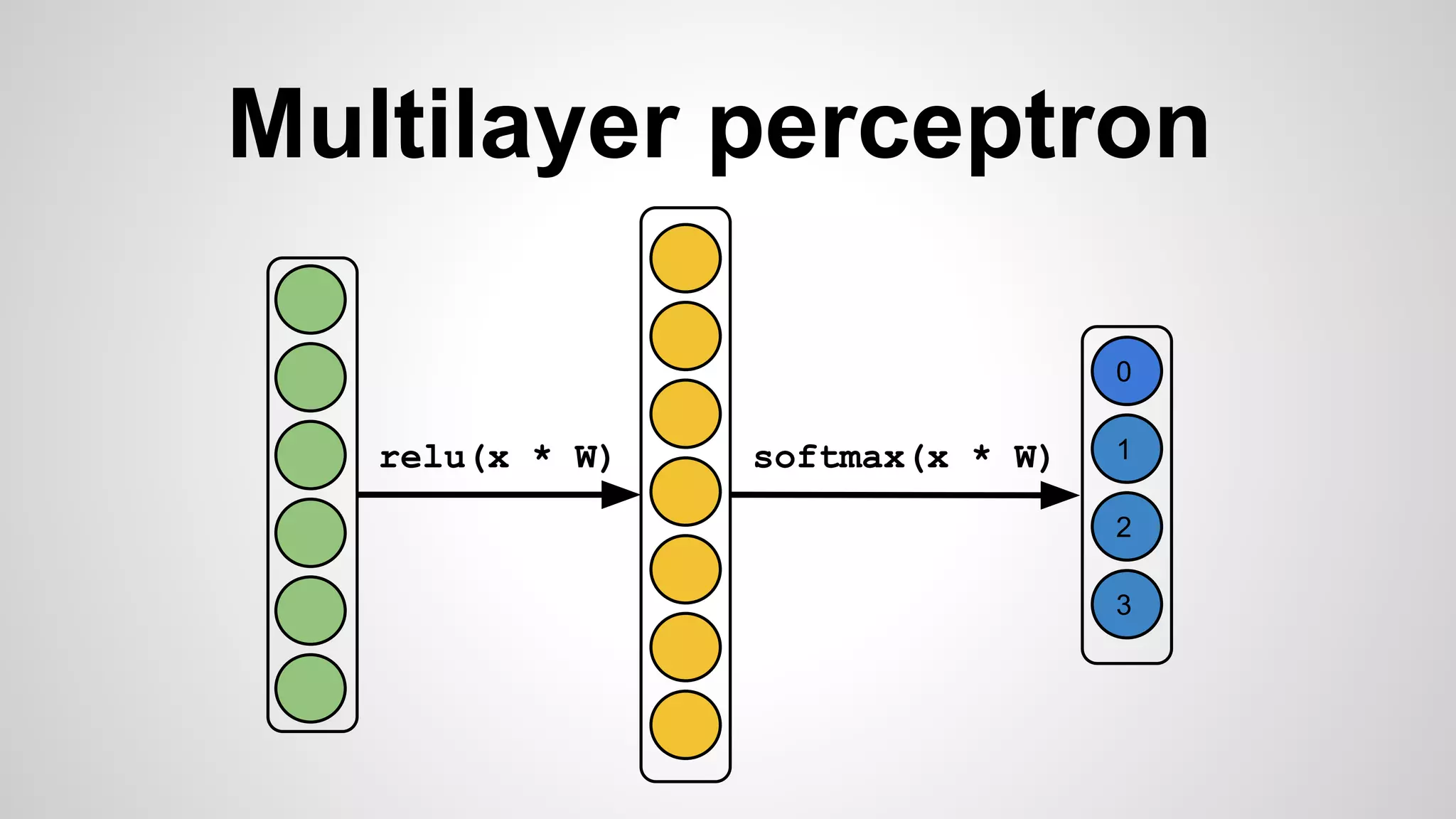

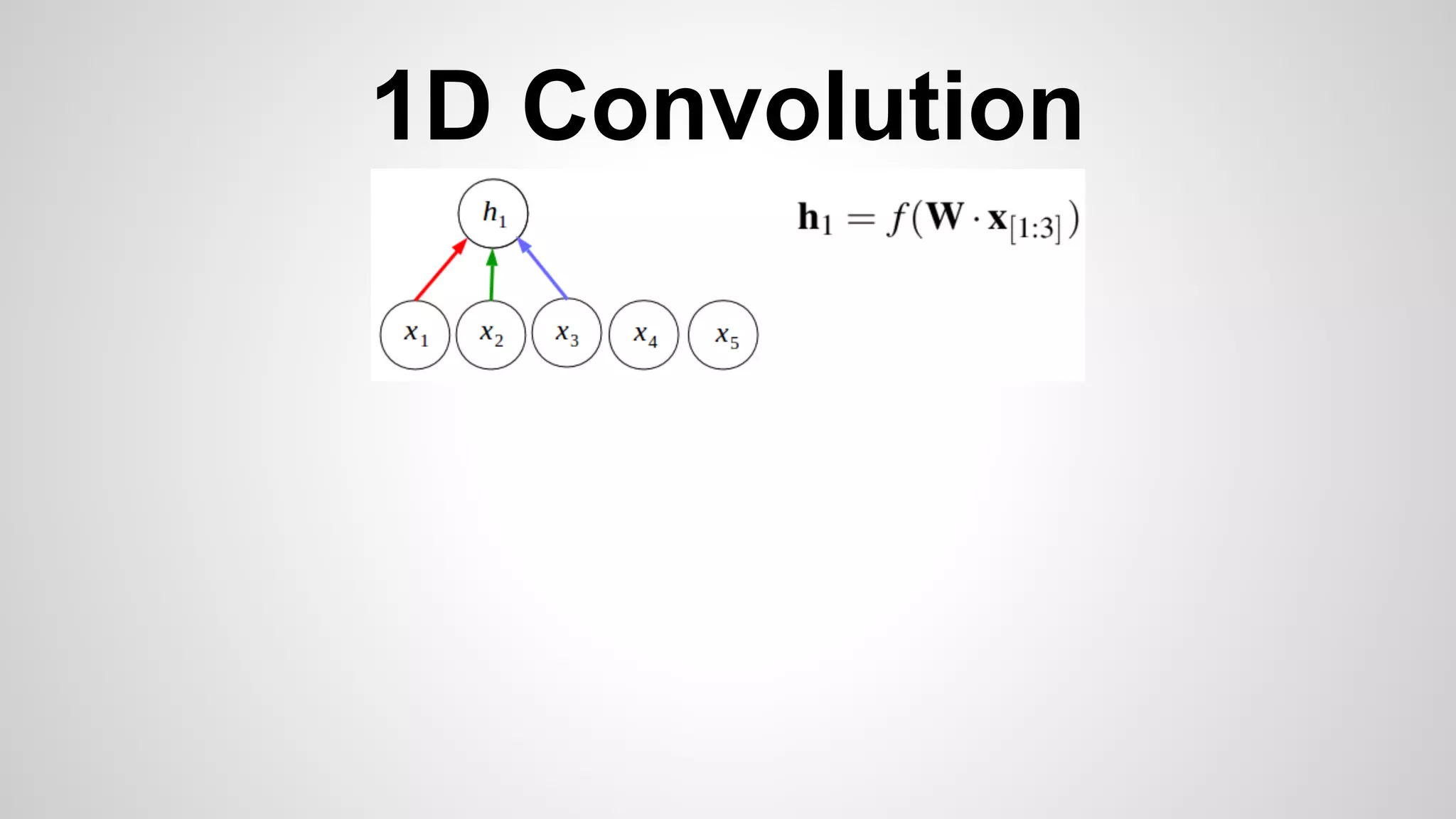

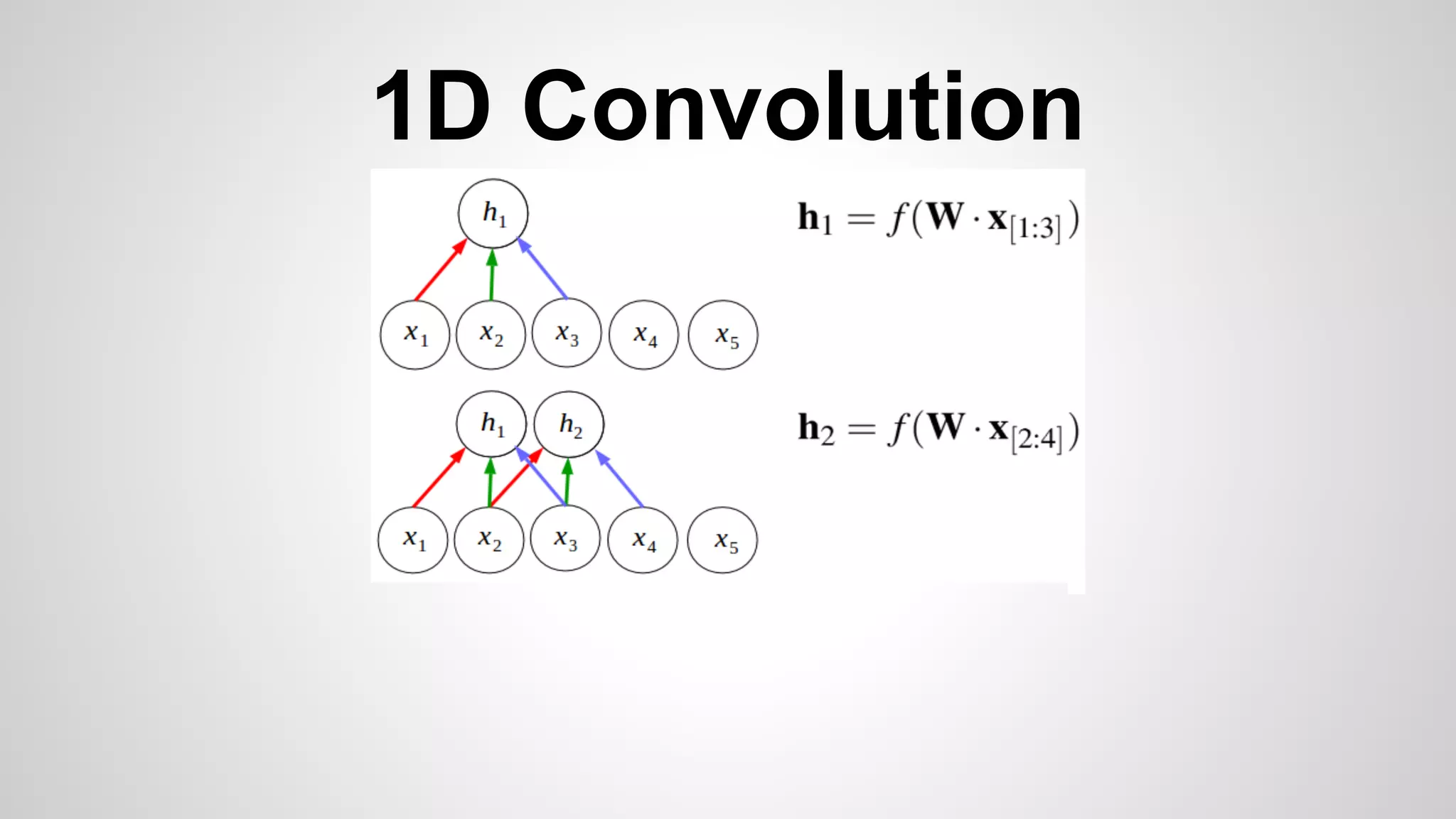

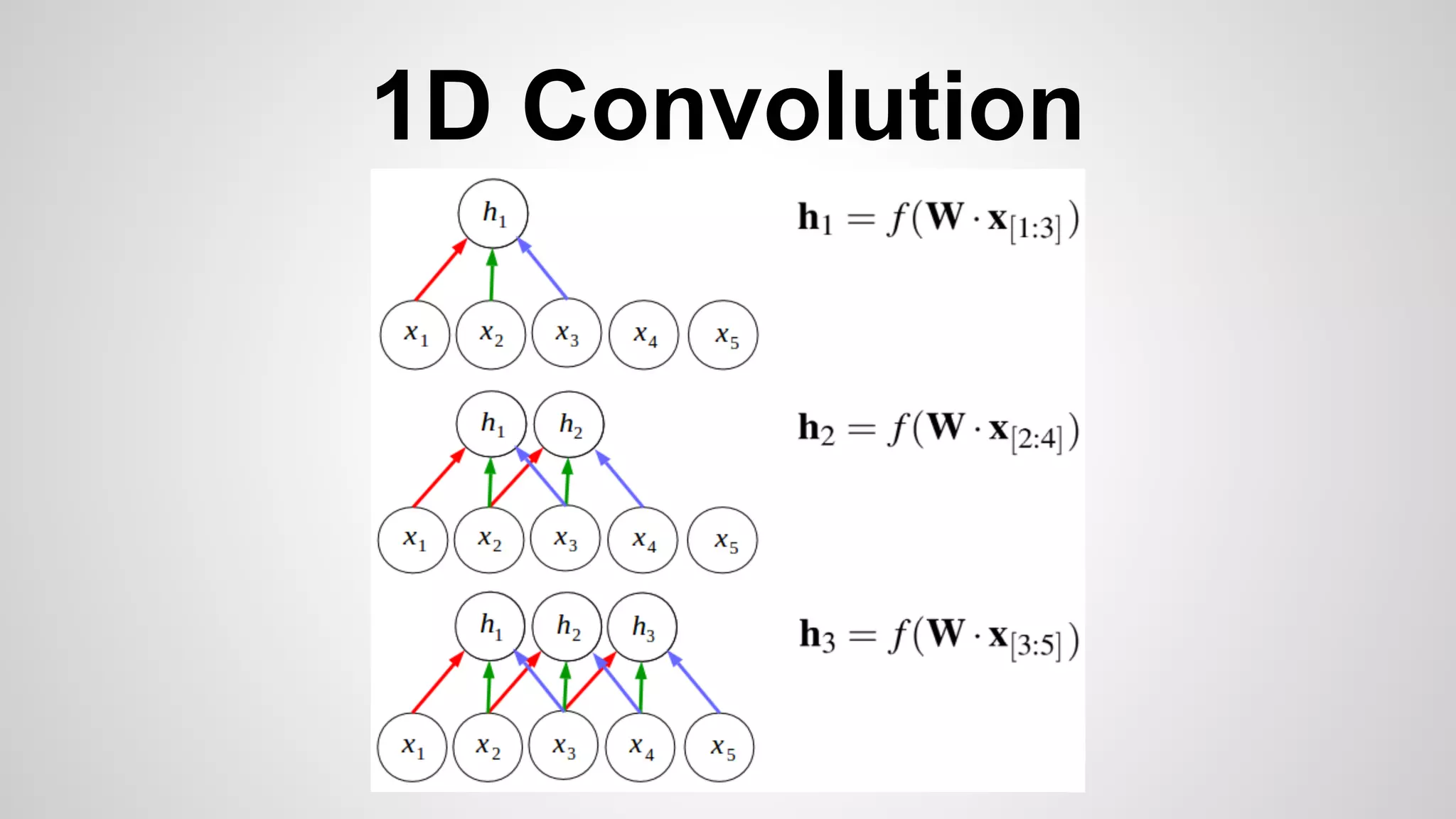

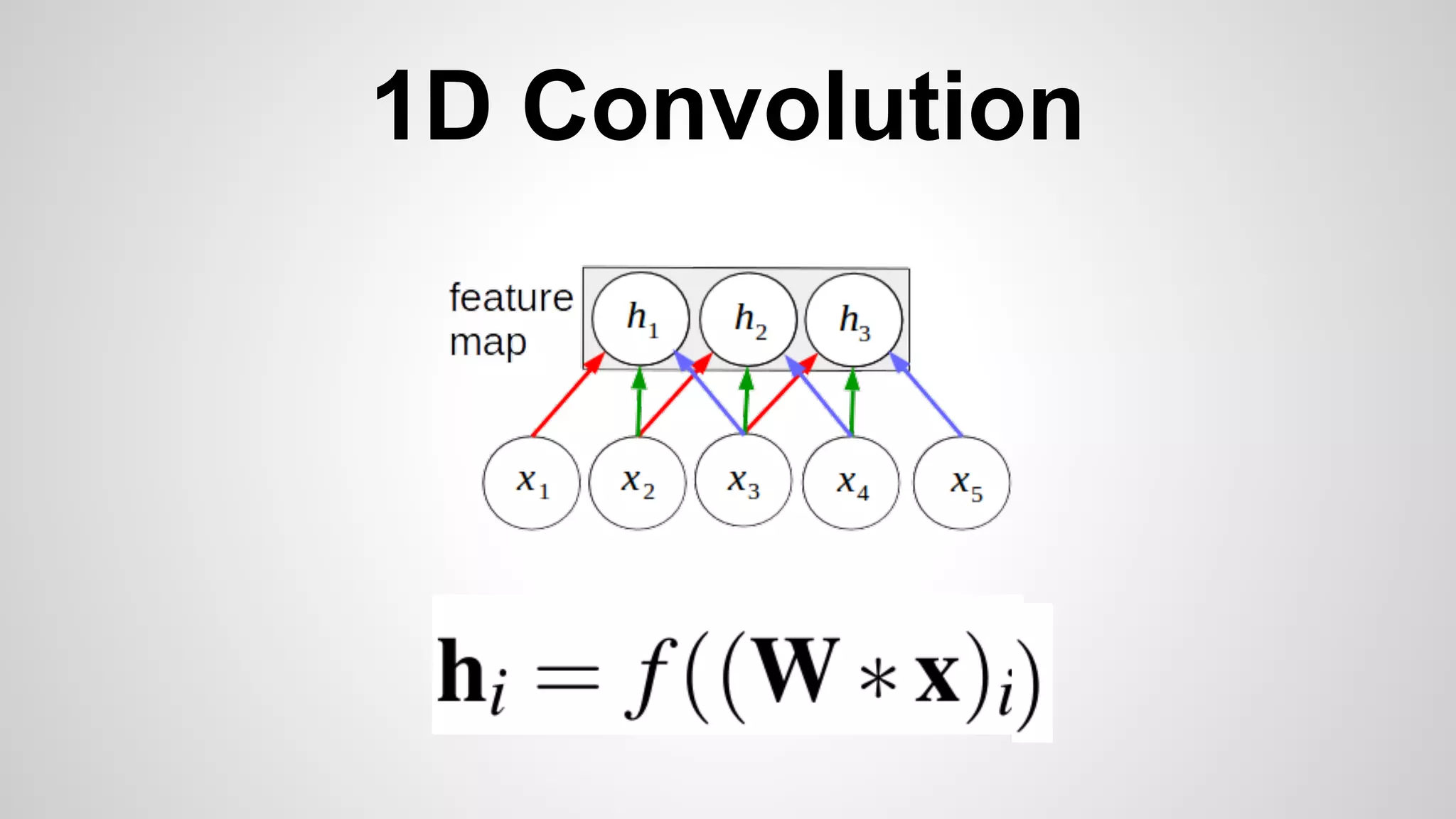

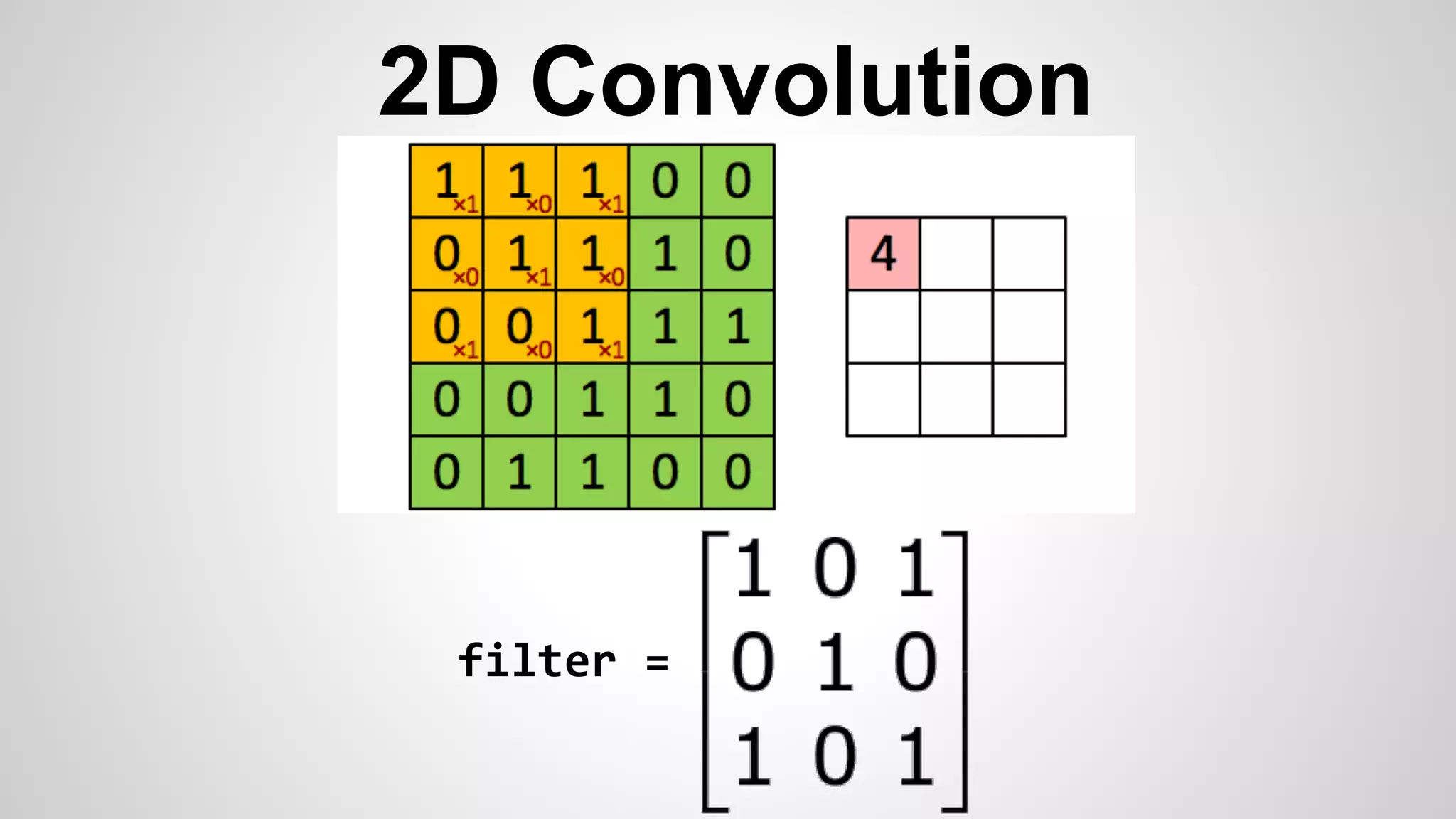

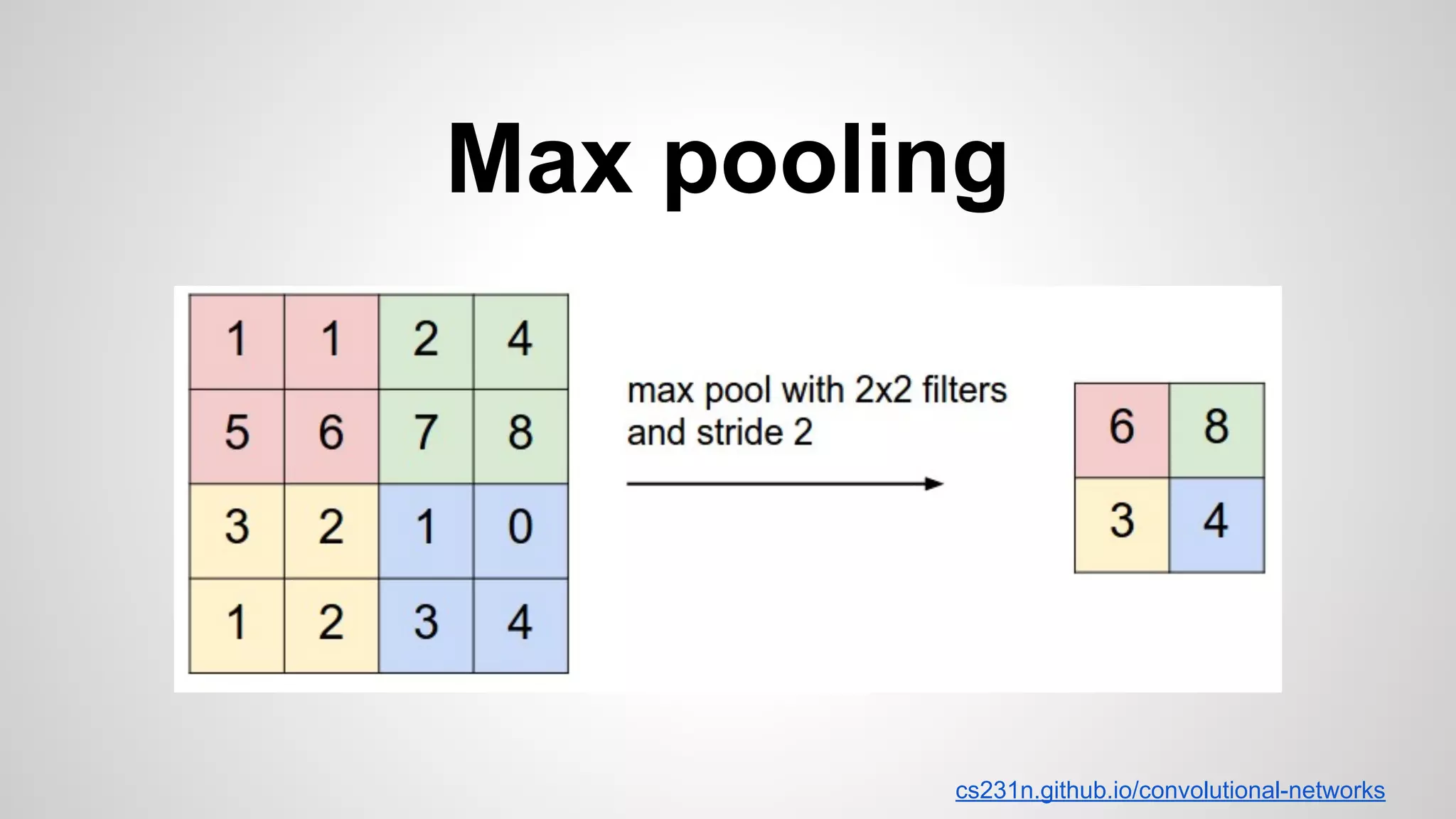

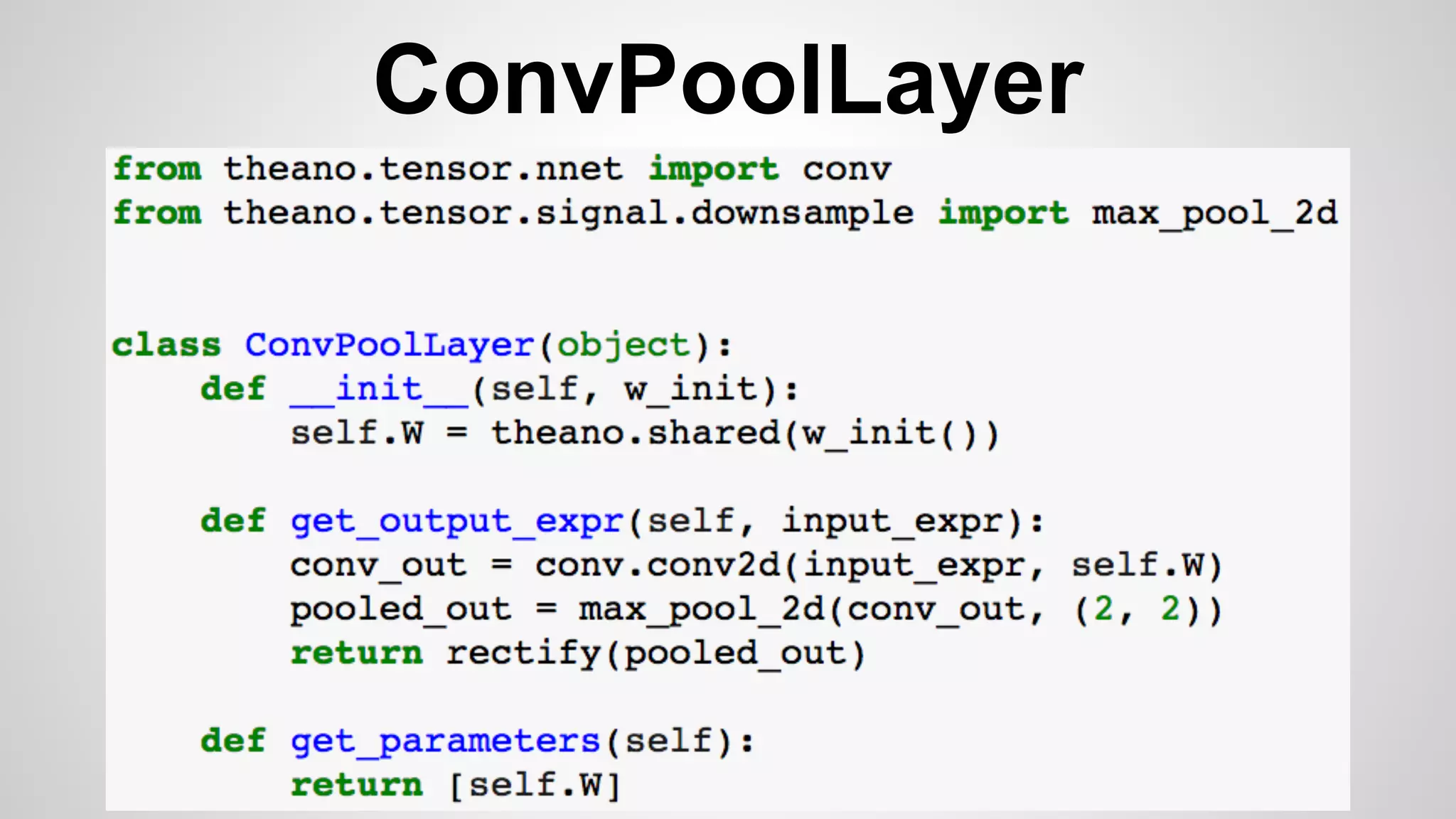

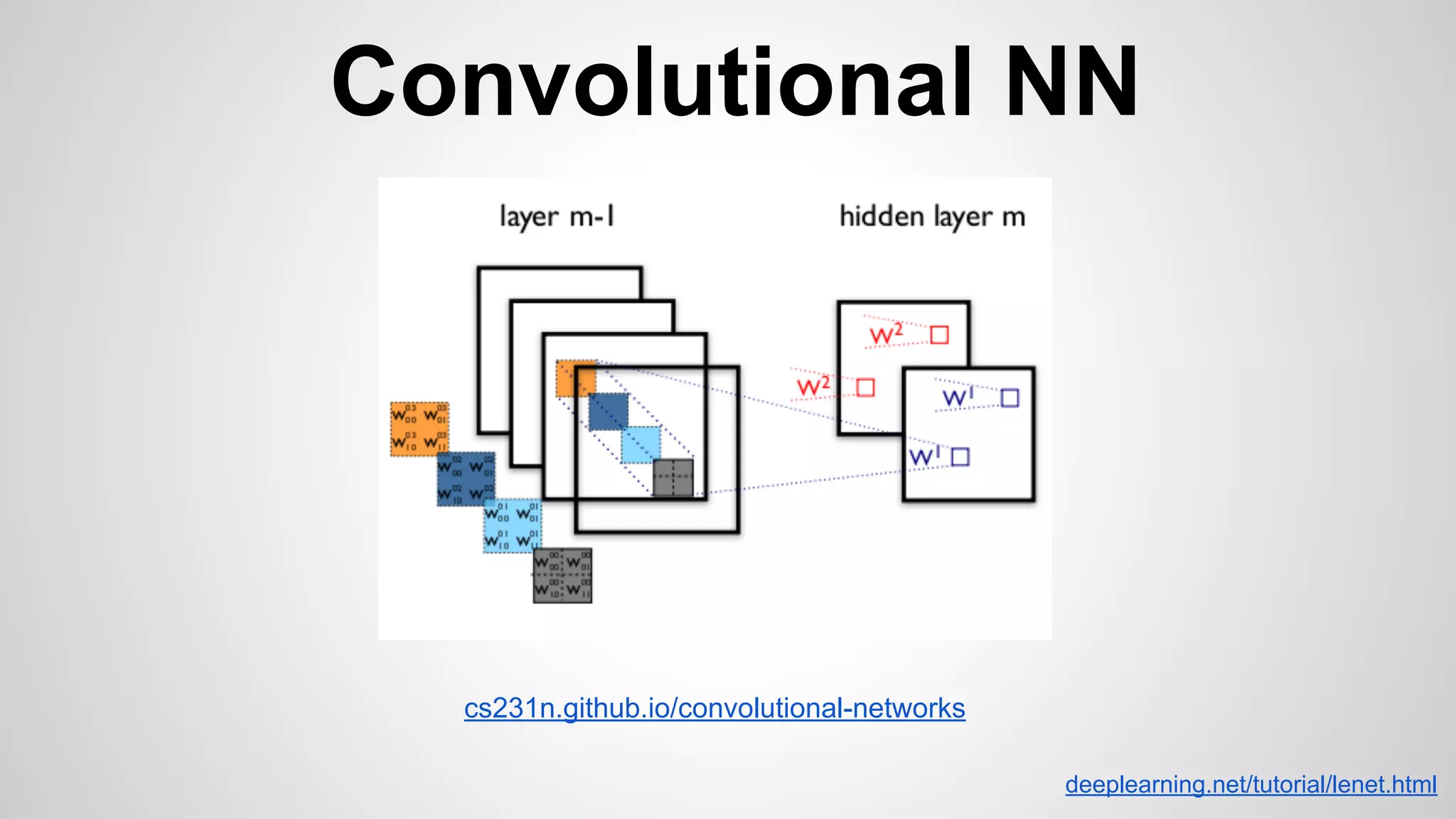

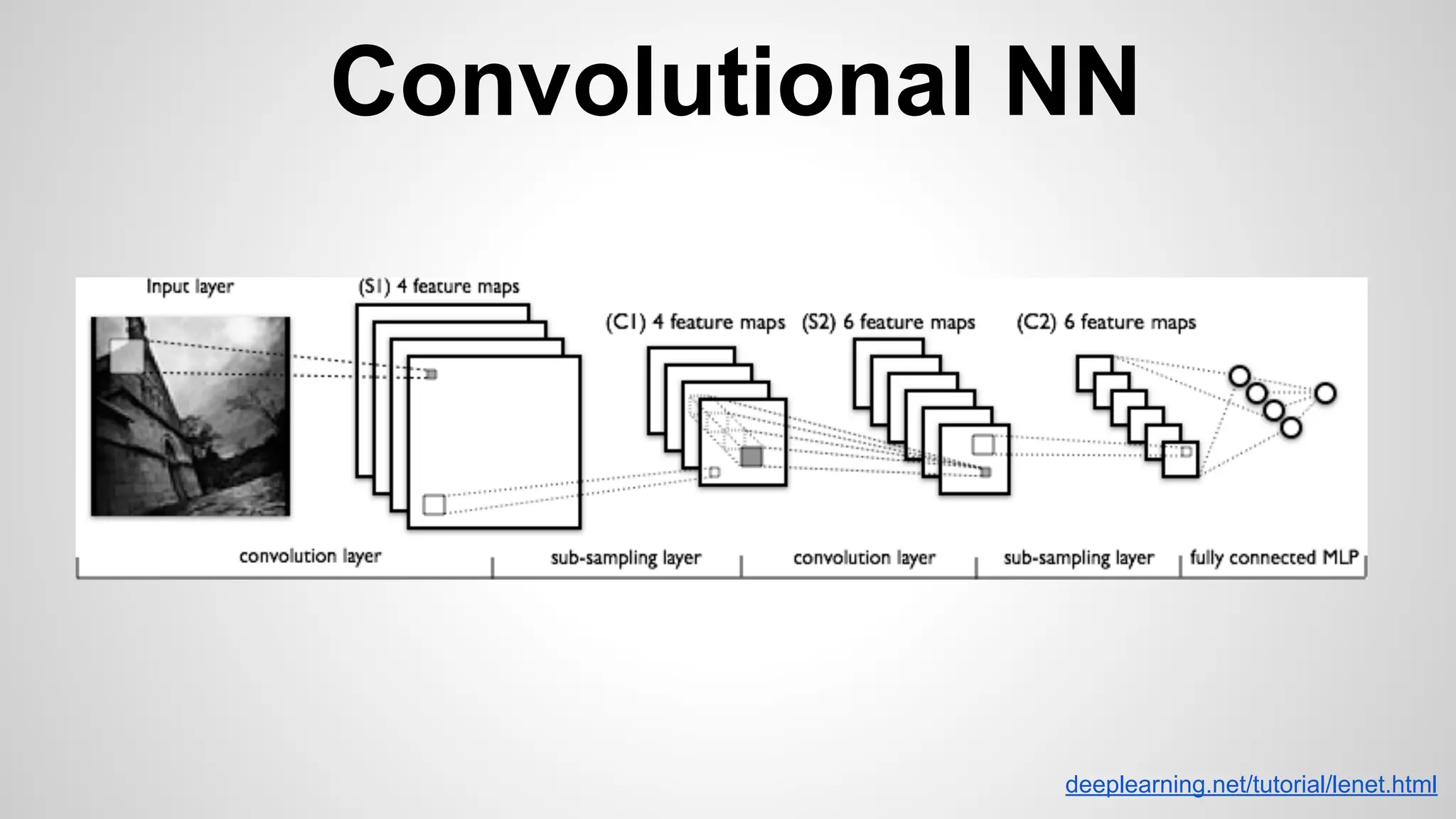

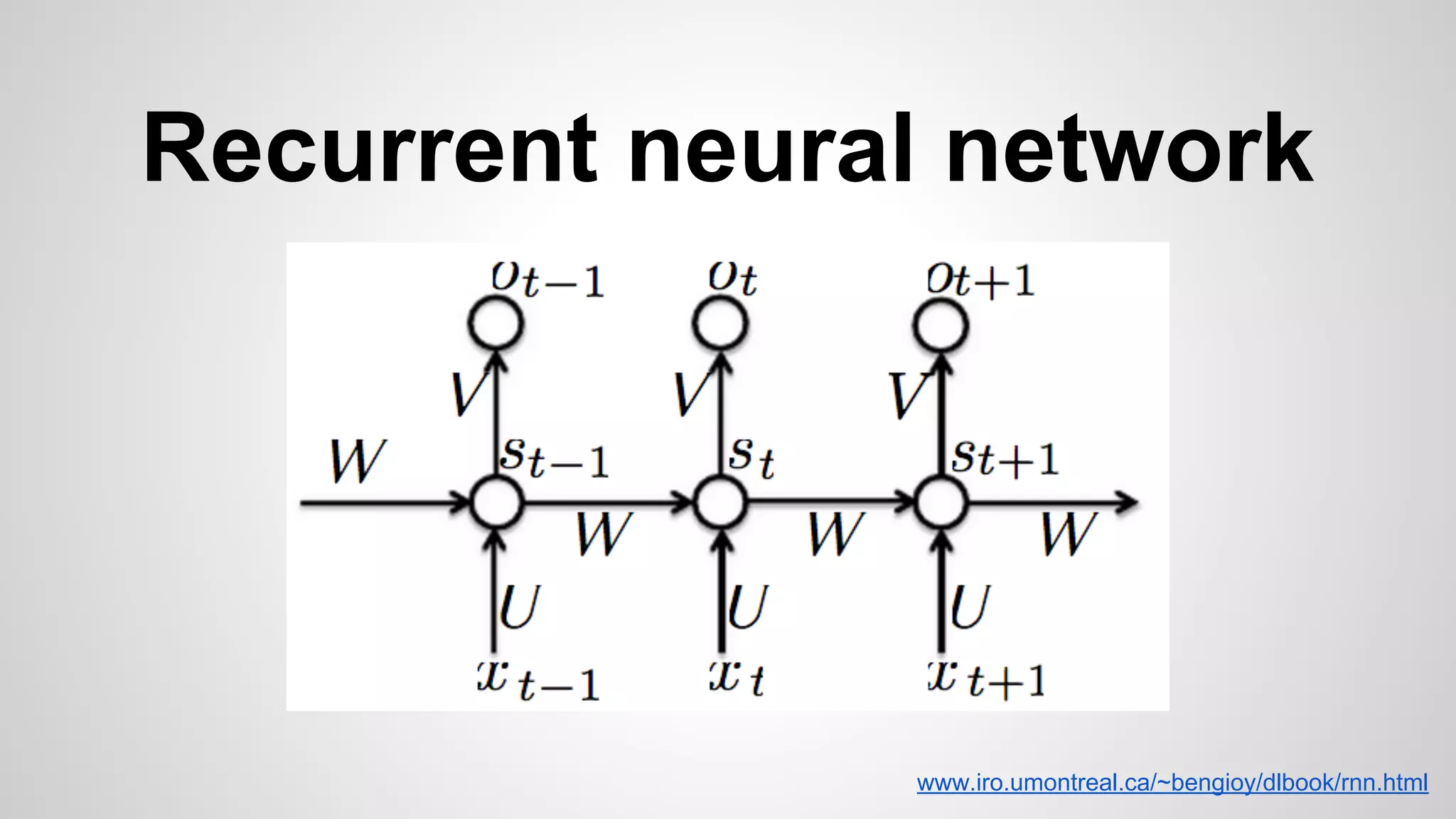

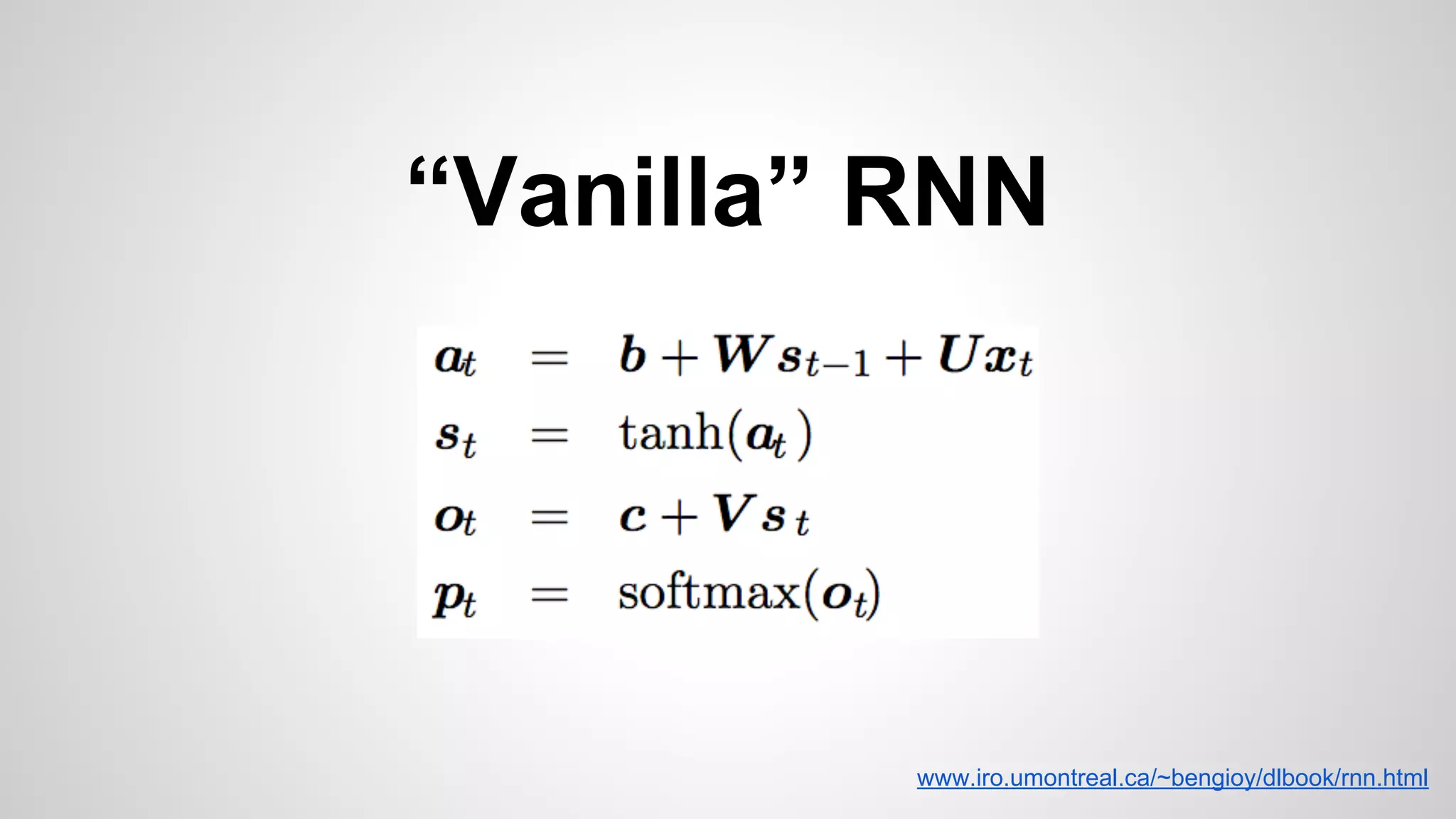

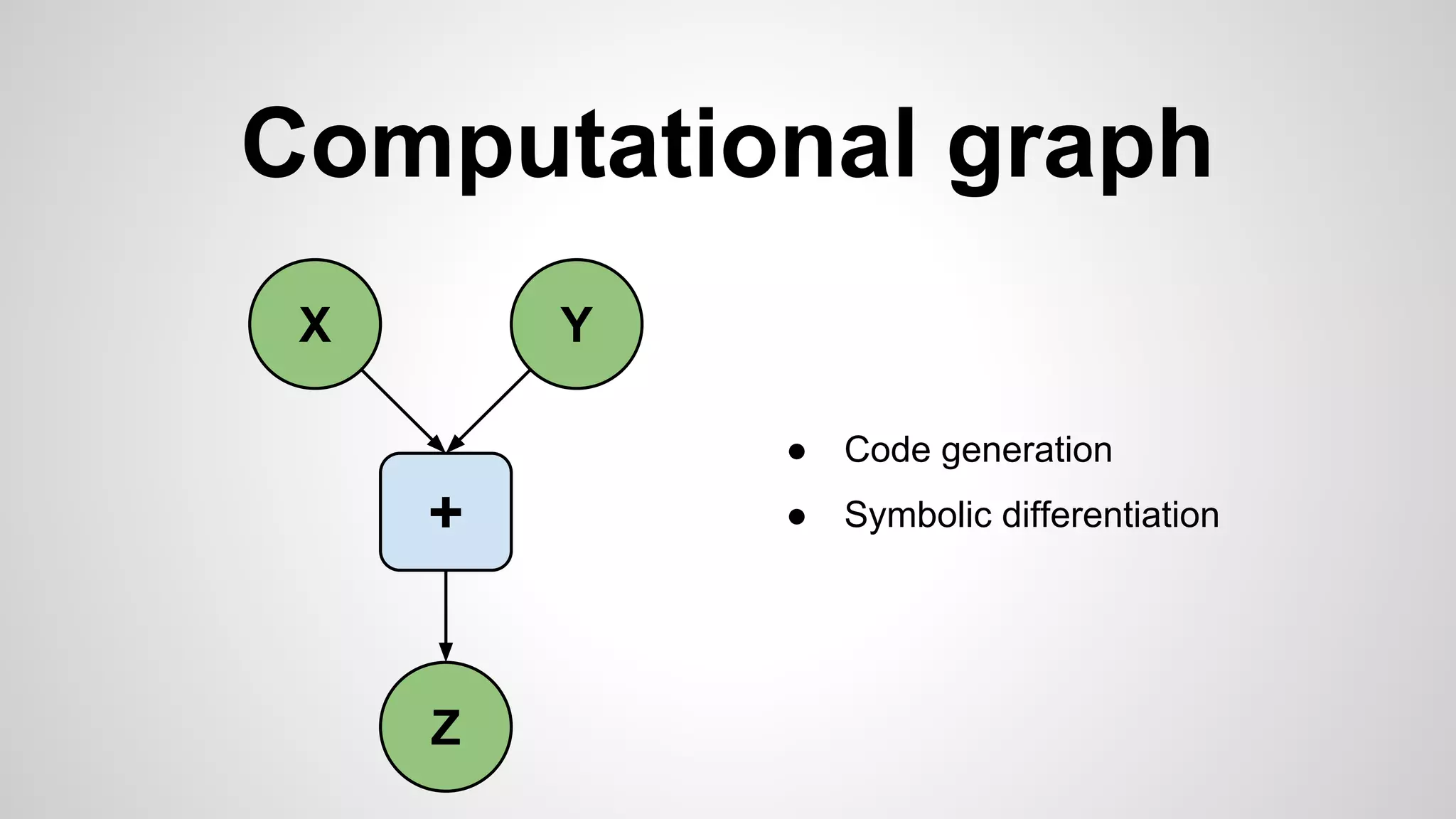

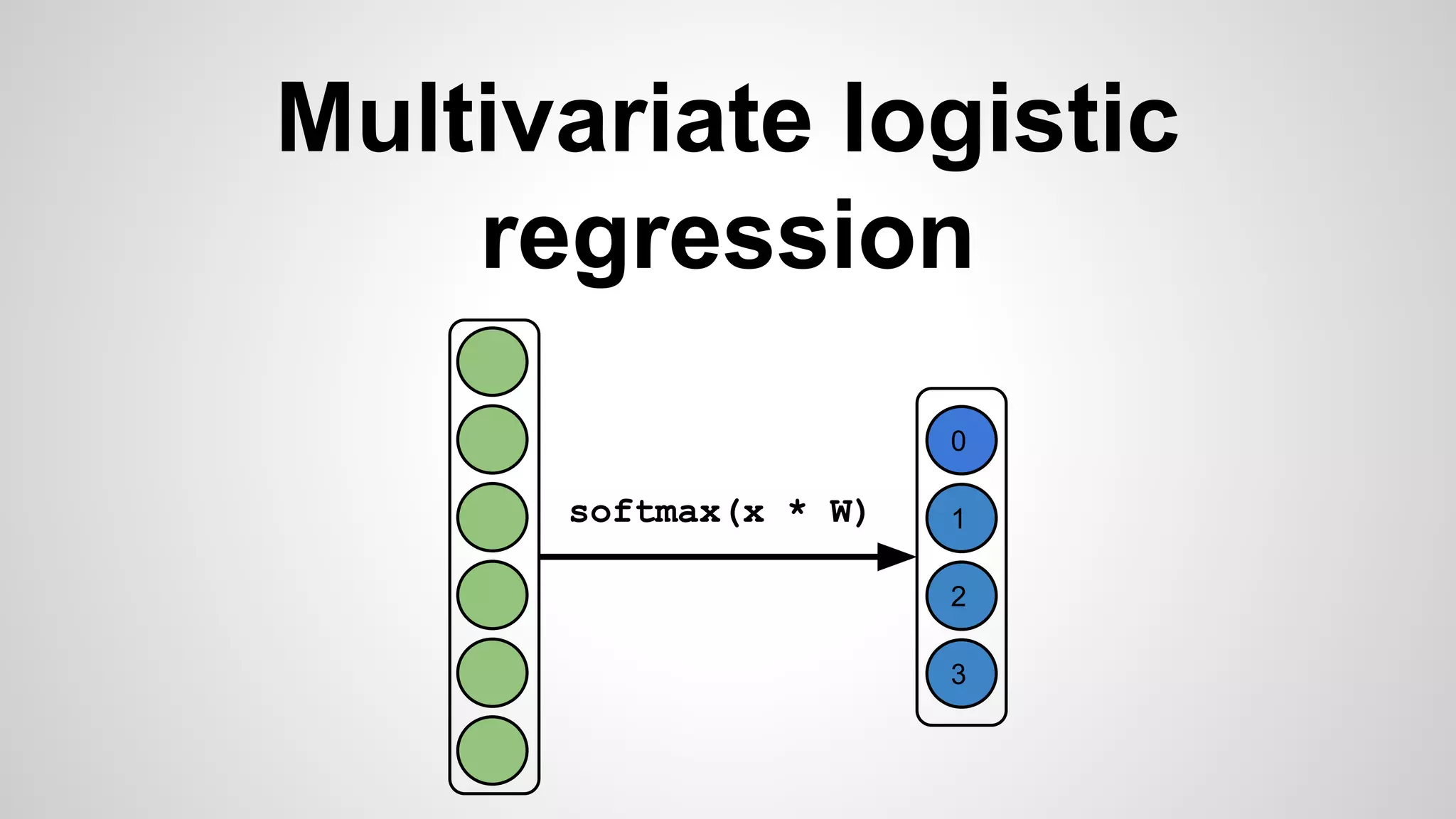

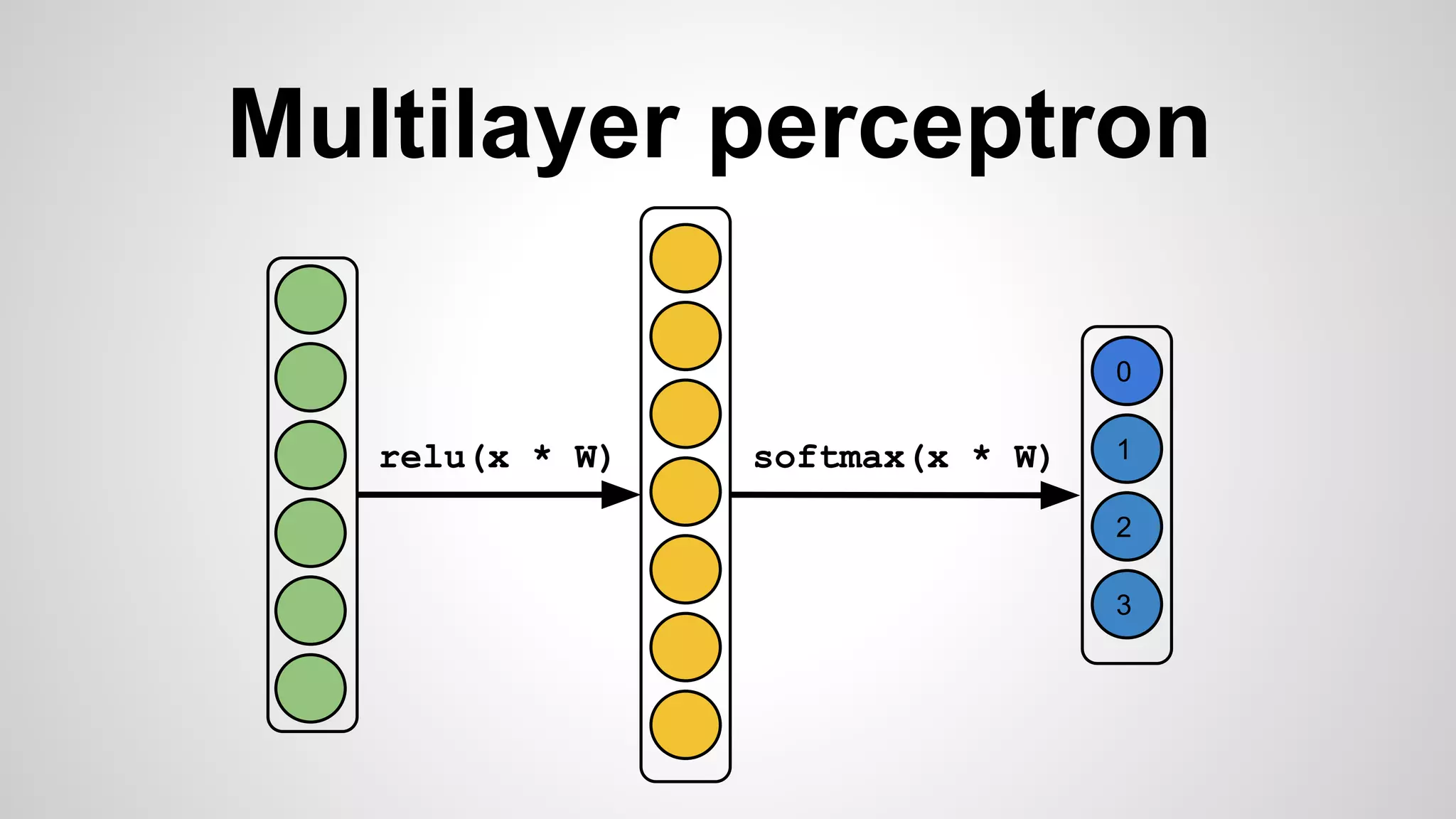

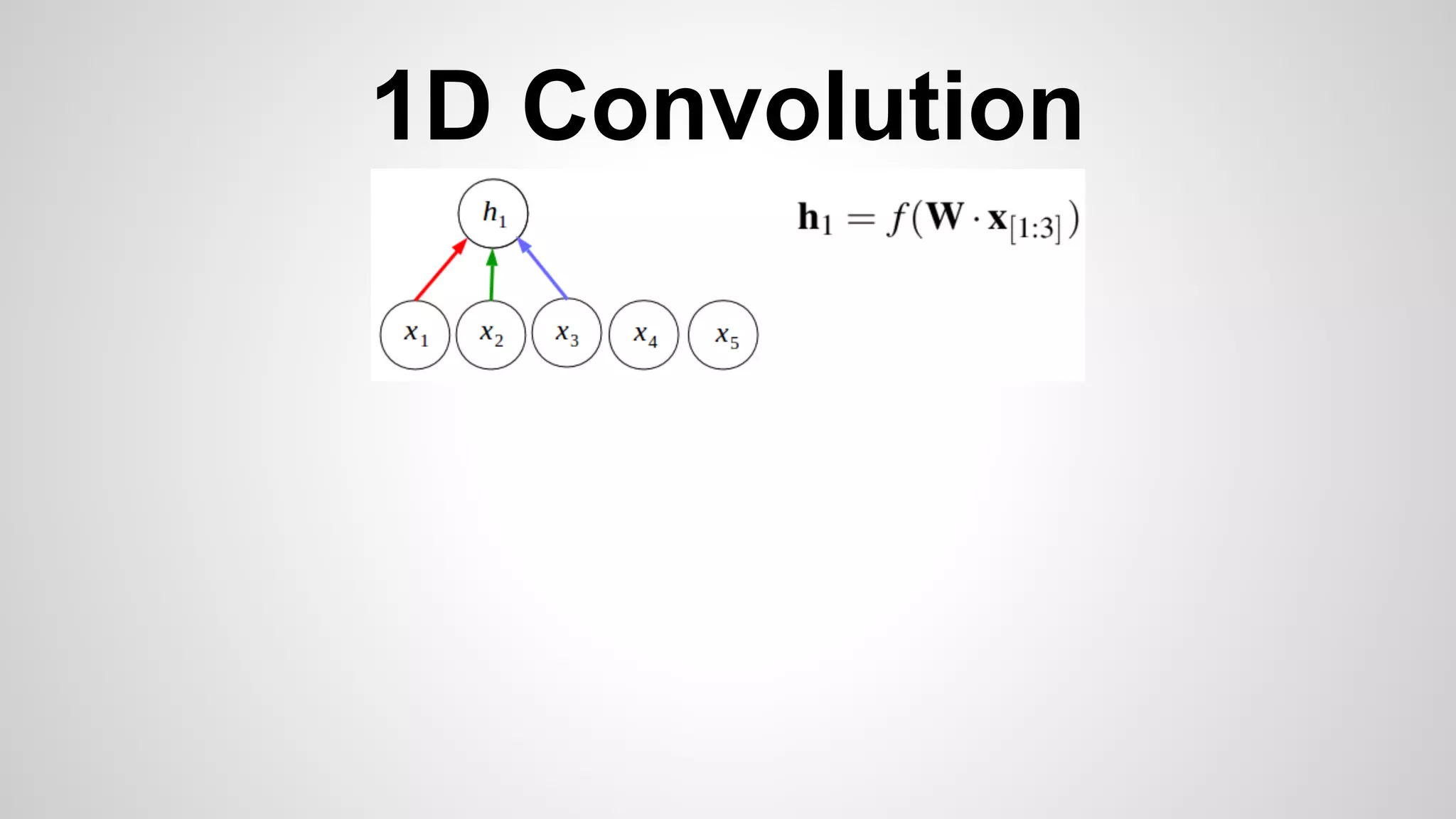

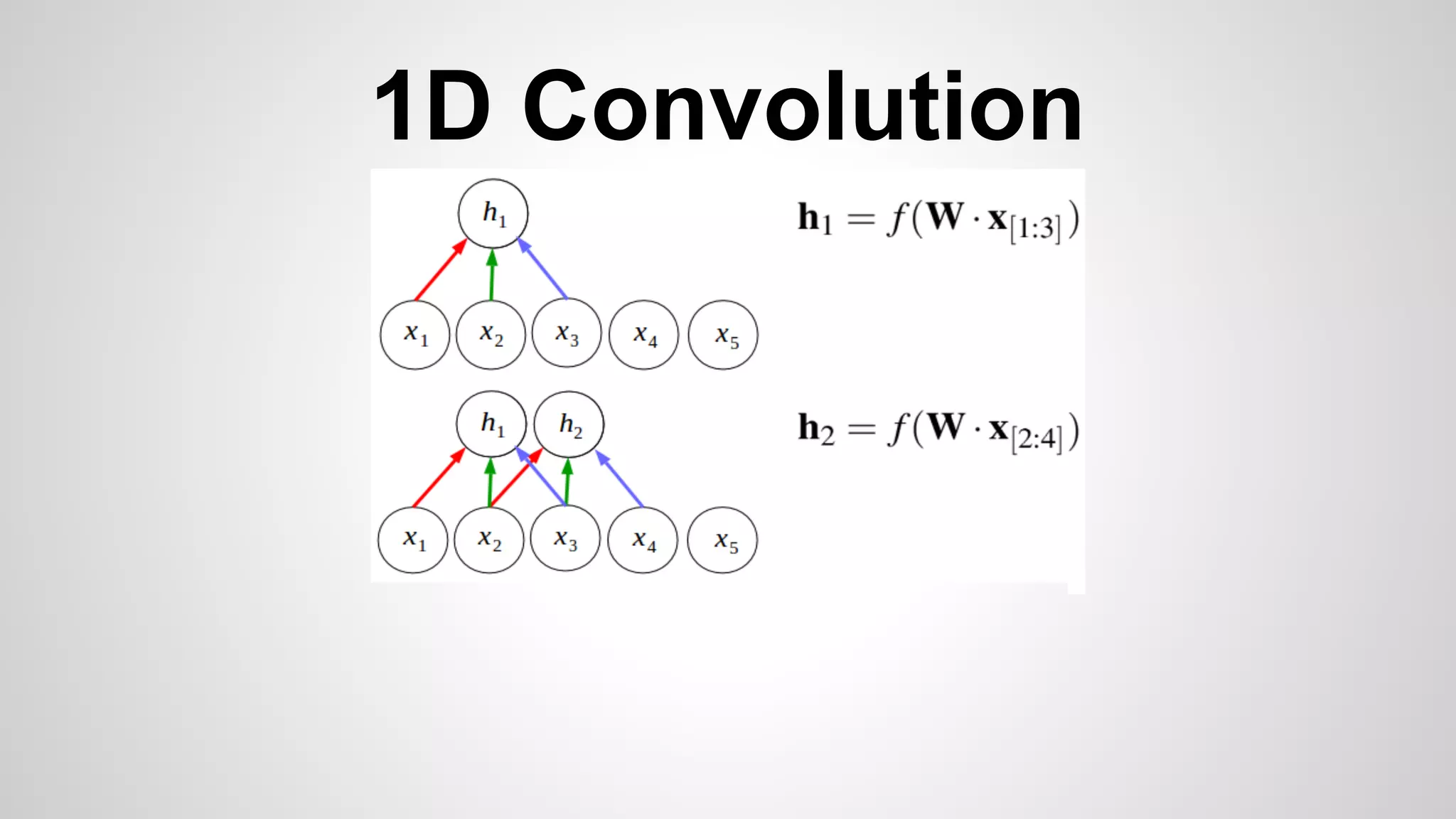

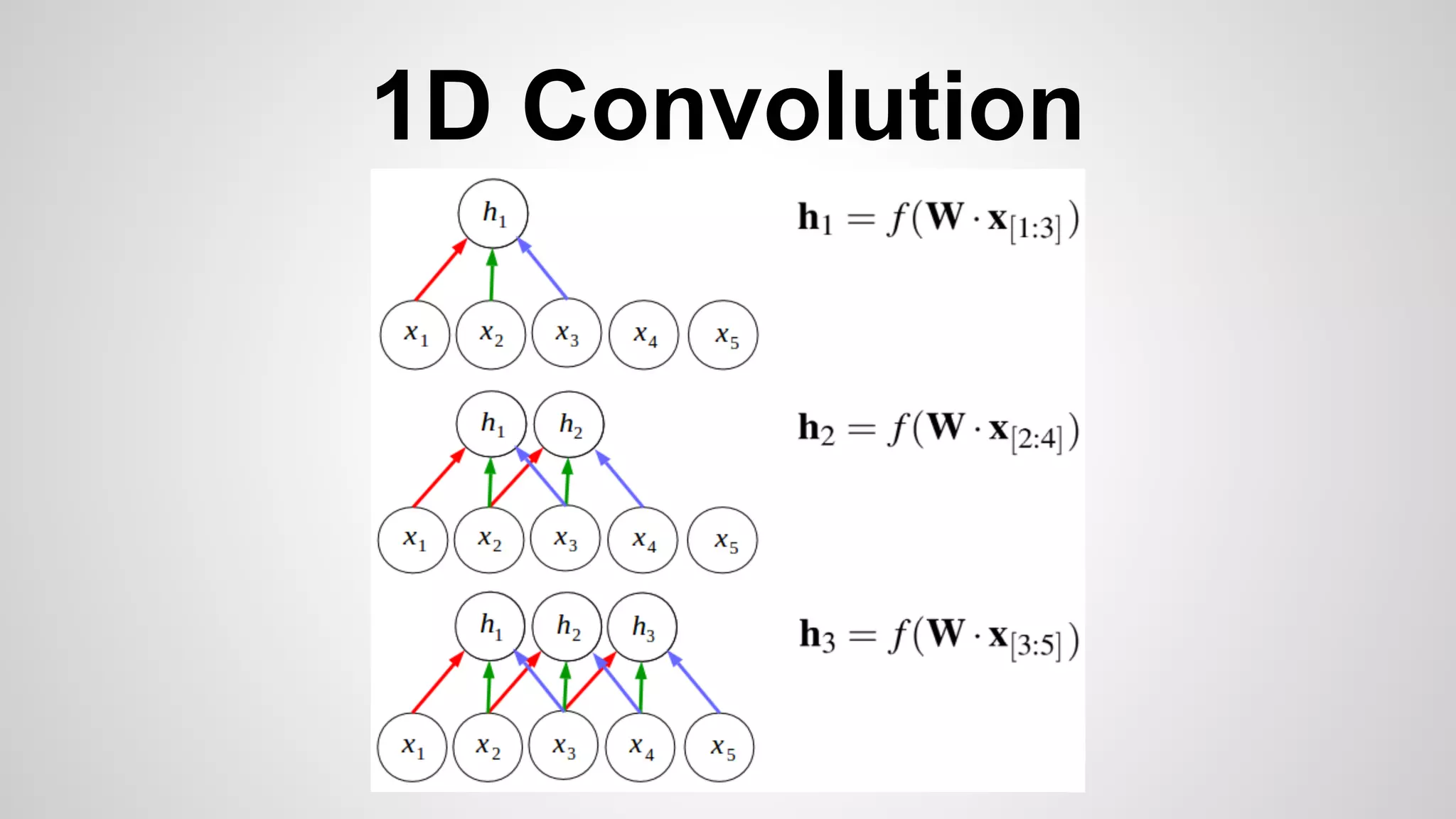

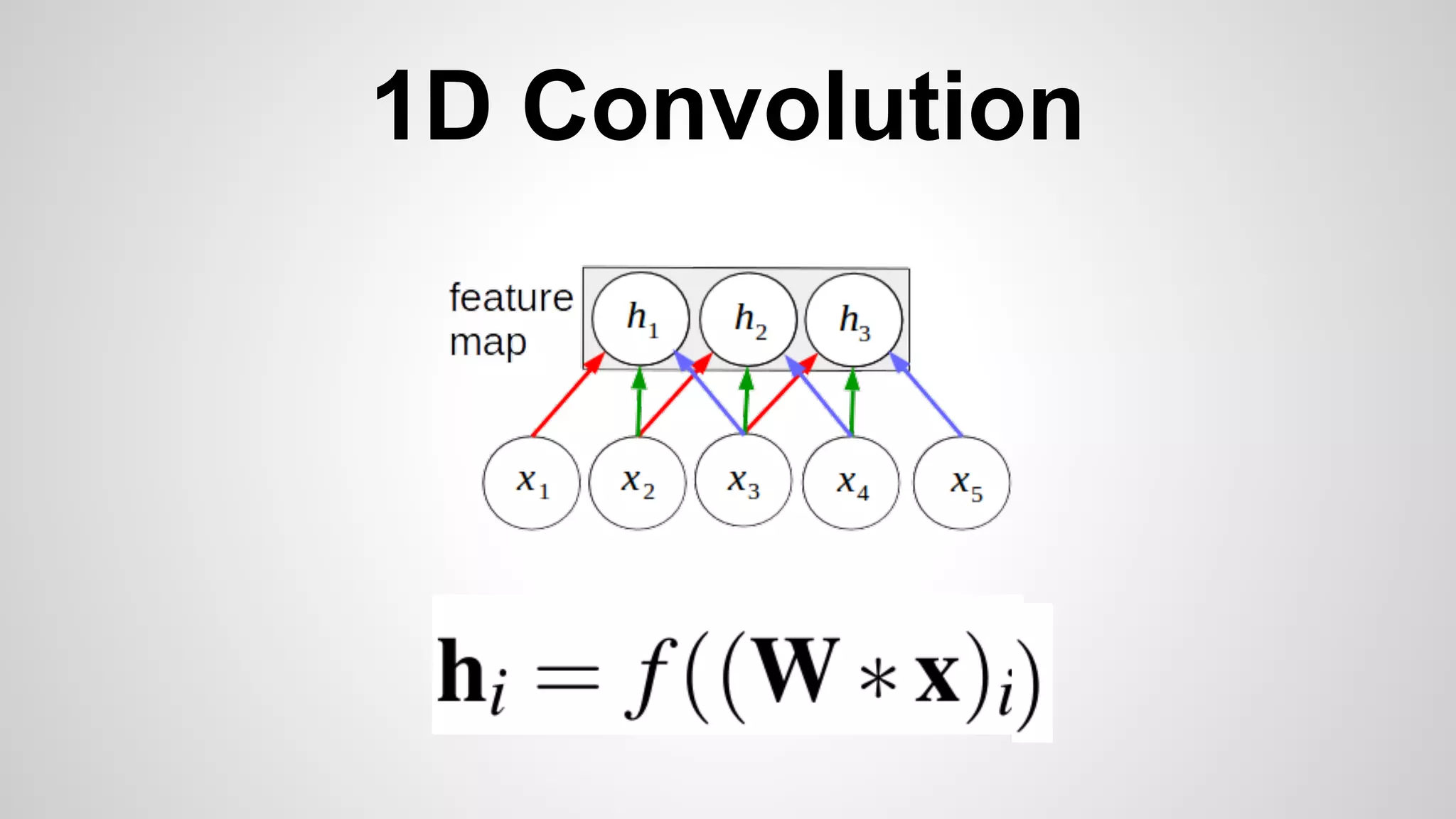

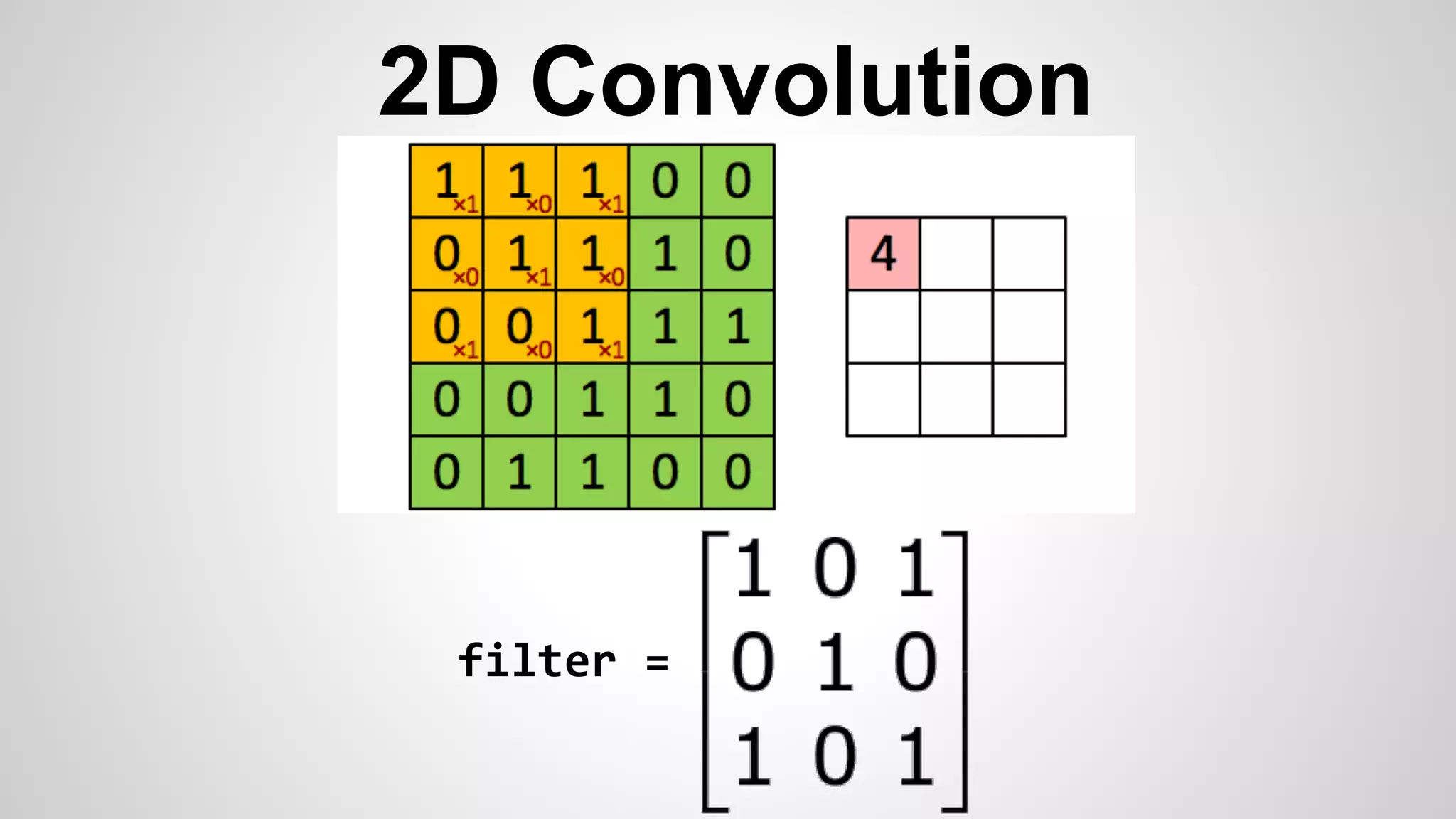

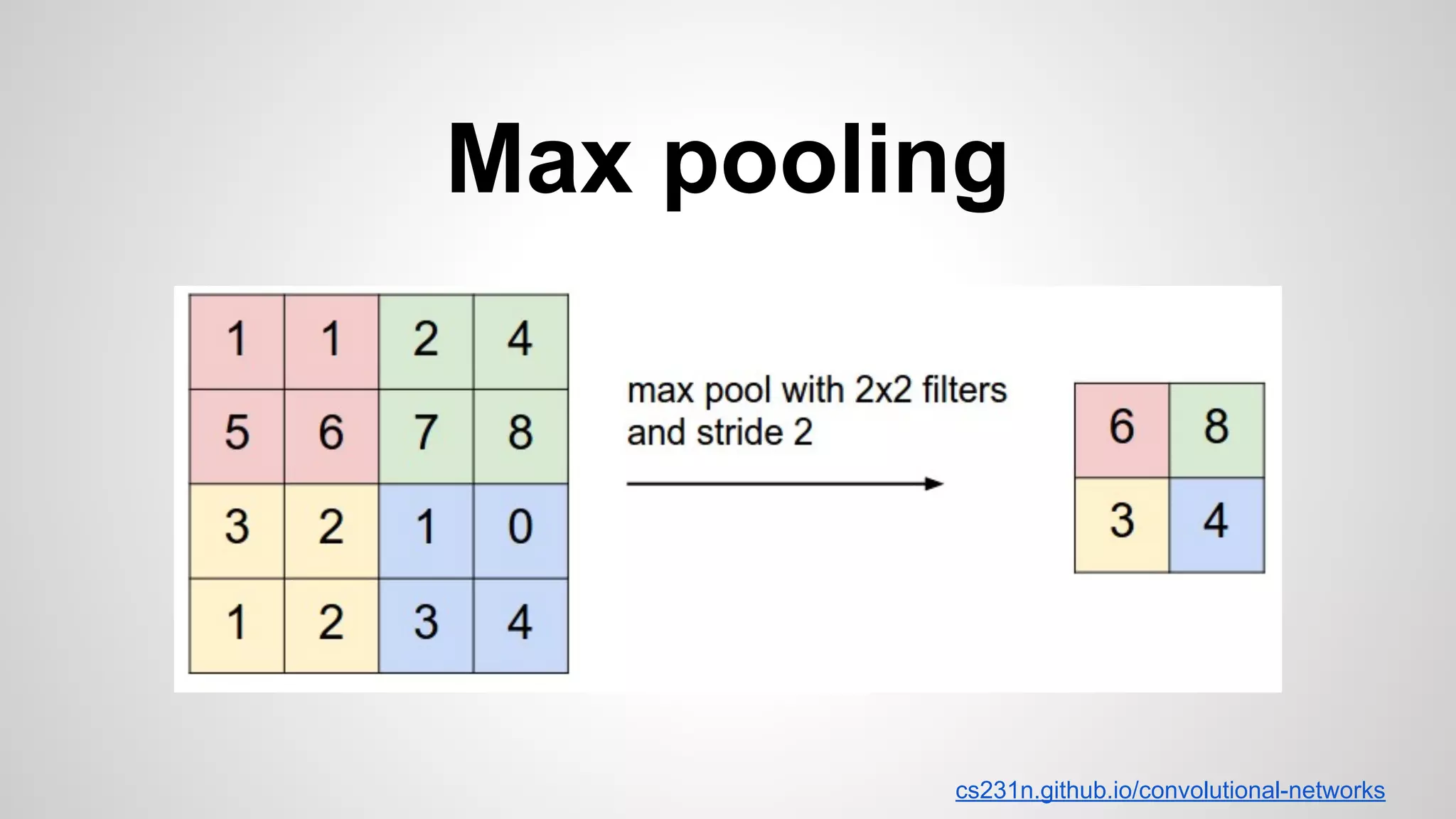

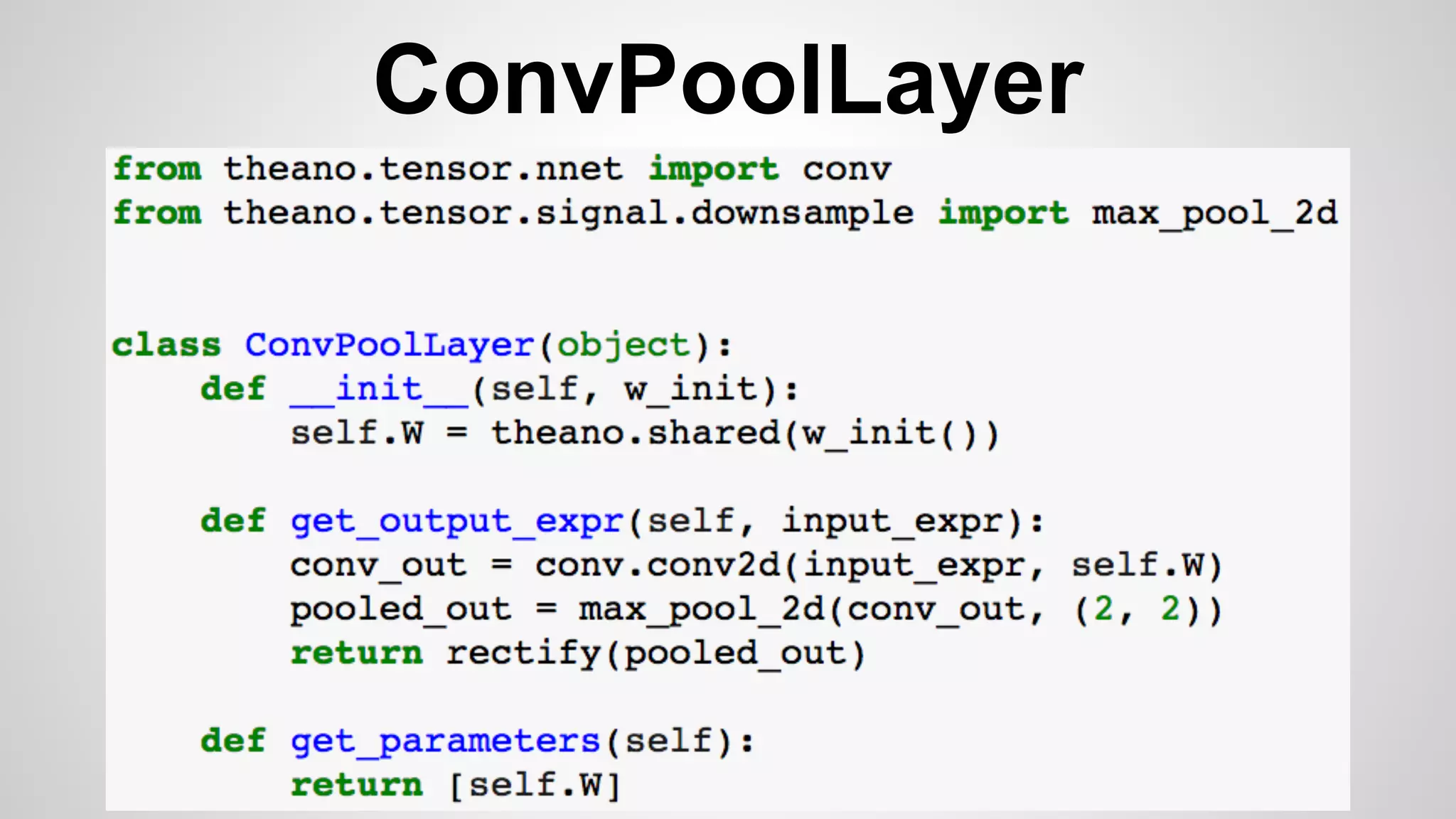

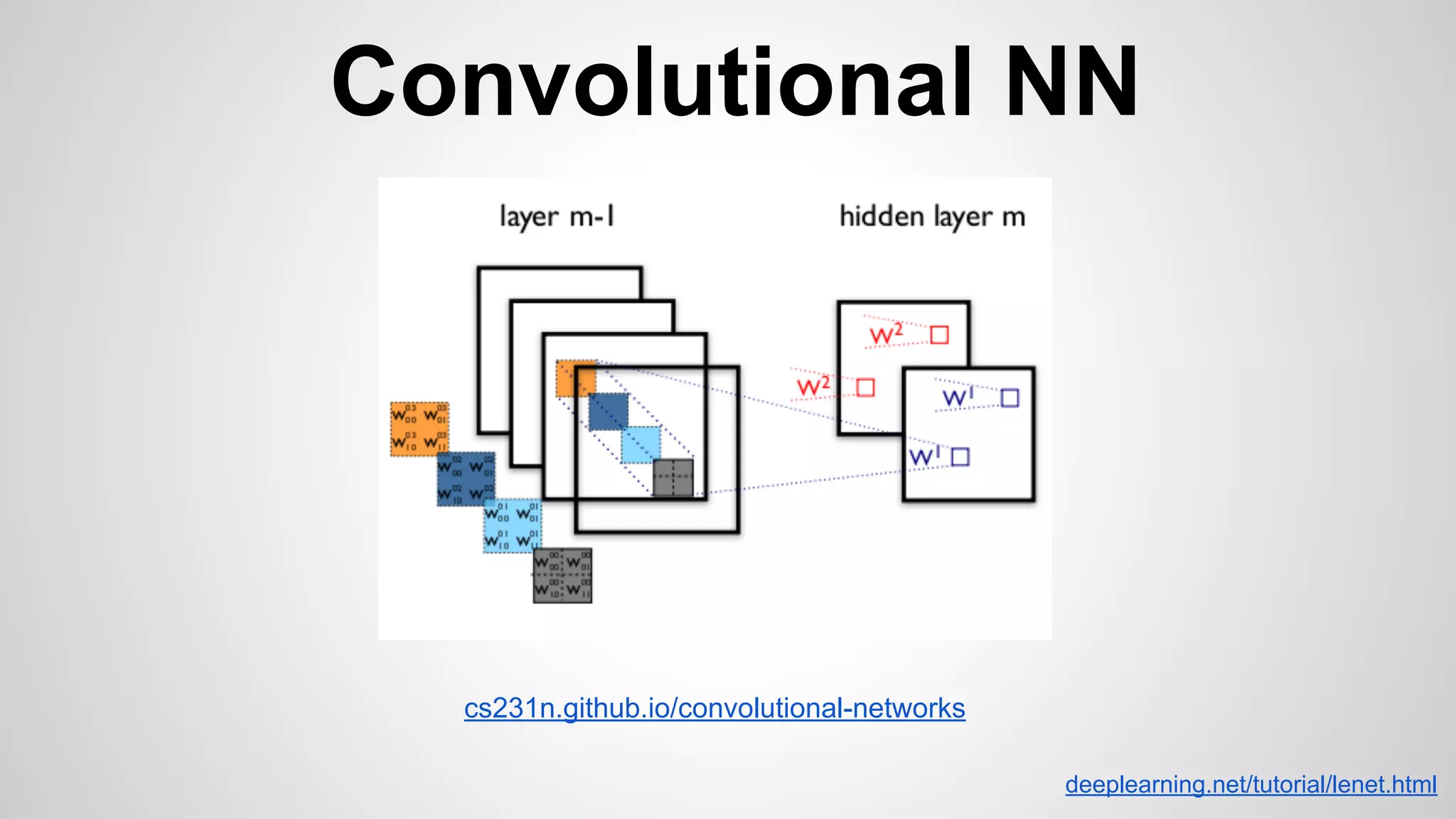

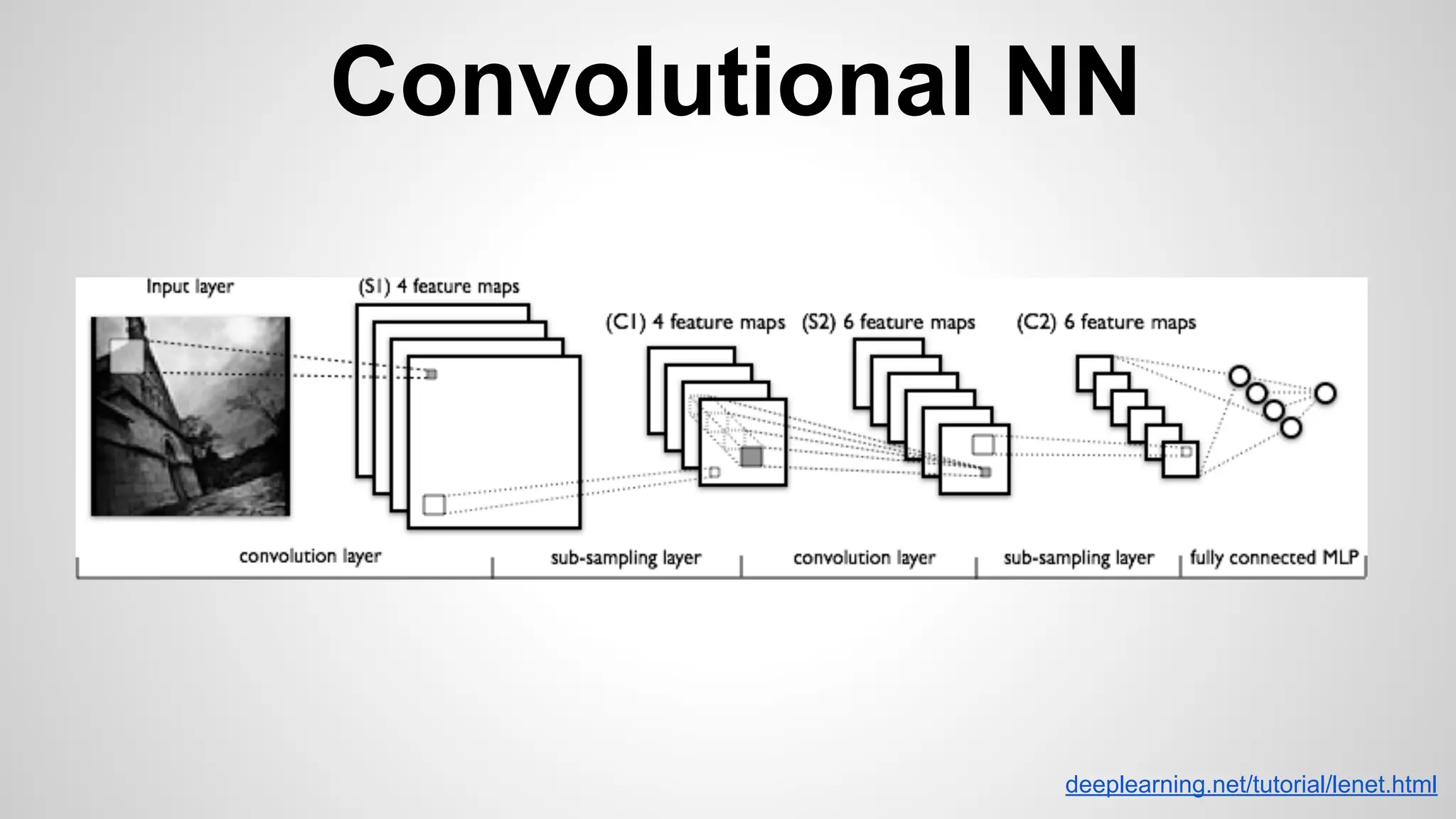

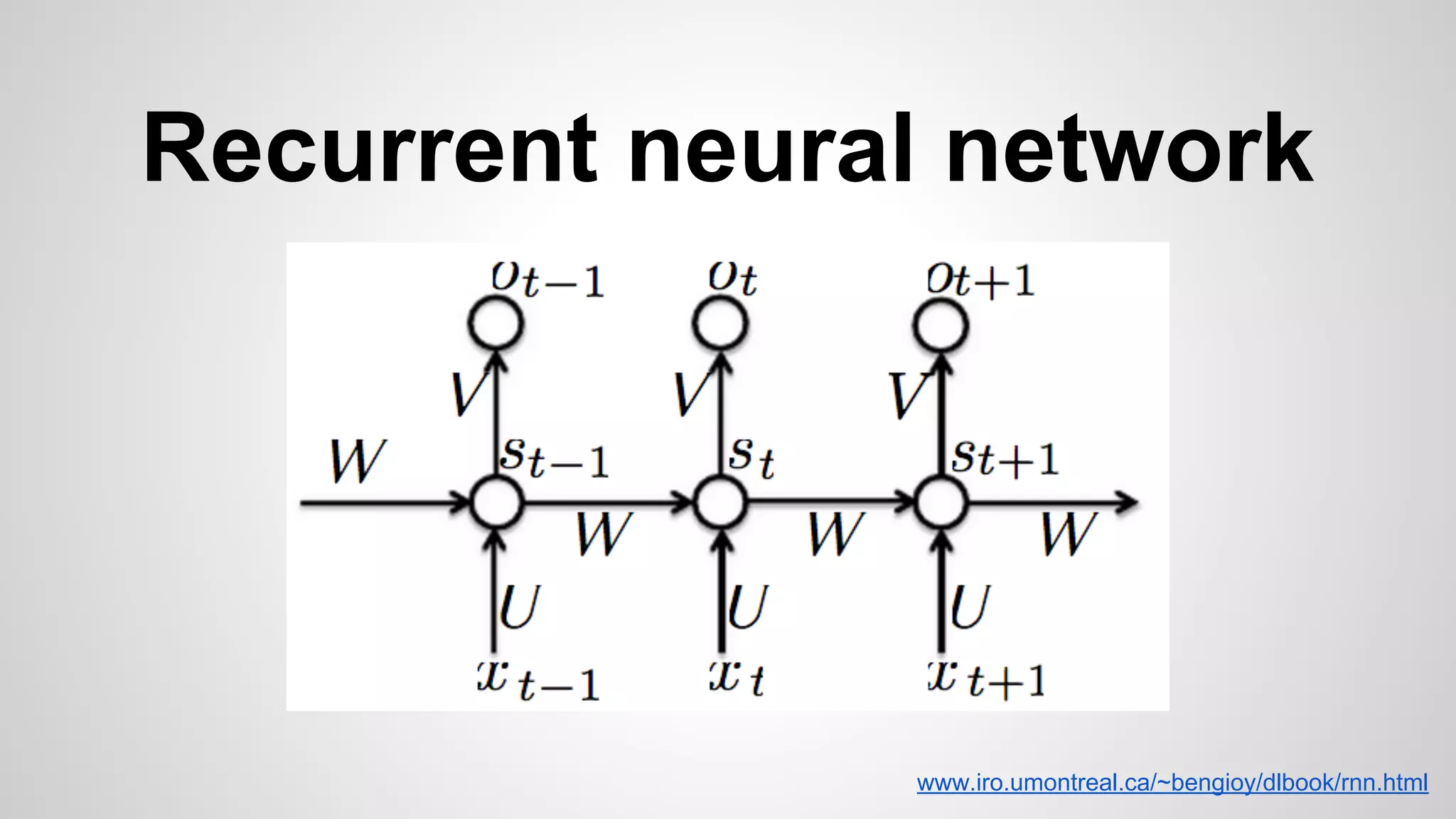

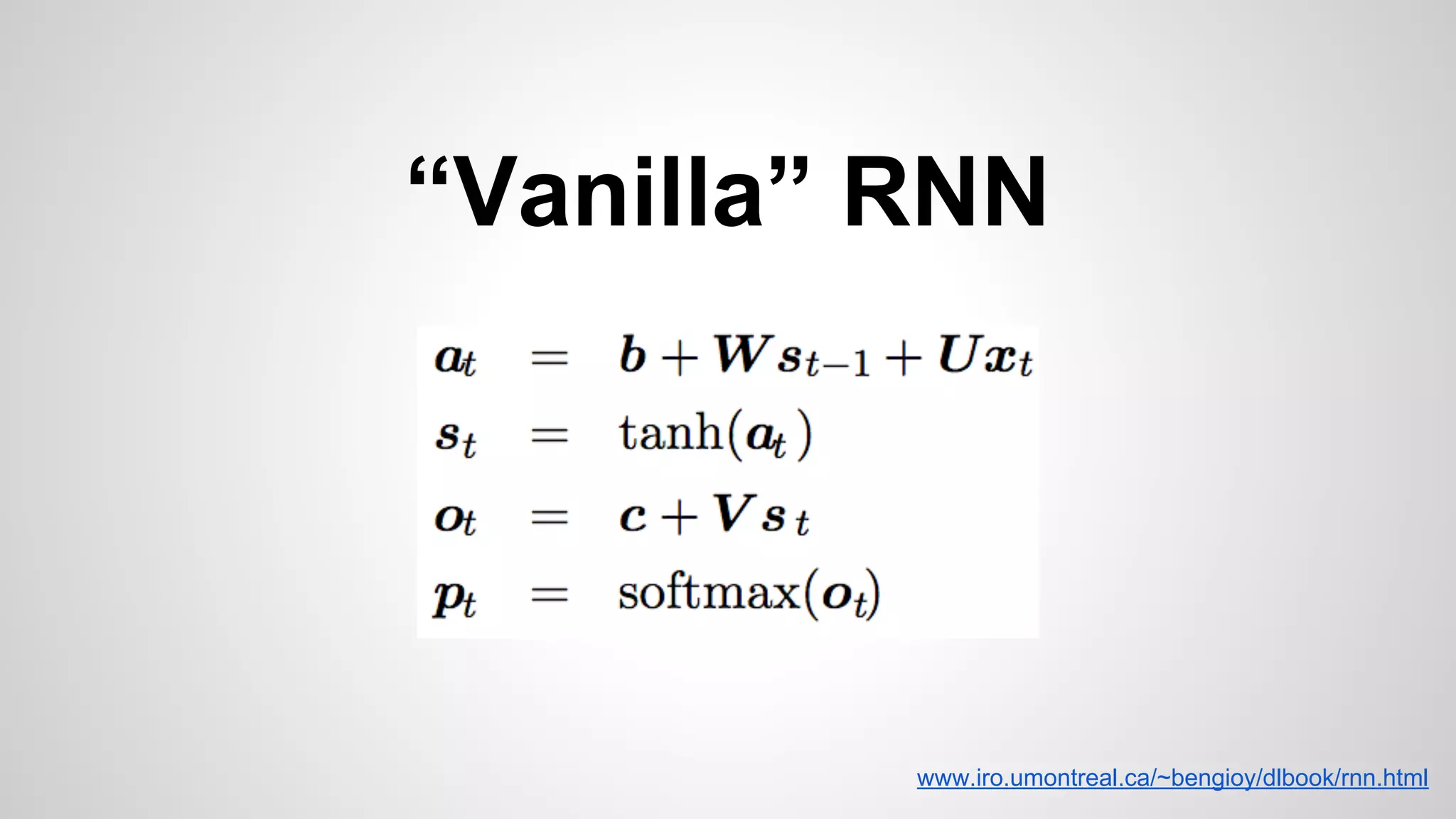

This document is an overview of part 2 of a Theano tutorial. It begins with brief recaps of symbolic variables, functions, and computational graphs. It then summarizes several machine learning models and building blocks: multivariate logistic regression, multilayer perceptrons, 1D and 2D convolution, max pooling, and convolutional neural networks. It also covers recurrent neural networks and Theano's scan function, which expresses loops symbolically. References are provided for further reading on convolutional networks and RNNs.
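As a rough illustration of the 1D convolution and max pooling steps named in the summary, the following is a minimal sketch in plain Python. It does not use Theano's API. The function names `conv1d_valid` and `max_pool1d`, the example signal, and the kernel are all assumptions made for illustration and do not come from the slides.

```python
def conv1d_valid(signal, kernel):
    """'Valid' 1D convolution: flip the kernel, slide it over the signal,
    and keep only positions where the kernel fully overlaps the signal."""
    flipped = kernel[::-1]
    n_out = len(signal) - len(kernel) + 1
    return [
        sum(signal[i + j] * flipped[j] for j in range(len(kernel)))
        for i in range(n_out)
    ]


def max_pool1d(values, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [
        max(values[i:i + size])
        for i in range(0, len(values) - size + 1, size)
    ]


# Hypothetical input: squares of 0..5, filtered with a simple difference kernel.
signal = [0, 1, 4, 9, 16, 25]
kernel = [1, 0, -1]

features = conv1d_valid(signal, kernel)  # [4, 8, 12, 16]
pooled = max_pool1d(features, 2)         # [8, 16]
print(features, pooled)
```

A convolutional layer in a network applies many such kernels with learned weights and typically follows them with a nonlinearity before pooling. This sketch shows only the arithmetic of a single filter and a single pooling step.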