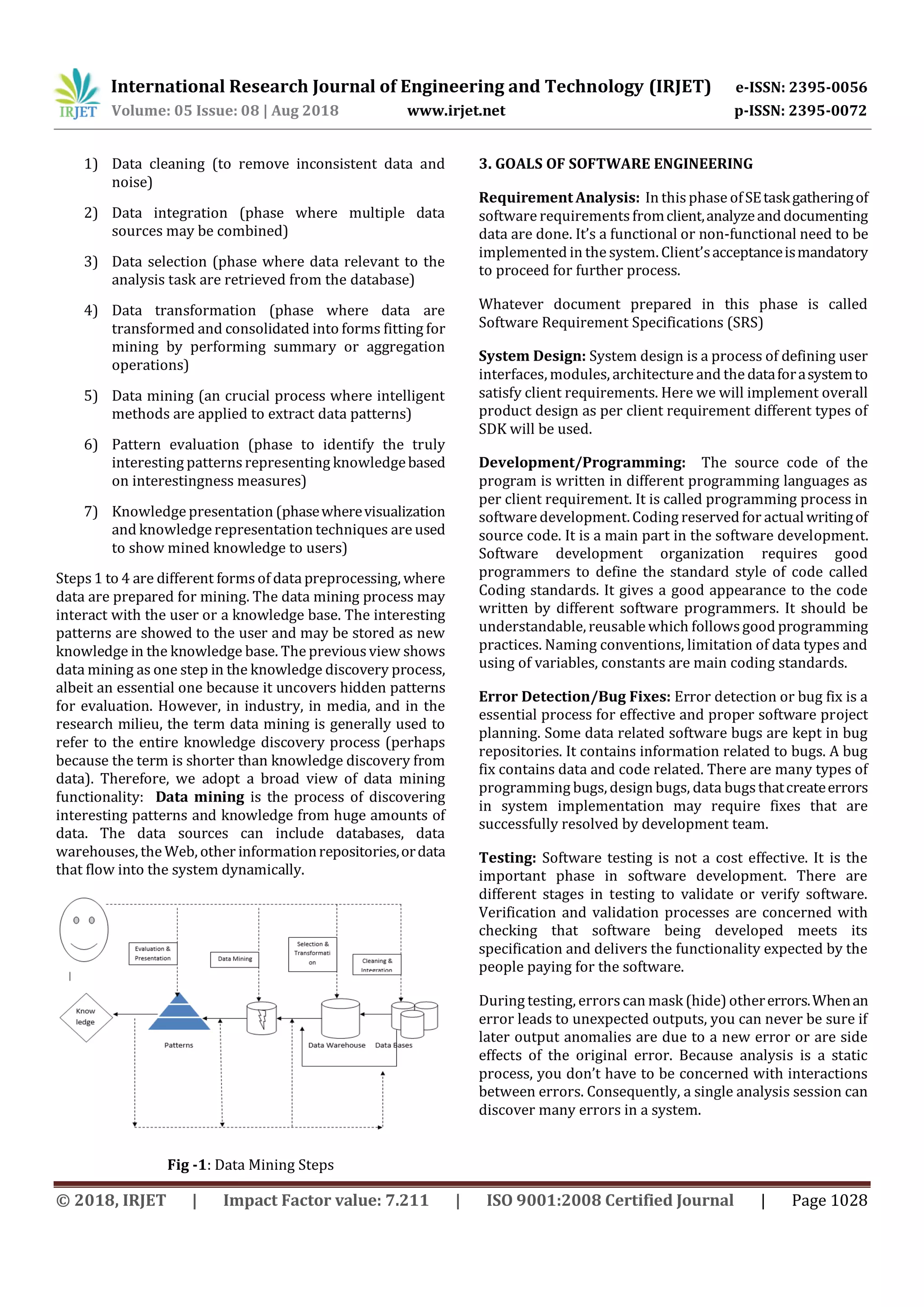

This document discusses data mining in software engineering. It begins by defining data mining as the process of discovering useful patterns and relationships in large databases to extract knowledge. The document then discusses the history and growth of data mining. It notes that vast amounts of data are now collected daily from various sources.

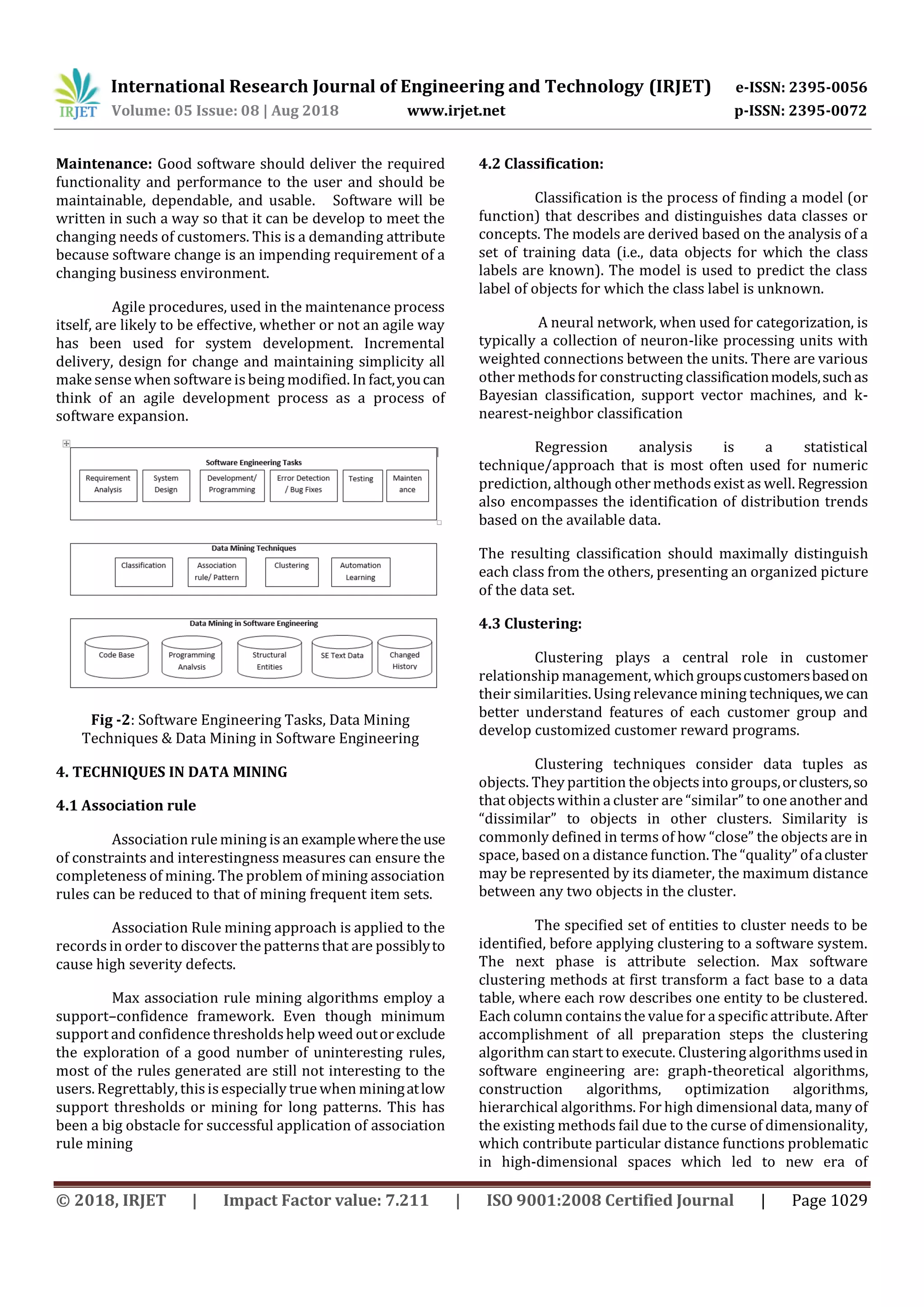

The document outlines the key goals of software engineering like requirement analysis, system design, development, testing, and maintenance. It discusses how data mining techniques like association rule mining, classification, clustering can be applied to software engineering tasks like error detection. Finally, it summarizes that data mining is essential in today's digital world to discover knowledge from huge amounts of data, and discusses how various data mining techniques can be useful for software engineering.

![International Research Journal of Engineering and Technology (IRJET) e-ISSN: 2395-0056

Volume: 05 Issue: 08 | Aug 2018 www.irjet.net p-ISSN: 2395-0072

© 2018, IRJET | Impact Factor value: 7.211 | ISO 9001:2008 Certified Journal | Page 1030

clustering algorithms for high-dimensional data that focus

on subspace clustering and correlation clustering that also

looks for arbitrary rotated subspace clusters that can be

modeled by giving a correlation of their attributes.

Fig -3: Characterization of Data Mining

5. CONCLUSIONS

In this paper, I have tried to provide an analysis of Data

Mining and its origin. Why data mining is essentialinthisera

of computer world. An analyzed information aboutSoftware

Engineering and it various phases. How Data Mining in

Software Engineering is classified and Data Mining

Techniques used in processfor fruitfulknowledgediscovery.

REFERENCES

[1] Tao Xie,Jian Pei,Ahmed E. Hassan, "Mining Software

Engineering Data"

[2] Lovedeep, Varinder Kaur Atri, "Applications of Data

Mining Techniques in Software Engineering", IJEECS

[3] Jiawei Han,Micheline Kamber & Jian Pei, "Data Mining

Concepts and Techniques", Third Edition

[4] Ian Sommerville, "SOFTWARE ENGINEERING" Ninth

Edition](https://image.slidesharecdn.com/irjet-v5i8177-180918070457/75/IRJET-A-Study-on-Data-Mining-in-Software-4-2048.jpg)