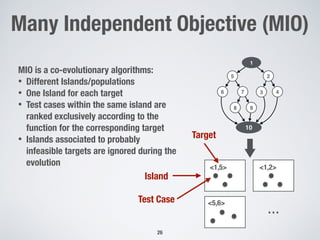

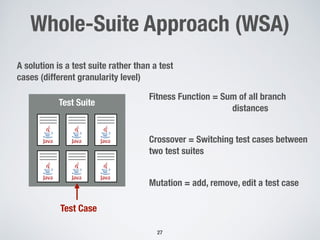

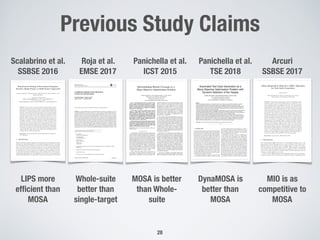

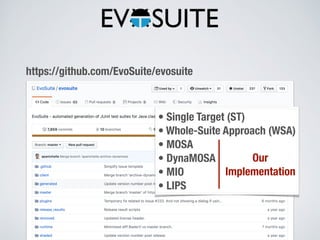

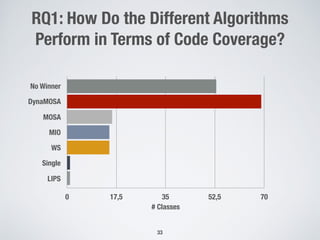

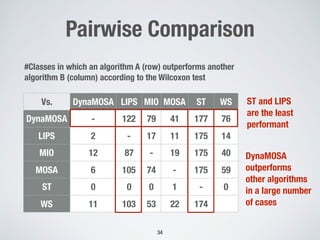

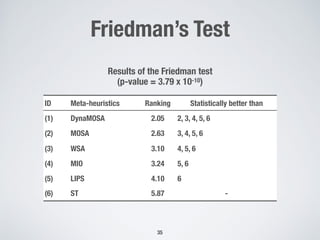

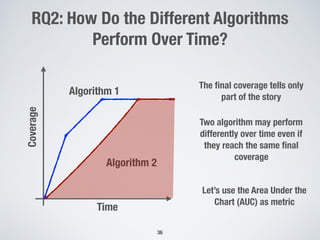

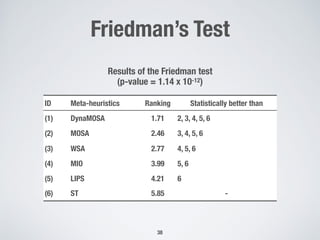

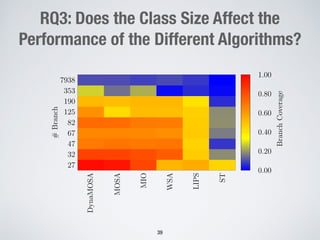

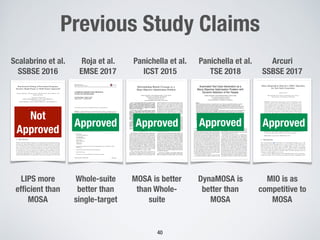

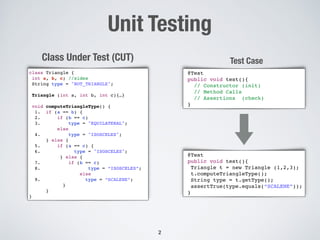

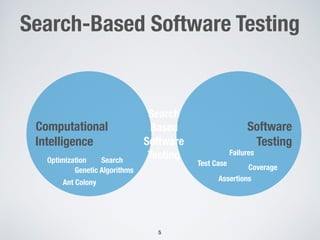

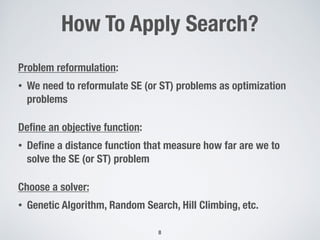

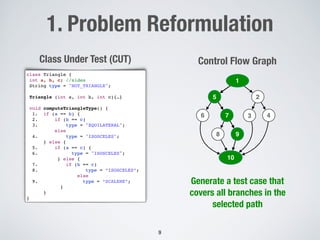

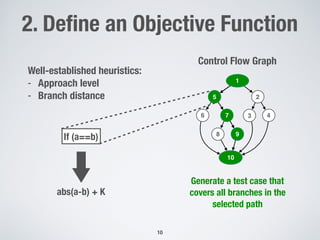

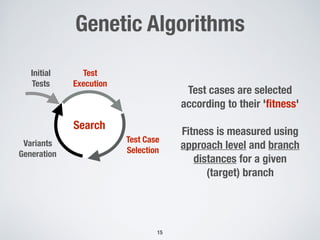

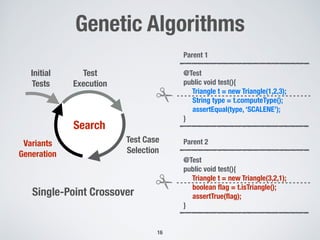

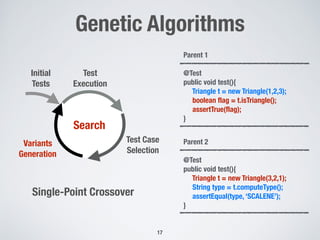

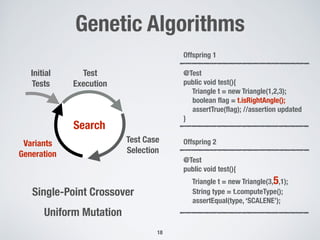

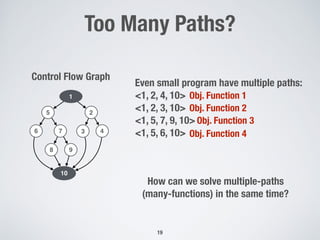

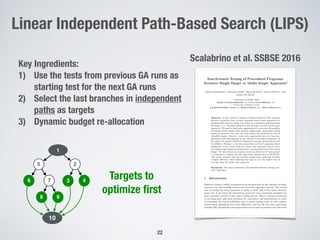

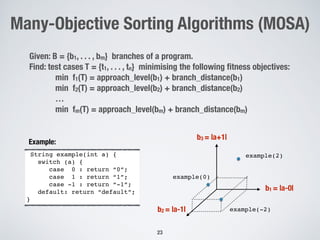

The document discusses automated test generation techniques for unit testing, focusing on search-based software testing and genetic algorithms. It highlights key concepts such as problem reformulation, test case selection, and various algorithmic approaches including many-objective sorting algorithms and whole-suite approaches. The document also presents empirical results comparing the performance of different algorithms used for code coverage in testing.

![Slide 7: Artificial Intelligence?](https://image.slidesharecdn.com/ipa2019-191105150110/85/IPA-Fall-Days-2019-7-320.jpg)

Slide 7: Artificial Intelligence? [Domingos2015 “The Master Algorithm”]

| Tribe | Origin | Master Algorithm |
|---|---|---|
| Symbolists | Logic, philosophy | Inverse deduction |
| Connectionists | Neuroscience | Back-Propagation |
| Evolutionary | Evolutionary biology | Evolutionary Algorithms |
| Bayesian | Statistics | Probabilistic inference |
| Analogizers | Psychology | Kernel machines |

Other labels on the slide: Neural Network, Regression, Support Vector, Formal Methods.

![Slide 21: Single Target Approach](https://image.slidesharecdn.com/ipa2019-191105150110/85/IPA-Fall-Days-2019-21-320.jpg)

Slide 21: Single Target Approach

Figure: control flow graph with nodes 1 to 10.

    TestSuite = {}
    For each uncovered target {
        [Fit, Test] = GeneticAlgorithm(target)
        If (Fit == 0)
            TestSuite = TestSuite + Test
    }
    return(TestSuite)

Limitations:

1. In which order should we select the targets?
2. Some targets are more difficult than others (how to split the time?)
3. Infeasible paths?
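The single-target loop from slide 21 can be sketched in Python as follows. This is only an illustrative sketch: the `TARGETS` dictionary, the branch-distance lambdas, and the toy `genetic_algorithm` (truncation selection plus integer mutation) are assumptions made for the example and are not taken from the slides.

```python
import random

# Hypothetical targets: each maps an integer input to a branch distance,
# where 0 means the target is covered. These are illustrative only.
TARGETS = {
    "x == 42": lambda x: abs(x - 42),
    "x > 100": lambda x: max(0, 101 - x),
    "x < -50": lambda x: max(0, x + 51),
}


def genetic_algorithm(fitness, rng, generations=1000, pop_size=20):
    """Toy stand-in for a GA: keep the better half, mutate it to refill."""
    population = [rng.randint(-1000, 1000) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness)
        if fitness(population[0]) == 0:
            break  # target covered, stop early
        parents = population[: pop_size // 2]
        children = [p + rng.randint(-10, 10) for p in parents]
        population = parents + children
    best = min(population, key=fitness)
    return fitness(best), best


def single_target_suite(targets, seed=0):
    """Run one search per uncovered target and collect the covering tests."""
    rng = random.Random(seed)
    suite = []
    for name, fitness in targets.items():
        # Skip targets already covered by a test found for an earlier target.
        if any(fitness(test) == 0 for test in suite):
            continue
        fit, test = genetic_algorithm(fitness, rng)
        if fit == 0:
            suite.append(test)
    return suite


suite = single_target_suite(TARGETS)
print(suite)
```

In this sketch every target receives the same fixed number of generations and targets are tried in dictionary order, which makes the limitations listed on the slide visible: an infeasible target would burn its full budget, and an easy target gets as much time as a hard one.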

![Slide 24: Many-Objective Sorting Algorithms (MOSA)](https://image.slidesharecdn.com/ipa2019-191105150110/85/IPA-Fall-Days-2019-24-320.jpg)

Slide 24: Many-Objective Sorting Algorithms (MOSA) [A. Panichella, F. Kifetew, P. Tonella, ICST 2015]

Figure: Pareto front plotted over Objective 1 and Objective 2, both minimized, with the annotation "These points are better than others".

Not all non-dominated solutions are optimal for the purpose of testing.
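To make the point on slide 24 concrete, the sketch below computes a Pareto front for two minimized objectives and then picks, for each objective, the single point closest to covering it. The sample `points` and the `preferred` helper are hypothetical; the per-objective selection is only loosely modeled on the preference idea in the cited MOSA paper and should not be read as that paper's exact procedure.

```python
def dominates(a, b):
    """True if a is no worse than b everywhere and strictly better once (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points):
    """Return the points that no other point dominates (the Pareto front)."""
    return [p for p in points if not any(dominates(q, p) for q in points if q != p)]


def preferred(points):
    """For each objective, keep the point with the lowest value on that objective."""
    best = []
    for i in range(len(points[0])):
        candidate = min(points, key=lambda q: q[i])
        if candidate not in best:
            best.append(candidate)
    return best


# Hypothetical objective vectors, e.g. normalized distances to two branches.
points = [(0.0, 0.9), (0.2, 0.5), (0.5, 0.2), (0.9, 0.0), (0.6, 0.6)]

front = non_dominated(points)
best = preferred(points)
print(front)  # four non-dominated trade-offs
print(best)   # only the two points that each cover one objective
```

Here four points are non-dominated, but only the two extremes have an objective value of 0, meaning they actually cover a target. The intermediate trade-offs cover nothing, which is why plain Pareto ranking alone is a poor fit for test generation.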

![Slide 25: DynaMOSA: Dynamic MOSA](https://image.slidesharecdn.com/ipa2019-191105150110/85/IPA-Fall-Days-2019-25-320.jpg)

Slide 25: DynaMOSA: Dynamic MOSA [A. Panichella, F. Kifetew, P. Tonella, TSE 2018]

Figure: control flow graph with nodes 1 to 10, with targets grouped into a 1st, 2nd, and 3rd level.

Rationale:

- Not all branches (objectives) are independent from one another
- We cannot cover branch <3,10> without covering <2,3>

Idea:

- Organize targets in levels based on their structural dependencies
- Start the search with the first-level targets, then optimize the second level, and so on
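The level-by-level idea on slide 25 can be sketched as a small dependency walk. The `DEPENDENTS` map below is hypothetical: only the relation between <2,3> and <3,10> comes from the slide, and the other branches are invented for illustration. The sketch also assumes the dependency graph is acyclic.

```python
# Hypothetical map: branch -> branches that can only be reached once it is covered.
# Only (2, 3) -> (3, 10) is taken from the slide; the rest is illustrative.
DEPENDENTS = {
    (1, 2): [(2, 3), (2, 5)],
    (2, 3): [(3, 10), (3, 9)],
}


def levels(dependents, roots):
    """Group targets into levels by breadth-first traversal from the roots."""
    result, frontier, seen = [], list(roots), set(roots)
    while frontier:
        result.append(frontier)
        next_frontier = []
        for target in frontier:
            for child in dependents.get(target, []):
                if child not in seen:
                    seen.add(child)
                    next_frontier.append(child)
        frontier = next_frontier
    return result


def update_targets(current, covered, dependents):
    """Drop covered targets and replace them with the targets they unlock."""
    active, queue = [], list(current)
    while queue:
        target = queue.pop(0)
        if target in covered:
            # Descend past covered targets to their dependents.
            queue.extend(dependents.get(target, []))
        elif target not in active:
            active.append(target)
    return active


all_levels = levels(DEPENDENTS, roots=[(1, 2)])
step1 = update_targets([(1, 2)], {(1, 2)}, DEPENDENTS)
step2 = update_targets(step1, {(1, 2), (2, 3)}, DEPENDENTS)
print(all_levels)
print(step1, step2)
```

The search would start with only the root target as an objective. Once (1, 2) is covered the active set becomes the second-level branches, and (3, 10) only becomes an objective after (2, 3) has been covered, so the search never spends effort on a branch that cannot yet be reached.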