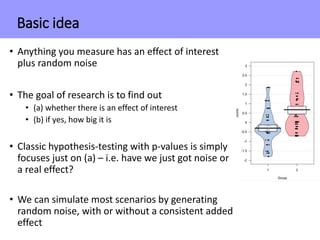

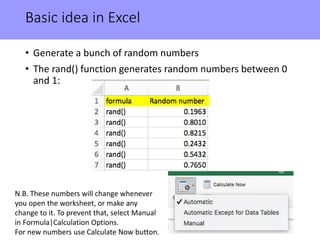

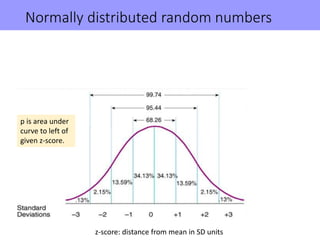

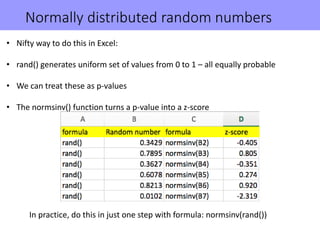

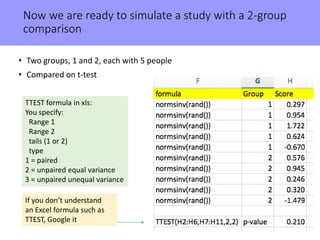

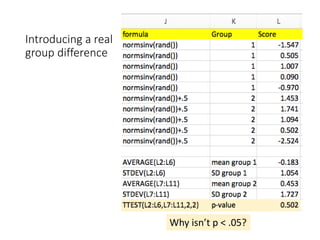

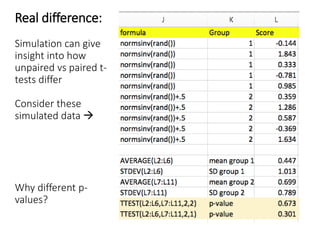

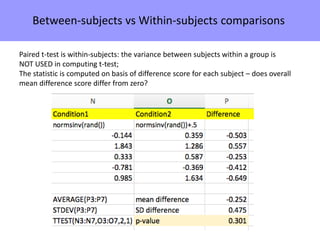

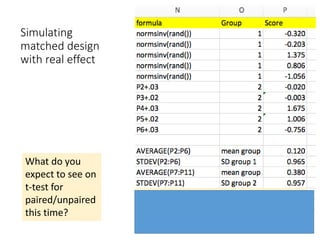

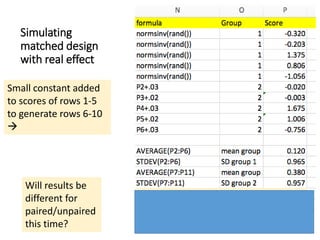

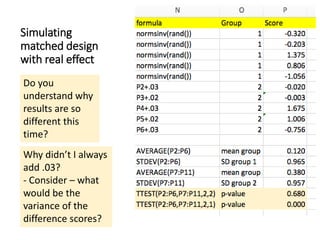

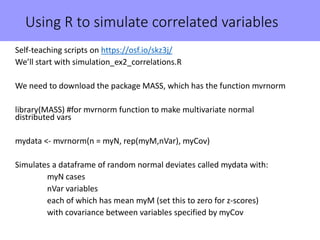

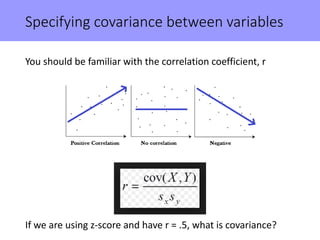

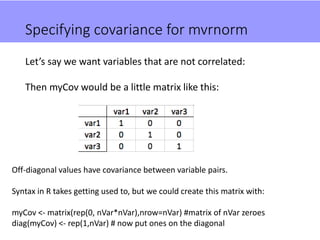

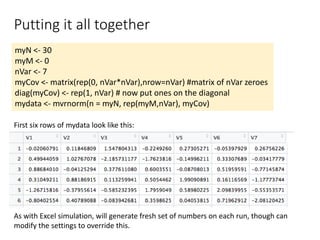

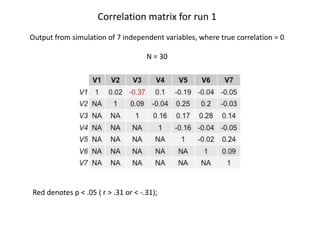

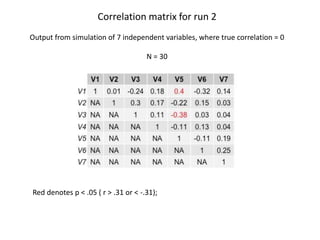

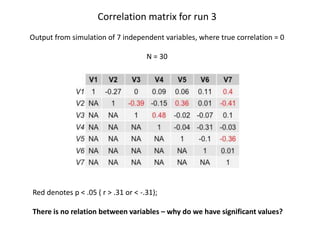

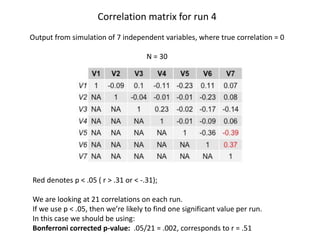

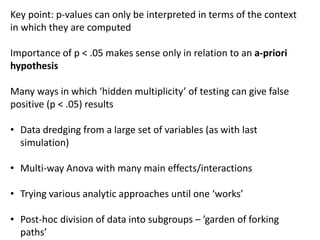

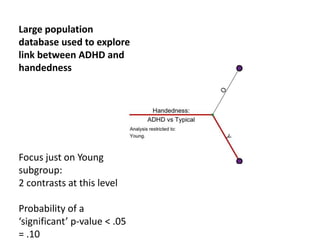

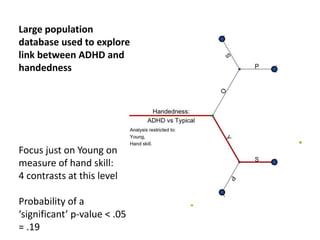

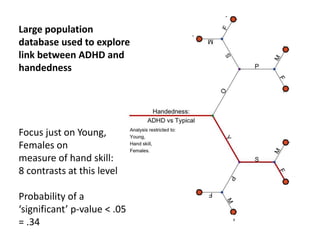

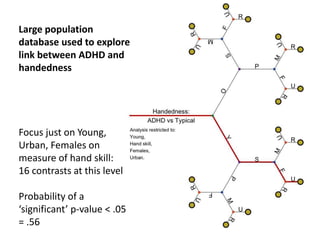

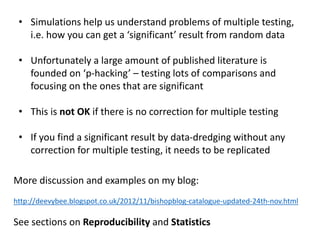

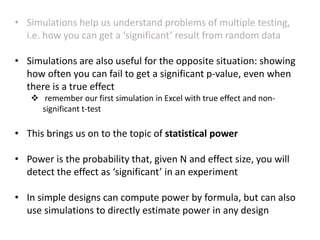

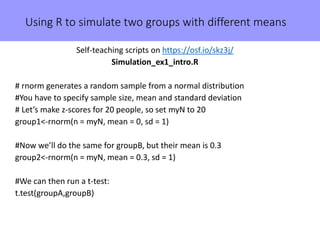

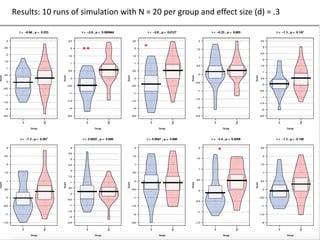

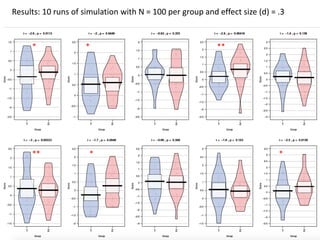

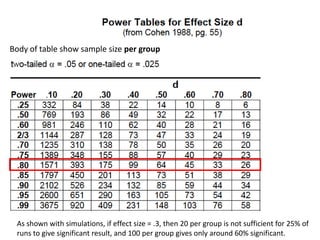

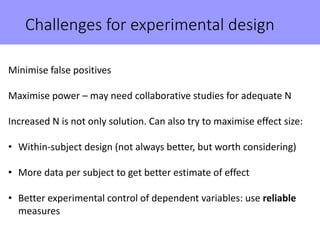

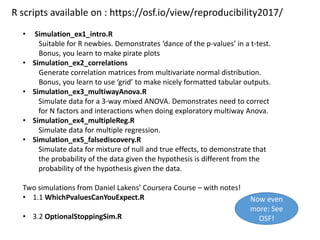

The document discusses the significance of simulating data for improving project design and analysis in research, particularly emphasizing its use in power analysis and understanding hypothesis testing. It explains methods for generating and analyzing simulated datasets using various tools, including Excel and R, to assess statistical validity and tackle issues like multiple testing and false positives. Additionally, it provides practical examples of using simulations to explore the effects of design choices on statistical outcomes.
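The summary above describes simulation-based power analysis and the multiple-testing problem. The following is a minimal sketch of both ideas. It is written in Python rather than the Excel or R used in the source, and it rests on several stated assumptions: a two-group design, normally distributed outcomes with a *known* standard deviation of 1, and a two-sided z-test (swapped in for the more usual t-test so that only the standard library is needed). All function names are hypothetical. Power is estimated as the share of simulated experiments in which the test rejects the null hypothesis. The family-wise false-positive rate is estimated as the share of simulated studies, each running several tests on pure noise, that produce at least one "significant" result.

```python
import math
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def z_test_p_value(control, treatment, sigma=1.0):
    """Two-sided p-value for a difference in means, ASSUMING a known common sd `sigma`."""
    se = sigma * math.sqrt(1 / len(control) + 1 / len(treatment))
    z = (fmean(treatment) - fmean(control)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def simulate_power(n_per_group, effect_size, alpha=0.05, n_sims=2000, seed=1):
    """Estimate power as the share of simulated experiments that reject H0 at level alpha."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    rejections = 0
    for _ in range(n_sims):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treatment = [rng.gauss(effect_size, 1.0) for _ in range(n_per_group)]
        if z_test_p_value(control, treatment) < alpha:
            rejections += 1
    return rejections / n_sims


def analytic_power(n_per_group, effect_size, alpha=0.05):
    """Closed-form power of the same two-sided z-test, used to check the simulation."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n_per_group / 2)
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


def simulate_familywise_error(n_tests, n_per_group=20, alpha=0.05, n_sims=2000, seed=2):
    """Share of simulated studies with at least one false positive when every H0 is true."""
    rng = random.Random(seed)
    studies_with_false_positive = 0
    for _ in range(n_sims):
        for _ in range(n_tests):
            # Both groups come from the same distribution, so any rejection is a false positive.
            control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
            treatment = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
            if z_test_p_value(control, treatment) < alpha:
                studies_with_false_positive += 1
                break  # one false positive is enough to count this study
    return studies_with_false_positive / n_sims


if __name__ == "__main__":
    # Explore a design choice: how power changes with sample size for a 0.5 sd effect.
    for n in (20, 50, 100):
        print(n, simulate_power(n, 0.5), round(analytic_power(n, 0.5), 3))
    # Ten independent tests at alpha = 0.05 versus the theoretical 1 - 0.95**10.
    print(simulate_familywise_error(10), round(1 - 0.95 ** 10, 3))
```

Because the z-test's assumptions hold exactly in this simulated setup, the simulated power should track `analytic_power` closely. With the effect size set to 0, the rejection rate should sit near `alpha`. The appeal of the simulation approach is that the data-generating step can be replaced with any design (unequal groups, skewed outcomes, clustering) for which no closed-form power formula exists.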