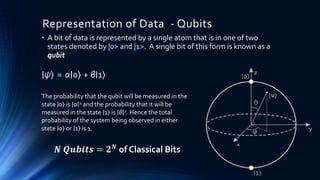

Quantum computing uses the principles of quantum mechanics to perform calculations. Richard Feynman proposed the idea in 1982. Major developments include David Deutsch's description of a universal quantum Turing machine in 1985 and Peter Shor's 1994 algorithm, which factors large integers in polynomial time on a quantum computer. Quantum computers represent data using qubits, which can exist in a superposition of the 0 and 1 states. The state of n qubits is described by 2^n amplitudes, but measuring them returns only n classical bits. Quantum algorithms therefore gain their advantage on specific problems by using interference to make correct answers likely, rather than by reading out exponentially more information. Potential applications include machine learning, genomics, chemistry, materials science, cryptography, and defense.
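To make the qubit claim concrete, here is a minimal state-vector sketch in plain Python. It is our own illustration, not code from any quantum computing library, and the function names `uniform_superposition` and `measure` are hypothetical. It applies a Hadamard gate to each of n qubits, shows that the resulting state holds 2^n amplitudes, and then simulates a measurement, which yields a single n-bit string.

```python
import math
import random


def uniform_superposition(n):
    """Return the 2**n amplitudes of n qubits after a Hadamard gate on each |0>."""
    # Start in the basis state |00...0>: amplitude 1 on index 0, 0 elsewhere.
    state = [0.0] * (2 ** n)
    state[0] = 1.0
    h = 1 / math.sqrt(2)
    for q in range(n):
        # Hadamard on qubit q: H|0> = (|0> + |1>)/sqrt(2), H|1> = (|0> - |1>)/sqrt(2).
        new_state = [0.0] * len(state)
        for i, amp in enumerate(state):
            j = i ^ (1 << q)  # same basis state with bit q flipped
            if (i >> q) & 1 == 0:
                new_state[i] += h * amp  # bit q = 0 keeps a + component
                new_state[j] += h * amp  # and feeds + into bit q = 1
            else:
                new_state[j] += h * amp  # bit q = 1 feeds + into bit q = 0
                new_state[i] -= h * amp  # and keeps a - component
        state = new_state
    return state


def measure(state, rng):
    """Sample one basis index with probability |amplitude|**2 (the Born rule)."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs, k=1)[0]


n = 3
state = uniform_superposition(n)
print(len(state))  # 8 amplitudes describe 3 qubits
outcome = measure(state, random.Random(0))
print(format(outcome, f"0{n}b"))  # prints one 3-bit string
```

A classical simulation like this stores all 2^n amplitudes, so its memory doubles with each added qubit. A quantum computer never exposes those amplitudes directly; each measurement returns only n bits, which is why useful quantum algorithms must arrange interference so that the answer they want is the likely outcome.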