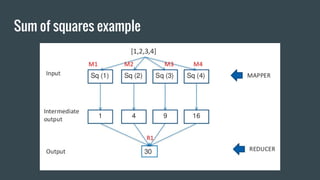

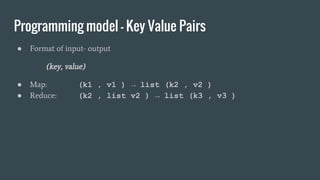

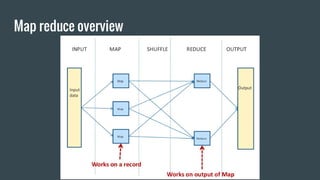

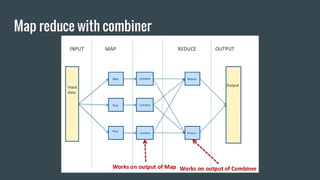

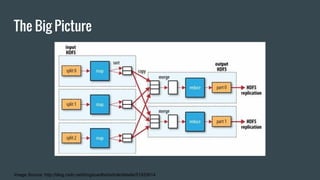

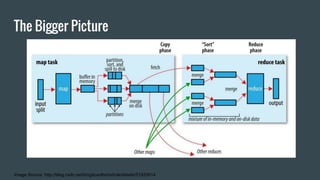

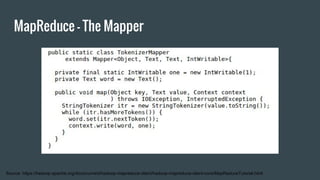

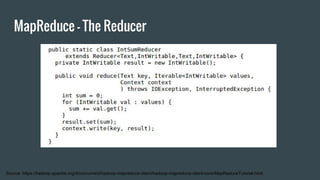

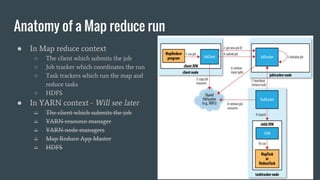

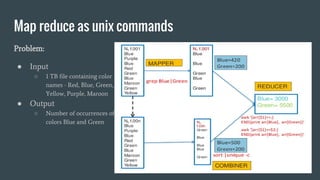

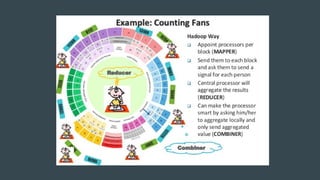

MapReduce is a programming model for processing large datasets in a distributed, parallel manner. It has two main steps: the map step, where input data is converted into intermediate key-value pairs, and the reduce step, where those intermediate pairs are aggregated by key to produce the final results. Hadoop is an open-source software framework that supports distributed processing of large datasets across clusters of computers using MapReduce.
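The two steps can be sketched in a few lines of Python. This is only an in-memory, single-process illustration, assuming a word-count task that the original does not mention. The names `map_step`, `shuffle`, `reduce_step`, and `word_count` are hypothetical and are not part of Hadoop's API. In a real cluster the framework handles the grouping and runs the steps across many machines.

```python
from collections import defaultdict


def map_step(line):
    """Map: turn one input record into intermediate (key, value) pairs."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group intermediate values by key (the framework does this in a real cluster)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_step(key, values):
    """Reduce: aggregate all values that share the same key."""
    return key, sum(values)


def word_count(lines):
    # Apply the map step to every record and collect all intermediate pairs.
    intermediate = [pair for line in lines for pair in map_step(line)]
    # Group by key, then reduce each group to a final (word, count) result.
    grouped = shuffle(intermediate)
    return dict(reduce_step(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["the quick fox", "the lazy dog"]))
```

Here each input line plays the role of one record, and the reduce step sees every count emitted for a given word.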

![Origin: Functional Programming](https://image.slidesharecdn.com/introductiontomapreduce-170120062557/85/Introduction-to-map-reduce-8-320.jpg)

Origin: Functional Programming

- Map: returns a list constructed by applying a function (the first argument) to all items in a list passed as the second argument.
    - `map f [a, b, c] = [f(a), f(b), f(c)]`
    - `map sq [1, 2, 3] = [sq(1), sq(2), sq(3)] = [1, 4, 9]`
- Reduce: returns the result of combining the items of the list passed as the second argument using a function (the first argument). Can be identity (do nothing).
    - `reduce f [a, b, c] = f(a, b, c)`
    - `reduce sum [1, 4, 9] = sum(1, sum(4, sum(9, sum(NULL)))) = 14`
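The same map and reduce examples can be tried with Python's built-in `map` and `functools.reduce`. This is a small sketch rather than anything from the slides; the helper `sq` and the variable names are chosen here for illustration.

```python
from functools import reduce


def sq(x):
    """Square a number, matching the sq function in the example above."""
    return x * x


# map applies sq to every item and yields a new sequence of the same length.
squares = list(map(sq, [1, 2, 3]))

# reduce folds the list into a single value; 0 is the starting accumulator,
# which plays the role of the empty sum.
total = reduce(lambda acc, x: acc + x, squares, 0)

if __name__ == "__main__":
    print(squares)
    print(total)
```

Note that `functools.reduce` folds from the left, as ((0 + 1) + 4) + 9, while the notation above nests from the right. For an associative operation such as addition, both orders give the same result.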