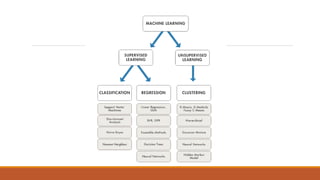

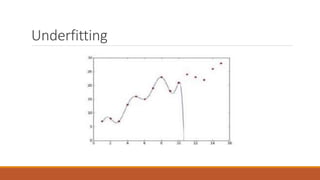

This document introduces machine learning and data science. It covers key concepts such as supervised versus unsupervised learning, classification algorithms, and overfitting and underfitting. It also addresses practical challenges such as poor-quality or insufficient training data, and presents Python and MATLAB as suitable software for machine learning projects.
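To make the overfitting and underfitting idea concrete, here is a minimal Python sketch. It is not taken from the document itself: the toy dataset, the choice of a k-nearest-neighbour classifier, and the helper names `make_data`, `knn_predict`, and `accuracy` are all assumptions made for illustration. The sketch trains on noisy labelled points (supervised learning) and compares accuracy on the training data with accuracy on held-out data for three values of `k`.

```python
import random
from collections import Counter


def make_data(n, rng, noise=0.2):
    """Hypothetical toy data: the label is 1 when x > 0.5, with some labels flipped."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < noise:  # simulate poor-quality (mislabelled) examples
            label = 1 - label
        data.append((x, label))
    return data


def knn_predict(train, x, k):
    """Predict the label of x by majority vote among its k nearest training points."""
    neighbours = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


def accuracy(train, data, k):
    """Fraction of points in `data` that a k-NN model built on `train` labels correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in data)
    return hits / len(data)


rng = random.Random(0)
train = make_data(101, rng)   # odd size, so a vote over all points cannot tie
test = make_data(1000, rng)   # held-out data the model never sees during training

for k in (1, 15, len(train)):
    print(f"k={k:3d}  train accuracy={accuracy(train, train, k):.2f}  "
          f"test accuracy={accuracy(train, test, k):.2f}")
```

With `k=1` the model memorises the training set, noise included, so it scores perfectly on the training data but usually worse on the held-out data, which is the signature of overfitting. With `k` equal to the training set size, every prediction is simply the majority class, so the model ignores the input entirely and underfits. An intermediate `k` tends to sit between the two, although the exact numbers depend on the random seed and the noise level assumed here.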